Dr. Freddy Lecue

Dr. Freddy Lecue (PhD 2008, Habilitation 2015) is AI Research Director at J.P. Morgan in New York. He is also a research associate at Inria, in the WIMMICS team at Sophia Antipolis, France.

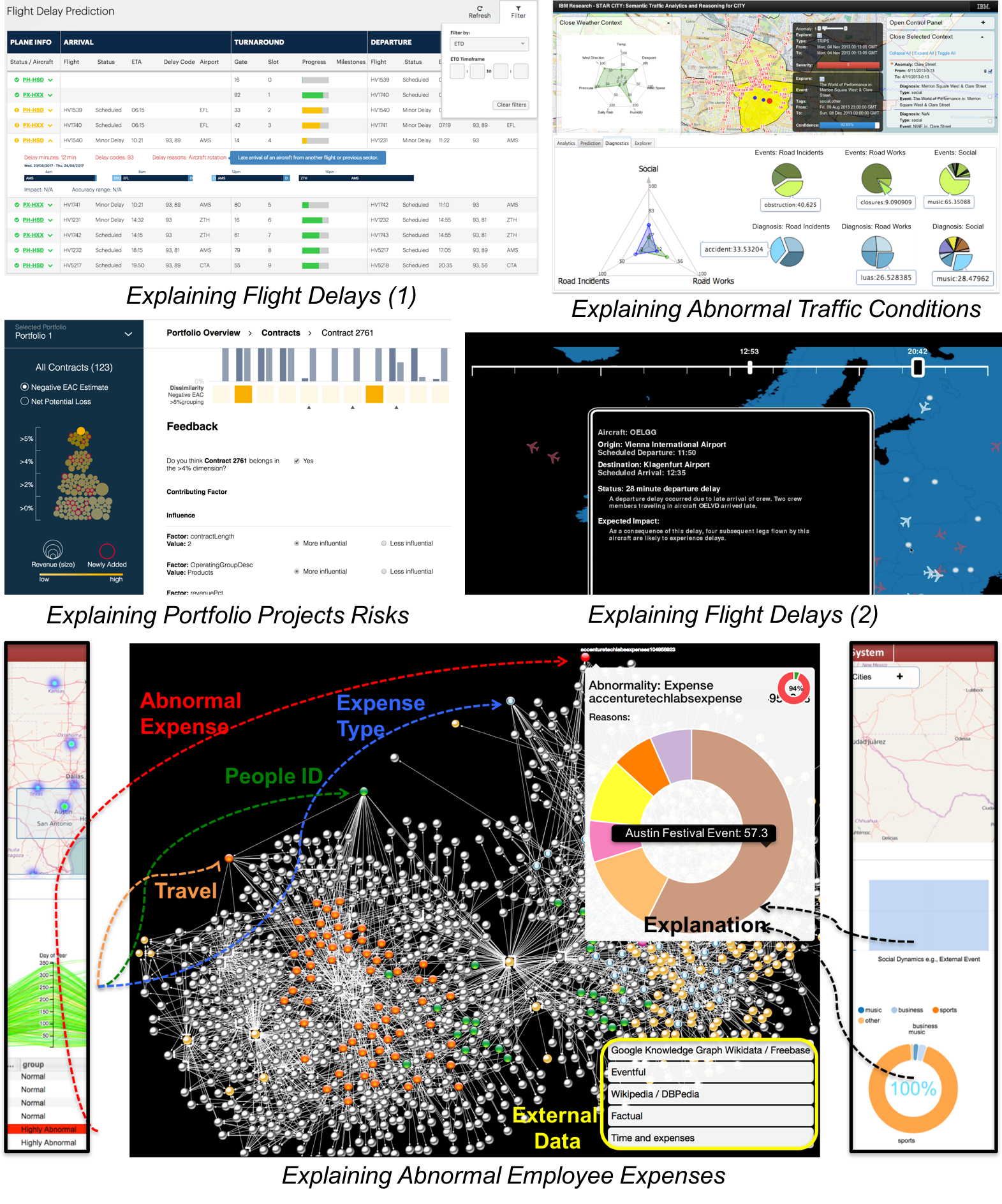

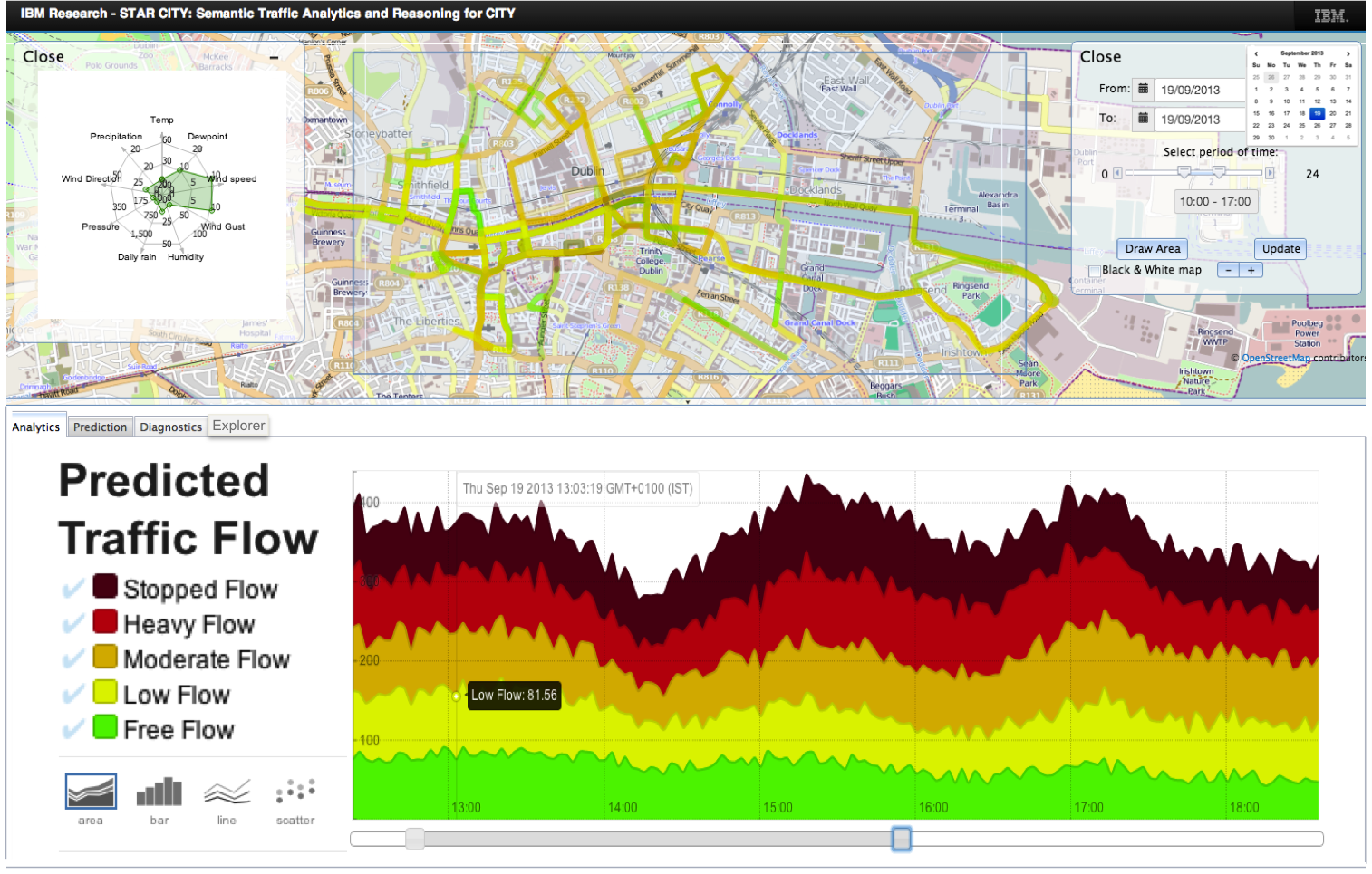

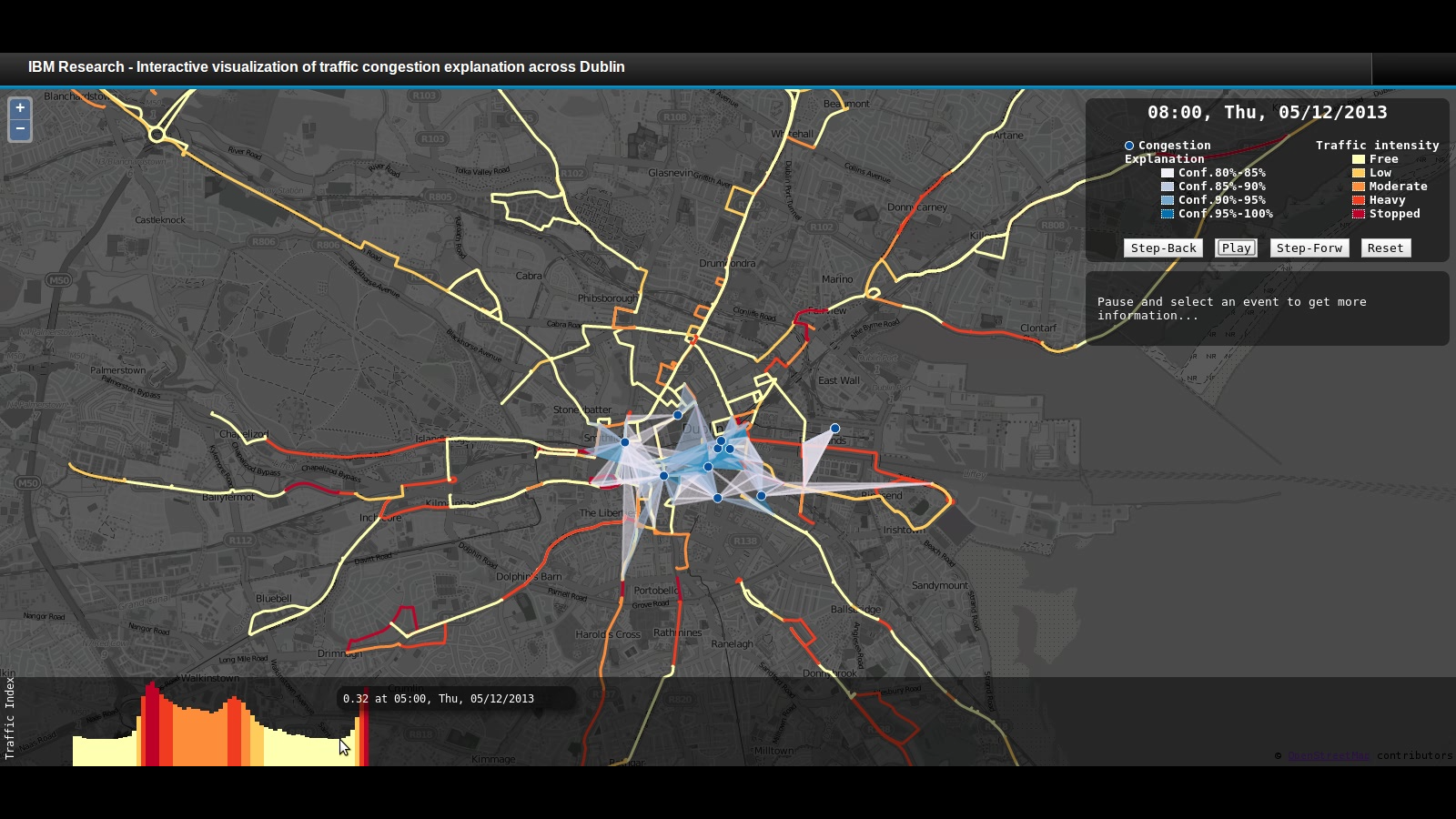

His research lies at the frontier of intelligent systems, i.e., systems that combine learning and reasoning. He has a strong interest in Explainable AI, i.e., AI systems, models, and results that can be explained to human and business experts (cf. his recent research and industry presentations). In particular, he is interested in Cognitive Computing, Knowledge Representation and Reasoning, Machine Learning (particularly Deep Learning), Large-Scale Processing, Software Engineering, Service-Oriented Computing, Information Extraction and Integration, Recommendation Systems, and Cloud and Mobile Computing.