|

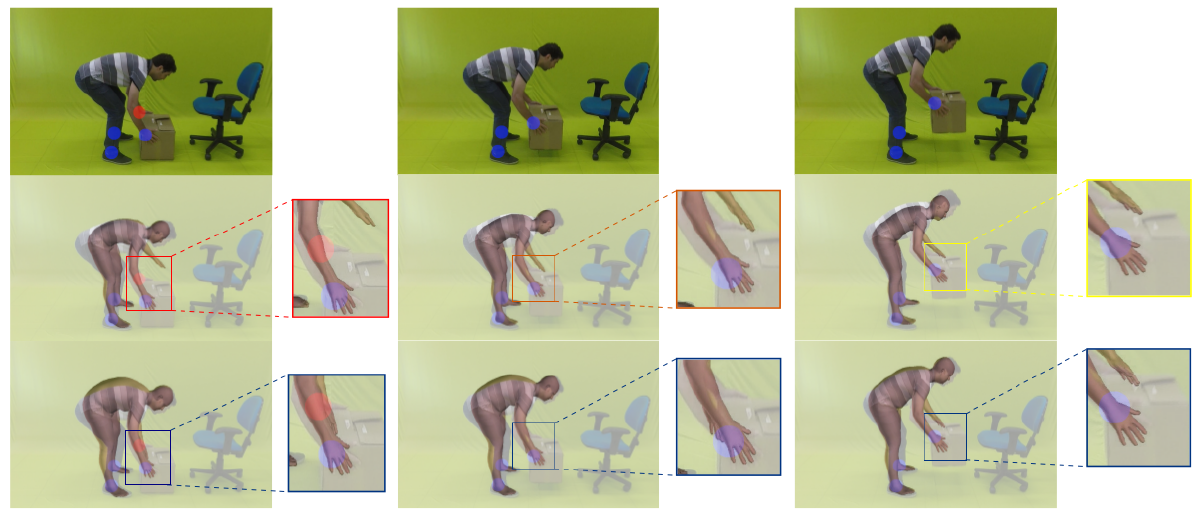

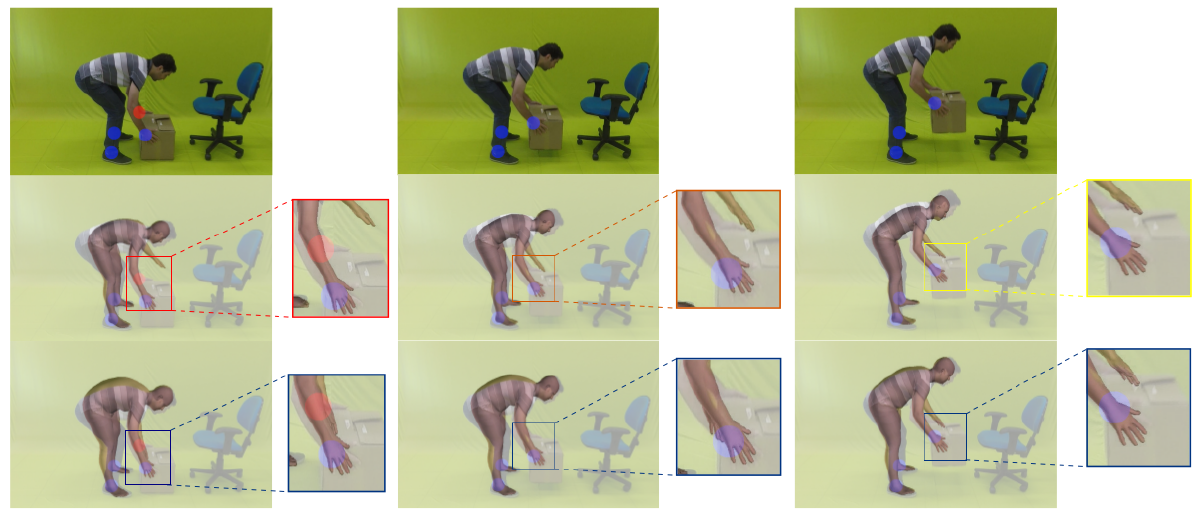

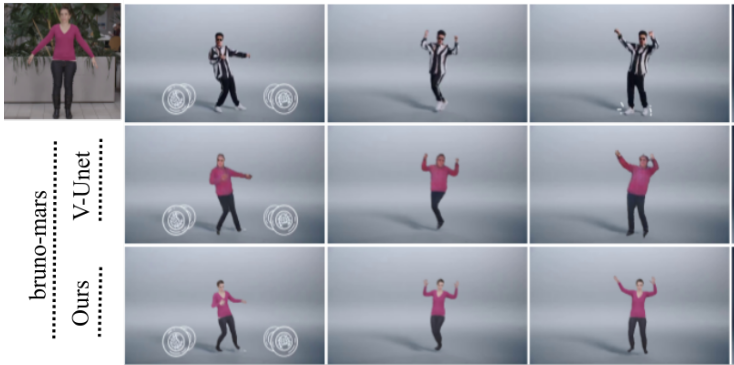

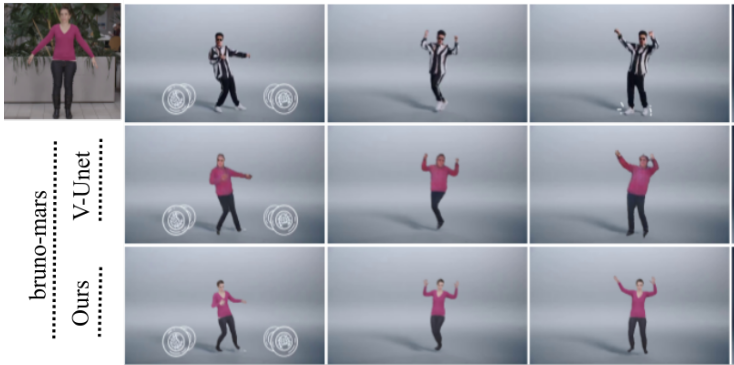

In this paper, we propose a unifying formulation for transferring appearance and retargeting human motion from monocular videos. Our method is composed of four main components and synthesizes new videos of people in a context different from the one in which they were initially recorded. Unlike recent human neural rendering methods, our approach jointly takes into account body shape, appearance, and motion constraints in the transfer. |

|

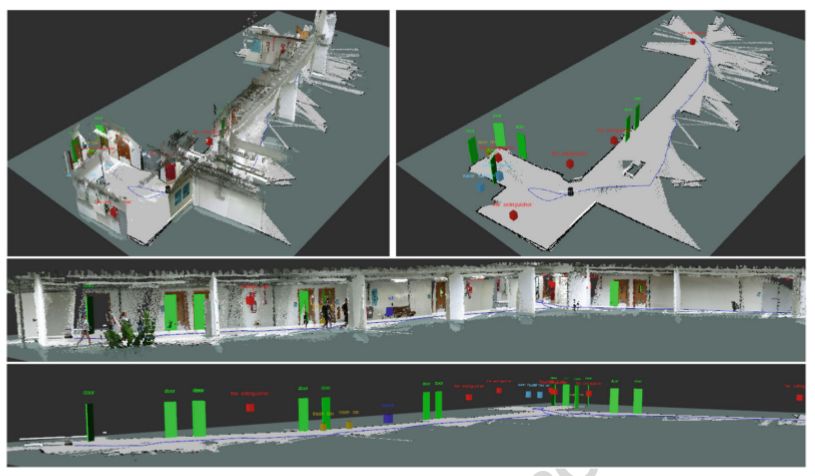

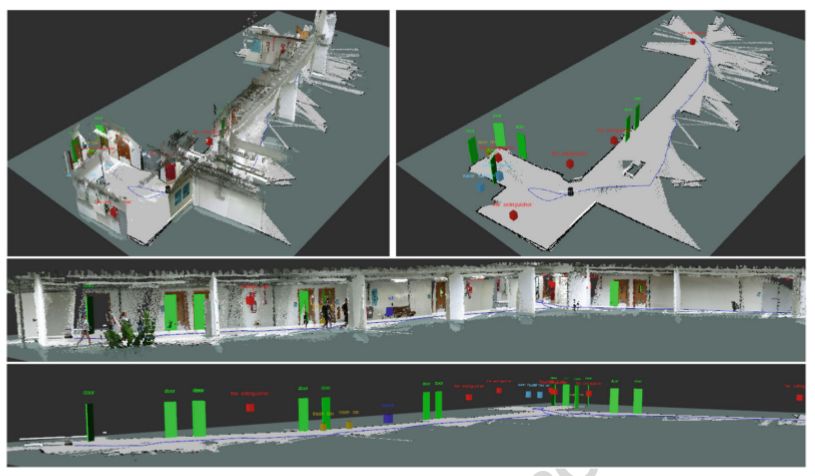

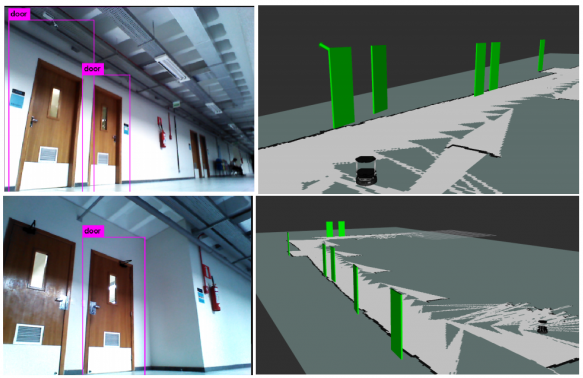

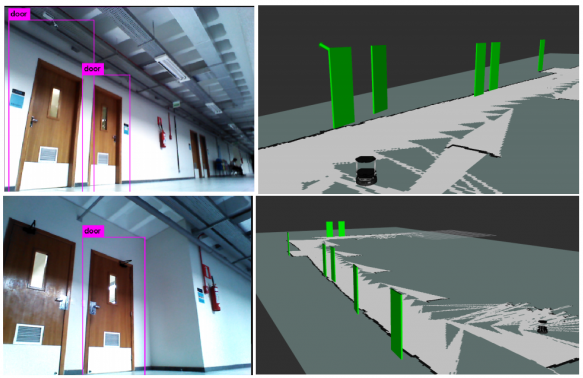

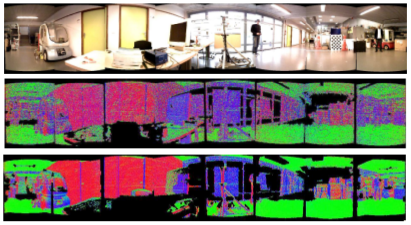

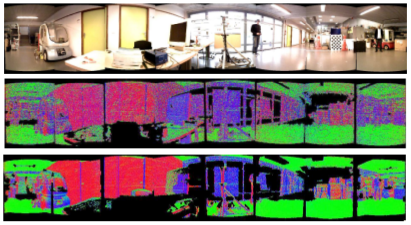

This work addresses the problem of building augmented metric representations of scenes with semantic information from RGB-D images. We propose a complete framework to create an enhanced map representation of the environment with object-level information, to be used in applications such as human-robot interaction, assistive robotics, visual navigation, or manipulation tasks. Our formulation combines a CNN-based object detector (YOLO) with a 3D model-based segmentation technique to perform instance semantic segmentation and to localize, identify, and track different classes of objects in the scene. |

|

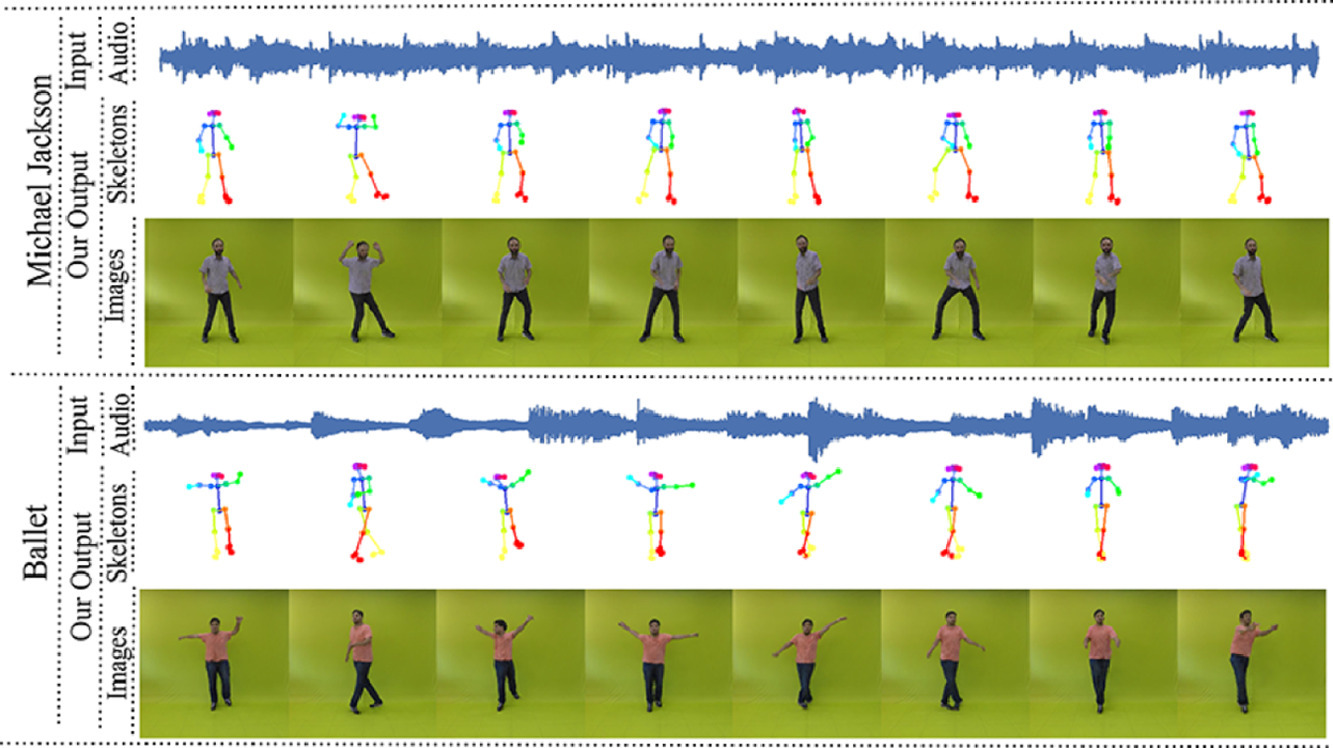

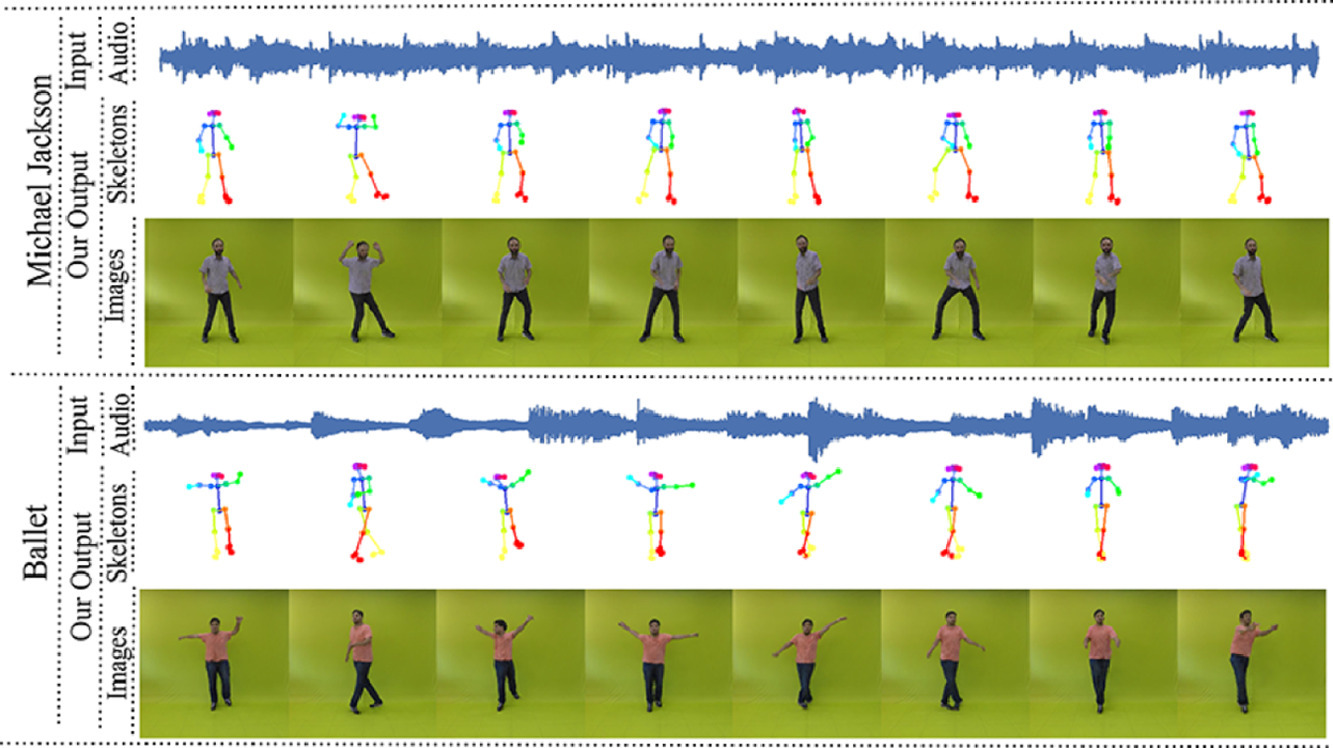

In this project, we design a novel human motion generation method, based on graph convolutional networks (GCN), to tackle the problem of automatic dance generation from audio information. Our method uses an adversarial learning scheme conditioned on music audio to generate natural dance motions that preserve the key movements of different music styles. The automatically generated motions for different dance styles (such as "ballet" and "salsa") are used to animate virtual human avatars. |

|

In this paper, we propose a unifying formulation for transferring appearance and retargeting human motion from monocular videos that considers all of these aspects. Our method is composed of four main components and synthesizes new videos of people in a context different from the one in which they were initially recorded. Unlike recent appearance transfer methods, our approach takes into account body shape, appearance, and motion constraints. |

|

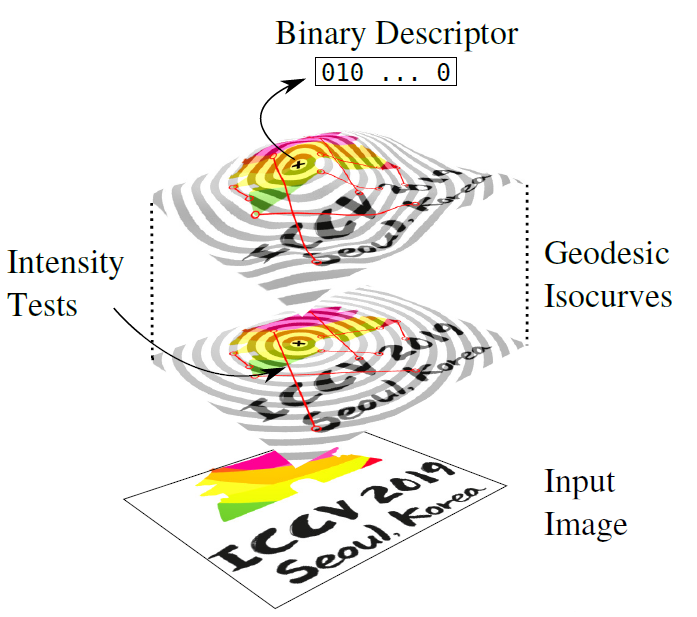

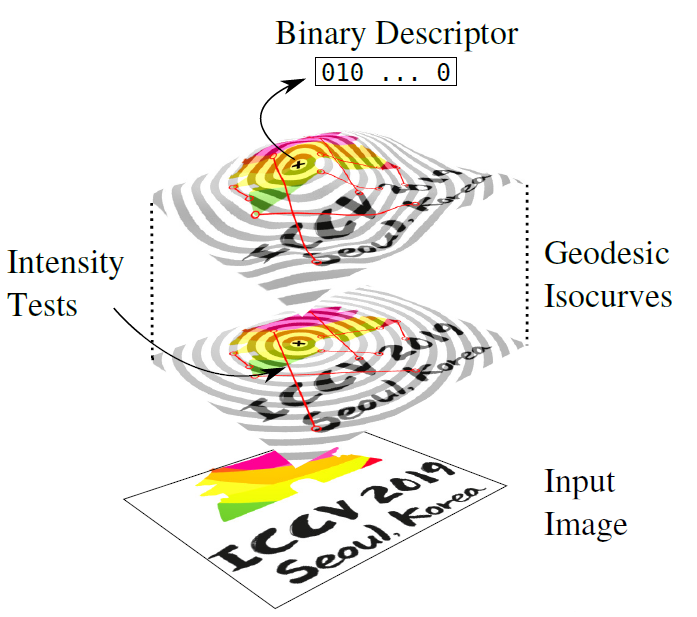

We introduce a novel binary RGB-D descriptor invariant to isometric deformations. Our method uses geodesic isocurves on smooth textured manifolds. It combines appearance and geometric information from RGB-D images to tackle non-rigid transformations. We use our descriptor to track multiple textured depth maps and demonstrate that it produces reliable feature descriptors even in the presence of strong non-rigid deformations and depth noise. |

|

In this work, we propose an open framework for building hybrid maps, i.e., maps that combine environment structure (metric map) and environment semantics (object classes) to support autonomous robot perception and navigation tasks. We detect and model objects in the scene from RGB-D images, using convolutional neural networks to extract a semantic layer of the different objects in the scene. Our final environment representation is a metric map augmented with the semantic information of the detected objects. |

|

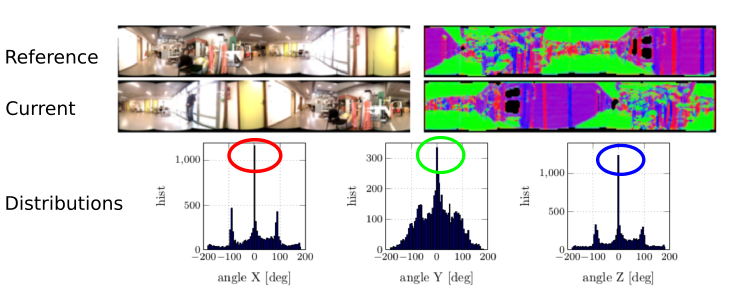

This paper describes a registration technique that uses the normal vectors of depth images. The registration is computed in a decoupled, non-iterative way and has a large convergence domain. This formulation can be used within an initialization framework to improve the convergence of direct registration methods. |

|

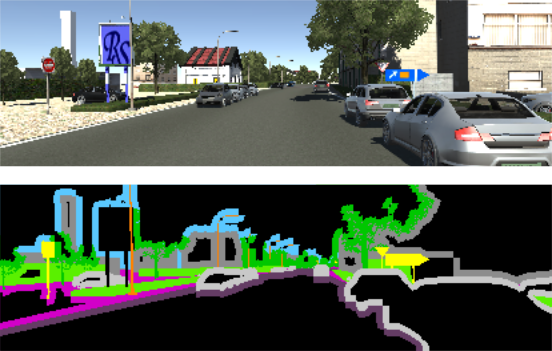

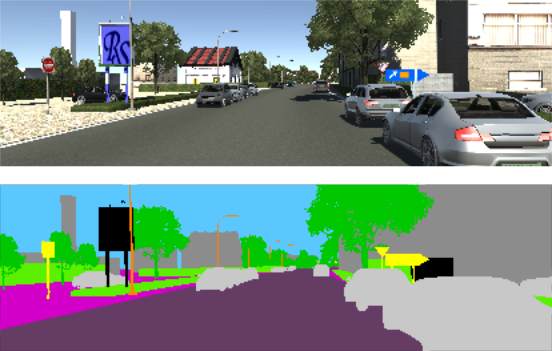

In this paper, we propose a new metric to evaluate semantic segmentation. This new metric accounts for both global and contour accuracy in a simple formulation to overcome the weaknesses of the most commonly used metrics. |

|

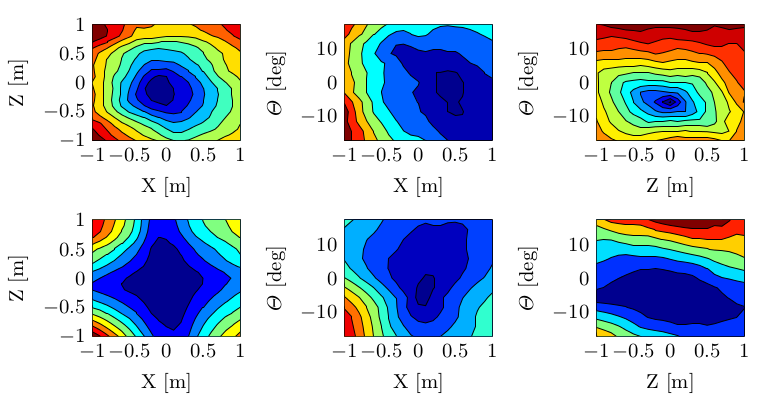

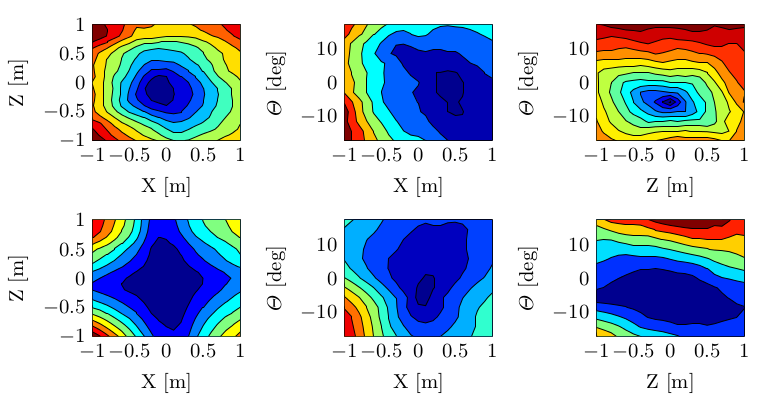

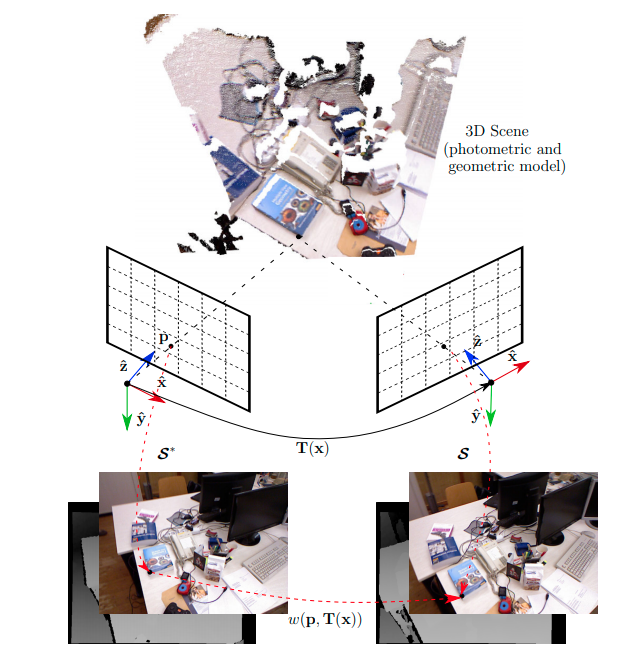

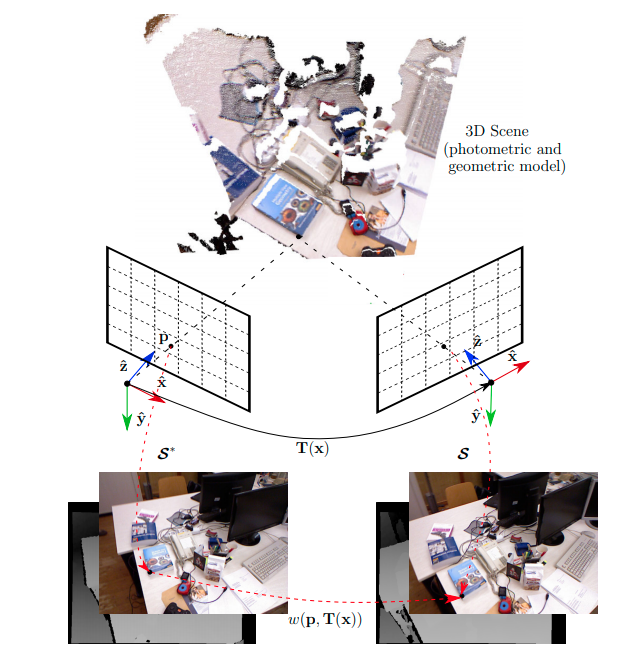

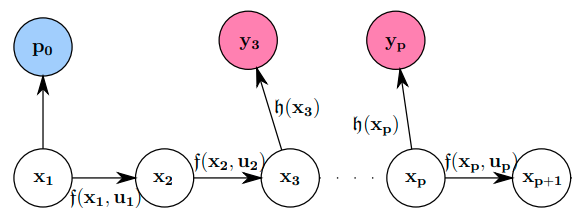

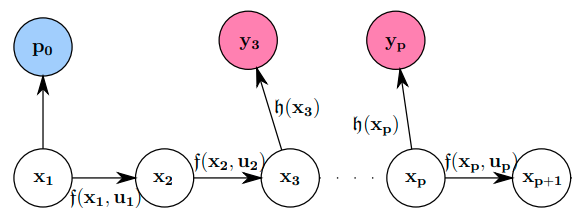

This work addresses the challenging cases of large motions in direct image registration. We explore the complementary aspects of a classical direct VO and direct point-to-plane strategies, in terms of convergence, by using a modified cost function, where the geometric term prevails in the first coarse iterations, while the intensity data term dominates in the finer increments. |
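The coarse-to-fine weighting described above can be illustrated with a minimal sketch. The linear schedule, the function names `term_weights` and `hybrid_cost`, and the sum-of-squares form are assumptions made for illustration; the paper's actual cost function and weighting are not given here.

```python
import numpy as np

def term_weights(level, num_levels):
    """Return (w_geometric, w_photometric) for a pyramid level.

    Assumption: level num_levels - 1 is the coarsest, level 0 the finest,
    and the weights change linearly between them (hypothetical schedule).
    """
    if num_levels < 2:
        return 0.5, 0.5
    w_geo = level / (num_levels - 1)
    return w_geo, 1.0 - w_geo

def hybrid_cost(r_photo, r_geo, level, num_levels):
    """Weighted sum of squared intensity and point-to-plane residuals."""
    w_geo, w_photo = term_weights(level, num_levels)
    photo_term = float(np.sum(np.asarray(r_photo) ** 2))
    geo_term = float(np.sum(np.asarray(r_geo) ** 2))
    return w_photo * photo_term + w_geo * geo_term

# Same residuals, evaluated at the coarsest and the finest level.
r_photo = np.array([1.0, 2.0])   # intensity residuals
r_geo = np.array([3.0])          # point-to-plane residuals
coarse = hybrid_cost(r_photo, r_geo, level=3, num_levels=4)
fine = hybrid_cost(r_photo, r_geo, level=0, num_levels=4)
```

With this schedule the geometric residuals alone drive the coarsest level and the intensity residuals alone drive the finest one; a softer schedule would keep both terms active throughout.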

|

In this paper, we show how a more stable and robust direct registration task affects the density/sparsity of the representation (the number of keyframes) in outdoor scene mapping. This allows storing a sparser local representation while maintaining a large-scale topological structure that is accurate enough to ensure the convergence of a task in the neighbourhood of the scene model. |

|

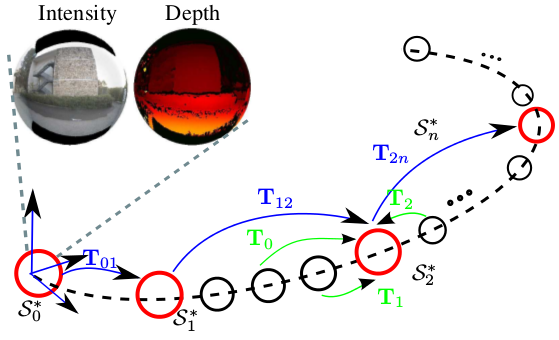

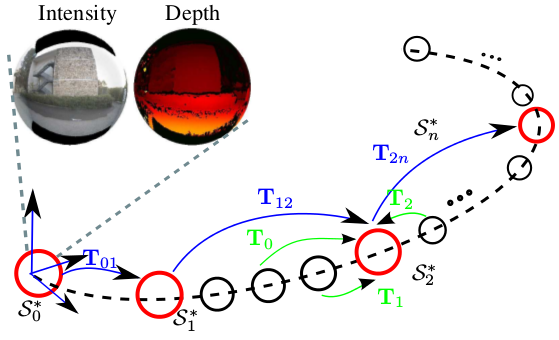

This work jointly exploits intensity and depth information to generate more precise keyframes for visual odometry or image rendering. The core of the paper is a depth regularization that considers both geometric and photometric image constraints (planar and superpixel segmentation). |

|

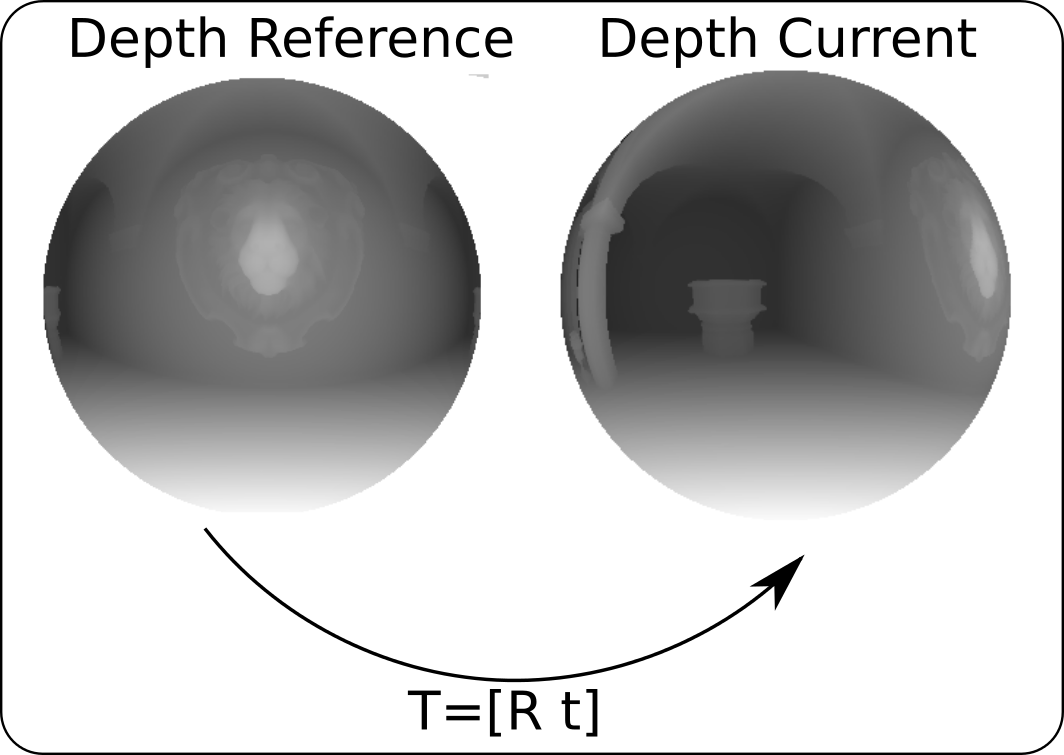

In this paper, we propose an ego-centric spherical representation to efficiently store a full RGB-D model of a 3D environment. To that end, we use the notion of a "keyframe" to select the most informative frames, along with the propagation/correction of the depth image by representing the uncertainties of the geometry and the pose. |

|

This article presents an autonomous navigation strategy for cultivars (crops) planted in lines. The strategy relies on a low-cost sensing solution: a single 2D sweeping LiDAR sensor. |

|

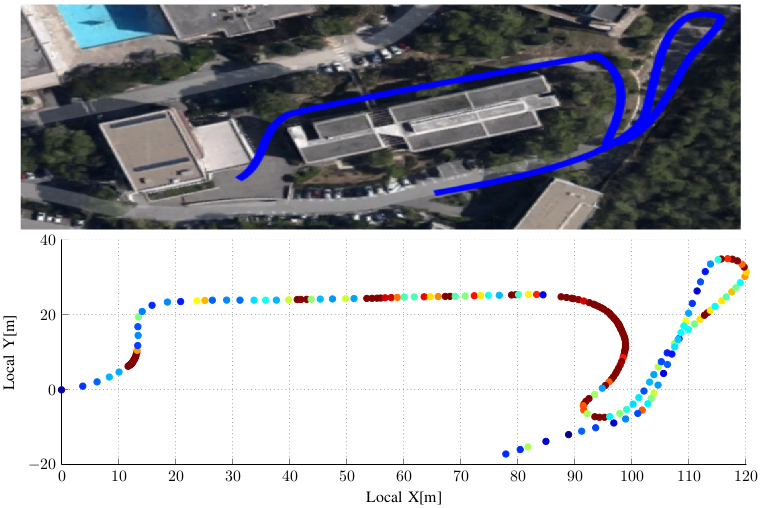

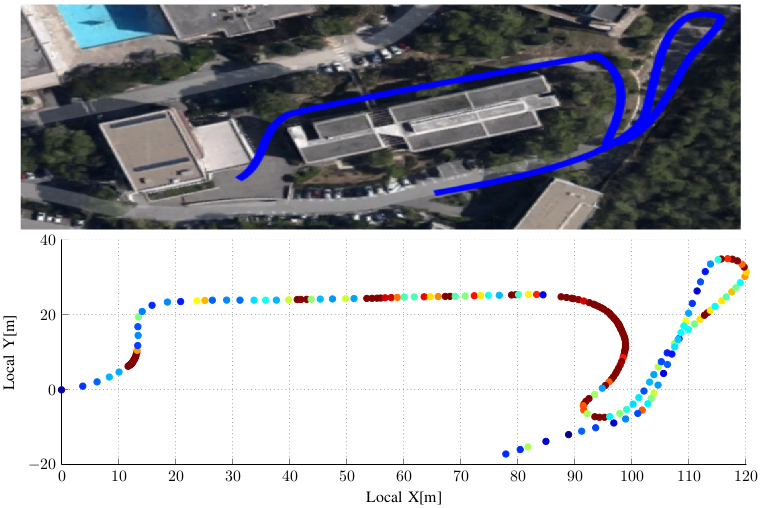

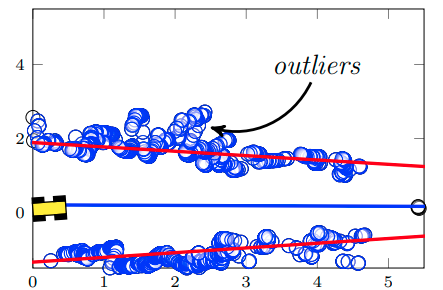

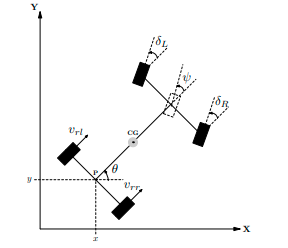

This paper proposes a new 2D localization methodology that optimizes, in a least squares sense, the information gathered from multiple encoders (four wheels and steering) mounted on an outdoor robotic vehicle. The optimization strategy is designed to reduce the drift of differential odometry. The model is evaluated with simulated data and with real data acquired with an electric outdoor robotic vehicle of the VERO project. |
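As a rough illustration of fusing redundant encoders in a least squares sense, the sketch below estimates the forward speed `v` and yaw rate `omega` of a vehicle from four wheel speeds and one steering angle. The kinematic model is a simplifying assumption (each wheel speed is approximated as `v - omega * y_i`, and steering follows a bicycle model), and the function name and parameters are hypothetical; this is not the formulation used in the paper.

```python
import numpy as np

def fuse_encoders(wheel_speeds, wheel_y, steer_angle, wheelbase):
    """Least-squares estimate of (v, omega) from redundant encoders.

    wheel_speeds: measured linear speed of each wheel [m/s]
    wheel_y:      lateral offset of each wheel from the centerline [m]
    steer_angle:  measured steering angle [rad]
    wheelbase:    distance between front and rear axles [m]
    """
    wheel_speeds = np.asarray(wheel_speeds, dtype=float)
    wheel_y = np.asarray(wheel_y, dtype=float)
    # One row per wheel (assumed model): v - omega * y_i = speed_i
    a_wheels = np.column_stack([np.ones_like(wheel_y), -wheel_y])
    # Steering row (bicycle model): v * tan(delta) / L - omega = 0
    a_steer = np.array([[np.tan(steer_angle) / wheelbase, -1.0]])
    a = np.vstack([a_wheels, a_steer])
    b = np.concatenate([wheel_speeds, [0.0]])
    (v, omega), *_ = np.linalg.lstsq(a, b, rcond=None)
    return float(v), float(omega)

# Noise-free measurements generated from v = 2.0 m/s, omega = 0.5 rad/s.
v_est, omega_est = fuse_encoders(
    wheel_speeds=[1.75, 2.25, 1.75, 2.25],
    wheel_y=[0.5, -0.5, 0.5, -0.5],
    steer_angle=np.arctan(0.5),
    wheelbase=2.0,
)
```

Because there are five equations for two unknowns, encoder noise is averaged out rather than integrated directly, which is the general motivation for using redundant measurements against drift.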

|

This paper proposes a localization methodology based on GPS and odometry fusion. An important aspect is a new odometry formulation, which results from a least squares optimization of the information gathered from multiple encoders (four wheels and steering) of an outdoor robotic vehicle. The sensor fusion is evaluated using both EKF and UKF filters on real experimental data acquired with the electric robotic vehicle of the VERO project. |
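For readers unfamiliar with this kind of fusion, here is a minimal EKF sketch that predicts a planar pose from odometry and corrects it with a GPS position fix. The state layout `(x, y, theta)`, the unicycle motion model, the noise values, and the function names are all assumptions chosen for illustration, not the filter design evaluated in the paper.

```python
import numpy as np

def ekf_predict(x, P, v, omega, dt, Q):
    """Propagate pose (x, y, theta) with odometry speed v and yaw rate omega."""
    px, py, th = x
    x_new = np.array([px + v * dt * np.cos(th),
                      py + v * dt * np.sin(th),
                      th + omega * dt])
    # Jacobian of the motion model with respect to the state
    F = np.array([[1.0, 0.0, -v * dt * np.sin(th)],
                  [0.0, 1.0,  v * dt * np.cos(th)],
                  [0.0, 0.0,  1.0]])
    return x_new, F @ P @ F.T + Q

def ekf_update_gps(x, P, z, R):
    """Correct the pose with a GPS position measurement z = (x, y)."""
    H = np.array([[1.0, 0.0, 0.0],
                  [0.0, 1.0, 0.0]])
    innovation = np.asarray(z, dtype=float) - H @ x
    S = H @ P @ H.T + R
    K = P @ H.T @ np.linalg.inv(S)
    x_new = x + K @ innovation
    P_new = (np.eye(3) - K @ H) @ P
    return x_new, P_new

# One predict/update cycle with hypothetical noise levels.
x0, P0 = np.zeros(3), np.eye(3)
Q, R = 0.01 * np.eye(3), 0.01 * np.eye(2)
x_pred, P_pred = ekf_predict(x0, P0, v=1.0, omega=0.0, dt=1.0, Q=Q)
x_upd, P_upd = ekf_update_gps(x_pred, P_pred, z=[1.2, 0.0], R=R)
```

The corrected position lies between the odometry prediction and the GPS fix, weighted by their covariances. A UKF would replace the Jacobian `F` with sigma-point propagation through the same motion model.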

|

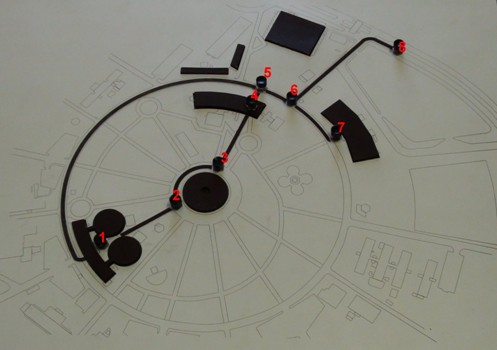

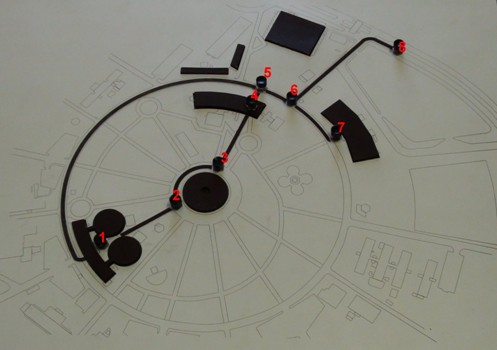

This paper describes the design and construction of sound-tactile mock-up models to support exploration by blind people. The models represent indoor and outdoor human-made spaces on the main campus of the University of Campinas.