Psychiatric Disorders in Clinical Interviews

2019–present

INRIA

1 Paper

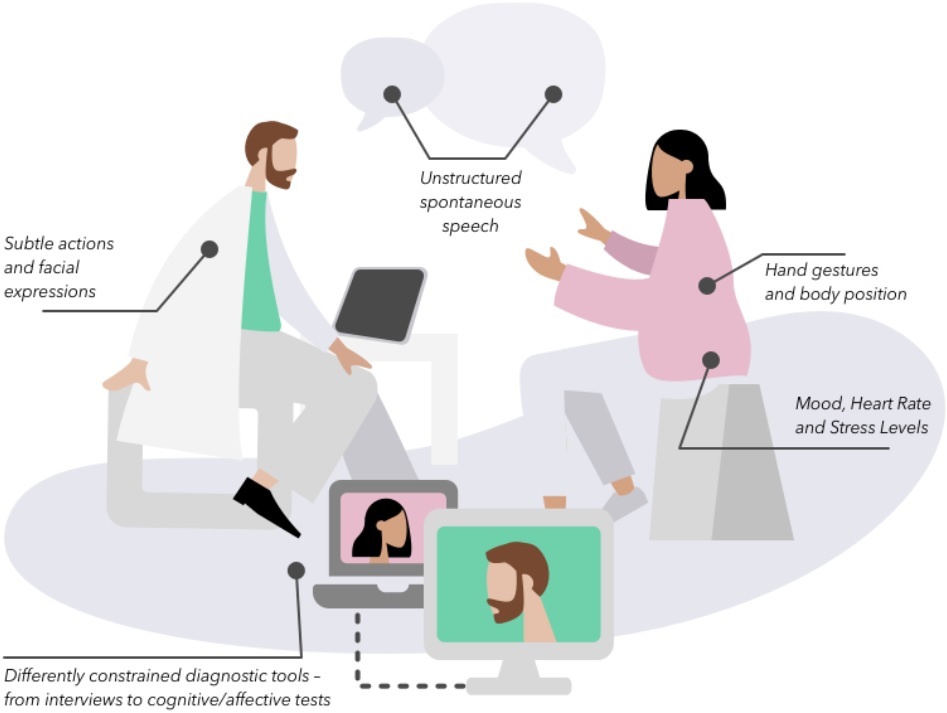

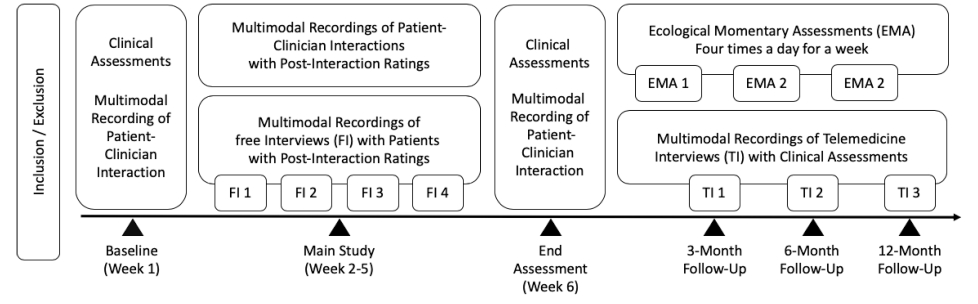

Identifying objective and reliable markers to tailor diagnosis and treatment of psychiatric patients remains a challenge, as conditions like major depression, bipolar disorder, or schizophrenia are qualified by complex behavior observations or subjective self-reports instead of easily measurable somatic features. Recent progress in computer vision, speech processing and machine learning has enabled detailed and objective characterization of human behavior in social interactions. However, the application of these technologies to personalized psychiatry is limited due to the lack of sufficiently large corpora that combine multimodal measurements with longitudinal assessments of patients covering more than a single disorder. To close this gap, we introduce Mephesto, a multi-centre, multi-disorder longitudinal corpus creation effort designed to develop and validate novel multimodal markers for psychiatric conditions. Mephesto consists of multimodal audio, video, and physiological recordings as well as clinical assessments of psychiatric patients covering a six-week main study period as well as several follow-up recordings spread across twelve months.

Projects MEPHESTO and GAIN

We outline the rationale and study protocol and introduce four cardinal use cases that build the foundation of a new state of the art in personalized treatment strategies for psychiatric disorders. The overall study design consists of two phases. During the main study phase, interactions between patients and clinicians are recorded with video, audio, and physiological sensors. In the subsequent follow-up phase, recordings and ecological momentary assessments are collected using a videoconferencing system.

Diagnoses include schizophrenia, depression, and bipolar disorder. The dataset does not include control subjects. Each patient contributes 1 to 8 videos, roughly 5.5 on average. In addition to video, the recordings include the patients' and clinicians' biosignals: electrodermal activity (EDA), blood volume pulse (BVP), inter-beat interval (IBI), heart rate, temperature, and accelerometer data. Videos are recorded with Azure Kinect and biosignals with Empatica wristbands. Participants do not wear face masks while being recorded; instead, a large transparent plexiglass partition separates them to minimize the transmission of COVID-19. The dataset is confidential, but many patients consented to the publication of their raw or anonymized data for research purposes. The figure below shows the recording scene with two clinicians on opposite sides of an office desk, wearing Empatica wristbands and separated by the plexiglass partition, which lies outside the cameras' fields of view.

Screenshot of an example recording with a clinician and a patient (here demonstrated by another clinician):

Extracted features can be visualized using augmentations to the recorded video:

We collaborate closely with CHU de Nice, Institute Claude Pompidou, the German Center for Artificial Intelligence, and Tbilisi University of Technology. The work has been published in one journal paper.

© Michal Balazia. All Rights Reserved.