- Introduction

- Non 0-dimensional system

- General purpose solving algorithm

- Mathematical background

- The 3B method

- Simplification procedure

- Implementation

- Examples and Troubleshooting

- General purpose solving algorithm with Jacobian

- General purpose solving algorithm with Jacobian and Hessian

- Stopping the general solving procedures

- Ridder method for solving one equation

- Brent method for solving one equation

- Newton method for solving systems of equations

- Krawczyk method for solving systems of equations

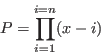

- Solving univariate polynomial with interval analysis

- Solving univariate polynomial numerically

- Solving trigonometric equation

- Solving systems with linear and non-linear terms: the simplex method

- Solving systems with determinants

- Solving systems of distance equations

- Filtering a system of equations

Solving with Interval Analysis

Introduction

The purpose of this chapter is to describe the methods based on interval analysis available in the ALIAS library for the determination of the real roots of systems of equations and inequalities.

Interval Analysis

Mathematical background

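This section is freely inspired by the book [5]. An interval number is a real, closed interval $[\underline{x},\overline{x}]$. Arithmetic operations are defined on interval numbers; for example, for two intervals $X=[\underline{x},\overline{x}]$ and $Y=[\underline{y},\overline{y}]$:

$$
\begin{aligned}
X+Y &= [\underline{x}+\underline{y},\ \overline{x}+\overline{y}]\\
X-Y &= [\underline{x}-\overline{y},\ \overline{x}-\underline{y}]
\end{aligned}
$$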
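The two rules above, together with the usual rule for multiplication (smallest and largest of the four products of bounds), can be sketched in C++ with a small hypothetical `Interval` struct. This is an illustration only, not the BIAS/Profil `INTERVAL` type: it uses ordinary round-to-nearest floating point and therefore does not guarantee enclosure under round-off.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical closed interval [lo, hi]; bounds are NOT rounded outward,
// unlike a real interval package such as BIAS/Profil.
struct Interval {
    double lo;
    double hi;
};

// X + Y = [lo(X) + lo(Y), hi(X) + hi(Y)]
Interval operator+(Interval x, Interval y) {
    return {x.lo + y.lo, x.hi + y.hi};
}

// X - Y = [lo(X) - hi(Y), hi(X) - lo(Y)]
Interval operator-(Interval x, Interval y) {
    return {x.lo - y.hi, x.hi - y.lo};
}

// X * Y: smallest and largest of the four products of bounds
Interval operator*(Interval x, Interval y) {
    const double p[4] = {x.lo * y.lo, x.lo * y.hi, x.hi * y.lo, x.hi * y.hi};
    return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}
```

For $X=[1,2]$ and $Y=[3,5]$ this gives $X+Y=[4,7]$, $X-Y=[-4,-1]$ and $X*Y=[3,10]$.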

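An interval function is an interval-valued function of one or more interval arguments. An interval function $F$ is said to be inclusion monotonic if $X_i \subseteq Y_i$ for $i=1,\dots,n$ implies $F(X_1,\dots,X_n) \subseteq F(Y_1,\dots,Y_n)$.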

A fundamental theorem is that any rational interval function evaluated with a fixed sequence of operations involving only addition, subtraction, multiplication and division is inclusion monotonic. In practice this means that the interval evaluation of a function gives bounds (very often overestimated) for the value of the function: for any specific values of the unknowns within their ranges, the value of the function will be included in the interval evaluation of the function. A very interesting point is that this statement remains true even when numerical round-off errors are taken into account. For example the number 1/3, which has no exact representation in a computer, will be represented by an interval whose bounds are the largest floating point number less than 1/3 and the smallest floating point number greater than 1/3, in such a way that the multiplication of this interval by 3 will include the value 1. A straightforward consequence is that if the interval evaluation of a function does not include 0, then the function has no root within the ranges of the unknowns.
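The enclosure of 1/3 and the root-exclusion test can be illustrated with a minimal sketch. Here outward rounding is emulated by widening each computed bound by one unit in the last place with `std::nextafter`, a simple conservative substitute (assumed for this illustration) for the directed rounding used by interval packages; `Interval`, `widen`, `contains` and `f` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <limits>

struct Interval {
    double lo;
    double hi;
};

const double kInf = std::numeric_limits<double>::infinity();

// Move the lower bound down and the upper bound up by one ulp, so that the
// exact real results of the rounded operations stay inside the interval.
Interval widen(double lo, double hi) {
    return {std::nextafter(lo, -kInf), std::nextafter(hi, kInf)};
}

Interval operator*(Interval x, Interval y) {
    const double a = x.lo * y.lo, b = x.lo * y.hi, c = x.hi * y.lo, d = x.hi * y.hi;
    return widen(std::min({a, b, c, d}), std::max({a, b, c, d}));
}

Interval operator-(Interval x, double k) {
    return widen(x.lo - k, x.hi - k);
}

bool contains(Interval x, double v) {
    return x.lo <= v && v <= x.hi;
}

// Interval evaluation of f(x) = x^2 - 2
Interval f(Interval x) {
    return x * x - 2.0;
}
```

`widen(1.0 / 3.0, 1.0 / 3.0)` is a thin interval around 1/3 whose product with $[3,3]$ contains 1. Since `f(Interval{2.0, 3.0})` is roughly $[2,7]$ and excludes 0, $x^2-2$ has no root in $[2,3]$, whereas `f(Interval{1.0, 2.0})` contains 0 and that range must be examined further.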

In all the following sections an interval for the variable $x$ will be denoted by $[\underline{x},\overline{x}]$. The width or diameter of an interval $[\underline{x},\overline{x}]$ is the positive difference $\overline{x}-\underline{x}$.

The mid-point of an interval is defined as $(\underline{x}+\overline{x})/2$.

A box is a set of intervals. The width of a box is the largest width of the intervals in the set, and the center of the box is the vector formed by the mid-points of all the intervals in the set.
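These two definitions translate directly into code. The sketch below represents a box as a `std::vector` of a hypothetical `Interval` struct (an assumption for illustration; ALIAS itself uses the BIAS/Profil `INTERVAL_VECTOR` type).

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;  // hypothetical alias for a box

double width(const Interval& x) { return x.hi - x.lo; }
double midpoint(const Interval& x) { return 0.5 * (x.lo + x.hi); }

// Width of a box: largest width of its intervals
double width(const Box& b) {
    double w = 0.0;
    for (const Interval& x : b) w = std::max(w, width(x));
    return w;
}

// Center of a box: vector of the mid-points of its intervals
std::vector<double> center(const Box& b) {
    std::vector<double> c;
    c.reserve(b.size());
    for (const Interval& x : b) c.push_back(midpoint(x));
    return c;
}
```

For the box $\{[0,1],[2,6]\}$ the width is 4 and the center is $(0.5, 4)$.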

Implementation

All the procedures described in the following sections use the free interval analysis package BIAS/Profil, in which the basic operations of interval analysis are implemented.

This package uses a fixed-precision arithmetic with an accuracy of roughly $10^{-16}$.

Different types of data structure are implemented in this package. For fixed value numbers:

BOOL, BOOL_VECTOR, BOOL_MATRIX, INT, INTEGER_VECTOR, INTEGER_MATRIX, REAL, VECTOR, MATRIX, COMPLEX

and for intervals:

INTERVAL, INTERVAL_VECTOR, INTERVAL_MATRIX

All basic arithmetic operations can be used on interval-valued data using the same notation as for fixed numbers. Note that for vectors and matrices the indices start at 1.

For the evaluation of more complex interval-valued functions there are also equivalent functions in BIAS/Profil, whose names are usually obtained from their equivalent in the C language by substituting the first letter with the corresponding upper-case letter: for example the evaluation of $\sin(X)$, where $X$ is an interval, will be obtained by calling the function Sin($X$). We have also introduced in ALIAS some other mathematical operators whose names are derived from their Maple implementation: ceil, floor, round.

Table 2.1 indicates the substitution for the most used functions.

Note also that the mathematical operators

A special operator is defined in the procedure ALIAS_Signum: formally it implements the signum operator of Maple, defined as $\mathrm{signum}(x)=x/\vert x\vert$, which is not defined at $x=0$. For an interval $X$, ALIAS_Signum($X$) returns:

- 1 if $\underline{X}>0$
- -1 if $\overline{X}<0$
- 1 if $\vert X\vert$ is lower than ALIAS_Value_Sign_Signum and ALIAS_Sign_Signum is positive
- -1 if $\vert X\vert$ is lower than ALIAS_Value_Sign_Signum and ALIAS_Sign_Signum is negative
- [-1,1] otherwise
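A sketch of these five cases on a hypothetical `Interval` struct is given below. The two globals `value_sign_signum` and `sign_signum` are illustrative stand-ins for ALIAS_Value_Sign_Signum and ALIAS_Sign_Signum with arbitrary values, and $\vert X\vert$ is taken to be the largest absolute value of the bounds of $X$ (an assumption of this sketch, not taken from the ALIAS source).

```cpp
#include <algorithm>
#include <cassert>

struct Interval { double lo; double hi; };

// Illustrative stand-ins for ALIAS_Value_Sign_Signum and ALIAS_Sign_Signum.
double value_sign_signum = 1e-10;
int sign_signum = 1;

Interval signum(Interval x) {
    if (x.lo > 0.0) return {1.0, 1.0};    // X entirely positive
    if (x.hi < 0.0) return {-1.0, -1.0};  // X entirely negative
    // Here X contains 0, so |X| = max(-lo, hi).
    const double mag = std::max(-x.lo, x.hi);
    if (mag < value_sign_signum && sign_signum > 0) return {1.0, 1.0};
    if (mag < value_sign_signum && sign_signum < 0) return {-1.0, -1.0};
    return {-1.0, 1.0};
}
```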

The derivative of ALIAS_Signum is defined in the procedure ALIAS_Diff_Signum. Formally this derivative is 0 for any $x$ not equal to 0. In our implementation ALIAS_Diff_Signum($X$) will return 0 except if $\vert X\vert$ is lower than ALIAS_Value_Sign_Signum, in which case the procedure returns [-1e11,1e11].

The derivative of the absolute value is defined in the procedure ALIAS_Diff_Abs. If the interval X includes 0 the procedure returns [-1e11,1e11] otherwise it returns ALIAS_Signum(X).
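The two derivative procedures can be sketched in the same style. The `Interval` struct, the threshold `value_sign_signum` (standing in for ALIAS_Value_Sign_Signum) and the reading of $\vert X\vert$ as the largest absolute bound are assumptions of this illustration; the bound 1e11 is the one quoted above.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Interval { double lo; double hi; };

double value_sign_signum = 1e-10;   // illustrative value
const Interval kHuge{-1e11, 1e11};  // returned where the derivative is undefined

Interval diff_signum(Interval x) {
    const double mag = std::max(std::fabs(x.lo), std::fabs(x.hi));
    if (mag < value_sign_signum) return kHuge;
    return {0.0, 0.0};
}

Interval diff_abs(Interval x) {
    if (x.lo <= 0.0 && 0.0 <= x.hi) return kHuge;  // X includes 0
    // Otherwise the derivative of |x| is signum(x), i.e. +1 or -1 on all of X.
    return x.lo > 0.0 ? Interval{1.0, 1.0} : Interval{-1.0, -1.0};
}
```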

Using the above procedures, a user who has to write an interval-valued function converts the C source code of the function using the substitutions defined above, each C mathematical function being replaced by its interval equivalent.

Special care has to be taken when transforming an equation into its interval equivalent, as the formulation may play a role in the efficiency. In particular you should avoid as much as possible multiple occurrences of the same variable, as this will usually lead to an overestimation of the interval evaluation.
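The sketch below shows both the C-to-interval conversion and the effect of multiple occurrences, on the function $f(x)=x^2-2x+1$ (an example chosen for this illustration). The interval version is obtained by replacing `double` with a minimal hypothetical `Interval` struct (not the BIAS/Profil type); rewriting $f$ as $(x-1)(x-1)$ reduces the number of occurrences of $x$ from three to two and already gives a much tighter result.

```cpp
#include <algorithm>
#include <cassert>

// Plain C version of the function
double f_c(double x) { return x * x - 2 * x + 1; }

struct Interval { double lo; double hi; };

Interval operator-(Interval x, Interval y) { return {x.lo - y.hi, x.hi - y.lo}; }
Interval operator+(Interval x, double k) { return {x.lo + k, x.hi + k}; }
Interval operator-(Interval x, double k) { return {x.lo - k, x.hi - k}; }

Interval operator*(Interval x, Interval y) {
    const double p[4] = {x.lo * y.lo, x.lo * y.hi, x.hi * y.lo, x.hi * y.hi};
    return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

Interval operator*(double k, Interval x) { return Interval{k, k} * x; }

// Direct translation of the C code: x occurs three times
Interval f_naive(Interval x) { return x * x - 2.0 * x + 1.0; }

// Same function written as (x - 1)(x - 1): x occurs twice
Interval f_factored(Interval x) { return (x - 1.0) * (x - 1.0); }
```

On $X=[0,2]$, where the true range of $f$ is $[0,1]$, `f_naive` returns $[-3,5]$ while `f_factored` returns $[-1,1]$.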

Note that all the even powers of an interval are better managed with the Sqr and Power procedures. Indeed, consider the interval $X=[-1,1]$: the interval product $X*X$ leads to the interval [-1,1], while Sqr($X$) leads to [0,1].
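The difference comes from the dependency problem: a general product treats its two factors as independent intervals. A dedicated square function, sketched below on a hypothetical `Interval` struct (the names `mul` and `sqr` are illustrative, not the BIAS/Profil procedures), exploits the fact that both factors are the same variable.

```cpp
#include <algorithm>
#include <cassert>

struct Interval { double lo; double hi; };

// General product: the two factors are treated as independent
Interval mul(Interval x, Interval y) {
    const double p[4] = {x.lo * y.lo, x.lo * y.hi, x.hi * y.lo, x.hi * y.hi};
    return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Square: the result can never be negative
Interval sqr(Interval x) {
    if (x.lo >= 0.0) return {x.lo * x.lo, x.hi * x.hi};  // X >= 0
    if (x.hi <= 0.0) return {x.hi * x.hi, x.lo * x.lo};  // X <= 0
    const double m = std::max(-x.lo, x.hi);              // X contains 0
    return {0.0, m * m};
}
```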

For an interval $X$ the width of the interval is obtained by using the procedure Diam($X$), while we have $\underline{X}=$ Inf($X$) and $\overline{X}=$ Sup($X$).

We will denote by box a set of intervals which define the possible values of the unknowns. By extension, and according to the context, boxes may also denote a set of such sets. Function intervals will denote the interval values of a set of functions for a given box, while solution intervals will denote the boxes which are considered to be solutions of a system of functions.

Problems with the interval-valuation of an expression

An important point is that not all expressions can be evaluated using interval arithmetic. Namely, the constraints that prohibit the interval evaluation of an expression are:

- a denominator should not include 0
- the argument of a square root should be non-negative
- the argument of arcsin and arccos should be included in [-1,1]
- the argument of log, ln, log10 should be positive
- the argument of arccosh should be greater than or equal to 1
- the argument of arctanh should be strictly included in (-1,1)
- the argument $x$ of $x^y$, where $y$ is not an integer, should be positive
- the argument $x$ of $e^x$ should not be too large, to avoid overflow problems.
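When writing one's own evaluation procedure, these constraints can be checked before calling the elementary function. A minimal sketch for three of them follows; the `Interval` struct and the `checked_*` helpers are hypothetical, they use `std::optional` (C++17) to signal that the interval evaluation is not possible, and the bounds are not rounded outward.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Interval { double lo; double hi; };

// 1/X: not defined if X contains 0
std::optional<Interval> checked_inverse(Interval x) {
    if (x.lo <= 0.0 && 0.0 <= x.hi) return std::nullopt;
    return Interval{1.0 / x.hi, 1.0 / x.lo};  // 1/x is decreasing on X
}

// sqrt(X): every value of X must be >= 0
std::optional<Interval> checked_sqrt(Interval x) {
    if (x.lo < 0.0) return std::nullopt;
    return Interval{std::sqrt(x.lo), std::sqrt(x.hi)};
}

// log(X): every value of X must be > 0
std::optional<Interval> checked_log(Interval x) {
    if (x.lo <= 0.0) return std::nullopt;
    return Interval{std::log(x.lo), std::log(x.hi)};
}
```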

If you use your own evaluation procedure, are aware of such evaluation problems and modify the returned values when they occur, it is good practice to set the C++ flags ALIAS_ChangeF and ALIAS_ChangeJ to 1 (default value 0) whenever such a change occurs. Currently the interval Newton scheme embedded in some of the solving procedures of ALIAS will not be used if one of these flags is set to 1 during the calculation.

Dealing with infinity

In some specific cases we may have to deal with intervals that have infinite bounds. These quantities are represented using the BIAS convention: BiasNegInf represents negative infinity and BiasPosInf positive infinity.

Non 0-dimensional system

Although the solving procedures of ALIAS are mostly intended for 0-dimensional systems (i.e. systems having a finite number of solutions), most of them can still be used for non 0-dimensional systems. In that case the result will be a set of boxes approximating the solution set. When dealing with such a system it is necessary to set the global variable ALIAS_ND to 1 (its default value is 0) and to define a file name in the character string ALIAS_ND_File: the solution boxes of the system will be stored in the file with this name.

The quality of the approximation may be estimated with the flags ALIAS_Volume_In and ALIAS_Volume_Neglected, which give respectively the total volume of the solution boxes and the total volume of the neglected boxes (i.e. the boxes for which the algorithm has not been able to determine whether or not they are a solution of the system).

Note that there are special procedures for 1-dimensional systems, see chapter 9.
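As an illustration of what these two quantities measure, the volume of a box can be taken as the product of the widths of its intervals, and a total volume as the sum over a list of boxes. The sketch below is an assumption of how such a total may be computed, not the ALIAS code; `Interval`, `Box`, `volume` and `total_volume` are hypothetical names.

```cpp
#include <cassert>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;

// Volume of a box: product of the widths of its intervals
double volume(const Box& b) {
    double v = 1.0;
    for (const Interval& x : b) v *= x.hi - x.lo;
    return v;
}

// Sum of the volumes of a list of boxes (e.g. the solution boxes)
double total_volume(const std::vector<Box>& boxes) {
    double s = 0.0;
    for (const Box& b : boxes) s += volume(b);
    return s;
}
```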

General purpose solving algorithm

This algorithm makes it possible to determine approximately the solutions of a system of $m$ equations and inequalities in $n$ unknowns. Hence this method may be used to solve a system composed of $m_1$ equations $F_i(x_1,\dots,x_n)=0$, $m_2$ inequalities $G_j(x_1,\dots,x_n)\le 0$ and $m_3$ inequalities $H_k(x_1,\dots,x_n)\ge 0$.

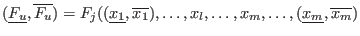

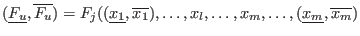

Mathematical background

Principle

Let $\{x_1,x_2,\dots,x_n\}$ be the set of unknowns and let $X=\{X_1,X_2,\dots,X_n\}$ be the set of $n$ intervals in which you are searching for the solutions of the $n$ equations $F_1(x_1,\dots,x_n)=0,\ \dots,\ F_n(x_1,\dots,x_n)=0$ (for the sake of simplicity we don't consider inequalities, but the extension to inequalities is straightforward).

We will denote by $F_j(X)$ the interval value of $F_j$ when this function is evaluated for the box $X$ of the unknowns, while $F(X)$ will denote the $n$-dimensional interval vector constituted of the $F_j(X)$ when the unknowns have the interval values defined by the set $X$.

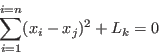

The algorithm will use a list $\mathcal{L}$ of boxes $X^1, X^2, \dots$ whose maximal size $M$ is an input of the program. This list is initialized with $X^1=X$. The number of boxes currently in the list is $N$, and therefore at the start of the program $N=1$. The algorithm will also use an accuracy $\epsilon$ on the variables and $\epsilon_F$ on the functions. The norm of a box $X^i$ is defined as:

$$
\|X^i\| = \max_{k=1,\dots,n}\left(\overline{X^i_k}-\underline{X^i_k}\right)
$$

The norm of the interval vector $F(X^i)$ is defined in the same way, as the largest width of its components:

$$
\|F(X^i)\| = \max_{j=1,\dots,n}\left(\overline{F_j(X^i)}-\underline{F_j(X^i)}\right)
$$

The algorithm uses an index $i$, initialized to 1, and proceeds as follows:

1. if $i>N$, return the solution boxes found so far and exit
2. bisect $X^i$, which produces $2^n$ new interval vectors $X^{i_1},\dots,X^{i_{2^n}}$, and set the number $k$ of retained boxes to 0
3. for $j=1$ to $2^n$:
   - evaluate $F(X^{i_j})$
   - if there exists $l$ in $[1,n]$ such that $\underline{F_l(X^{i_j})}>0$ or $\overline{F_l(X^{i_j})}<0$, then $X^{i_j}$ contains no solution: discard it and go to the next $j$
   - if $\|X^{i_j}\|<\epsilon$ or $\|F(X^{i_j})\|<\epsilon_F$, then store $X^{i_j}$ in the solution list, increment the number of solutions and go to the next $j$
   - otherwise store $X^{i_j}$ among the retained boxes, increment $k$ and go to the next $j$
4. if $k=0$, increment $i$ and go to step 1
5. if $N+k-1>M$, return a failure code, as there is no space available to store the new intervals
6. otherwise store one of the retained boxes in place of $X^i$ and the other $k-1$ at the end of $\mathcal{L}$, starting at position $N+1$. Add $k-1$ to $N$ and go to step 1
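A compact C++ sketch of the steps above is given below. It is a simplified illustration under several assumptions: a hypothetical `Interval` struct without outward rounding, the system passed as a `std::function` returning the interval values $F_j(X)$, 0-based indices, and the first retained box (rather than the best-ranked one) placed in position $i$.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;
using System = std::function<std::vector<Interval>(const Box&)>;

// Largest width of the components (used for both ||X|| and ||F(X)||)
double norm(const std::vector<Interval>& v) {
    double w = 0.0;
    for (const Interval& x : v) w = std::max(w, x.hi - x.lo);
    return w;
}

// Returns false if the list would exceed max_boxes (failure code).
bool solve(const System& F, const Box& init, double eps, double epsF,
           std::size_t max_boxes, std::vector<Box>& solutions) {
    std::vector<Box> boxes(1, init);  // the list L; N = boxes.size()
    std::size_t i = 0;                // index of the box being processed
    while (i < boxes.size()) {                          // step 1
        const Box current = boxes[i];
        const std::size_t n = current.size();
        std::vector<Box> kept;                          // retained boxes, k = kept.size()
        // Step 2: full bisection, bit t of mask selects the half of variable t
        for (std::size_t mask = 0; mask < (std::size_t{1} << n); ++mask) {
            Box child = current;
            for (std::size_t t = 0; t < n; ++t) {
                const double mid = 0.5 * (current[t].lo + current[t].hi);
                if (mask & (std::size_t{1} << t)) child[t].lo = mid;
                else child[t].hi = mid;
            }
            // Step 3: exclusion test, then solution test
            const std::vector<Interval> fx = F(child);
            const bool excluded = std::any_of(fx.begin(), fx.end(),
                [](const Interval& v) { return v.lo > 0.0 || v.hi < 0.0; });
            if (excluded) continue;                     // no root in child
            if (norm(child) < eps || norm(fx) < epsF) {
                solutions.push_back(child);             // solution box
                continue;
            }
            kept.push_back(child);
        }
        if (kept.empty()) { ++i; continue; }            // step 4
        if (boxes.size() + kept.size() - 1 > max_boxes) return false;  // step 5
        boxes[i] = kept[0];                             // step 6
        boxes.insert(boxes.end(), kept.begin() + 1, kept.end());
    }
    return true;
}
```

For example, with $f(x)=x^2-2$ on $[0,2]$ and $\epsilon=10^{-6}$, every solution box returned is a small interval around $\sqrt{2}$.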

Now three problems have to be dealt with:

- how do we choose the box $X^{i_j}$ that will be put in place of $X^i$, and in which order do we store the other retained boxes at the end of the list?
- can we improve the management of the bisection process in order to complete the algorithm with a limited storage size $M$?
- how do we distinguish distinct solutions?

Managing the bisection and ordering

The second problem is solved in the following way: assume that at some step of the algorithm the bisection process leads to the creation of $k$ new boxes $X^{i_j}$ that have to be stored in the list.

Now we have to manage the ordering of the $X^{i_j}$. We have defined two types of order for a given set of boxes $X^{i_j}$:

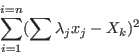

- maximum equation ordering: the boxes are ordered along the value of $C=\max_{l\in[1,n]}\max\left(\vert\underline{F_l(X^{i_j})}\vert,\vert\overline{F_l(X^{i_j})}\vert\right)$. The first box will have the lowest $C$.

- maximum middle-point equation ordering: let $X_m^{i_j}$ be the vector whose components are the middle points of the intervals of $X^{i_j}$. The boxes are ordered along the value of $C=\max_{l\in[1,n]}\vert F_l(X_m^{i_j})\vert$. The first box will have the lowest $C$.
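The two orderings can be sketched as follows, taking the criterion of the first one to be the largest absolute bound of the $F_l$ over the box and that of the second one to be the largest $\vert F_l\vert$ at the mid-point of the box (an interpretation assumed for this sketch). The `Interval`/`Box` types and the function names are hypothetical, the system is passed as a `std::function`, and the mid-point evaluation simply calls the interval function on a degenerate box.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <utility>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;
using System = std::function<std::vector<Interval>(const Box&)>;

// Largest absolute bound over all function intervals
double max_abs_bound(const std::vector<Interval>& fx) {
    double c = 0.0;
    for (const Interval& v : fx) c = std::max({c, std::fabs(v.lo), std::fabs(v.hi)});
    return c;
}

// Maximum equation ordering criterion, evaluated on the whole box
double max_equation_criterion(const System& F, const Box& b) {
    return max_abs_bound(F(b));
}

// Maximum middle-point equation ordering criterion
double mid_point_criterion(const System& F, const Box& b) {
    Box m;
    for (const Interval& x : b) {
        const double c = 0.5 * (x.lo + x.hi);
        m.push_back({c, c});  // degenerate interval at the mid-point
    }
    return max_abs_bound(F(m));
}

// Sort boxes by increasing criterion: the first box has the lowest C
std::vector<Box> order_boxes(const std::vector<Box>& boxes, const System& F,
                             double (*criterion)(const System&, const Box&)) {
    std::vector<std::pair<double, Box>> keyed;
    for (const Box& b : boxes) keyed.emplace_back(criterion(F, b), b);
    std::stable_sort(keyed.begin(), keyed.end(),
                     [](const std::pair<double, Box>& a, const std::pair<double, Box>& c) {
                         return a.first < c.first;
                     });
    std::vector<Box> out;
    for (const auto& kb : keyed) out.push_back(kb.second);
    return out;
}
```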

This method of managing the bisection is called the Direct Storage mode and is the default mode in ALIAS. But there is another mode, called the Reverse Storage mode. In this mode we still substitute $X^i$ by the box $X^{i_j}$ having the lowest $C$ but, instead of adding the remaining $k-1$ boxes at the end of the list $\mathcal{L}$, we shift by $k-1$ positions the boxes that follow $X^i$ in the list, thereby freeing the storage of $X^{i+1},\dots,X^{i+k-1}$, which is used to store the remaining $k-1$ boxes. In other words, we may consider the solving procedure as finding the leaves of a tree which are solutions of the problem: in the Direct Storage mode we may jump during the bisection from one branch of the tree to another, while in the Reverse Storage mode we examine all the leaves issued from a branch of the tree before examining the other branches. If we are looking for all the solutions, the storage mode has no influence on the total number of boxes that will be examined. But the Reverse Storage mode may have two advantages:

- if we are looking for only one solution, it may allow us to find it more rapidly (but this is not guaranteed, see section 2.3.5.4),

- as we are following one branch at a time, we will very rapidly consider small boxes that will either lead to a solution or be discarded, thereby freeing some storage space. Hence the storage space available in the reverse mode will in general be larger than in the direct mode: a practical consequence is that a problem may fail to be solved in the direct mode because of insufficient storage while, with the same amount of storage, the solutions will be obtained in the reverse mode.
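The only difference between the two storage modes is where the $k-1$ extra boxes are written. The sketch below (hypothetical function names, 0-based positions, and a generic element type so that it can be shown on integer labels) makes this explicit; it assumes at least one retained box.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Direct Storage: the first retained box replaces position i,
// the others are appended at the end of the list.
template <typename T>
void store_direct(std::vector<T>& list, std::size_t i, const std::vector<T>& kept) {
    list[i] = kept[0];
    list.insert(list.end(), kept.begin() + 1, kept.end());
}

// Reverse Storage: the first retained box replaces position i, the boxes after i
// are shifted by kept.size() - 1 and the others fill the freed positions i+1, ...
template <typename T>
void store_reverse(std::vector<T>& list, std::size_t i, const std::vector<T>& kept) {
    list[i] = kept[0];
    list.insert(list.begin() + static_cast<std::ptrdiff_t>(i + 1), kept.begin() + 1, kept.end());
}
```

With the list $(10,20,30)$, $i=0$ and retained boxes $(1,2,3)$, the direct mode gives $(1,20,30,2,3)$ and the reverse mode $(1,2,3,20,30)$: in the reverse mode the descendants of a box are processed before the boxes that followed it, which yields the branch-by-branch exploration described above.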

An alternative: the single bisection

A possible way to reduce the combinatorial explosion of the previous algorithm is to bisect not all the variables (i.e. to use the full bisection mode) but only one of them (it must be noted that the algorithms in ALIAS will not accept a full bisection mode if the number of unknowns exceeds 10). This may reduce the computation time, as the number of function evaluations may be reduced. But the problem is then to determine which variable should be bisected. All the solving algorithms of ALIAS may manage this single bisection by setting the flag Single_Bisection to a value different from 0.

The value of this global variable selects one of various bisection modes. Although the behavior of a mode may change according to the algorithm, here are the possible modes for the general solving algorithm and the corresponding values of Single_Bisection:

- 1: we just split the variable having the largest width (valid for all algorithms). Note however that it is still possible to order the bisection, i.e. to split first a subset of the unknowns until their width is small (i.e. lower than ALIAS_Accuracy), then another subset and so on. This is obtained by setting the flag ALIAS_Ordered_Bisection to 1 and defining an integer matrix ALIAS_Order_Bisection whose rows indicate the bisected subsets and should end with 0. For example, if this matrix has as rows [1,3,0],[2,4,5,0], then the algorithm will first bisect the unknowns 1 and 3 until their width is small, then the unknowns 2,4,5. If all unknowns indicated in the rows of the matrix have a small width, then the bisection algorithm reverts to the normal behavior.

- 2: to determine the variable that will be bisected we use the following

approach: we compute the order criteria for the two boxes

that will result from the bisection of variable

that will result from the bisection of variable  and retain the lowest

criteria

and retain the lowest

criteria  . The variable that will be bisected is the one that has the

lowest

. The variable that will be bisected is the one that has the

lowest  except if for at least one variable the interval

evaluation of the function for

except if for at least one variable the interval

evaluation of the function for  or

or  does not contain 0. In

that case the variable that will be bisected is the one that

verify the previous property and which has the lowest

does not contain 0. In

that case the variable that will be bisected is the one that

verify the previous property and which has the lowest  among all

the input intervals having the property.

However to avoid bisecting over and over

the same variable we use another test: let

among all

the input intervals having the property.

However to avoid bisecting over and over

the same variable we use another test: let  be the width of the

interval

be the width of the

interval

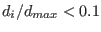

![$[\underline{x_i},\overline{x_i}]$](img118.png) and

and  be the

maximum of all the

be the

maximum of all the  . If

. If

we don't consider the

variable

we don't consider the

variable  as a possible bisection direction.

It is also possible to mix this mode with mode 1. If the integer

variable ALIAS_RANDG is set to a strictly positive value then

ALIAS_RANDG bisection will be performed using mode 2 while the

next bisection will be performed using mode 1 and the process will be

repeated

as a possible bisection direction.

It is also possible to mix this mode with mode 1. If the integer

variable ALIAS_RANDG is set to a strictly positive value then

ALIAS_RANDG bisection will be performed using mode 2 while the

next bisection will be performed using mode 1 and the process will be

repeated

- 3, 4 : similar to 1

- 5: we use a round-robin mode, i.e. each variable is bisected in turn (first $x_1$, then $x_2$ and so on) unless the width of its input interval is less than the desired accuracy on the variable, in which case the bisected variable is the next one having a sufficient width (valid for all algorithms). The flag ALIAS_Round_Robin is used to indicate at each bisection which variable should be bisected.

- 6: we emulate the smear function (see section 2.4.1.3) with an estimation of the gradient based on finite differences (procedure Select_Best_Direction_Grad)

- 7: here again we set the flag ALIAS_Ordered_Bisection to 1 and define an integer matrix ALIAS_Order_Bisection whose rows indicate an order for bisecting the unknowns. The variable with the largest width in the first row will be bisected first, and so on until all the variables in this row have a width lower than ALIAS_Accuracy. We then proceed to the second row. As soon as all variables in all rows have a width lower than ALIAS_Accuracy, we use bisection mode 1.

- 20: the user has defined their own bisection procedure, see section 11.3
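Modes 1 and 5 are the simplest to illustrate. The sketch below returns the (0-based) index of the variable to bisect; `Interval`, `Box` and the function names are hypothetical, and the `last` argument of the round-robin function is meant to play a role similar to ALIAS_Round_Robin (an assumption of this sketch).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;

// Mode 1: index of the variable having the largest width
std::size_t largest_width_variable(const Box& b) {
    std::size_t best = 0;
    for (std::size_t k = 1; k < b.size(); ++k)
        if (b[k].hi - b[k].lo > b[best].hi - b[best].lo) best = k;
    return best;
}

// Mode 5: next variable after `last` (cyclically) whose width is at least eps;
// returns b.size() when every variable is already narrower than eps.
std::size_t round_robin_variable(const Box& b, std::size_t last, double eps) {
    for (std::size_t step = 1; step <= b.size(); ++step) {
        const std::size_t k = (last + step) % b.size();
        if (b[k].hi - b[k].lo >= eps) return k;
    }
    return b.size();
}
```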

There is another mode, called the mixed bisection: among the $n$ variables we will bisect $m<n$ variables, which will lead to $2^m$ new boxes. This mode is obtained by setting the global integer variable ALIAS_Mixed_Bisection to $m$. Whatever the value of Single_Bisection, we will order the variables according to their width and select the $m$ variables having the largest width.
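The selection of the $m$ widest variables and the generation of the $2^m$ sub-boxes can be sketched as follows (a hypothetical helper on hypothetical `Interval`/`Box` types, not the ALIAS implementation); each bit of `mask` chooses the lower or upper half of one selected variable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;

// Bisect the m widest variables of b, producing 2^m sub-boxes.
std::vector<Box> mixed_bisection(const Box& b, std::size_t m) {
    std::vector<std::size_t> idx(b.size());
    std::iota(idx.begin(), idx.end(), std::size_t{0});
    // Order variable indices by decreasing width
    std::stable_sort(idx.begin(), idx.end(), [&b](std::size_t p, std::size_t q) {
        return b[p].hi - b[p].lo > b[q].hi - b[q].lo;
    });
    m = std::min(m, b.size());
    std::vector<Box> out;
    for (std::size_t mask = 0; mask < (std::size_t{1} << m); ++mask) {
        Box child = b;
        for (std::size_t t = 0; t < m; ++t) {
            const std::size_t k = idx[t];  // t-th widest variable
            const double mid = 0.5 * (b[k].lo + b[k].hi);
            if (mask & (std::size_t{1} << t)) child[k].lo = mid;  // upper half
            else child[k].hi = mid;                               // lower half
        }
        out.push_back(child);
    }
    return out;
}
```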

Solutions and Distinct solutions

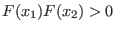

An interval will be considered as a solution for a function of the system in the following cases:

- for equations: the maximal diameter of the intervals is less than a given threshold epsilon and the corresponding interval evaluation of the function contains 0, or the corresponding interval evaluation of the function has a diameter less than a given threshold epsilonf and contains 0

- for inequalities $G(X)\le 0$: the upper bound of the interval evaluation of the function is negative, or the maximal diameter of the intervals is less than a given threshold epsilon and the corresponding interval evaluation of the function has a negative lower bound, or the corresponding interval evaluation of the function has a diameter less than a given threshold epsilonf and contains 0

- for inequalities $H(X)\ge 0$: the lower bound of the interval evaluation of the function is positive, or the maximal diameter of the intervals is less than a given threshold epsilon and the corresponding interval evaluation of the function has a positive upper bound, or the corresponding interval evaluation of the function has a diameter less than a given threshold epsilonf and contains 0
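The three cases can be written as a single predicate. In the sketch below (hypothetical names; `eps` and `epsf` correspond to epsilon and epsilonf, `box_width` to the maximal diameter of the box and `fx` to the interval evaluation of one function) each `return` mirrors one item of the list above.

```cpp
#include <cassert>

struct Interval { double lo; double hi; };

enum class Kind { Equation, LessOrEqualZero, GreaterOrEqualZero };

bool is_solution(Kind kind, double box_width, Interval fx, double eps, double epsf) {
    const bool contains0 = fx.lo <= 0.0 && 0.0 <= fx.hi;
    const bool small_box = box_width < eps;
    const bool small_f = (fx.hi - fx.lo) < epsf && contains0;
    switch (kind) {
        case Kind::Equation:
            return (small_box && contains0) || small_f;
        case Kind::LessOrEqualZero:
            return fx.hi < 0.0 || (small_box && fx.lo < 0.0) || small_f;
        case Kind::GreaterOrEqualZero:
            return fx.lo > 0.0 || (small_box && fx.hi > 0.0) || small_f;
    }
    return false;
}
```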

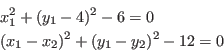

Assume that two solutions $X^1$, $X^2$ have been found with the algorithm. We will first consider the case where we have to solve a system of $n$ equations in $n$ unknowns, possibly with additional inequality constraints.

First we will check with the Miranda theorem (see section 3.1.5) whether $X^1$, $X^2$ include one (or more) solution(s). If both solutions are Miranda, then they will be kept as solutions. If one of them is Miranda and the other one is not, we will consider the distance between the mid-points of $X^1$ and $X^2$: if this distance is lower than a given threshold, we will keep as solution only the Miranda one. If none of $X^1$, $X^2$ is Miranda, we keep these solutions, provided that their distance is greater than the threshold. Note that in that case these solutions may disappear if a Miranda solution is found later on such that the distance between these solutions and the Miranda one is lower than the threshold.

In the other case, the solutions will be ranked according to the chosen order, and a solution whose distance to a better-ranked solution is lower than the threshold will be discarded.
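The ranking-based filtering of the non-square case can be sketched as below. The sketch assumes the Euclidean distance between box mid-points and compares each solution only with the better-ranked solutions already retained; both choices, as well as the hypothetical names, are assumptions, and the Miranda test of the square case is not included.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;

// Euclidean distance between the mid-points of two boxes of the same dimension
double midpoint_distance(const Box& a, const Box& b) {
    double s = 0.0;
    for (std::size_t k = 0; k < a.size(); ++k) {
        const double d = 0.5 * (a[k].lo + a[k].hi) - 0.5 * (b[k].lo + b[k].hi);
        s += d * d;
    }
    return std::sqrt(s);
}

// `ranked` is assumed sorted from best to worst according to the chosen order.
std::vector<Box> distinct_solutions(const std::vector<Box>& ranked, double threshold) {
    std::vector<Box> kept;
    for (const Box& s : ranked) {
        bool too_close = false;
        for (const Box& k : kept)
            if (midpoint_distance(s, k) < threshold) { too_close = true; break; }
        if (!too_close) kept.push_back(s);
    }
    return kept;
}
```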

The 3B method

In addition to the classical bisection process all the solving algorithms in the ALIAS library may make use of another method called the 3B-consistency approach [2].

Although its principle is fairly simple, it is usually very efficient (but not always, see section 2.4.3.1). In this method we consider each variable $x_i$ in turn and its range $[\underline{x_i},\overline{x_i}]$. Let $\tilde{x}_i$ be the middle point of this range. We will first calculate the interval evaluation of the functions in the system with the full ranges for the variables, except for the variable $x_i$, whose range will be $[\underline{x_i},\tilde{x}_i]$. Clearly, if one of the equations is not satisfied (i.e. its interval evaluation does not contain 0), then we may reduce the range of the variable $x_i$ to $[\tilde{x}_i,\overline{x_i}]$. If this is not the case, we will define a new $\tilde{x}_i$ as the middle point of the interval $[\underline{x_i},\tilde{x}_i]$ and repeat the process until either we have found an equation that is not satisfied (in which case the interval for the variable $x_i$ will be reduced to $[\tilde{x}_i,\overline{x_i}]$) or the width of the interval $[\underline{x_i},\tilde{x}_i]$ is lower than a given threshold $\delta$. Using this process we will reduce the range for the variable $x_i$ on the left side, and we may clearly use a similar procedure to reduce it on the right side. The 3B procedure will be repeated if:

- the variable ALIAS_Full3B is set to 1 or 2 (default value: 0) and if there are two changes on the variable (a change is counted when a variable is changed either on the left or right side) or the change in at least one variable is larger than ALIAS_Full3B_Change

- the variable ALIAS_Full3B is set to 1 and the change in at least one variable is larger than ALIAS_Full3B_Change
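A sketch of the left-side reduction described above, for one variable, is given below. The `Interval`/`Box` types, the `System` callback and the function names are hypothetical; the right-side reduction is symmetric, and the repetition rules listed above are not implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;
using System = std::function<std::vector<Interval>(const Box&)>;

// True if at least one function evaluation on b does not contain 0
bool infeasible(const System& F, const Box& b) {
    const std::vector<Interval> fx = F(b);
    return std::any_of(fx.begin(), fx.end(),
                       [](const Interval& v) { return v.lo > 0.0 || v.hi < 0.0; });
}

// Left-side 3B reduction of variable k with threshold delta:
// test [lo, mid]; if it cannot contain a solution the range becomes [mid, hi],
// otherwise mid is moved to the middle of [lo, mid] and the test is repeated.
void reduce_left_3b(const System& F, Box& b, std::size_t k, double delta) {
    const double lo = b[k].lo;
    double mid = 0.5 * (b[k].lo + b[k].hi);
    while (mid - lo >= delta) {
        Box test = b;
        test[k].hi = mid;  // full ranges for the other variables
        if (infeasible(F, test)) {
            b[k].lo = mid;
            return;
        }
        mid = 0.5 * (lo + mid);
    }
}
```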

For all the algorithms of ALIAS this method may be used by setting the flag ALIAS_Use3B to 1 or 2. In addition you will have to indicate for each variable a threshold $\delta_i$ and a maximal width for the range (if the width of the range is greater than this maximal value the method is not used). This is done through the VECTOR variables ALIAS_Delta3B and ALIAS_Max3B. The behavior of the method differs as follows depending on whether ALIAS_Use3B is set to 1 or 2:

- 1: let e be the value of ALIAS_Delta3B for the current variable, whose range is [a,b]. On the left side we will check whether [a,a+e] can be shown to contain no solution. If yes, then the current range of the variable becomes [a+e,b]. We then start again, but this time doubling the size of the interval we check, i.e. we test the elimination of [a+e,a+3e], then [a+3e,a+7e], and stop as soon as the check on one interval fails. For example, assume that the test for [a+3e,a+7e] fails; then the updated range for the variable will be [a+3e,b].

- 2: the procedure at the beginning is similar to the previous one but changes when the check fails. In the previous example, after the failure for [a+3e,a+7e] we start again to examine whether an interval of width e can be eliminated. Hence we will check [a+3e,a+4e], then [a+4e,a+6e] and so on. Consequently, in this mode we will get as left bound for the interval the highest possible value A such that [A,A+e] cannot be eliminated. Clearly in that case the procedure will be more computationally intensive but will produce better results.
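Both modes can be sketched on the left side of one variable. The names and types are hypothetical, `e` plays the role of the ALIAS_Delta3B value of the variable, and slices that would go past the upper bound of the range are simply not tested in this sketch.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Interval { double lo; double hi; };
using Box = std::vector<Interval>;
using System = std::function<std::vector<Interval>(const Box&)>;

// True if at least one function evaluation on b does not contain 0
bool infeasible(const System& F, const Box& b) {
    const std::vector<Interval> fx = F(b);
    return std::any_of(fx.begin(), fx.end(),
                       [](const Interval& v) { return v.lo > 0.0 || v.hi < 0.0; });
}

// Mode 1: slices of width e, 2e, 4e, ... are removed from the left bound
// until one slice cannot be eliminated.
void reduce_left_mode1(const System& F, Box& b, std::size_t k, double e) {
    double w = e;
    while (b[k].lo + w <= b[k].hi) {
        Box test = b;
        test[k].hi = b[k].lo + w;
        if (!infeasible(F, test)) return;  // check failed: keep current bound
        b[k].lo += w;                      // slice eliminated
        w *= 2.0;                          // double the next slice
    }
}

// Mode 2: as mode 1, but after a failure with a slice wider than e the width
// is reset to e; stop only when a slice of width e cannot be eliminated.
void reduce_left_mode2(const System& F, Box& b, std::size_t k, double e) {
    double w = e;
    while (b[k].lo + w <= b[k].hi) {
        Box test = b;
        test[k].hi = b[k].lo + w;
        if (infeasible(F, test)) {
            b[k].lo += w;
            w *= 2.0;
        } else if (w > e) {
            w = e;
        } else {
            return;
        }
    }
}
```

On $f(x)=x-10$ with $[a,b]=[0,20]$ and $e=1$, mode 1 stops at the left bound 7 (the slice [7,15] contains the root), while mode 2 continues with slices of width 1 and stops at 9, the largest $A$ for which $[A,A+1]$ cannot be eliminated.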

For example, the 3B method may be configured as follows:

```cpp
ALIAS_Use3B=1;
Resize(ALIAS_Delta3B,25);Resize(ALIAS_Max3B,25);
for(int i=1;i<=25;i++)
{
  ALIAS_Delta3B(i)=0.1;ALIAS_Max3B(i)=7;
}
```

which indicates that we will start using the 3B method as soon as the width of a range is lower than 7, with 0.1 as the elementary increment e used when trying to reduce the range.

A drawback of the 3B method is that it may imply a large number of calls to the evaluation of the functions. The largest number of evaluations will be obtained by setting ALIAS_Use3B to 2 and ALIAS_Full3B to 1, while the lowest number will be obtained if these values are 1 and 0. It is possible to specify that only a subset of the functions (the simplest) will be checked in the process. This is done with the global variable ALIAS_SubEq3B, an integer array whose size should be set to the number of functions and for which a value of 1 at position $i$ indicates that the function $F_i$ will be used in the 3B process, while a value of 0 indicates that the function will not be used.

For example:

```cpp
Resize(ALIAS_SubEq3B,10);
Clear(ALIAS_SubEq3B);
ALIAS_SubEq3B(1)=1; ALIAS_SubEq3B(2)=1;
```

indicates that only the first two functions will be used in the 3B process. If you are using your own solving procedure, you must also indicate that only part of the equations is used by setting the flag ALIAS_Use_SubEq3B to 1.

In some cases it may be worthwhile to use the 3B method at least once, even if the width of the range is larger than ALIAS_Max3B. If the flag ALIAS_Always3B is set to 1, the 3B method will be used once to try to remove the left or right half of the range of each variable.

If you are also using a simplification procedure (see section 2.3.3), you may prevent it from being called within the 3B method by setting the flag ALIAS_Use_Simp_In_3B to 0. You may also adapt the simplification procedure when it is called within the 3B method: for that purpose, the flag ALIAS_Simp_3B is set to 1 instead of 0 when the simplification procedure is called within the 3B method. For some procedures, if ALIAS_Use_Simp_In_3B is set to 2, then ALIAS_Simp_3B is set to 1 when the whole input is checked. If ALIAS_Use_Simp_In_3B is set to a value larger than 2, then ALIAS_Simp_3B is set to 0.

Some methods allow the 3B method to start with a large increment (half the width of the interval) that is decreased if it does not work, instead of a small increment that is progressively increased. This is done by setting the flag ALIAS_Switch_3B to a value between 0 and 1. If the width of the current interval is lower than the width of the initial search domain multiplied by this flag, a small increment is used; otherwise a large increment is used.

When the routine that evaluates the expressions uses their derivatives, we may avoid using these derivatives if the ranges in the box are too wide. To do so, set the size of the vector ALIAS_Func_Grad to the number of unknowns. Then assign to each component the maximal width of the corresponding variable's range for which derivatives will still be used. If any range has a width larger than its limit, no derivatives will be used.

Note also that 3B-consistency is not the only consistency test that can be used. For example, the ALIAS-Maple manual describes another consistency test for equations, called 2B-consistency or Hull-consistency, implemented in the procedure HullConsistency (HullIConsistency implements it for inequalities). See also section 2.17 for an ALIAS-C++ implementation of the 2B method and section 11.4 for detailed calls to the 3B procedures.

Simplification procedure

Most of the procedures in ALIAS accept as optional last argument the name of a simplification procedure. This is a user-supplied procedure that takes the current box as input and performs some further reduction of its width, or even determines that the box contains no solution, in which case it should return -1. Such a procedure must be implemented as:

```cpp
int Simp_Proc(INTERVAL_VECTOR & P)
```

where P is the current box. This procedure must return -1 if the box contains no solution and any other integer otherwise. If the procedure reduces an interval, then P must be updated accordingly.

This type of procedure allows the user to add information to the algorithm without adding further equations. The simplification procedure is applied to a box before the bisection. It is also used within the 3B method if this heuristic is applied.

Note that the Maple package associated with ALIAS can, in some cases, automatically produce the code for such a procedure (see the ALIAS-Maple manual). Section 2.17 presents a standard simplification procedure that may be used for almost any system of equations.
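As an illustration, here is a sketch of a simplification procedure in the spirit of Simp_Proc. It uses a hypothetical minimal `Interval` type instead of the ALIAS INTERVAL_VECTOR: the type is 0-based and lacks the outward rounding that a real interval library provides.

- It uses the equation $x^2+y^2=50$ of Example 2 to narrow the range of x from the range of y.
- It assumes that both ranges are non-negative, as in that example's search domain.
- It returns -1 when the box cannot contain a solution.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-ins for the ALIAS interval types (no outward rounding).
struct Interval { double lo, hi; };
using Box = std::vector<Interval>;   // 0-based, unlike INTERVAL_VECTOR

// Simplification sketch for the equation x^2 + y^2 = 50 with x = P[0], y = P[1],
// assuming both ranges are non-negative. Narrows x in place.
// Returns -1 if the box cannot contain a solution, 0 otherwise.
int SimpProcCircle(Box& P) {
    Interval& x = P[0];
    const Interval& y = P[1];
    assert(x.lo >= 0 && y.lo >= 0);
    // x^2 = 50 - y^2 must lie in [50 - y.hi^2, 50 - y.lo^2]
    double r2lo = 50.0 - y.hi * y.hi;
    double r2hi = 50.0 - y.lo * y.lo;
    if (r2hi < 0) return -1;                       // x^2 would be negative
    double lo = std::max(x.lo, std::sqrt(std::max(0.0, r2lo)));
    double hi = std::min(x.hi, std::sqrt(r2hi));
    if (lo > hi) return -1;                        // empty intersection
    x.lo = lo;                                     // update the box in place
    x.hi = hi;
    return 0;
}
```

Consider the search domain x=[0.9,7.1], y=[2.1,7.1]. The sketch reduces the upper bound of x to about 6.752, which is $\sqrt{50-2.1^2}$.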

Implementation

The algorithm is implemented as:

```cpp
int Solve_General_Interval(int m,int n,
                           INTEGER_VECTOR Type_Eq,
                           INTERVAL_VECTOR (* IntervalFunction)(int,int,INTERVAL_VECTOR &),
                           INTERVAL_VECTOR & TheDomain,
                           int Order,int M,int Stop,
                           double epsilon,double epsilonf,double Dist,
                           INTERVAL_MATRIX & Solution,int Nb,
                           int (* Simp_Proc)(INTERVAL_VECTOR &))
```

the arguments being:

- m: number of unknowns

- n: number of functions, see the note 2.3.4.1

- Type_Eq: type of the functions, see the note 2.3.4.2

- IntervalFunction: a function which returns the interval vector evaluation of the functions, see the note 2.3.4.3

- TheDomain: box in which we are looking for solutions of the system. A copy of the search domain is available in the global variable ALIAS_Init_Domain

- Order: the type of order which is used to store the intervals created during the bisection process. This order may be either MAX_FUNCTION_ORDER or MAX_MIDDLE_FUNCTION_ORDER. See the note on the order 2.3.4.4.

- M: the maximum number of boxes which may be stored. See the note 2.3.4.5

- Stop: the possible values are 0,1,2

- 0: the algorithm will look for every solution in TheDomain

- 1: the algorithm will stop as soon as 1 solution has been found

- 2: the algorithm will stop as soon as Nb solutions have been found

- epsilon: the maximal width of the solution intervals, see the note 2.3.4.6

- epsilonf: the maximal width of the function intervals for a solution, see the note 2.3.4.6

- Dist: minimal distance between the middle point of two interval solutions, see the note 2.3.4.7

- Solution: an interval matrix of size (Nb,m) which will contain the solution intervals. This list is sorted using the order specified by Order

- Nb: the maximal number of solutions which will be returned by the algorithm

- Simp_Proc: a user-supplied procedure that takes the current box as input and performs some further reduction of its width, or even determines that the box contains no solution, in which case it should return -1. Remember also that you may use the 3B method to improve the efficiency of this algorithm (see section 2.3.2).

Note that the following arguments may be omitted:

- Type_Eq: in that case all the functions are assumed to be equations.

- Simp_Proc: no simplification procedure is provided by the user

Number of unknowns and functions

The only constraint on n,m is that they should be strictly positive. So the algorithm is able to deal with under-constrained or over-constrained systems.

Type of the functions

The i-th value in the array Type_Eq defines the type of the i-th function $F_i$:

- Type_Eq(i)=0 : $F_i$ must verify $F_i(X)=0$

- Type_Eq(i)=1 : $F_i$ must verify $F_i(X)\ge 0$

- Type_Eq(i)=-1 : $F_i$ must verify $F_i(X)\le 0$

Interval Function

The user must provide a function which computes the function intervals for a given box. When designing ALIAS we determined that efficiency requires a procedure able to compute the interval evaluation of all the functions or of only a subgroup of them, so as to avoid unnecessary calculations. Hence the syntax of this procedure is:

```cpp
INTERVAL_VECTOR IntervalFunction (int l1,int l2,INTERVAL_VECTOR & x)
```

- x: an m-dimensional interval vector which defines the intervals for the unknowns

- l1,l2: the function must be able to return the interval values of the functions l1 to l2. The first function has number 1 and the last has number n. So if l1=l2=1, the function should return an interval vector in which only the first component has been computed.

The efficiency of the algorithm is heavily dependent on the way this procedure is written. Two factors are to be considered:

- efficiency of the evaluation

- sharp bound on the evaluation

The evaluation function may be written as:

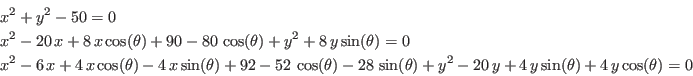

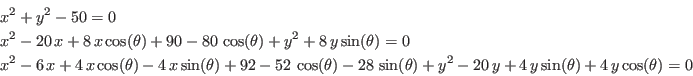

```cpp
e1 = Sqr(x)+Sqr(y)-50.0;
e2 = Sqr(x)-20.0*x+8.0*x*Cos(teta)+90.0-80.0*Cos(teta)+Sqr(y)+8.0*y*Sin(teta);
e3 = Sqr(x)-6.0*x+4.0*x*Cos(teta)-4.0*x*Sin(teta)+92.0-52.0*Cos(teta)-28.0*Sin(teta)
     +Sqr(y)-20.0*y+4.0*y*Sin(teta)+4.0*y*Cos(teta);
```

or, using temporary variables:

```cpp
t1 = Sqr(x); t2 = Sqr(y); t5 = Cos(teta); t6 = x*t5; t9 = Sin(teta); t10 = y*t9;
e1 = t1+t2-50.0;
e2 = t1-20.0*x+8.0*t6+90.0-80.0*t5+t2+8.0*t10;
e3 = t1-6.0*x+4.0*t6-4.0*x*t9+92.0-52.0*t5-28.0*t9+t2-20.0*y+4.0*t10+4.0*y*t5;
```

The second form is more efficient because the intervals Sqr(x), Sqr(y), Cos(teta), Sin(teta), x*Cos(teta) and y*Sin(teta) are computed only once instead of several times.

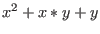

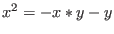

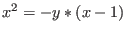

The second point is the sharpness of the evaluation. Consider the polynomial $x^2-x$. If the variable lies in the interval [0,1], the evaluation leads to the interval [-1,1]. The same polynomial may be written in Horner form as $x(x-1)$, which is then evaluated as [-1,0].

Now suppose that $x$ lies in [0.8,1.1]. The initial polynomial is evaluated as [-0.46,0.41], while the Horner form leads to [-0.22,0.11]. This polynomial may also be written as $(x-1)^2+(x-1)$, the centered form at 1. Its evaluation leads to [-0.2,0.14], which has a sharper lower bound than the Horner form.

Note that the Horner form is very efficient for evaluating a polynomial, but it does not always give the sharpest bounds on the polynomial, although this is sometimes claimed in the literature. Unfortunately, there is no known method to determine the best way to express a given function in order to get the sharpest possible bounds. For complex expressions you may use the procedures MinimalCout or Code of ALIAS-Maple, which try to produce the least costly formulation of a given expression.
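The three evaluations above can be reproduced with a few lines of naive interval arithmetic. This sketch is illustrative only:

- it uses a hypothetical `Interval` struct without outward rounding, so the bounds are not rigorous in floating point;
- `Sqr` is evaluated as a true square rather than as `x*x`, which is why it returns [0,0.04] for [-0.2,0.1].

```cpp
#include <algorithm>
#include <cassert>

// Naive interval type: no outward rounding (illustration only).
struct Interval { double lo, hi; };

Interval Const(double c) { return {c, c}; }
Interval Add(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }
Interval Sub(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval Mul(Interval a, Interval b) {
    double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
    return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}
// Square: tighter than Mul(a, a) when a contains 0.
Interval Sqr(Interval a) {
    double l = a.lo * a.lo, h = a.hi * a.hi;
    if (a.lo <= 0 && a.hi >= 0) return {0.0, std::max(l, h)};
    return {std::min(l, h), std::max(l, h)};
}

// Three equivalent expressions of x^2 - x.
Interval Natural(Interval x) { return Sub(Sqr(x), x); }          // x^2 - x
Interval Horner(Interval x)  { return Mul(x, Sub(x, Const(1))); } // x(x-1)
Interval Centered(Interval x) {                                   // (x-1)^2 + (x-1)
    Interval d = Sub(x, Const(1));
    return Add(Sqr(d), d);
}
```

For x=[0.8,1.1], `Natural`, `Horner` and `Centered` return approximately [-0.46,0.41], [-0.22,0.11] and [-0.2,0.14].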

Another problem is the cost of the tests needed to determine whether the interval evaluation of one of the functions excludes 0. Assume, for example, that we have 40 equations in 7 unknowns and want to check whether all the function intervals for a box contain 0. There are two options:

- evaluate all the functions with one procedure call, with the risk of useless evaluations (e.g. if the interval evaluation of the first equation already excludes 0);
- evaluate the functions one after the other, at a cost of up to 40 procedure calls but avoiding useless evaluations.

The best choice depends on the cost of a procedure call compared to the cost of an equation evaluation. By default, all the functions are evaluated in one step. Setting the variable Interval_Evaluate_Equation_Alone to 1 makes the program evaluate the functions one after the other.
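The one-after-the-other strategy amounts to an early-exit loop. The following sketch shows one way such a loop could look; it is not ALIAS code. `SingleEval` is a hypothetical callback that evaluates function i over the current box. Only equations are considered, since an inequality would require a different test.

```cpp
#include <cassert>
#include <functional>

struct Interval { double lo, hi; };

// Hypothetical callback evaluating function i (1-based) over the current box.
using SingleEval = std::function<Interval(int i)>;

// Evaluates the equations 1..n one after the other and stops at the first one
// whose interval excludes 0: the box then contains no root. Returns true if
// the box may contain a root; 'calls' receives the number of evaluations.
bool MayContainRoot(int n, const SingleEval& f, int& calls) {
    assert(n > 0);
    calls = 0;
    for (int i = 1; i <= n; ++i) {
        Interval v = f(i);
        ++calls;
        if (v.lo > 0 || v.hi < 0) return false;   // 0 excluded: reject the box
    }
    return true;                                  // every interval contains 0
}
```

With 40 equations whose first interval excludes 0, only one evaluation is performed. When every interval contains 0, all 40 are needed, which is where evaluating everything in a single call can be cheaper.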

A last problem is whether the equations can be interval-evaluated at all. Remember that some expressions cannot be evaluated for some ranges of the unknowns (see section 2.1.1.3). If such a problem may occur, one solution is to include in this procedure a test, before each expression evaluation, that checks whether the expression can be interval-evaluated. If it cannot, two cases may occur:

- the expression cannot be interval-evaluated whatever the values of the unknowns in their ranges (e.g. the expression involves ArcSin(x) and the range for x is [-4,-3])

- the expression may be evaluated for some values of the unknowns in their ranges (e.g. the expression involves Sqrt(x) while the range for x is [-3,10])
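A possible way to implement such a test is to classify the argument range before evaluating. The sketch below handles Sqrt only and uses a hypothetical `Interval` struct and `GuardedSqrt` function. What to do in each case is left to the user and is not prescribed here; for instance, the box could be rejected in the first case and the argument restricted to its valid part in the second.

```cpp
#include <cassert>
#include <cmath>

struct Interval { double lo, hi; };

// Outcome of trying to interval-evaluate Sqrt over an argument range.
enum class SqrtStatus { NotEvaluable, PartiallyEvaluable, Evaluable };

// Classifies the argument range of Sqrt. When some part of the range is valid,
// 'out' receives the image of the valid part [max(lo, 0), hi].
SqrtStatus GuardedSqrt(Interval x, Interval& out) {
    if (x.hi < 0) return SqrtStatus::NotEvaluable;        // no valid value at all
    SqrtStatus s = (x.lo < 0) ? SqrtStatus::PartiallyEvaluable
                              : SqrtStatus::Evaluable;
    out = {std::sqrt(x.lo < 0 ? 0.0 : x.lo), std::sqrt(x.hi)};
    return s;
}
```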

The order

Basically the algorithm just bisects the box TheDomain until one of the criteria described in 2.3.4.6 is satisfied. The boxes resulting from the bisection process are stored in a list and processed sequentially. Suppose we are looking for only one solution of the system, or for the first Nb solutions (see the Stop variable). In that case it is important to store the new boxes in an order that ensures the boxes most likely to contain a solution are processed first. Two types of ordering may be used (see section 2.3.1.2), selected by the flag MAX_FUNCTION_ORDER or MAX_MIDDLE_FUNCTION_ORDER.

Note that even when we are looking for all the solutions of the system, the order still matters. Although all the boxes of the list will be processed, the order determines how close solution intervals are distinguished (see for example section 2.3.5.2).

Storage

The boxes generated by the bisection process are stored in an interval matrix:

```cpp
Box_Solve_General_Interval(M,m)
```

The algorithm tries to manage the storage so as to solve the problem with the given number M of boxes. As seen in section 2.3.1.2, two storage modes are available: the Direct Storage mode and the Reverse Storage mode. They are selected by setting the global variable Reverse_Storage to 0 (the default value) or to at least the number of unknowns plus 1. See also section 8.3 for a mixed strategy between the direct and reverse modes.

In both modes, the algorithm first runs until the bisection of the current box would make the total number of boxes exceed the allowed maximum. It then deletes the boxes in the list that have already been bisected, thereby freeing some storage space (usually more for the reverse mode than for the direct mode), and starts again.

If this is not sufficient, the algorithm considers each box in the list and determines whether bisecting it would create any new boxes. If it would not, the box is deleted from the list. Note that this procedure is computer intensive and constitutes a "last ditch" effort to free some storage space. You can disable this feature by setting the integer variable Enable_Delete_Fast_Interval to 0. If the storage space freed by this method is not sufficient, the algorithm exits with a failure return code.

If epsilonf=0, epsilon=$\epsilon$ and $w$ is the largest width of the intervals in TestDomain, then the number of boxes that will be considered in the direct mode is, in the worst case:

(2.2)

(2.3)
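As a rough check of the storage needed, the worst case can be estimated by assuming that all m variables are bisected at every level, so that each box produces $2^m$ children. The sketch below is our own estimate under this assumption, not necessarily the exact formulas (2.2) and (2.3). It works in two steps:

1. compute the number k of bisection levels needed to bring a width w below epsilon;
2. compute the total number of boxes $\sum_{j=0}^{k} 2^{mj}$ in the full bisection tree.

```cpp
#include <cassert>
#include <cstdint>

// Number of bisection levels needed before a range of width w has width <= eps.
int BisectionLevels(double w, double eps) {
    assert(w > 0 && eps > 0);
    int k = 0;
    while (w > eps) { w /= 2; ++k; }
    return k;
}

// Worst-case number of boxes when all m variables are bisected at every level:
// sum_{j=0}^{k} (2^m)^j. Assumes the result fits in 64 bits.
std::uint64_t WorstCaseBoxes(int m, int k) {
    std::uint64_t total = 0, level = 1;   // level holds (2^m)^j
    for (int j = 0; j <= k; ++j) {
        total += level;
        level <<= m;
    }
    return total;
}
```

Take the setting of Example 1 below: m=3, w=2, epsilon=0.001. The estimate gives k=11 and 9,817,068,105 boxes in the worst case, far beyond M=50000.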

If the procedure has to be used more than once it is possible to speed up the computation by allocating the storage space before calling the procedure. Then you may indicate that the storage space has been allocated beforehand by indicating a negative value for M, the number of boxes being given by the absolute value of M.

Note also that bisecting only one variable may give a better estimate of the roots of the system when the algorithm stops as soon as the required accuracy on the variables is reached. Compared to the standard algorithm, one (or more) of the variables may have been split individually before reaching the step where a full bisection leads to a solution (see the example in section 2.4.3.2).

Note also a specific use of ALIAS_RANDG. If this integer is not 0, then every ALIAS_RANDG iterations the algorithm takes the box with the largest width as the current box. This does not happen if the number of boxes remaining to be processed is greater than half the total number of available boxes.

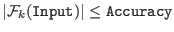

Accuracy

Two criteria are used to determine whether a box possibly includes a solution of the system:

- the largest width of the components of the box is lower than epsilon and the function intervals for this box all contain 0

- the largest width of the function intervals is lower than epsilonf and they all contain 0. This test is only used if the system contains no inequality. If the system includes inequalities, epsilon must therefore be non-zero; otherwise the procedure may enter an infinite loop.

If we use only the second criterion, the width of the solution intervals cannot be controlled and the functions should be roughly normalized (see the example in section 15.1.3 for the importance of the conditioning).
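The two criteria can be summarized by the following sketch, written for a system made only of equations (with inequalities the second criterion is not used). The `Interval` type and the function names are hypothetical. The comparisons are strict, so setting epsilonf (or epsilon) to 0 disables the corresponding criterion. This matches how the examples use a zero value, but it is our assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Interval { double lo, hi; };

double Width(const Interval& a) { return a.hi - a.lo; }
bool ContainsZero(const Interval& a) { return a.lo <= 0 && a.hi >= 0; }

double MaxWidth(const std::vector<Interval>& v) {
    double w = 0;
    for (const Interval& a : v) w = std::max(w, Width(a));
    return w;
}

// Acceptance test for a box of a system made only of equations.
// box: ranges of the unknowns; f: function intervals over this box.
// Strict comparisons: a tolerance of 0 disables the corresponding criterion.
bool IsSolutionBox(const std::vector<Interval>& box, const std::vector<Interval>& f,
                   double epsilon, double epsilonf) {
    assert(!box.empty() && !f.empty());
    if (!std::all_of(f.begin(), f.end(), ContainsZero)) return false;
    if (MaxWidth(box) < epsilon) return true;   // criterion 1: small box
    return MaxWidth(f) < epsilonf;              // criterion 2: small function intervals
}
```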

Distinct solutions

Two solution intervals are assumed to contain distinct solutions if the distance between their middle points is greater than the threshold Dist.
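The following sketch shows how such a distinctness test can filter a list of candidate solution boxes that is already sorted according to the chosen order. A candidate is kept only if its midpoint is farther than Dist from the midpoint of every box already kept. The distance used (maximum norm over the components) and all names are assumptions made for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Interval { double lo, hi; };
using Box = std::vector<Interval>;

double Mid(const Interval& a) { return 0.5 * (a.lo + a.hi); }

// Distance between the midpoints of two boxes (maximum norm: an assumption).
double MidDistance(const Box& a, const Box& b) {
    assert(a.size() == b.size());
    double d = 0;
    for (std::size_t i = 0; i < a.size(); ++i)
        d = std::max(d, std::fabs(Mid(a[i]) - Mid(b[i])));
    return d;
}

// 'sorted' holds candidate solution boxes, best first according to the order.
// A candidate is kept only if it is farther than dist from every kept box.
std::vector<Box> FilterDistinct(const std::vector<Box>& sorted, double dist) {
    std::vector<Box> kept;
    for (const Box& c : sorted) {
        bool distinct = std::all_of(kept.begin(), kept.end(),
                                    [&](const Box& k) { return MidDistance(c, k) > dist; });
        if (distinct) kept.push_back(c);
    }
    return kept;
}
```

This greedy filtering also shows why a non-zero Dist may discard a box that contains a root while keeping a nearby box that does not.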

Return code

The procedure returns an integer. A non-negative value is the number of solutions found. A return value of -1 indicates that the size of the storage is too low; possible remedies are to increase M, use the 3B method, or use the reverse storage mode or the single bisection mode. Other negative values indicate one of the following:

- m or n is not strictly positive

- Order is not 0 or 1

- one of the functions in the system does not have type 0, -1 or 1 (i.e. it is neither an equation nor an inequality of the form $F_i(X)\le 0$ or $F_i(X)\ge 0$)

- we are in the optimization mode and more than one function is an expression to be optimized (see the Optimization chapter)

- in the mixed bisection mode, the number of variables that will be bisected is larger than the number of unknowns

- one of the values of ALIAS_Delta3B or ALIAS_Max3B is negative or 0

- one of the values of ALIAS_SubEq3B is not 0 or 1

- although the size of ALIAS_SubEq3B equals the number of equations, none of its components is 1

- ALIAS_ND is different from 0 (i.e. we are dealing with a non-0 dimensional problem, see the corresponding chapter) and the name of the result file has not been specified

- the value of the flag Single_Bisection is not correct

- we use the full bisection mode and the problem has more than 10 unknowns

Debugging

If the algorithm fails, some debugging options are provided. The integer variable Debug_Level_Solve_General_Interval indicates which debug level is used:

- 0: no debug (the default value)

- 1: during the process are printed on the standard output: the index of the current box, the total number of boxes and the number of remaining boxes together with the current number of solutions

- 2 : same as 1 but the intervals of the current box are also printed and when it is split the new boxes are printed together with their function intervals

Examples and Troubleshooting

Example 1

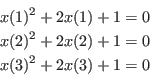

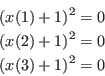

We first present a very simple example: a system in the three unknowns $x_1, x_2, x_3$:

$$x_1^2+2x_1+1=0,\quad x_2^2+2x_2+1=0,\quad x_3^2+2x_3+1=0$$

Clearly this system has the unique solution (-1,-1,-1). We choose to define the TestDomain as the interval [-2,0] for all three unknowns, so we define a function that specifies the TestDomain:

```cpp
VOID SetTestDomain (INTERVAL_VECTOR & x)
{
  Resize (x, 3);
  x(1) = Hull (-2.0,0.0);
  x(2) = Hull (-2.0,0.0);
  x(3) = Hull (-2.0,0.0);
}
```

Then we have to define the IntervalFunction:

```cpp
INTERVAL_VECTOR IntervalTestFunction (int l1,int l2,INTERVAL_VECTOR & x)
// interval valued functions. The inputs are intervals for the
// variables and the outputs are intervals for the functions:
// x are the input variables and xx the function intervals
{
  INTERVAL_VECTOR xx(3);
  if(l1==1) xx(1)=x(1)*(x(1)+2)+1;
  if(l1<=2 && l2>=2) xx(2)=x(2)*(x(2)+2)+1;
  if(l2==3) xx(3)=x(3)*(x(3)+2)+1;
  return xx;
}
```

This function returns the interval vector xx, which contains the values of the functions l1 to l2 for the box x. Note that the initial functions have been written in Horner (or "nested") form, which may lead to a sharper estimation of the function intervals.

The main program may be written as:

```cpp
INT main()
{
  int Num;                              // number of solutions
  INTERVAL_MATRIX SolutionList(1,3);    // the list of solutions
  INTERVAL_VECTOR TestDomain;           // the input intervals for the variables

  // We set the value of the variable intervals
  SetTestDomain (TestDomain);

  // let's solve....
  Num=Solve_General_Interval(3,3,IntervalTestFunction,TestDomain,
                             MAX_FUNCTION_ORDER,50000,1,0.001,0.0,0.1,SolutionList,1);

  // too many intervals have been created, this is a failure
  if(Num== -1)cout << "The procedure has failed (too many iterations)"<<endl;
  return 0;
}
```

This main program will stop as soon as one solution has been found. We set epsilonf to 0 and epsilon to 0.001, which means that the maximal width of the solution intervals will be lower than 0.001. The chosen order is the maximum equation ordering. The number of boxes should not be larger than 50000, and the distance between distinct solutions must be greater than 0.1.

Running this program provides the solution interval [-1.000977,-1] for all three variables and uses 71 boxes. The result would have been similar with the maximum middle-point equation ordering.

On the other hand, if we set epsilon to 0 and epsilonf to 0.001, the algorithm finds the solution interval [-1.00048828125,-1] and uses 78 boxes.

Now let's look at a more complete test program that allows testing the various options of the procedure:

```cpp
INT main()
{
  int Iterations;                 // maximal size of the storage (M)
  int Dimension,Dimension_Eq;     // size of the system
  int Num,i,j,order,Stop;
  // accuracy of the solution either on the function or on the variable
  double Accuracy,Accuracy_Variable,Diff_Sol;
  INTERVAL_MATRIX SolutionList(200,3);  // the list of solutions
  INTERVAL_VECTOR TestDomain;           // the input intervals for the variables
  INTERVAL_VECTOR F(3),P(3);

  // We set the number of equations and unknowns and the variable intervals
  Dimension_Eq=Dimension=3;
  SetTestDomain (TestDomain);

  cerr << "Number of iteration = "; cin >> Iterations;
  cerr << "Accuracy on Function = "; cin >> Accuracy;
  cerr << "Accuracy on Variable = "; cin >> Accuracy_Variable;
  cerr << "Order (0,1)"; cin >> order;
  cerr << "Stop at first solutions (0,1,2):"; cin >> Stop;
  cerr << "Separation between distinct solutions:"; cin >> Diff_Sol;

  // let's solve.... (at most 200 solutions: the number of rows of SolutionList)
  Num=Solve_General_Interval(Dimension,Dimension_Eq,IntervalTestFunction,TestDomain,
                             order,Iterations,Stop,
                             Accuracy_Variable,Accuracy,Diff_Sol,SolutionList,200);

  // a negative return code is a failure (-1: too many intervals have been created)
  if(Num<0){cout<<"Procedure has failed (return code "<<Num<<")"<<endl;return -1;}

  cout << Num << " solution(s)" << endl;
  for(i=1;i<=Num;i++)
    {
      cout << "solution " << i <<endl;
      cout << "x(1)=" << SolutionList(i,1) << endl;
      cout << "x(2)=" << SolutionList(i,2) << endl;
      cout << "x(3)=" << SolutionList(i,3) << endl;
      // function intervals over the whole solution box
      cout << "Function value at this point" <<endl;
      for(j=1;j<=3;j++)F(j)=SolutionList(i,j);
      cout << IntervalTestFunction(1,Dimension_Eq,F) <<endl;
      // function values at the middle point of the solution box
      cout << "Function value at middle interval" <<endl;
      for(j=1;j<=3;j++)P(j)=Mid(SolutionList(i,j));
      F=IntervalTestFunction(1,Dimension_Eq,P);
      for(j=1;j<=3;j++)cout << Sup(F(j)) << endl;
    }
  return 0;
}
```

This program is basically similar to the previous one, except that it lets the user define interactively the order, M, Stop, epsilon, epsilonf and Dist. It then prints each solution together with the function intervals for the solution interval and the values of the functions at the middle point of the solution interval. Let's test the algorithm to find all the solutions (Stop=0) with epsilonf=0 and epsilon=0.001. The algorithm will fail: indeed, let's compute the maximal storage that we may need using the formula (2.1). We end up with the number

Although academic, this system shows several properties of interval analysis. If we set epsilon=epsilonf=1e-6, which is the standard setting of ALIAS-Maple, the algorithm will run for a very long time before finding the solution of the system. Even using the 3B method (section 2.3.2) and the 2B method (section 2.17), the computation time, although improved, will still be very large. Now if we write the system as

the system will be solved with only 8 boxes, with 2 boxes if we use the 3B method and without any bisection if we use the 2B method. This shows clearly the importance of writing the equations in the most compact form.

Example 2

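The problem we want to solve is presented in section 15.1.1. We consider a system of three equations in the unknowns $x, y, \theta$:

$$
\begin{aligned}
&x^2+y^2-50=0\\
&x^2-20x+8x\cos\theta+90-80\cos\theta+y^2+8y\sin\theta=0\\
&x^2-6x+4x\cos\theta-4x\sin\theta+92-52\cos\theta-28\sin\theta+y^2-20y+4y\sin\theta+4y\cos\theta=0
\end{aligned}
$$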

which admit the two solutions:

By looking at the geometry of the problem it is easy to establish a rough TestDomain:

```cpp
VOID SetTestDomain (INTERVAL_VECTOR & x)
{
  Resize (x, 3);
  x(1) = Hull (0.9,7.1);
  x(2) = Hull (2.1,7.1);
  x(3) = Hull (-Constant::Pi,Constant::Pi);
}
```

and to determine that the maximum number of real solutions is 6.

The IntervalFunction is written as:

```cpp
INTERVAL_VECTOR IntervalTestFunction (int l1,int l2,INTERVAL_VECTOR & in)
{
  INTERVAL_VECTOR xx(3);
  if(l1==1)xx(1)=in(1)*in(1)+in(2)*in(2)-50.0;
  if(l1<=2 && l2>=2)
    xx(2)=-80.0*Cos(in(3))+90.0+(8.0*Sin(in(3))+in(2))*in(2)+(-20.0+8.0*Cos(in(3))+in(1))*in(1);
  if(l2==3)
    xx(3)=92.0-52.0*Cos(in(3))-28.0*Sin(in(3))+(-20.0+4.0*Sin(in(3))+
          4.0*Cos(in(3))+in(2))*in(2)+(-4.0*Sin(in(3))-6.0+4.0*Cos(in(3))+in(1))*in(1);
  return xx;
}
```

and we may use the same main program as in the previous example (the name of this program is Test_Solve_General1).

Let's assume that we set epsilonf to 0 and epsilon to 0.01 while looking for all the solutions (Stop=0), using the maximum equation ordering and setting Dist to 0.1. The algorithm provides the following solutions, using 684 boxes:

![\begin{eqnarray*}

&&x=[4.99297,4.99902] y=[5.00527,5.01016]

\theta=[-0.00...

...7031] y=[6.21133,6.21621]

\theta=[-0.809942,-0.803806]

\end{eqnarray*}](img172.png)

We notice that none of the roots is contained in the solution intervals. If we use the maximum middle-point equation ordering, the algorithm provides the following solution intervals, using 684 boxes:

![\begin{eqnarray*}

&&x=[3.36426,3.37031] y=[6.21621,6.22109]

\theta=[-...

...508,5.01113] y=[4.99063,4.99551]

\theta=[0,0.00613592]

\end{eqnarray*}](img173.png)

which still does not contain the root (5,5,0) but does contain one of the roots, which shows the importance of the ordering. Let's look at what is happening by setting the debug flag Debug_Level_Solve_General_Interval to 2 (see section 2.3.4.9). At some point of the process the algorithm has determined four different solution intervals:

![\begin{eqnarray*}

&& {\rm Solution 1}

[4.9990234375,5.005078125]

[4.990625...

...

[6.211328125,6.2162109375]

[-0.809941856,-0.803805932852]

\end{eqnarray*}](img174.png)

the criteria

Clearly solution 3 has the lowest criterion and will therefore be stored as the first solution. Solution 1 is considered next, but the distance between the middle points of solutions 3 and 1 is lower than Dist, so solution 1 is not retained. Solution 2 is then rejected for the same reason. Finally solution 4 is considered: in spite of having the worst criterion, it is retained because its distance to solution 3 is greater than Dist.

Note that if single bisection is activated by setting the flag Single_Bisection to 1, we find the two roots with epsilonf=0 and epsilon=0.01, using 650 boxes with the maximum equation ordering.

We may also use this example to illustrate how to deal with inequalities. Assume now that we want to solve the same system together with the inequality $xy \le 22$. We modify the IntervalTestFunction as:

```cpp
INTERVAL_VECTOR IntervalTestFunction (int l1,int l2,INTERVAL_VECTOR & in)
{
  INTERVAL x,y,teta;
  INTERVAL_VECTOR xx(4);
  x=in(1);y=in(2);teta=in(3);
  if(l1==1)xx(1)=x*x+y*y-50.0;
  if(l1<=2 && l2>=2)
    xx(2)=-80.0*Cos(teta)+90.0+(8.0*Sin(teta)+y)*y+(-20.0+8.0*Cos(teta)+x)*x;
  if(l1<=3 && l2>=3)
    xx(3)=92.0-52.0*Cos(teta)-28.0*Sin(teta)+(-20.0+4.0*Sin(teta)+
          4.0*Cos(teta)+y)*y+(-4.0*Sin(teta)-6.0+4.0*Cos(teta)+x)*x;
  if(l2==4)
    xx(4)=x*y-22.;
  return xx;
}
```

Part of the main program will be:

```cpp
Type(1)=0;Type(2)=0;Type(3)=0;Type(4)=-1;
Num=Solve_General_Interval(3,4,Type,IntervalTestFunction,TestDomain,order,
                           Iterations,Stop,Accuracy_Variable,
                           Accuracy,Diff_Sol,SolutionList,6);
```

Here Type(4)=-1 indicates that the fourth function is an inequality of the type $F_4(X)\le 0$, i.e. $xy-22\le 0$.

Example 3

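This example is derived from example 2. In the three functions of example 2, the second-degree terms in x and y are identical, namely $x^2+y^2$, so they can be eliminated. Then x and y can be obtained from two linear equations and substituted into the first function. This leads to a single equation in θ, whose IntervalFunction is: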

```cpp
INTERVAL_VECTOR IntervalTestFunction (int l1,int l2,INTERVAL_VECTOR & in)
{
  INTERVAL_VECTOR xx(1);
  xx(1)=11092.0+(-25912.0+(19660.0-4840.0*Cos(in(1)))*Cos(in(1)))*Cos(in(1))+(
        -508.0+(3788.0-1600.0*Cos(in(1)))*Cos(in(1)))*Sin(in(1));
  return xx;
}
```

This program is implemented under the name Test_Solve_General2. With epsilonf=0 and epsilon=0.001 we get the solution intervals, using 32 boxes:

for either order. If we use epsilon=0 and epsilonf=0.1 we get, using 50 boxes:

![\begin{eqnarray*}

&& \theta=[-0.806784012741056,-0.806781016684830]\\

&& \theta=[-4.793689962142628e-05,0]

\end{eqnarray*}](img182.png)

In both cases the solution intervals contain the roots of the equation.

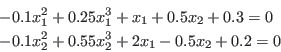

Example 4

In this example (see section 15.1.3) we deal with a complex problem

of three equations in three unknowns

The system has a solution which is approximately:

This problem is extremely ill-conditioned: for the TestDomain the function intervals are:

This program is implemented under the name Test_Solve_General. With epsilonf=0 and epsilon=0.001, if we stop at the first solution, we find with the maximum equation ordering:

with 531 boxes. We may also mention the following remarks:

- we get no improvement with the single bisection mode as we need 2435 boxes to find the first solution,

- using the Reverse Storage mode does not lead to any improvement for finding the first root: in this mode we need 5587 boxes to get the first solution,

with 203 boxes. The importance of normalizing the functions appears if we use epsilonf=0.1 and epsilon=0. If we stop at the first solution we find:

![\begin{eqnarray*}

&&\psi=[4.661660388259656,4.661660388340929]\\

&&\theta=[1.70...

...00898180284073]\\

&&\phi=[0.869388881899751,0.869388881940387]

\end{eqnarray*}](img189.png)

while if we divide the first function by 1000 we find:

$$
\begin{aligned}
&\psi=[4.661658091884636,4.661658424779772]\\
&\theta=[1.70... ...00898570395561]\\
&\phi=[0.869388105618527,0.869388272066095]
\end{aligned}
$$

in a computation time four times smaller.

General comments

The advantage of the proposed algorithm is that it is easy to use and to implement for a quick check. For sharp systems it may quickly provide solutions with reasonable accuracy. The drawback is that it may return solution intervals that do not contain roots or, worse, miss some roots if Dist is not set to 0 (see section 2.3.5.2).

This algorithm may also be used for analysis: if we have to solve numerous systems, we may run it with a low M to check quickly whether the current system may have real roots, in which case we may consider using a more sophisticated algorithm.

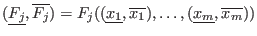

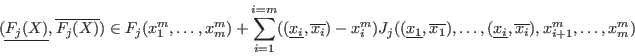

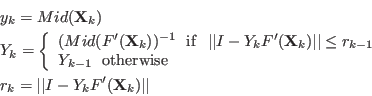

General purpose solving algorithm with Jacobian

Mathematical background

Assume now that we are able to compute the Jacobian matrix of the system of functions. We will use this Jacobian to improve the evaluation of the function intervals using two approaches:

- use the monotonicity of the function

- use the gradient for the evaluation of the function intervals

A notable difference with the previous procedure is that we use Moore's theorem (see section 3.1.1) to determine whether a unique solution exists in a given box, in which case we use Krawczyk's method to compute this solution (see section 2.10). Therefore, if the algorithm returns a point instead of a range as a solution, this solution has been obtained as a result of Moore's theorem. Note, however, that getting a range instead of a point does not always imply that the solution is singular. For example, the solution may lie exactly at one extremity of a box (see the example in section 2.4.3.2), a case that our algorithm does not handle very well. A local analysis of the solution should, however, quickly confirm whether the solution is indeed singular.
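The existence and uniqueness test can be sketched in one dimension with the Krawczyk operator $K(X)=y-Yf(y)+(1-YF'(X))(X-y)$, where $y$ is the midpoint of $X$ and $Y=1/f'(y)$: if $K(X)$ lies strictly inside $X$, then $X$ contains exactly one root, and if $K(X)$ does not intersect $X$, then $X$ contains no root. The C++ code below is a hedged illustration, not the ALIAS implementation of section 3.1.1; the function $f(x)=x^2-2$ and every name in it are hypothetical, and the interval arithmetic does not round outward.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal interval type (no outward rounding).
struct Interval {
  double lo, hi;
  Interval(double v) : lo(v), hi(v) {}
  Interval(double l, double h) : lo(l), hi(h) { assert(l <= h); }
};
Interval operator+(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }
Interval operator-(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval operator*(Interval a, Interval b) {
  double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
  return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Hypothetical test function f(x) = x^2 - 2 and its derivative.
double f(double x) { return x * x - 2.0; }
double df(double x) { return 2.0 * x; }
Interval dF(Interval X) { return 2.0 * X; }  // interval derivative over X

enum class Krawczyk { UniqueRoot, NoRoot, Undecided };

// One-dimensional Krawczyk test on the box X.
Krawczyk KrawczykTest(Interval X) {
  double y = 0.5 * (X.lo + X.hi);
  if (df(y) == 0.0) return Krawczyk::Undecided;  // no preconditioner available
  double Y = 1.0 / df(y);
  Interval K = (y - Y * f(y)) + (1.0 - Y * dF(X)) * (X - y);
  if (K.hi < X.lo || K.lo > X.hi) return Krawczyk::NoRoot;      // K and X disjoint
  if (X.lo < K.lo && K.hi < X.hi) return Krawczyk::UniqueRoot;  // K inside X
  return Krawczyk::Undecided;
}
```

On [1,2] the test proves that a unique root (√2) exists, on [3,4] it proves that there is none, and on [0,2] it is inconclusive, so a solver would bisect that box.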

In addition, we also use the inflation method presented in section 3.1.6 to increase the width of the box in which a unique solution is guaranteed.

Hence this algorithm determines exact solutions, in the sense that it returns boxes that contain a unique solution together with a guaranteed numerical scheme for computing that solution.

In the same way, we use the Hansen-Sengupta version of the interval Newton method to improve the boxes (see [21]). Note that an improved interval Newton method that may benefit from the structure of the system is available (see section 3.1.4).

This algorithm can also be used to determine the solutions of non-0-dimensional systems (see section 2.2).
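For a single equation the Hansen-Sengupta operator reduces to the classical interval Newton step $N(X)=y-f(y)/F'(X)$, with $y$ the midpoint of $X$, followed by the intersection of $X$ with $N(X)$. The following C++ sketch of this one-dimensional contraction is an illustration under that simplification, not the ALIAS routine; $f(x)=x^2-2$ and all names are hypothetical, and the arithmetic does not round outward.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal interval type (no outward rounding).
struct Interval {
  double lo, hi;
  Interval(double v) : lo(v), hi(v) {}
  Interval(double l, double h) : lo(l), hi(h) { assert(l <= h); }
};
Interval operator-(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval operator*(Interval a, Interval b) {
  double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
  return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Hypothetical f(x) = x^2 - 2 with interval derivative F'(X) = 2X.
double f(double x) { return x * x - 2.0; }
Interval dF(Interval X) { return 2.0 * X; }

// Quotient of a scalar by an interval that does not contain 0.
Interval Div(double a, Interval d) {
  assert(d.lo > 0.0 || d.hi < 0.0);
  return {std::min(a / d.lo, a / d.hi), std::max(a / d.lo, a / d.hi)};
}

// Repeats X <- intersection of X and y - f(y)/F'(X).
// Returns false when the intersection is empty, i.e. X holds no root.
bool IntervalNewton(Interval& X, double tol = 1e-12, int maxIter = 50) {
  for (int it = 0; it < maxIter && X.hi - X.lo >= tol; ++it) {
    Interval d = dF(X);
    if (d.lo <= 0.0 && d.hi >= 0.0) return true;  // 0 in F'(X): cannot contract
    double y = 0.5 * (X.lo + X.hi);
    Interval N = y - Div(f(y), d);
    double lo = std::max(X.lo, N.lo), hi = std::min(X.hi, N.hi);
    if (lo > hi) return false;                     // empty intersection
    X = Interval(lo, hi);
  }
  return true;
}
```

Starting from [1,2] the box shrinks quadratically around √2, while for [3,4] the intersection becomes empty, which proves that this box contains no root.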

Using the monotonicity

For a given box we compute the Jacobian matrix using interval analysis. Each row of this interval matrix gives some information on the corresponding function:

- if the i-th column of the j-th row is an interval which is strictly negative or strictly positive, then the j-th function is monotonic with respect to the i-th unknown,

- if the i-th column of the j-th row is equal to 0, then the j-th function does not depend on the i-th unknown.

The algorithm for computing a sharper evaluation of a function over a box is the recursive procedure Evaluate:

- compute the interval gradient of the function over the box,

- let m be the number of components of the gradient that are strictly positive or strictly negative, and let the corresponding variables be those for which this occurs,

- if m > 0, loop over all the combinations obtained by fixing each of these m variables to its lower or upper bound:

  - compute the gradient over the box defined by the current combination and count how many of its components are strictly positive or strictly negative,

  - if there are such components, evaluate the function over this box by a recursive call to Evaluate; otherwise use its interval evaluation,

  - if this is the first estimation of the lower (resp. upper) bound of the function, store it; otherwise replace the stored bound when the new estimation is smaller (resp. larger),

  - end loop,

- otherwise use the interval evaluation of the function over the box,

- return the resulting interval.
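The core idea of this procedure can be illustrated by the following C++ sketch, which performs only one level of it: it does not recompute the gradient on the reduced boxes and does not recurse. The function $f(x,y)=x^2-xy+y$, its hand-derived gradient and all names are hypothetical, and the arithmetic does not round outward.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical minimal interval type (no outward rounding).
struct Interval {
  double lo, hi;
  Interval(double v) : lo(v), hi(v) {}
  Interval(double l, double h) : lo(l), hi(h) { assert(l <= h); }
};
Interval operator+(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }
Interval operator-(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval operator*(Interval a, Interval b) {
  double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
  return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

using Box = std::array<Interval, 2>;

// Hypothetical f(x, y) = x*x - x*y + y and its gradient (2x - y, 1 - x).
Interval F(const Box& b) { return b[0] * b[0] - b[0] * b[1] + b[1]; }
Box Grad(const Box& b) { return Box{{2.0 * b[0] - b[1], 1.0 - b[0]}}; }

// A variable whose partial derivative has a constant sign over the box is
// fixed to the bound that minimizes (resp. maximizes) f; the other
// variables keep their whole range.
Interval EvaluateMonotonic(const Box& b) {
  Box g = Grad(b);
  Box low = b, high = b;
  for (std::size_t i = 0; i < b.size(); ++i) {
    if (g[i].lo > 0.0) {         // f increasing in variable i
      low[i] = b[i].lo;
      high[i] = b[i].hi;
    } else if (g[i].hi < 0.0) {  // f decreasing in variable i
      low[i] = b[i].hi;
      high[i] = b[i].lo;
    }
  }
  Interval natural = F(b);
  // Keep the better of the natural and monotonic bounds.
  return {std::max(natural.lo, F(low).lo), std::min(natural.hi, F(high).hi)};
}
```

On the box [2,3]×[0,1] the partial derivatives are [3,6] and [-2,-1], so f increases with x and decreases with y: the sketch returns [3,9], the exact range, whereas the natural interval evaluation gives [1,10].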

Improving the evaluation using the Jacobian and centered form

Let $X$ be the box and $m$ its middle point, and let $J$ be the interval Jacobian matrix of the system of functions $F$. Then:

$$F(X) \subseteq F(m) + J(X)\,(X-m) \qquad (2.4)$$

This evaluation is embedded into the evaluation procedure of the solving algorithms using the Jacobian. It is also available in its general form as

INTERVAL_VECTOR Centered_Form(int DimVar,int DimEq,

INTERVAL_VECTOR (* TheIntervalFunction)(int,int,INTERVAL_VECTOR &),

INTERVAL_MATRIX (* Gradient)(int, int, INTERVAL_VECTOR &),

VECTOR &Center,

INTERVAL_VECTOR &Input)

where

- DimVar: number of variables

- DimEq: number of expressions

- TheIntervalFunction: procedure in MakeF format for interval evaluating the expressions

- Gradient: procedure in MakeJ format for evaluating the derivatives of the expressions

- Center: the center point for the centered form

- Input: the ranges for the variables

INTERVAL Centered_Form(int k,int DimVar,int DimEq,

INTERVAL_VECTOR (* TheIntervalFunction)(int,int,INTERVAL_VECTOR &),

INTERVAL_MATRIX (* Gradient)(int, int, INTERVAL_VECTOR &),

VECTOR &Center,

INTERVAL_VECTOR &Input)

which evaluates only the expression number k.
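A one-dimensional C++ sketch of equation (2.4), compared with the natural interval evaluation, shows why the centered form pays off on small boxes. It is not the ALIAS Centered_Form routine: $f(x)=x^2-x$ and the names are hypothetical, and the arithmetic does not round outward.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal interval type (no outward rounding).
struct Interval {
  double lo, hi;
  Interval(double v) : lo(v), hi(v) {}
  Interval(double l, double h) : lo(l), hi(h) { assert(l <= h); }
};
Interval operator+(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }
Interval operator-(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval operator*(Interval a, Interval b) {
  double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
  return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Hypothetical f(x) = x*x - x.
double f(double x) { return x * x - x; }
Interval F(Interval X) { return X * X - X; }          // natural interval evaluation
Interval dF(Interval X) { return 2.0 * X - 1.0; }     // interval derivative

// Centered form at the point m: f(m) + F'(X)(X - m).
Interval CenteredForm(Interval X, double m) { return f(m) + dF(X) * (X - m); }
```

On X=[0.9,1.1], whose exact range is [-0.09,0.11], the natural evaluation gives about [-0.29,0.31] while the centered form at the midpoint gives about [-0.12,0.12].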

A more sophisticated evaluation for the centered form is based on

Baumann theorem [18]. First we define the procedure

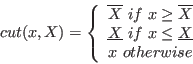

cut(double x,INTERVAL X) as:

For a system of

For a given equation

with

INTERVAL_VECTOR BiCentered_Form(int DimVar,

int DimEq,

INTERVAL_VECTOR (* TheIntervalFunction)(int,int,INTERVAL_VECTOR &),

INTERVAL_MATRIX (* Gradient)(int, int, INTERVAL_VECTOR &),

INTERVAL_VECTOR &Input,

int Exact)

where

- DimVar: number of variables

- DimEq: number of expressions

- TheIntervalFunction: procedure in MakeF format for interval evaluating the expressions

- Gradient: procedure in MakeJ format for evaluating the derivatives of the expressions

- Input: the ranges for the variables

- Exact: if 0 the procedure will return as soon as an interval evaluation of one expression does not include 0

INTERVAL_VECTOR BiCentered_Form(int k,int DimVar,

int DimEq,

INTERVAL_VECTOR (* TheIntervalFunction)(int,int,INTERVAL_VECTOR &),

INTERVAL_MATRIX (* Gradient)(int, int, INTERVAL_VECTOR &),

INTERVAL_VECTOR &Input,

int Exact)
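As a hedged illustration, the following C++ sketch uses the standard one-variable form of Baumann's optimal centers: with $G=[\underline{g},\overline{g}]$ the enclosure of the derivative over $X$ and $\underline{g}<0<\overline{g}$, the lower bound is computed at $c^-=(\overline{g}\,\underline{x}-\underline{g}\,\overline{x})/(\overline{g}-\underline{g})$ and the upper bound at $c^+=(\overline{g}\,\overline{x}-\underline{g}\,\underline{x})/(\overline{g}-\underline{g})$, while a monotonic function uses the appropriate end point of $X$. Whether BiCentered_Form computes its centers exactly this way is an assumption; $f(x)=x^2$ and the names are hypothetical, and the arithmetic does not round outward.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal interval type (no outward rounding).
struct Interval {
  double lo, hi;
  Interval(double v) : lo(v), hi(v) {}
  Interval(double l, double h) : lo(l), hi(h) { assert(l <= h); }
};
Interval operator+(Interval a, Interval b) { return {a.lo + b.lo, a.hi + b.hi}; }
Interval operator-(Interval a, Interval b) { return {a.lo - b.hi, a.hi - b.lo}; }
Interval operator*(Interval a, Interval b) {
  double p[4] = {a.lo * b.lo, a.lo * b.hi, a.hi * b.lo, a.hi * b.hi};
  return {*std::min_element(p, p + 4), *std::max_element(p, p + 4)};
}

// Hypothetical f(x) = x^2 with interval derivative G = 2X.
double f(double x) { return x * x; }
Interval G(Interval X) { return 2.0 * X; }

// Baumann centers. When g.lo < 0 < g.hi they are convex combinations of
// X.lo and X.hi, hence they lie in X.
double CenterLow(Interval X, Interval g) {
  if (g.lo >= 0.0) return X.lo;
  if (g.hi <= 0.0) return X.hi;
  return (g.hi * X.lo - g.lo * X.hi) / (g.hi - g.lo);
}
double CenterHigh(Interval X, Interval g) {
  if (g.lo >= 0.0) return X.hi;
  if (g.hi <= 0.0) return X.lo;
  return (g.hi * X.hi - g.lo * X.lo) / (g.hi - g.lo);
}

// Bicentered form: lower bound from the centered form at CenterLow,
// upper bound from the centered form at CenterHigh.
Interval BicenteredForm(Interval X) {
  Interval g = G(X);
  double cl = CenterLow(X, g), ch = CenterHigh(X, g);
  double lo = (f(cl) + g * (X - cl)).lo;
  double hi = (f(ch) + g * (X - ch)).hi;
  return {lo, hi};
}

// Plain centered form at the midpoint, for comparison.
Interval MeanValueForm(Interval X) {
  double m = 0.5 * (X.lo + X.hi);
  return f(m) + G(X) * (X - m);
}
```

For f(x)=x² on X=[-1,2], whose exact range is [0,4], the centered form at the midpoint gives [-5.75,6.25] while the bicentered form gives [-4,5].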

Another variant is based on the property that the numerical interval evaluation of the product J(Input)(Input-Center) may be overestimated, as there may be several occurrences of the same variable in this product. We may assume that this product has been computed symbolically and then rearranged to reduce the number of occurrences of the same variable, leading to a procedure in MakeF format that computes the product directly. The syntax of this bicentered form procedure is

INTERVAL_VECTOR BiCentered_Form(int DimVar,

int DimEq,

INTERVAL_VECTOR (* TheIntervalFunction)(int,int,INTERVAL_VECTOR &),

INTERVAL_MATRIX (* Gradient)(int, int, INTERVAL_VECTOR &),

INTERVAL_VECTOR (* ProdGradient)(int, int, INTERVAL_VECTOR &),

INTERVAL_VECTOR &Input,

int Exact)

where ProdGradient is the procedure that computes the product J(Input)(Input-Center), it being understood that the Center is available in the global variable ALIAS_Center_CenteredForm.

Single bisection mode

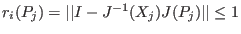

We may use the single bisection mode, i.e. bisect only one variable at a time. Several modes exist for determining the variable to be bisected, the choice being made by setting Single_Bisection to a value from 1 to 8:

- 1 : we just split the variable having the largest width

- 2 : this mode is based on the smear function as defined by Kearfott [7]: let $J$ be the Jacobian matrix of the system and let us define for the variable $x_i$ the smear value $s_i = {\rm Max}\left(\vert\underline{J_{ij}[\underline{x_i},\overline{x_i}]}\vert,\vert\overline{J_{ij}[\underline{x_i},\overline{x_i}]}\vert\right)$ $\forall j\in [1,n]$, where $n$ is the total number of functions. The variable that will be bisected is the one having the largest $s_i$.

There is however a drawback f the smear function: let consider for

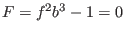

example the equation

.

There is however a drawback f the smear function: let consider for

example the equation  where

where  are large identical

intervals centered at 0.

The derivative of

are large identical

intervals centered at 0.

The derivative of  with respect to

with respect to  is

is  and

with respect to

and

with respect to

: multiplied by the width of the interval

we get

: multiplied by the width of the interval

we get  and

and  . Hence the smear function for

. Hence the smear function for  will

be in general larger than for

will

be in general larger than for  and

and  will always be bisected until its

width is lower than the desired accuracy. Another example in which the

smear function is not the best choice is presented in

section 2.4.3.4.

However, the smear function is very often the most efficient mode and should be preferred.
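The difference between mode 1 and a smear-based choice can be seen in a small C++ sketch. It assumes Kearfott's usual definition of the smear value, the magnitude of the derivative enclosure multiplied by the width of the variable's range and maximized over the functions, which may differ in detail from the ALIAS implementation of mode 2; all names and data are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Interval { double lo, hi; };  // simple aggregate is enough here

double Mag(Interval a) { return std::max(std::fabs(a.lo), std::fabs(a.hi)); }
double Width(Interval a) { return a.hi - a.lo; }

// Mode 1: the variable with the largest width.
std::size_t LargestWidth(const std::vector<Interval>& x) {
  std::size_t best = 0;
  for (std::size_t i = 1; i < x.size(); ++i)
    if (Width(x[i]) > Width(x[best])) best = i;
  return best;
}

// Smear-based choice: s_i = max_j Mag(J[j][i]) * Width(x[i]), where J[j][i]
// encloses the derivative of function j with respect to variable i.
std::size_t LargestSmear(const std::vector<std::vector<Interval>>& J,
                         const std::vector<Interval>& x) {
  std::size_t best = 0;
  double bestSmear = -1.0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    double s = 0.0;
    for (const auto& row : J) s = std::max(s, Mag(row[i]) * Width(x[i]));
    if (s > bestSmear) {
      bestSmear = s;
      best = i;
    }
  }
  return best;
}
```

In the test data below the first variable has the larger width, but the second one has the larger smear value (2.5 against 2), so the two modes would bisect different variables.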
