
The most up-to-date information on our work is on the publications page of the group. More detailed information is available in our yearly activity reports (2011, 2010, 2009, 2008, 2007, 2006, 2005, 2004, 2003, 2002).

Ongoing Projects

In recent years, we have pursued the following three objectives:


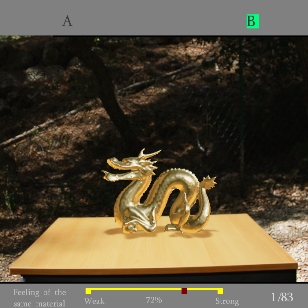

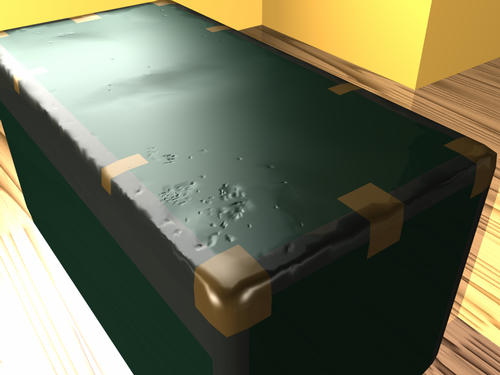

Unimodal Graphics Perceptual Rendering. We first investigated different perceptual models such as the threshold map. Using a fast GPU implementation from the Univ. of Erlangen, we developed an algorithm based on “masking layers” to select perceptually driven levels of detail for complex objects [DBDLSV07]. We demonstrated that this approach improves performance while maintaining perceptually equivalent levels of quality. This result was verified with a perceptual study performed with guidance from our neuroscience collaborators.

Crossmodal Perceptual Rendering. The first problem concerned sound rendering of complex environments. Our goal was to determine whether visuals affect the perceived quality of sound when using our clustering algorithm [TGD04] for sound rendering of complex scenes. We performed a psychophysical study to investigate this question; the result was that it is best to place more clusters in the visible frustum. We subsequently developed an algorithm which steers the clustering algorithm to optimize for this goal [DBDLSV07].

Collisions and explosions naturally generate large numbers of sound sources and are inherently audio-visual phenomena. We developed a novel algorithm which computes impact sounds in the frequency domain, exploiting the natural spectral sparseness of modal synthesis [BDTVJ08]. To improve performance, we exploited the perceptual effect that listeners tolerate a delay of the sound relative to the corresponding visual impact. This allowed us to delay sound processing, thus smoothing out the computational load over time.

We performed a perceptual study to understand whether audio can influence the perception of the visual quality of materials and vice versa. Our study demonstrated that higher-quality audio can improve the perceived similarity of a given material to a (hidden) reference [BSVD10]. To our knowledge, this is the first time a bimodal audio-visual perceptual effect has been demonstrated for material perception. The results of this study were applied to a crossmodal audio-visual algorithm [GBWAD09] which optimizes the choice of audio and material rendering quality based on the results of the perceptual study of [BSVD10].

One of the applications of CROSSMOD was the use of audiovisual rendering results to study the effect of virtual environments on emotion. A virtual environment was developed for subjects with cynophobia (fear of dogs), which allowed us to study the consequences of audio and visual effects on emotion [VZBSWND08]. We are currently following up on this work in the context of the ARC NIEVE collaborative project.

Sound Rendering. In the context of the solutions developed in CROSSMOD, we developed several “pure” sound-related algorithms, in particular with the goal of rapidly simulating head-related transfer functions (HRTFs). We first developed an efficient approximation to first-order sound scattering from complex surfaces [TDLD07] based on the Helmholtz-Kirchhoff integral. The approach leverages programmable graphics hardware (similar to reflective shadow maps) to efficiently sample an acoustic surface scattering integral on complex 3D meshes. For first-order scattering and in the far field from the surfaces, the approach gives results that compare favorably with boundary element techniques. We applied this technique to model the scattering of a 3D model of the head and torso of a given subject in order to individualize 3D audio rendering over headphones [DPTAS08]. This was used to develop a technique to fit a proxy head, ears and torso geometry to a set of photographs of a subject and compute individualized binauralization filters.

Finally, we developed a novel approach to rendering reverberation effects in acoustically coupled environments comprising multiple rooms [STC08]. We precompute “form-factor” propagation filters between a point in each room and the connecting openings/portals. Propagation paths are determined topologically by traversing the graph defined by rooms and portals, and the filters are convolved along each propagation path. Contributions from all paths are added to obtain the final response. The approach allows for modeling dynamic environments with opening or closing portals.
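The room-and-portal traversal described above can be illustrated with a minimal sketch. It is not the implementation of [STC08]: it assumes, for simplicity, a single precomputed impulse response per open portal (the hypothetical `graph[a][b]` entry), whereas the text describes filters between a point in each room and each opening. All names (`convolve`, `simple_paths`, `room_response`) are illustrative.

```python
from typing import Dict, Iterator, List, Optional

Filter = List[float]                     # impulse response samples
RoomGraph = Dict[str, Dict[str, Filter]]  # graph[a][b]: filter for the open portal a -> b


def convolve(a: Filter, b: Filter) -> Filter:
    """Direct time-domain convolution of two impulse responses."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def simple_paths(graph: RoomGraph, start: str, goal: str,
                 path: Optional[List[str]] = None) -> Iterator[List[str]]:
    """Yield every loop-free sequence of rooms leading from start to goal."""
    path = (path or []) + [start]
    if start == goal:
        yield path
        return
    for nxt in graph.get(start, {}):
        if nxt not in path:
            yield from simple_paths(graph, nxt, goal, path)


def room_response(graph: RoomGraph, source: str, listener: str) -> Filter:
    """Convolve the filters along each path, then sum the path contributions."""
    total: Filter = [0.0]
    for path in simple_paths(graph, source, listener):
        response: Filter = [1.0]  # unit impulse emitted in the source room
        for a, b in zip(path, path[1:]):
            response = convolve(response, graph[a][b])
        if len(response) > len(total):
            total += [0.0] * (len(response) - len(total))
        for i, value in enumerate(response):
            total[i] += value
    return total
```

In this sketch, closing a portal amounts to deleting the corresponding edge from the graph before calling `room_response` again, which is one simple way to picture the dynamic behavior mentioned in the text.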

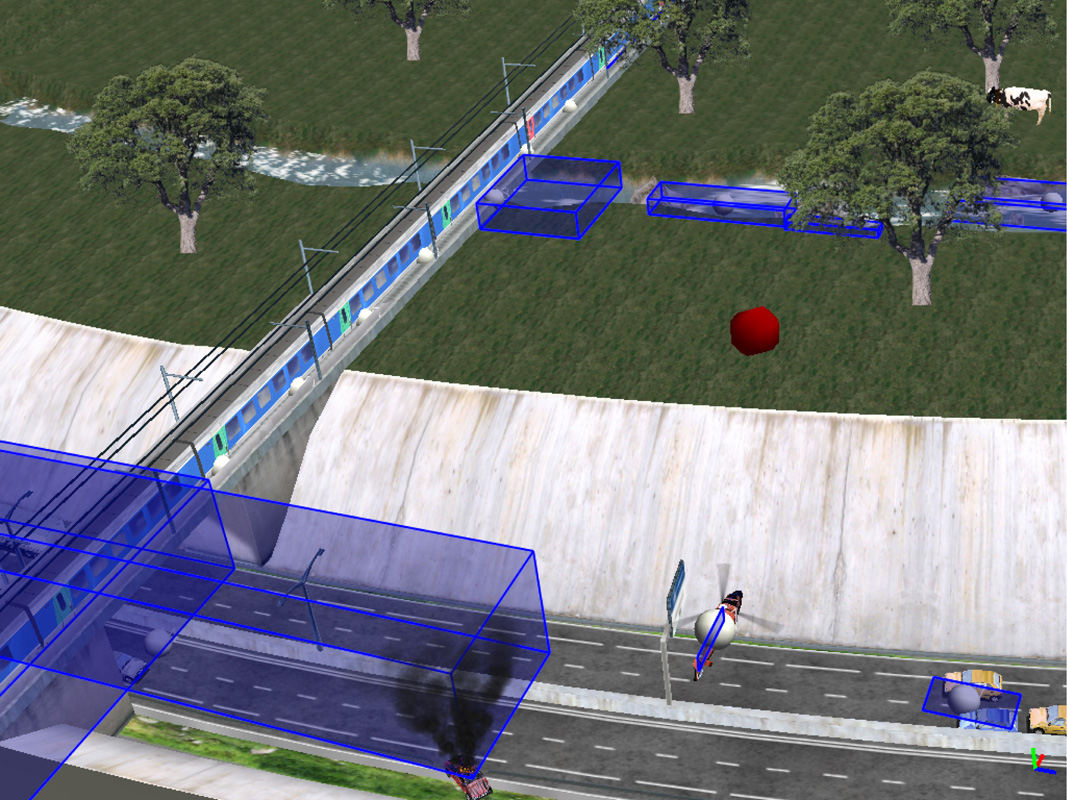

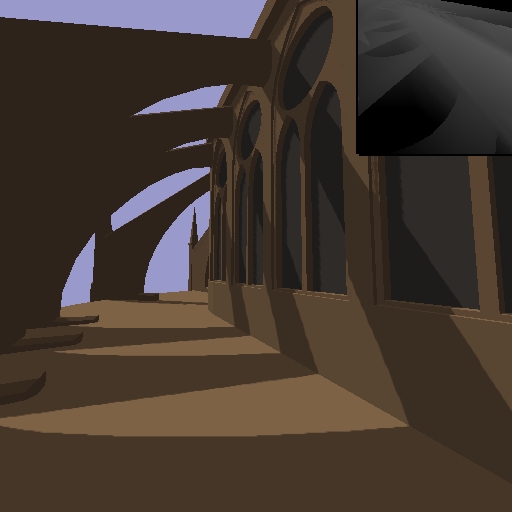

Figure 1: On the left we show a screenshot from a complex scene where impact sounds are computed using [BDTVJ08].

Other perception-based work. We performed perceptual evaluations in two other projects since the end of CROSSMOD. In the context of the hair reflectance parameter extraction project [BPVDD09], we used a perceptual experiment to evaluate the success of our metric for selecting appropriate feature vectors in our data set. Similarly, in our recent work on non-photorealistic rendering [BLVLDT10], we performed a perceptual evaluation of different NPR techniques to determine the relative strengths and weaknesses of each approach.

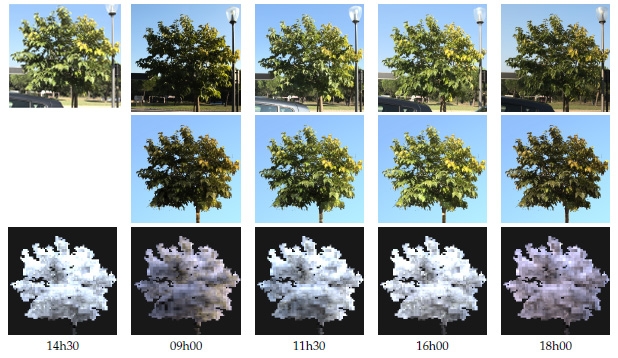

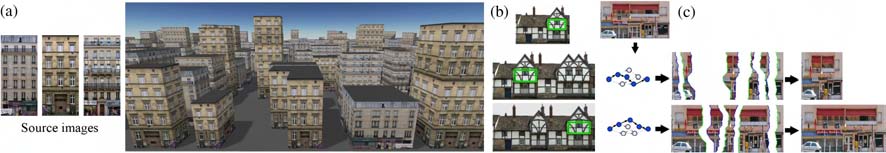

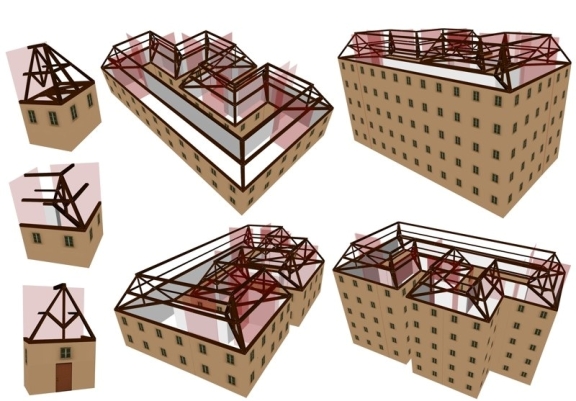

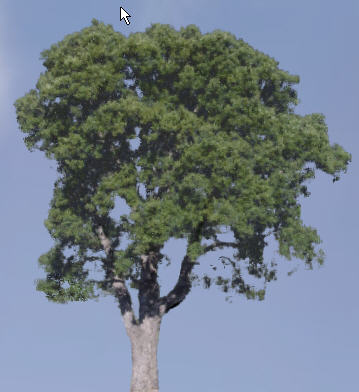

Combining Data-Driven and Simulation Approaches

Relighting and reflectance estimation. We developed a solution for relighting of tree canopy photographs. The input is simply a set of photographs taken at a single time of day. We use information from the images to build a single-scattering volumetric rendering approximation. By combining this model with an analytical sun/sky model, we are able to relight the tree photographs at any other target time of day. We also developed a solution to estimate hair reflectance parameters from photographs [BPVDD09]. To do this, we use a learning approach based on synthetic renderings of an analytical model to find the appropriate parameters for an interactive variant of the Marschner hair reflectance model.

Fig. 2: Our solution allows relighting of tree canopies by using only a single input photograph,

Sound re-rendering and grain-synthesis. We developed acoustical scene modeling from recordings, inspired by computer vision techniques. Using a set of non-coincident microphones, we developed an approach that extracts information about the position of the different sound sources present in the recording. The original recordings can then be dynamically warped to re-render the acoustical scene from viewpoints not originally captured. Sources can also be moved, and occlusion from virtual walls can be simulated [GTL07, GT07].

Synthesizing contact sounds is a hard and important problem. While simulation-based methods produce good results, they often lack the richness of real sounds. We developed a “sound texture”-based approach to the synthesis of rolling and scraping sounds using recordings; our approach allows us to re-target example contact sounds to different simulations. Our method extracts short audio elements or “grains” from the original recordings. The grains can then be re-triggered appropriately based on contact information reported by an interactive physics engine. The original recordings can also be easily time-stretched to match a new simulation scenario, and the timbre and temporal events can be decoupled [PTF08, PTF09, PFDK09].

Texture synthesis and rendering. As discussed in the objectives set out four years ago, we considered texture synthesis and procedural techniques to be a very promising challenge in the domain of data-driven methods. A typical way to give an appearance to a surface is to define colors in a volume surrounding the object. The volume is then sampled at every point of the surface to determine its color. We developed an algorithm to automatically generate such volumes of colors from a set of 2D example images. Our algorithm has the unique ability to restrict computations to the neighborhood of the surface, while enforcing spatial determinism [DLTD08].

In the work “Texturing from Photographs” [ELS08] we extract the input example from any surface within an arbitrary photograph. This introduces several challenges: only parts of the photograph are covered with the texture of interest, perspective and scene geometry introduce distortions, and the texture is non-uniformly sampled during the capture process.

In recent work we developed a new texture synthesis algorithm targeted at architectural textures. While most existing algorithms support only stochastic textures, ours is able to synthesize new images from highly structured examples such as images of architectural elements (facades, windows, doors, etc.). In addition, our approach is designed so that results are compactly encoded and quickly accessed from the graphics processor during rendering. Thus, when used as textures, our synthesis results use little memory compared to the equivalent images they represent [LHL10] (see Fig. 3).
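The grain-based contact sound synthesis described earlier in this section can be pictured with a small sketch. It is not the method of [PTF08, PTF09, PFDK09]: fixed-length slicing stands in for the actual grain extraction, and the event format `(start_sample, grain_index, gain)` is a hypothetical stand-in for the contact information a physics engine might report.

```python
from typing import List, Tuple

Grain = List[float]
ContactEvent = Tuple[int, int, float]  # (start_sample, grain_index, gain), assumed format


def extract_grains(recording: List[float], grain_len: int, hop: int) -> List[Grain]:
    """Cut a recording into short grains of grain_len samples, hop samples apart."""
    return [recording[s:s + grain_len]
            for s in range(0, len(recording) - grain_len + 1, hop)]


def retrigger(grains: List[Grain], events: List[ContactEvent], out_len: int) -> List[float]:
    """Overlap-add one scaled grain into the output buffer per contact event."""
    out = [0.0] * out_len
    for start, index, gain in events:
        grain = grains[index % len(grains)]  # wrap the index to stay in range
        for k, sample in enumerate(grain):
            if 0 <= start + k < out_len:
                out[start + k] += gain * sample
    return out
```

Because the events carry the timing and the grains carry the timbre, the same set of grains can be driven by a different simulation simply by supplying a different event list.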

Figure 3: (a) From a source image our synthesizer generates new textures fitting surfaces of any size.

We have also used texture synthesis to perform rendering based on a single image, by combining an image-analogies type approach and a reprojection scheme [BVLD10]. Based on only a single segmented image, we are able to move around at near-interactive rates. While the results are still preliminary, this direction is very promising.

Interactive solutions for content creation. A novel axis in this objective is the development of interactive solutions for content creation. We have developed a solution for interactive modeling of textured architectural models and for texture assignment. Our interactive modeling approach [CLDD09] allows the user to manipulate a small number of vertices, and imposes a set of constraints (e.g., preserving angles during manipulation). We also analyze the texture of the model, allowing the texture detail to be preserved during stretching. We use a sparse system solver, allowing interactive performance.

In recent work we developed an algorithm to help modelers texture large virtual environments [CLS10]. Modelers typically select a texture manually from a database of materials for each and every surface of a virtual environment. Our algorithm automatically propagates user input throughout the entire environment as the user applies textures to it. After the user has chosen textures for only a small subset of the surfaces, the entire scene is textured.

Fig. 4: Our solution allows the creation of novel 3D models (shown on the right side) by combining

Plausible to High-Quality Continuum for Rendering

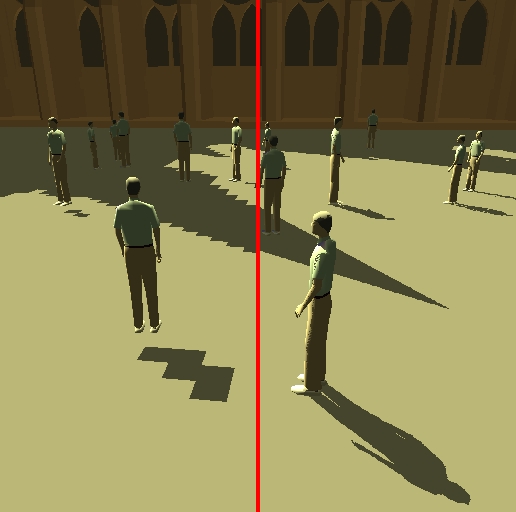

Figure 5: Left, we show a screenshot of the Antiradiance solution [DSDD07]

Sound rendering. We developed a novel solution for progressive rendering of impact sounds, based on a new hierarchical data structure for solid objects [PFDK09]. The hierarchical nature of the spatial data structure allows us to progressively improve the quality of the impact sound approximation, since the modal analysis is performed on the simplified tetrahedral approximation to the original surface. To our knowledge, this is the first progressive approach to impact sound synthesis.

As a follow-up to the work in CROSSMOD, we developed a complete pipeline for 3D audio synthesis which incorporates all our scalable sound rendering algorithms [GBWAD09], including our frequency-based impact sound solution. Initially this effort was part of our attempt to spin off our audio technologies; however, after a one-year market survey, we concluded that the commercial potential of the audio technologies developed did not justify the investment for a full-fledged startup. Nonetheless, an INRIA-funded “young engineer” was hired to create a high-quality audio library (APF, for audio processing framework). APF is now available to the group.

Procedural texture methods. Noise is an essential tool for texturing and modeling. We developed a new noise function, called Gabor noise, based on sparse convolution and the Gabor kernel [LLDD09]. Gabor noise offers a unique combination of properties not found in other noise functions: accurate spectral control with intuitive parameters for easy texture design, setup-free surface noise without surface parametrization for easy application on surfaces, and analytical anisotropic filtering for high-quality rendering. By varying the number of impulses used, we achieve a quality/speed tradeoff. Gabor noise can be used as an interactive tool for designing procedural textures based on noise. We have extended this approach to non-photorealistic rendering, which poses challenging questions regarding the relation between 2D and 3D representations [BLVLDT10].

Data Structures. Texture mapping with atlases suffers from several drawbacks: wasted memory, seams, and uniform resolution. We proposed new data structures to overcome these limitations [LD07]. The Tile-Tree stores square texture tiles in the leaves of an octree surrounding the surface. At rendering time the surface is projected onto the tiles, and the color is retrieved by a simple 2D texture fetch into a tile map. Our method is simple to implement and requires neither long pre-processing nor any modification of the textured geometry. We also proposed a new, highly compressed adaptive multiresolution hierarchy to store spatially coherent graphics data. Our data structure provides the very efficient random access required by rendering algorithms by using a compact primal tree structure [LH07].
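The Gabor noise construction described above (sparse convolution with a Gabor kernel) can be sketched as follows. This is a simplified illustration rather than the algorithm of [LLDD09]: impulses are scattered uniformly over a square domain instead of being generated per grid cell, no filtering is performed, and the default parameter values and function names are assumptions chosen for the example.

```python
import math
import random
from typing import List, Tuple

Impulse = Tuple[float, float, float]  # (x position, y position, weight)


def gabor_kernel(x: float, y: float, K: float = 1.0, a: float = 0.05,
                 F0: float = 0.0625, omega0: float = math.pi / 4) -> float:
    """Gaussian envelope (magnitude K, width 1/a) times an oriented cosine."""
    envelope = K * math.exp(-math.pi * a * a * (x * x + y * y))
    phase = 2.0 * math.pi * F0 * (x * math.cos(omega0) + y * math.sin(omega0))
    return envelope * math.cos(phase)


def make_impulses(n: int, size: float, seed: int = 0) -> List[Impulse]:
    """Scatter n random impulses with weights in [-1, 1] over a size x size square."""
    rng = random.Random(seed)
    return [(rng.uniform(0.0, size), rng.uniform(0.0, size), rng.uniform(-1.0, 1.0))
            for _ in range(n)]


def gabor_noise(x: float, y: float, impulses: List[Impulse], **kernel_params: float) -> float:
    """Sparse convolution: sum the weighted kernel centered on every impulse."""
    return sum(w * gabor_kernel(x - xi, y - yi, **kernel_params)
               for xi, yi, w in impulses)
```

In this sketch the impulse count `n` plays the role of the quality/speed knob mentioned in the text, while `F0` and `omega0` set the dominant frequency and orientation of the resulting pattern.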

Past Projects (2001-2006)

Perceptual 3D Audio Processing

We exploit limitations of human auditory perception to optimize rendering times for complex scenes while preserving the perceived audio quality [TGD04]. We proposed a real-time masking estimator, derived from work in perceptual audio coding, which can determine at each frame of a simulation which sound sources are audible to the listener. We also proposed a clustering strategy that groups neighboring sound sources based on spatial proximity, weighted by the energy of the signal emitted by each source. We performed perceptual validation studies which showed that such techniques have minimal impact on perceived audio quality and do not affect user performance in terms of 3D sound localization. We also introduced a fully scalable pipeline for real-time processing of massive numbers of signals using our masking algorithm and a calculated importance value of the various sound streams [Tsi05, GLT05]. Overall, it is interesting to note the similarity between this work in audio rendering and related techniques in computer graphics: occlusion culling, level of detail, and importance sampling for point-based representations or global illumination. For more information, see the project pages and the publications: [TGD04] (SIGGRAPH 2004), [Tsi05] (DAFx'05) and [GLT05] (ICAD'05).

Image-based Reconstruction and Rendering

The thesis of A. Reche was on image-based methods for capture and rendering of real objects. The main result was a novel approach for the capture and rendering of real trees [RMD04]. Initially a set of photographs is taken around the tree, and the cameras of these images are calibrated. Then, alpha-mattes are extracted from the images, giving an opacity value to each pixel in each view. In a second step, the opacity values are used to perform a density estimation on a hierarchical grid, resulting in the assignment of a density value to each grid cell. In the final step, textures are extracted from the images and assigned to a billboard in each cell. There is thus one billboard per cell, and one texture per billboard per input camera position. This work is being continued, to allow reduction in texture memory and efficient multi-resolution rendering. For more information, see the SIGGRAPH 2004 publication: [RMD04].

Another result of the thesis [RD03b] was a method which enables manual intervention in the use of view-dependent texture maps. By introducing a visibility ordering to create visibility layers and by allowing image editing of each layer (e.g., in GIMP), it is possible to achieve very realistic renderings with a smaller number of input images. For more information, see the Pacific Graphics'03 publication: [RD03b].

Interactive Illumination

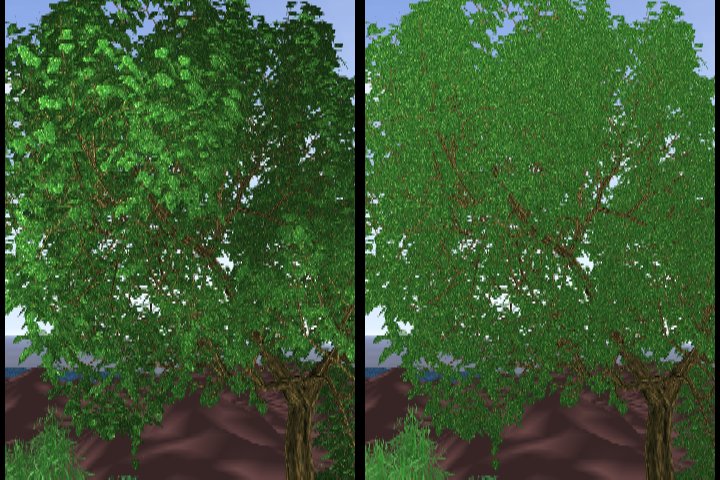

We have developed an approximation for ambient occlusion for trees and grass [HPAD06], in collaboration with K. Hegeman and M. Ashikhmin of Stony Brook Univ. and S. Premoze. This is a classic case of an approximation which is plausible, but does not correspond to physical principles (see Fig. 3). In this topic, we have also developed an interactive method for rendering specular reflections, in collaboration with the University of Girona (P. Estalella, I. Martin) and UPC Barcelona (D. Tost), based on the “virtual object” model. A fast method has been developed to find reflection points and then blend them into the scene [EMD+05]; we are currently extending this approach to the GPU. In collaboration with M. Stamminger and C. Dachsbacher (U. Erlangen) we are investigating GPU-accelerated global illumination. For more details see the I3D'06 publication [HPAD06] and the VMV'05 publication [EMD+05].

Audio-visual Crossmodal Rendering

Since December 2005, we have been coordinating the EU Open FET IST project “CROSSMOD” on audio-visual crossmodal rendering. The partners are Univ. Bristol, UK; CNRS and IRCAM, France; TUW, Austria; CNR, Pisa, Italy; and FAU, Erlangen, Germany. We intend to better understand cross-modal effects through specific initial experiments and then to develop new algorithmic approaches exploiting cross-modal effects for better audiovisual display. This will be achieved by validating initial hypotheses in more realistic and complex environments and by evaluating results in different target applications and VE setups. We hope to achieve faster and perceptually more realistic display, and to use active cross-modal effects such as attention control in the display and authoring of VEs. See the CROSSMOD project website for details.

Virtual Environments and Urban Planning (CREATE, 2002-2005)

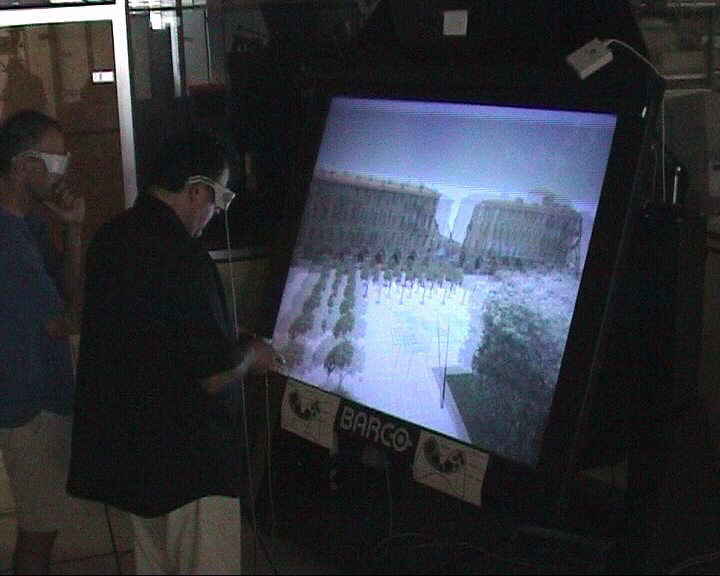

The goal of this project was the combination of realism and interactivity in virtual environments, and its application to two domains: heritage and urban planning/architecture. Our participation in this project concentrated on the integration of previous solutions [SD02, DCSD02], and the development of novel solutions for both graphics and sound [TGD04, RD03b], into a multi-platform VR system for the project. The overall choices for integration were presented at the EG Symposium on Virtual Environments [DRT+04]. One important aspect of this project was the decision to work with a real-world application, which was the Tramway construction project in Nice. After presenting a simple demonstrator to the people in charge, we developed a strong working relationship with them. In particular we chose to reconstruct and represent the potential development of a historical square in Nice (place Garibaldi; see the figure above, left, which is a view of the VE simulator). We were able to perform an in-depth analysis of our choices for the virtual environment by studying the usage of our system by the architects who were actually in charge of the real-world project (figure above, left). This work has resulted in a paper in the Presence journal [DRTR07], accepted for publication in 2007. For more information, see the publications: EGVE'04 [DRT+04] and Presence'07 [DRTR07] (forthcoming).

Virtual Archeology (2003-2005)

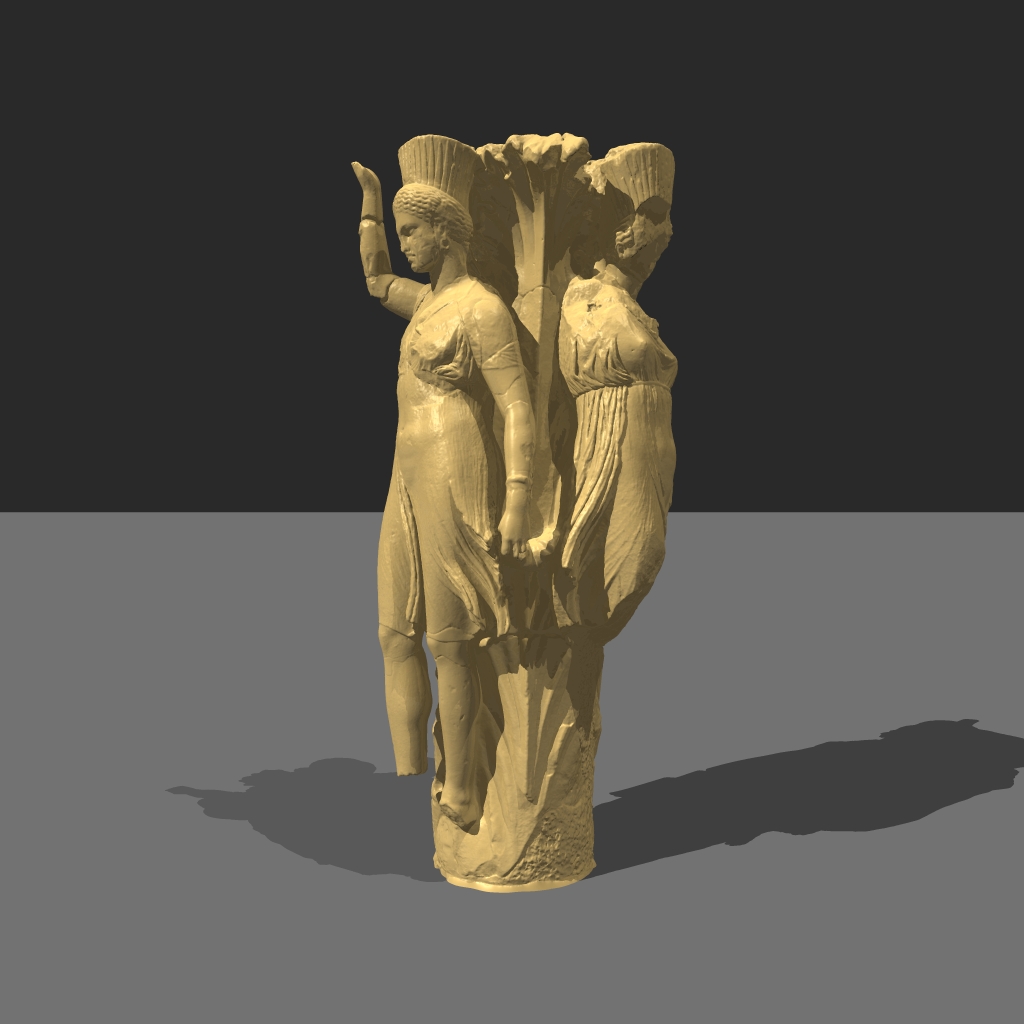

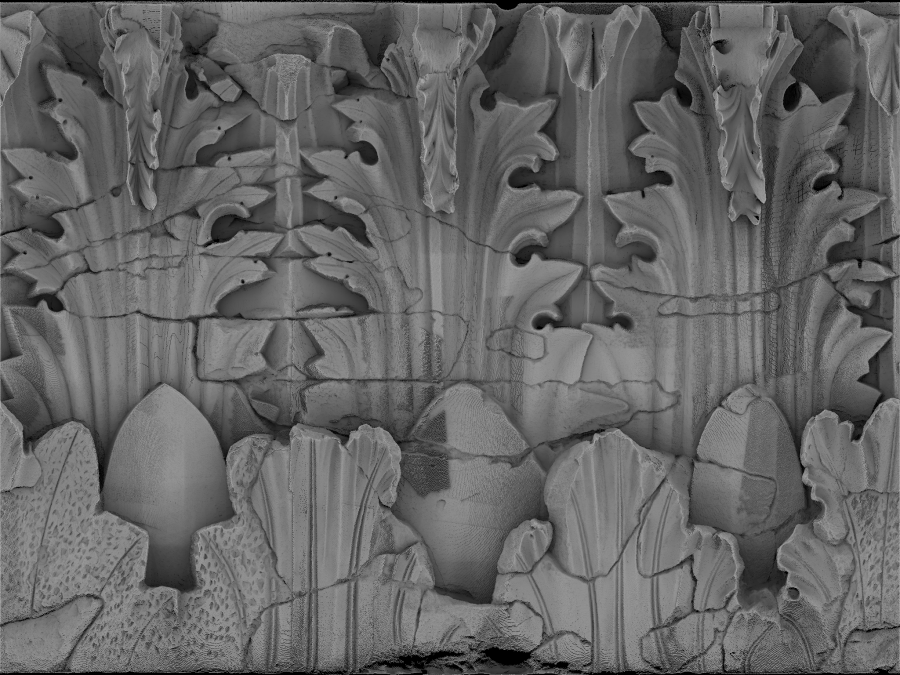

One main archeological project was in the context of the thesis of F. Duguet. We established a collaboration with the VR department of EDF (G. Thibault), which has a long history of working on cultural heritage projects financed by the “Mecenat” of the company. The project involved scanning and processing the “Dancers column” (see the figure above, left) at Delphi. As part of F. Duguet's thesis, we developed a solution for the display of “unfolded images” (développée) (figure above, right), which are an indispensable tool for the study of such monuments. This work was presented at the VAST virtual archeology conference [DDGM+04]. Further reconstruction and display techniques were developed in the last part of F. Duguet's thesis [DEDS05]; these techniques are being used in the archeological project. For more information, see the publications: VAST'04 [DDGM+04], and the technical report [DEDS05].

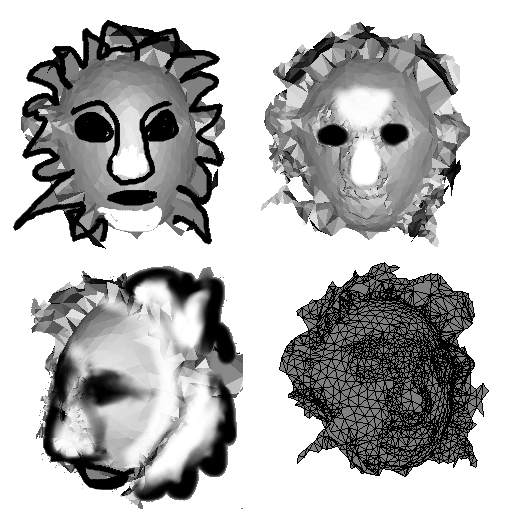

Our work on archeology, in the context of the ARC ARCHEOS, concentrated on the issues of appropriate representations for archeological reconstructions [RD03c]. In the context of the ARC project, we worked with archeologists from the ENS Paris and the Ecole Française d'Athènes. Through the contacts of our colleagues in Grenoble, we were able to present our reconstructions of the Argos site, developed on the workbench, to the archeologists who were in charge of the actual dig and study of the monument we reconstructed (the Tholos at Argos). For more information, see the VAST'03 publication [RD03c].

Sketch-based Modelling (2001-2004)

In the Ph.D. work of David Bourguignon (under the supervision of M-P. Cani at Grenoble), we worked on sketch-based illustration and modelling. The first result was a simple system to “draw in 3D” using a simple metaphor for placing objects [BCD01]. The second result was a more elaborate approach to create 3D drawings from 2D sketches, using a reconstruction technique and a metaphor for indicating more or less depth in the image [BCCD04] (in collaboration with R. Chaine, LIRIS). For more information see the Eurographics 01 [BCD01] and the EG Symposium on Sketch-Based Modelling 04 [BCCD04] publications.

Point-Based Modelling and Rendering (2001-2004)

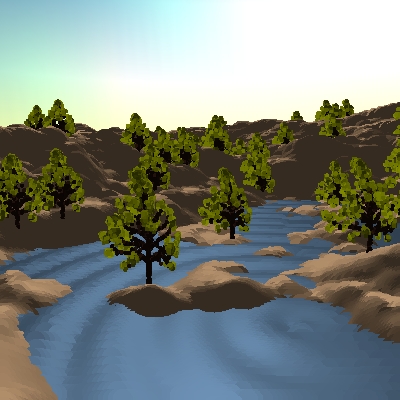

As part of M. Stamminger's postdoc (2001-2002), we developed a point-based rendering technique which could handle procedural objects thanks to a novel sampling scheme (sqrt(5)-sampling), and also complex polygonal objects with appropriate sampling. This latter sampling afforded a natural and efficient level-of-detail mechanism [SD01]. We applied and extended this approach in an interactive rendering solution for complex ecosystems [DCSD02] (righthand figure), based on point and line rendering. For more information see the EGWR 01 paper (project page) [SD01] and the IEEE Viz'02 publication [DCSD02].

In [DD04] we presented an approach for flexible point rendering on mobile devices. In [PSD+03] we developed an approach to enhance image-based modeling with an interactive point-based system, in collaboration with F. Duranleau and P. Poulin of the Univ. of Montreal. For more information, see the publications on ecosystem rendering [DCSD02], flexible point rendering on mobile devices [DD04], and interactive image-based modelling using points [PSD+03] (Graphics Interface'03).

Perspective Shadow Maps (2002)

As part of M. Stamminger's postdoc (2001-2002), we developed a new solution [SD02] for accurate shadow map calculation. The idea is simple, but very powerful: compute the shadow map after the perspective projection, thus reducing the resolution of the shadow map far from the observer and concentrating resolution in regions close to the viewer, where shadows appear large (see figures above). The advantage of this approach is that it requires only a modification of the matrix used when rendering the shadow map from the light source, and thus maps directly to hardware. Consequently, the only additional cost of this new method is the recomputation of the shadow map at each frame, which traditional shadow maps also require whenever objects in the scene move. For more information, see the project page and SIGGRAPH 2002 publication: [SD02].

Robust Epsilon Visibility (2002)

In this work, we developed a general and flexible formalism to describe visibility events based on sets of generators (i.e., vertices and edges). This allowed the computation of the visibility skeleton (the 0- and 1-nodes of the visibility complex) for relatively complex scenes. The method is based on “fat lines”, which are lines with an epsilon thickness, and consistent treatment of intersections of these lines with the scene. Examples of the results of this method are shown in Fig. 4 (left). For more information, see the SIGGRAPH 2002 publication: [DD02]. This work is a continuation of the work on the visibility complex developed at iMAGIS Grenoble by F. Durand. See the ACM Transactions on Graphics publication for more details [DDP02].

Aging (2001-2002)

The thesis of E. Paquette, which started in Grenoble and was concluded at REVES, was a “co-tutelle” (joint supervision) between the Univ. of Montreal and Univ. Grenoble. The first part of the work presented a solution for simulating impacts [PPD01], while the second part concentrated on efficient simulation of paint cracking and peeling [PPD02], using geometric approximations based on the underlying physical phenomena.

Global Illumination (2001-2002)

The Ph.D. thesis of X. Granier, started in Grenoble and completed at REVES in 2001, was on a unified global illumination framework combining hierarchical radiosity with a particle tracing mechanism across links, resulting in the efficient computation of images with high-quality glossy and diffuse effects [GD04] (ACM TOG 2004). The work of M. Stamminger [SSS02] permitted the acceleration of final gathering using a camera-aligned grid structure, in collaboration with A. Scheel and H-P. Seidel of MPII. For more information on X. Granier's thesis, see the ACM Trans. on Graphics 2004 publication [GD04], the EGWR 2002 paper [WDG02] and the Eurographics 2001 paper [GD01]. For the work of M. Stamminger, see the Eurographics 2002 paper [SSS02].
