1 Introduction

1.1 Concurrent Programming


Concurrent programming is the use of several programs, running

together in the same application. It is opposed to traditional

sequential programming, in which there is one unique program, which

thus coincides with the whole application. Concurrent programming is

usually considered to increase modularity, as one can often naturally

decompose a complex application into several cooperating programs which

can be run concurrently. This is for example the case of servers in

which each user request can quite logically be mapped to a specific

program processing it. With concurrent programming there is no more

need to code a complex application made of many distinct parts in one

single program, as is the case in sequential programming.

There are two main variants of concurrent programming: the one in

which the programs, called

threads, are sharing a common

memory; and the one in which programs are basically

processes which have their own memory. The presence of a shared

memory eases communication between threads, as threads can directly

use shared data to communicate, while processes have to use

specialized means, for example sockets, for the same purpose. But, the

counterpart is that shared data may have to be protected from

concurrent accesses from distinct threads. Of course, this is not

necessary with processes in which, by definition, data are fully

protected from being accessed by other processes.

Parallelism is the possibility to simultaneously use several computing

resources. It is available with multiprocessor machines, or more

generally, when several machines are distributed over a network. It

is well-known that parallelism is not the best solution in all cases,

and there are systems which are more efficiently run by a single

processor machine than by a multiprocessor one. Parallelism is now

directly available, with no special effort, in standard OS such as

Linux which can operate hardware mapped on

Symmetric

Multi-Processor (SMP) architectures. Parallelism appears quite

natural in concurrent programming, as generally there is no logical

need that concurrent programs should be run by one unique processor.

Concurrent programming is largely recognized as being, in the general

case, much more difficult than sequential programming. Some problems,

such as deadlocks, are specific to it. Moreover, concurrent programs

are most of the time nondeterministic, and thus more difficult to

debug. For these reasons, concurrent programming is often considered

as an activity which must be left to specialists. This seems of course

largely justified in domains such as the programming of OS

kernels. But the question remains of designing a set of concurrent

primitives that are not too difficult or painful to use, and of

providing general users with it.

Concurrency at Language Level


Most of the time, programming languages do not offer any primitive for

concurrent programming. This is the case of C and C++, in which

concurrent programming is only available using system calls (for

example

fork or

exec) or through libraries of

threads (for example, the pthread library). Parallelism is also, most

of the time, not covered by programming language primitives. The way to

benefit from parallelism is either to use threads in a SMP context, or

to design distributed systems where programs (often considered as

distributed objects) communicate and synchronize through the network.

Amongst the languages that have primitives to deal with concurrency,

some, such as Erlang, use message passing; others, such as Ada and Occam,

propose "rendez-vous"; and several, such as CAML, directly include threads.

The most accomplished proposal of such an inclusion seems to be

Java [8], in which threads are first-class objects and

data can be protected using locks and monitors, as defined by

E.W. Dijkstra in the sixties (see [14] for

reference papers on concurrent programming). In

Java, threads are

supposed to be used in almost every context (the simplest applet

involves several threads, one of them being for example dedicated to

graphics). However,

Java does not give particular means to ease

thread programming, which remains difficult and error-prone (see [23]

for a discussion on this point). Concerning

parallelism,

Java proposes the RMI mechanism to define distributed

objects, methods of which can be invoked through the network. Remote

method invocations basically use specialized threads dedicated to

communications.

Synchronous languages [19] propose

concurrent programming primitives in the special context of reactive

systems [20]. In this context, which is roughly

the one of embedded systems, concurrent programs are deterministic and

can be formally verified. However, synchronous languages suffer from

several limitations which restrict their use. Amongst these is the

limitation to static systems, in which the number of concurrent

programs is known at compile time, and to mono-processor

architectures.

A proposal to extend the synchronous approach to dynamic systems

(systems which are not static) is called the

reactive

approach. It is described in full detail in [6].

Several languages belonging to this approach have been designed;

amongst them are

Reactive-C [11], which implements

the approach in C, and SugarCubes [13], which

implements it in

Java. Reactive programs can be very efficiently

implemented as they are basically cooperative. However, up to now,

they were not able to get direct benefit from multiprocessor machines.

In this text, one proposes a language for concurrent programming

inspired by the reactive approach and based on special threads, called

fair threads. One major point is that the language contains

primitives that allow multiprocessing. The rest of the section

considers threads and presents the basics of the language proposal.

In sequential programming, a program is basically a list of

instructions run by the processor. The program has access to the

memory in which it can read and write data. Amongst the instructions

are tests and jumps which are the way to control the execution

flow. In this standard computing model, a

program-counter points to

the current instruction executed by the processor.

The model of threads extends the previous sequential model by allowing

the presence of several programs, the

threads, sharing the

same memory. There are thus several program counters, one per thread,

and a new component, the

scheduler, which is in charge of

dispatching the processors to the threads. The transfer of a processor

from one thread to another is called a

context-switch, because

it implies that the program-counter of the thread which is left must

be saved, in order to be restored later, when the thread regains

the processor. When several threads can be executed, the scheduler

can choose arbitrarily between them, depending on implementation

details, and it is thus generally impossible to predict which one

will finally get the processor. This is a source of nondeterminism in

multi-threaded programming.

The strategy of the scheduler is said to be

preemptive when it

is able to force a running thread to release a processor executing it,

in which case one says that the thread is preempted. With a

preemptive scheduler, situations where a non-cooperative thread

prevents the others from any execution, are not possible. A preemptive

scheduler can withdraw a processor from a running thread because of

priority reasons (there exist threads with higher priorities in the

system), or for other reasons. This is also a source of

nondeterminism. In a preemptive context, to communicate or to

synchronize generally implies the need to protect some shared data

involved in the communication or in the synchronization. Locks are

often used for this purpose, but they have a cost and are error-prone

as they introduce the possibility of deadlocks, which are situations

where two threads cannot progress because each one owns a lock needed by

the other.
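
The deadlock situation described above can be avoided by a classical discipline: always acquire locks in one fixed global order. The following is a minimal sketch, assuming POSIX threads; the type account_t and the functions transfer and run_transfers are hypothetical names introduced only for illustration, and the two accounts are assumed to be distinct.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical account type: a balance protected by its own lock. */
typedef struct {
    pthread_mutex_t lock;
    long balance;
} account_t;

/* Move `amount` from `src` to `dst` (assumed distinct). Both locks are
   always taken in increasing address order, so two opposite transfers
   can never each hold the lock needed by the other. */
static void transfer(account_t *src, account_t *dst, long amount)
{
    assert(src != dst);
    account_t *first  = ((uintptr_t)src < (uintptr_t)dst) ? src : dst;
    account_t *second = (first == src) ? dst : src;

    pthread_mutex_lock(&first->lock);
    pthread_mutex_lock(&second->lock);
    src->balance -= amount;
    dst->balance += amount;
    pthread_mutex_unlock(&second->lock);
    pthread_mutex_unlock(&first->lock);
}

static account_t a = { PTHREAD_MUTEX_INITIALIZER, 1000 };
static account_t b = { PTHREAD_MUTEX_INITIALIZER, 1000 };

static void *a_to_b(void *unused)
{
    (void)unused;
    for (int i = 0; i < 10000; i++) transfer(&a, &b, 1);
    return NULL;
}

static void *b_to_a(void *unused)
{
    (void)unused;
    for (int i = 0; i < 10000; i++) transfer(&b, &a, 1);
    return NULL;
}

/* Run the two opposite transfer loops concurrently and return the
   total balance, which must be unchanged. */
long run_transfers(void)
{
    pthread_t t1, t2;
    int rc1 = pthread_create(&t1, NULL, a_to_b, NULL);
    int rc2 = pthread_create(&t2, NULL, b_to_a, NULL);
    assert(rc1 == 0 && rc2 == 0);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return a.balance + b.balance;
}
```

If transfer locked src first and dst second instead, the two threads could each own one lock and wait forever for the other, which is exactly the deadlock described above.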

Preemptive threads are generally considered to be well adapted for

systems made of heavy-computing tasks and needing few communications

and synchronizations. This is typically the case of Web servers, in

which a thread (usually picked out from a pool of available threads)

is associated with each new user request. Advantages are clear: first,

modularity is increased, because requests do not have to be split into

several small parts, as would be the case with sequential

programming. Second, servers can be run by multiprocessor machines

without any change, and for example they can immediately benefit from

SMP architectures. Finally, blocking I/Os, for example reading from a

file, do not need special attention. Indeed, as the scheduler is

preemptive, there is no risk that a thread blocked forever on an I/O

operation will also block the rest of the system.

The strategy of the scheduler is said to be

cooperative if the

scheduler has no way to force a thread to release the processor it

owns. With this strategy, a running thread must cooperate and release

the processor by itself from time to time, in order to give the other

threads the possibility to get it. Cooperation is of course absolutely

mandatory if there is only one processor: a running thread which would

never release the processor would indeed forbid any further execution

of the other threads. Cooperative threads, sometimes called

green-threads, are threads that are supposed to run under the

control of a cooperative scheduler. Cooperative threads are adapted

for tasks that need a lot of communications between them. Indeed, data

protection is no longer needed, and one can thus avoid using

locks. Cooperative threads can be efficiently implemented at user

level, but they cannot benefit from multiprocessor machines. Moreover,

they need special means to deal with blocking I/O. Thus, purely

cooperative threads are not sufficient to implement important

applications such as servers.
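
To make the cooperative strategy concrete, here is a minimal sketch in plain C of a round-robin cooperative scheduler. It is only an illustration under stated assumptions: the names task_t, count_down and run_cooperative are hypothetical, and each task is modelled as a function that runs up to its next cooperation point and then returns.

```c
#include <stddef.h>

/* A cooperative task: each call to step() runs the task up to its next
   cooperation point. It returns 1 while work remains, 0 when finished. */
typedef struct task {
    int (*step)(struct task *self);
    int remaining;   /* number of steps still to perform */
    int executed;    /* number of steps performed so far */
} task_t;

/* Example task body: performs one unit of work per activation. */
int count_down(task_t *self)
{
    if (self->remaining == 0) return 0;
    self->remaining--;
    self->executed++;
    return self->remaining > 0;
}

/* Round-robin cooperative scheduler: gives the processor to each
   unfinished task in turn and regains it only when step() returns.
   Returns the number of rounds that were needed. */
int run_cooperative(task_t *tasks, size_t n)
{
    int rounds = 0;
    int alive = 1;
    while (alive) {
        alive = 0;
        for (size_t i = 0; i < n; i++) {
            if (tasks[i].remaining > 0 && tasks[i].step(&tasks[i]))
                alive = 1;
        }
        rounds++;
    }
    return rounds;
}
```

The scheduler has no way to interrupt step(): a task whose step function never returns would block all the others, which is the weakness of purely cooperative threads discussed above. No lock is needed, since only one task runs at a time.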

It is often claimed that reuse of sequential code is made easier by

using threads. What is meant is that a sequential program can be

easily embedded in one thread, and then added into a system to be run

concurrently with the other programs present in it.

However, one generally cannot be sure that a sequential program never

enters a loop in which actions leading to releasing the processor,

such as I/Os, will never be performed. In a cooperative scheduler,

such a non-cooperative program would block the whole system (supposing

that the processor is unique). In a way, this is a major justification

for preemptive scheduling: a non-cooperating program is no longer a

problem because it can now be preempted by the scheduler.

This is however only one aspect of the problem, because a sequential

program cannot generally be used directly as is, even in a preemptive

context. The first reason is that some data may have to be protected

from being accessed by other threads, which needs locks which were of

course absent in the sequential code. A second reason is that in some

cases, for example libraries, the sequential code must be made

reentrant, because several threads can now make concurrent calls to

it. Finally, the granularity of the execution, which is under control

of the scheduler and only of it, can be too large and can need the

introduction in the initial code of supplementary cooperation points.

In conclusion, it seems that in all cases reuse of sequential code is

largely an illusion in the context of multi-threading.

Portability is very difficult to achieve for multi-threaded systems.

In the larger sense, portability means that a system should run in the

same way, independently of the scheduling strategy. This is of course

a quite impossible challenge in the general case: data should be

protected, for the case of a preemptive scheduling, but cooperation

points should also be introduced, for the case of a cooperative

scheduling, and the conjunction of these two requirements would lead

to very inefficient code. Portability is, thus, often considered as a

goal that can only be partially achieved with multi-threaded

systems. Note that this is a major problem in Java which claims to

be portable but does not specify the scheduling strategy that should

be used for multi-threaded applications.

Determinism and Debugging


The choices made by the scheduler are a major source of nondeterminism

which makes thread programming complex. In particular, debugging is

more difficult than in sequential programming because one may have to

take into account the choices of the scheduler when tracking a

bug. Moreover, the replay of a faulty situation may be difficult to

achieve, as it may actually depend on the scheduler choices. For

example, it may happen that the introduction of printing instructions

to trace an execution changes the scheduling and makes the bug

disappear.

Nondeterminism seems inherent to preemption as it is very difficult to

conceive a multi-threading framework both deterministic and

preemptive. For the reader who is not convinced, imagine two threads

running in a preemptive context, one which cyclically increments a

counter, and one which prints the value of the same counter. What

should be the (unique, because of the determinism) printed value and

how can one simply find it?

However, it should be noticed that it is possible to design completely

deterministic frameworks based on cooperative threads, with clear and

simple semantics. This is actually the case of fair threads which are

introduced later.

The way resources are consumed is a major issue for threads. Several

aspects are relevant to this matter.

First, as any standard sequential program, each thread needs a private

memory stack to hold its local variables and the parameters and

intermediate results of executed procedures. The use of a stack is

actually mandatory in the context of a preemptive scheduler, because

the moments context-switches occur are independent of the thread,

which has thus no possibility to save its local data before the

release of the processor. Note that the situation is different in a

cooperative context, where context-switches are predictable. The

necessity of a private stack for each thread certainly contributes to

wasting memory.

Second, user threads are often mapped to kernel threads which are

under supervision of the OS. Of course, this is only meaningful with a

preemptive OS, in order to avoid the risk of freezing the whole

machine when a non-cooperative thread is encountered. The need to

perform context-switches at kernel level is generally costly in CPU

cycles. Another problem that can occur with kernel threads is the

limitation which generally exists on the number of such threads

(typically, the number of allowed threads can vary on a Linux system

from 256 to about four thousand). Several techniques can be used to

bypass these problems, especially when large numbers of short-lived

components are needed. Among these techniques are thread-pooling, to

limit the number of created threads, and the decomposition of tasks

in small pieces of code, sometimes called "chores" or "chunks",

which can be executed in a simpler way than threads are.

Preemptive threads (threads designed to be run by a preemptive

scheduler) are also subject to another problem: some unnecessary

context-switches can occur which are time consuming. Actually,

threads have no way at all to prevent a context-switch. Unnecessary

context-switches cause a loss of efficiency, but this is of course the

price to pay for preemptive scheduling.

Actually, programming with threads is difficult because threads

generally have very "loose" semantics, which strongly depend on

the scheduling strategy in use. This is particularly true with

preemptive threads. Moreover, the semantics of threads may also

depend on many other aspects, for example, the way priorities of

threads are mapped at the kernel level. As a consequence, portability

of multi-threaded systems is very hard to achieve. Moreover,

multi-threaded systems are most of the time nondeterministic which

makes debugging difficult.

Cooperative threads have several advantages over preemptive ones: it

is possible to give them a precise and simple semantics, which

integrates the semantics of the scheduler. They can be implemented

very efficiently. They lead to a simple programming approach, which

limits the needs of protecting data. However, purely cooperative

systems are not usable as is, and preemption facilities have to be

provided in one way or another. The question is thus to define a

framework providing both cooperative and preemptive threads, and

allowing programmers to use these two kinds of threads, according to

their needs.

Loft basically gives users the choice of the context, cooperative

or preemptive, in which threads are executed. More precisely,

Loft

defines

schedulers which are cooperative contexts to which

threads can dynamically link or unlink. All threads linked to the

same scheduler are executed in a cooperative way, and at the same

pace. Threads which are not linked to any scheduler are executed by

the OS in a preemptive way, at their own pace. An important point is

that

Loft offers programming constructs for linking and unlinking

threads. The main characteristics of

Loft are:

- It is a language, based on C and compatible with it.

- Programs can benefit from multiprocessor

machines. Indeed, schedulers and unlinked threads can be run in real

parallelism, on distinct processors.

- Users can stay in a purely cooperative context by

linking all the threads to the same scheduler, in which case systems

are completely deterministic and have a simple and clear semantics.

- Blocking I/Os can be implemented in a very simple way, using

unlinked native threads.

- There exist instants shared by all the threads linked

to the same scheduler. Thus, all threads linked to the same scheduler

execute at the same pace, and there is an automatic synchronization at

the end of each instant.

- Events can be defined by users. They are

instantaneously broadcast to all the threads linked to a

scheduler; events are a modular and powerful means for threads to

synchronize and communicate.

- It can be efficiently implemented on various platforms.

Implementation of the whole language needs the full power of native threads.

However, the cooperative part of the language can be implemented without any

native thread support. This leads to implementations suitable for

platforms with few resources (PDAs, for example).

Loft is strongly linked to an API of threads called

FairThreads[

12]: actually,

Loft stands for

Language Over Fair

Threads. This API mixes threads with the reactive approach, by

introducing instants and broadcast events in the context of

threads.

Loft can be implemented in a very direct way by a

translation into

FairThreads (this implementation is described in Section 7.1,

Implementation in FairThreads).

1.4 Structure of the Document


Section 2 (The Language LOFT) contains the description

of the language. First, an overview of

Loft is given. Then, modules

and threads are considered. Atomic and non-atomic instructions are

described. Finally, native modules are considered. A first series of

examples is given in Section 3 (Examples - 1). Amongst

them is the coding of a small reflex-game which shows the reactive

programming style. The case of data-flow programming is also

considered. Various sieves are coded, showing how the presence of

instants can benefit code reuse. Section 4 (Programming Style)

contains a discussion on the programming style

implicitly supposed by

Loft. Section 5 (Semantics)

contains the formal semantics of the cooperative part of

Loft by

means of a set of rewriting rules.

FairThreads is described in Section 6 (FairThreads in C). Several implementations of

Loft are described in Section 7 (Implementations). One consists in a direct translation into

FairThreads. Another is a direct implementation of the semantic rules of

Section 5 (Semantics). One implementation is suited for

embedded systems. Section 8 (Examples - 2) contains a

second series of examples. Amongst them are some examples which can

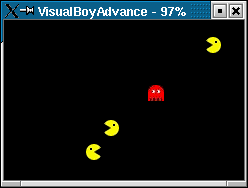

benefit from multiprocessor architectures. A prey/predator demo

running on an embedded system (the Game Boy Advance of Nintendo) is

also described. Related work is described in Section 9 (Related Work),

before the conclusion. A first annex gives the API of

FairThreads. A second one is the Reference Manual of

Loft. The third one

is a quick description of the compiling command.

2 The Language LOFT

One first gives an overview of the language. Then, the basic

notions of modules, threads, schedulers, events, and atomic and non-atomic

instructions are presented. Finally, native modules are considered.

2.1 Overview of the Language


Modules are the basic units of the language. The syntax is based on C

and modules are defined in files that can also contain C code.

Threads are created from modules. A thread created from a module is

called an instance of it. A module can have parameters which

define corresponding parameters of its instances. Arguments provided

when an instance is created are associated to these parameters. A

module can also have local variables, new fresh instances of which are

automatically created for each thread created from it.

The body of a module is basically a sequence of instructions executed

by its instances. There are two types of instructions: atomic

instructions and non-atomic ones. Atomic instructions are run

in one single step by the same processor. Usual terminating C code

belongs to this kind of instructions. Another example of atomic

instruction is the generation of an event, described later, which is

broadcast to all the threads.

Execution of non-atomic instructions may need several steps up to

completion. This is typically the case of the instruction which waits

for an event to be generated. Execution steps of non-atomic

instructions are interleaved, and can thus interfere during

execution. Note that all the execution steps of a non-atomic

instruction may not be necessarily executed by the same processor.

The basic task of a scheduler is to control execution of the threads

that are linked to it. The scheduling is basically

cooperative: linked threads have to return the control to the

scheduler to which they are linked, in order to let other threads

execute. Leaving the control can be either explicit, with the

instruction cooperate, or implicit, by waiting for an event

which is not present.

All linked threads are cyclically considered in turn by the scheduler

until all of them have reached a cooperation point (cooperate, or waiting instructions). Then, and only then, a new

cycle can start. Cycles are called instants. Note that the

same thread can receive the control from the scheduler several times

during the same instant; this is for example the case when the

thread waits for a first event which is generated by another

thread, later in the same instant. In this case, the thread receives

the control a first time and then blocks, waiting for the

event. The control goes to the other threads, and returns to

the first thread after the generation of the event.

A scheduler defines a kind of automatic synchronization which

forces the threads linked to it to run at the same pace: all the

threads must have finished their execution for the current instant,

before the next instant can start.

The order in which threads are cyclically considered is always the

same: it is the order in which they have been linked to the

scheduler. This leads to deterministic executions, which are

reproducible. Determinism is of course an important point for

debugging.
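
The scheduling cycle described above can be sketched in plain C. This is only an illustrative model, not the actual implementation of Loft: the names fthread_t, waiter, generator and run_instant are hypothetical, threads are reduced to functions that run up to their next cooperation point, and a single event is represented by a flag.

```c
#include <stddef.h>

enum status { READY, WAITING, COOPERATED };

typedef struct fthread {
    /* Runs the thread until it cooperates or blocks on the event. */
    enum status (*run)(struct fthread *self, int *event_present);
    enum status status;
    int activations;   /* how many times control was received */
} fthread_t;

/* Waits for the event, then cooperates. */
enum status waiter(fthread_t *self, int *event_present)
{
    self->activations++;
    return *event_present ? COOPERATED : WAITING;
}

/* Generates the event, then cooperates. */
enum status generator(fthread_t *self, int *event_present)
{
    self->activations++;
    *event_present = 1;
    return COOPERATED;
}

/* Execute one instant: threads are considered cyclically, always in
   the order in which they were linked, until a whole cycle brings no
   progress (every thread has cooperated or waits for an absent event). */
void run_instant(fthread_t *threads, size_t n)
{
    int event_present = 0;
    int progress = 1;
    for (size_t i = 0; i < n; i++) threads[i].status = READY;

    while (progress) {
        progress = 0;
        for (size_t i = 0; i < n; i++) {
            fthread_t *t = &threads[i];
            if (t->status == COOPERATED) continue;
            /* A waiting thread is resumed only once the event is present. */
            if (t->status == WAITING && !event_present) continue;
            t->status = t->run(t, &event_present);
            progress = 1;
        }
    }
}
```

With the waiter linked before the generator, the waiter receives the control twice during the instant: once to block on the absent event, and once more after the generator has produced it. Since the order of consideration is fixed, every run gives the same result.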

At creation, each thread is linked to one scheduler. There exists an

implicit scheduler to which threads are linked by default when no

specific scheduler is specified. Several schedulers can be defined

and actually run in the same program. Schedulers thus define synchronized areas in which threads execute in cooperation.

Schedulers can run autonomously, in a preemptive way under the

supervision of the OS.

During their execution, threads created from special modules, called

native modules, can unlink from the scheduler to which they

are currently linked, and become free from any scheduler

synchronization. Such free threads are run by kernel threads of the

system. Of course, native modules and native threads have a meaning

only in the context of a preemptive OS.

Three kinds of variables exist in

Loft:

- Global variables that are C global variables shared by all the

threads. These variables are placed in the shared memory in which the

program executes.

- Local variables that are instances of local variables of

modules. These variables are local to threads. Their specific

characteristic is that their value is preserved across instants: they

have thus to be stored in the heap.

- Automatic variables which are defined in atomic instructions,

and which are automatically destroyed when the atomic instruction in

which they appear is left. The value of an automatic variable is

not preserved from one instant to the next. As in C, automatic

variables can be stored in registers or in the stack.

Note that non-native threads need a stack only for execution of their

atomic instructions. Indeed, atomic instructions cannot be preempted

during execution, by definition. Thus, in this case, the stack is

always in the same state at the beginning and at the end of the

execution of each atomic instruction. Thus, the content of the stack

needs not to be saved when a context switch occurs. The situation is

of course different for native threads: because they can be preempted

arbitrarily by the OS, they need a specific stack, provided by the

native thread in charge of running them.

The simplest way for threads to communicate is of course to use shared

variables. For example, a thread can set a boolean variable to

indicate that a condition is set, and other threads can test the

variable to know the status of the condition. This basic pattern works

if all threads accessing the variable are linked to the same

scheduler. Indeed, in this case atomicity of the accesses to the

variable is guaranteed by the cooperativeness of the scheduler. A

general way to protect data from concurrent accesses is thus to

associate it with one unique scheduler to which threads willing to

access the data should first link.

However, this solution does not work if some of the threads are

non-native and belong to different schedulers or are unlinked. This is

actually the standard situation in concurrent programming, where

protection is basically obtained with locks. Standard POSIX mutexes

can be used for this purpose.
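
As an illustration of protection by a standard POSIX mutex, the following minimal sketch lets two preemptively scheduled threads increment a shared counter. The names counter, increment_many and count_with_two_threads are hypothetical; only the pthread calls belong to the POSIX API.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

static long counter = 0;
static pthread_mutex_t counter_lock = PTHREAD_MUTEX_INITIALIZER;

/* Increment the shared counter n times, taking the lock around each
   access so that the read-modify-write sequence cannot be interleaved. */
static void *increment_many(void *arg)
{
    long n = *(long *)arg;
    for (long i = 0; i < n; i++) {
        pthread_mutex_lock(&counter_lock);
        counter++;
        pthread_mutex_unlock(&counter_lock);
    }
    return NULL;
}

/* Run two threads concurrently and return the final counter value. */
long count_with_two_threads(long per_thread)
{
    pthread_t t1, t2;
    counter = 0;
    int rc1 = pthread_create(&t1, NULL, increment_many, &per_thread);
    int rc2 = pthread_create(&t2, NULL, increment_many, &per_thread);
    assert(rc1 == 0 && rc2 == 0);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return counter;
}
```

Without the two lock operations, increments from the two threads could be lost, and the final value would depend on the choices of the OS scheduler.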

Events, described in the next section, give threads another

means of communication.

Events are synchronizing data basically used by threads to avoid

busy-waiting on conditions. An event is created in a scheduler which

is in charge of it during all its lifetime. An event is either present

or absent during each instant of the scheduler which manages it. It is

present if it is generated by the scheduler at the beginning of the

instant, or if it is generated by one of the threads executed by the

scheduler during the instant; it is absent otherwise. The presence

or the absence of an event can change from one instant to another, but

it cannot change during the course of an instant: all the threads

always "see" the presence or the absence of an event in the same way,

independently of the order in which they are scheduled. This is what

is meant by saying that events are broadcast.

Values can be associated to event generations; they are collected

during each instant and are available only during it.
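
The lifetime of an event and of its associated values can be pictured with a small data structure. The sketch below is a simplified model under stated assumptions, not the representation used by Loft: event_t, event_generate_value and event_end_of_instant are hypothetical names, values are plain integers, and their number per instant is bounded by an arbitrary constant. It only models the reset at the end of each instant; the guarantee that all threads see the same presence status is the responsibility of the scheduler and is not shown here.

```c
#include <stddef.h>

#define MAX_VALUES 16   /* arbitrary bound chosen for this sketch */

typedef struct {
    int present;              /* 1 if generated during the current instant */
    int values[MAX_VALUES];   /* values generated during the current instant */
    size_t count;             /* number of values collected so far */
} event_t;

/* Generate the event during the current instant, with a value. */
void event_generate_value(event_t *e, int value)
{
    e->present = 1;
    if (e->count < MAX_VALUES)
        e->values[e->count++] = value;
}

/* To be called by the scheduler at the end of each instant: the event
   becomes absent again and the collected values are discarded. */
void event_end_of_instant(event_t *e)
{
    e->present = 0;
    e->count = 0;
}
```

A thread that wants to keep a value beyond the instant in which it was generated has to copy it, since the collected values are dropped when the instant ends.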

The basic implementation of Loft is built on top of a C library of

native threads. Each scheduler and each instance of a native module is

implemented as a native thread. Threads which are instances of

non-native modules do not need the full power of a native thread to

execute. Actually, they don't need any specific stack as they can use

the one of the native thread of the scheduler to which they are

linked. In this way, the number of native threads can be limited to a

minimum. This is important when a large number of concurrent

activities is needed, as the number of native threads that can be

created in systems is usually rather low.

Unlinked threads and schedulers can be run in full parallelism, by

distinct processors. Programs can thus take benefit of SMP

architectures, and be sped up by multiprocessors.

Note that one gets program structures that conform to the so-called

M:N architectures: M linked threads and N schedulers running them. An

important point is that these architectures become programmable directly at language level, and not through the use of

a library (as for example in the Solaris system of Sun).

Modules are templates from which threads are created. A thread created

from a module is called an instance of it. A thread is always created

in a scheduler which is initially in charge of executing it. Modules

are made from atomic and non-atomic instructions that are defined in

the next sections.

A module can have parameters which define corresponding parameters of

its instances. Arguments provided when an instance is created are

associated to these parameters. A module can also have local

variables, new fresh instances of which are automatically created for

each thread created from it.

The syntax of

Loft is based on C and modules are defined in files

that can also contain C code. The syntax of modules is:

    module <kind> <name> ( <params> )
    <locals>
    <body>
    <finalizer>
    end module

- <kind> is the optional

native keyword. The case of

native modules is considered in Section 2.5 (Native Modules).

- <name> is the module name; it can be any C identifier.

- <params> is the list of parameters; it is empty if

there is no parameter at all.

- <locals> is the optional list of local variables

declarations. This list begins with the keyword

local.

- <body> is a sequence of instructions defining the module

body.

- <finalizer> is an optional atomic instruction executed

in case of forced termination (instruction

stop). This part

begins with the keyword finalize.

As an example, consider the module trace defined by:

    module trace (char *s)
    while (1) do
       printf ("%s\n",local(s));
       cooperate;
    end
    end module

This module is a non-native one and it has a parameter which is a

character string. The body is reduced to a single while

loop (actually, an infinite one) considered in Section 2.4.4 (Loops). At each instant, the loop prints the message given as

argument and cooperates. Note the presence of the do and

end keywords around the loop body; indeed, as in Loft

"{" and "}" are used to delimit atomic

instructions (see Section 2.3.4, C Statements), one needs a

different notation for loop bodies.

The parameter s is actually a local variable which is passed

at creation to the instances of trace. In Loft, all

accesses to local variables, and thus also to parameters, must be of

the form local(...) (local is actually a macro).

Threads can have local variables which keep their values across

instants. These are to be distinguished from static variables, which are

global to all the threads, and from automatic variables, which lose

their values across instants.

Let us return to the previous module

trace and suppose that

one wants to also print the instant number. A first idea would be to

define a variable inside the atomic instruction:

    module trace (char *s)
    while (1) do
       {
          int i = 0;
          printf ("%s (%d)\n",local(s),i++);
       }
       cooperate;
    end
    end module

This is not correct because the variable i is allocated on the stack at the beginning of the atomic instruction and vanishes at the end of it. Thus, a new instance of the variable is used at each instant, and the value printed is always 0.

A solution would be to declare a global C variable to store the

instant number:

int i = 0;

module trace (char *s)

while (1) do

printf ("%s (%d)\n",local(s),i++);

cooperate;

end

end module

This solution produces a correct output. However, i is a

global variable shared by all instances of trace. A local

variable should be used if one wishes that each instance owns a

distinct variable:

module trace (char *s)

local int i;

{local(i) = 0;}

while (1) do

printf ("%s (%d)\n",local(s),local(i)++);

cooperate;

end

end module

Note that local variables must always be accessed using the form

local(...). This allows one to access in the same context a

local variable and a global or automatic one having the same name.
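
The three kinds of variables can be pictured in plain C. The sketch below is only an analogy, not Loft code and not the way local(...) is actually implemented: trace_state, shared_i, and trace_step are hypothetical names, one call to trace_step stands for one instant of one instance, and the per-instance structure plays the role of the local variables.

```c
#include <stdio.h>

/* Hypothetical per-instance state, playing the role of Loft local variables. */
typedef struct {
    const char *s;  /* parameter, copied at creation */
    int i;          /* "local" variable: survives from one instant to the next */
} trace_state;

/* Global variable: one copy shared by every instance. */
int shared_i = 0;

/* Models one instant of one instance; returns the per-instance count printed. */
int trace_step(trace_state *st)
{
    int automatic_i = 0;  /* automatic variable: re-created at each call */

    printf("%s (local %d, global %d, automatic %d)\n",
           st->s, st->i, shared_i, automatic_i);
    shared_i++;
    return st->i++;
}
```

Calling trace_step alternately on two trace_state values makes each per-instance counter advance independently, while shared_i counts the calls made by both and the automatic variable always reads 0.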

Threads are instances of modules. Threads which are instances of a module m are created using the function m_create, which returns a new thread. Threads are of the pointer type thread_t. Note that, as in many C APIs, all types defined in Loft have names ending in "_t". Arguments given to m_create are passed to the created instance (as in C, arguments are passed by value).

For example, the following atomic instruction creates two instances of the previous module trace:

{

trace_create ("first thread");

trace_create ("second thread");

}

The output is:

first thread (0)

second thread (0)

first thread (1)

second thread (1)

first thread (2)

second thread (2)

...

Several points are important:

- Threads are created in the implicit scheduler, which is automatically created and started in every program (see Schedulers (7.2.4)).

- Threads are automatically started. This differs from Java, in which threads have to be explicitly started.

- Threads are always executed in the same order, which is the order of their creation. Thus, the previous output is the only one possible.

It is possible to create a thread in a specific scheduler using a creation function of the form m_create_in. For example, an instance of trace is created in the scheduler sched by:

trace_create_in (sched,"a thread");

Threads are always incorporated in the scheduler at the beginning of an instant, in order to avoid interference with already running threads. Moreover, if the current scheduler and the one in which the thread is created are the same, then this instant is the next one.

The running thread is returned by the function self().

The finalizer of a module is an atom executed when an instance of the module is forced to terminate by a stop instruction (defined in Control over Threads). The finalizer is only executed if the stopped thread has not already terminated. A typical use of a finalizer is deallocation, as in:

module m (...)

local resource_type resource;

{local(resource) = allocate ();}

...

{deallocate (local(resource));}

finalize

{deallocate (local(resource));}

end module

The allocated resource is deallocated in case of normal termination,

at the end of the module body. It is also deallocated if termination

is forced, as in this case the finalizer is executed.

An important point to note is that the thread executing a finalizer

is unspecified, as the instance considered is stopped. This means that

self cannot be safely used in finalizers.

The entry point of a program is a module named main. An instance of it is automatically created in the implicit scheduler. For example, the previous output could be produced by the execution of a program with the following module main:

module main ()

trace_create ("first thread");

trace_create ("second thread");

end module

As in C, arguments can be given to the module main,

following the standard argc/argv convention.

The global program does not terminate when the main thread terminates:

it is the programmer's responsibility to end the program (typically,

by calling the exit function of C).

In this section, one considers the atomic instructions, which terminate at the same instant they start. We have already seen two examples in previous sections: calls to the C function printf, and creation of instances of a module m with the m_create and m_create_in functions.

Creation

Schedulers can be created arbitrarily with the

scheduler_create atomic instruction. They are of the type

scheduler_t.

scheduler_t sched = scheduler_create ();

One instant of a scheduler is executed by the scheduler_react atomic instruction.

scheduler_react (sched);

The scheduler_react function allows users to build hierarchies of schedulers and to make them react following arbitrary strategies.

Implicit and Current Schedulers

A scheduler, called the implicit scheduler, always exists in every program. Two atomic instructions are available to get the implicit scheduler and the current scheduler, which is the one to which the executing thread is linked (the NULL value is returned if the thread is not linked to any scheduler).

scheduler_t implicit = implicit_scheduler ();

scheduler_t current = current_scheduler ();

Events are basically variables with three possible values: present, absent, or unknown. An event is created in a scheduler and managed by it during all its lifetime. The value of an event is automatically set to unknown at the beginning of each instant. There is a way for the programmer to set an event to present (namely, to generate it), but there is no way to set it to absent: only the system is able to decide that an event is absent. Actually, the system automatically decides that unknown events are absent when all threads have reached a cooperation point and when there is no thread waiting for an event which has been previously generated. The language definition ensures that, once unknown events are declared absent, all is finished for the current instant (in particular, no event can be generated anymore during the same instant). As a consequence, one can consider that:

- Events are non-persistent data which lose their

presence status at the beginning of each instant.

- Events are broadcast: all threads always "see" their presence/absence status in the same way, independently of the order in which they are scheduled.

- Reaction to the absence of an event is always postponed to the

next instant.

The last point is a major difference with the synchronous approach, in particular with the Esterel [9] language (see Related Work (9) for details).
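
The life cycle of an event status over one instant can be modeled in a few lines of C. This is a hedged sketch of the rules stated above, not the Loft implementation: event_status, event_model, and the three functions are hypothetical names.

```c
#include <assert.h>

/* Hypothetical model of the status of one event; not the Loft implementation. */
typedef enum { EVENT_UNKNOWN, EVENT_PRESENT, EVENT_ABSENT } event_status;

typedef struct {
    event_status status;
} event_model;

/* Beginning of an instant: the status is reset to unknown. */
void event_model_begin_instant(event_model *e)
{
    e->status = EVENT_UNKNOWN;
}

/* Generation by the program: the only way to make the event present. */
void event_model_generate(event_model *e)
{
    /* Nothing can be generated once absence has been decided. */
    assert(e->status != EVENT_ABSENT);
    e->status = EVENT_PRESENT;
}

/* End of the instant, decided by the system only: unknown becomes absent. */
void event_model_end_instant(event_model *e)
{
    if (e->status == EVENT_UNKNOWN)
        e->status = EVENT_ABSENT;
}
```

The model has no function that sets an event to absent on request, which mirrors the rule that only the system decides absence, and only at the end of the instant.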

Creation

An event is created in a scheduler whose instants determine its presence/absence status. event_create creates an event in the implicit scheduler; event_create_in creates an event in the scheduler given as argument. Events are of the type event_t.

event_t evt1 = event_create ();

event_t evt2 = event_create_in (sched);

The first event is created in the implicit scheduler while the second one

is created in the scheduler sched.

Generation

Generation of an event makes it present in the scheduler in charge of

it (the one in which the event has been created). If the thread

running the generation is linked to the scheduler of the event, then

the generation becomes immediately effective. In this case, all the

threads waiting for the event will be re-scheduled during the same

instant, and thus will see the event as present. If the scheduler of

the event and the one of the thread are different, then the

order to generate the event is sent to the scheduler of the

event. In this case, the event will be generated later, at the beginning of

the next instant of the target scheduler. Note that this can occur at

an arbitrary moment if the target scheduler is run asynchronously by a

dedicated native thread.

A value can be associated with an event generation; in this case one speaks of a valued generation of the event; otherwise, the generation is said to be pure. The value must be of a pointer type, or of a type that can be cast to such a type. All the values generated for the same event are collected during the instant and are available during this instant using the get_value non-atomic instruction (see section Generated Values (2.4.8)).

A pure generation of a present (that is, already generated) event has

no effect. This is of course different for a valued generation: then,

the value is appended to the list of values of the event. Note that,

as for the presence/absence status, the list of values of an event is

automatically reset to the empty list at the beginning of each

instant.

generate (evt);

generate_value (evt,val);

The first call is the pure generation of the event evt. The

second call generates the same event, but with the value val. Note that a valued generation with a null value is not

equivalent to a generation without any value.
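
Since generated values are pointers, a small integer has to be converted to a pointer and back. A minimal C sketch, assuming the usual conversion through intptr_t (implementation-defined, but supported on mainstream platforms); int_to_value and value_to_int are hypothetical helper names, not part of Loft:

```c
#include <stdint.h>

/* Hypothetical helpers: pack an int into a pointer-sized value and back. */
void *int_to_value(int n)
{
    return (void *)(intptr_t)n;
}

int value_to_int(void *v)
{
    return (int)(intptr_t)v;
}
```

With such helpers, generate_value (evt, int_to_value (42)) would carry the integer 42. On usual platforms int_to_value (0) yields a null pointer, which is still a value and therefore still a valued generation.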

2.3.3 Control over Threads


Three means of controlling thread execution are provided: one to stop the execution of a thread, which is thus forced to terminate definitively, and two to suspend and resume the execution of a thread. With these means, users can design their own scheduling strategies for controlling threads.

Stop

Execution of a thread is stopped with a call to the stop instruction. To stop a thread means that its execution is definitively abandoned. The effect of the stop instruction is to send to the scheduler of the thread given as argument the order to stop the thread. The order is always processed at the beginning of a new instant, in order to avoid interference with the other threads. If the executing thread and the stopped thread are both linked to the same scheduler, then the thread is stopped at the beginning of the next instant. As an example, a thread can stop itself by:

stop (self ());

Note that the thread terminates its execution for the current instant

and is only effectively stopped at the next instant; the

preemption is actually a "weak" one.

Suspend and Resume

Threads can be suspended and resumed using the two atomic instructions suspend and resume. Suspension of a thread means that its execution is suspended until it is resumed. Suspension and resumption are orders given to the scheduler running the considered thread, and are always processed at the beginning of new instants. As for stop, if the executing thread and the concerned thread are both linked to the same scheduler, then the execution status of the thread is changed at the beginning of the next instant.

suspend (main_thread);

resume (other_thread);

Orders are processed in turn. For example, the following sequence of instructions has no effect, as thread is resumed immediately after being suspended:

suspend (thread);

resume (thread);
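
The "orders processed in turn at the beginning of an instant" rule can be modeled as a queue in plain C. This is only a sketch under stated assumptions: scheduler_model, thread_model, order_model, and MAX_ORDERS are hypothetical, and nothing here reflects how a Loft scheduler is really implemented.

```c
#include <stddef.h>

typedef enum { ORDER_SUSPEND, ORDER_RESUME } order_kind;

typedef struct { int suspended; } thread_model;

typedef struct {
    order_kind kind;
    thread_model *target;
} order_model;

#define MAX_ORDERS 16  /* arbitrary capacity chosen for the sketch */

typedef struct {
    order_model pending[MAX_ORDERS];
    size_t count;
} scheduler_model;

/* Record an order; returns 0 if the capacity is exceeded, 1 otherwise. */
int scheduler_model_post(scheduler_model *s, order_kind kind, thread_model *t)
{
    if (s->count == MAX_ORDERS)
        return 0;
    s->pending[s->count].kind = kind;
    s->pending[s->count].target = t;
    s->count++;
    return 1;
}

/* Beginning of an instant: apply pending orders in the order they were posted. */
void scheduler_model_begin_instant(scheduler_model *s)
{
    for (size_t k = 0; k < s->count; k++)
        s->pending[k].target->suspended = (s->pending[k].kind == ORDER_SUSPEND);
    s->count = 0;
}
```

In this model, posting a suspend order followed by a resume order for the same thread leaves it running after the next instant begins, while a lone suspend order takes effect only once that instant begins.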

Basic atomic instructions are standard C blocks, made of C statements enclosed by "{" and "}". Of course, the C statements must terminate, and one must keep in mind that they should not take too much time to execute. Indeed, the other threads belonging to the same scheduler cannot execute until the current thread has finished executing the block (see the discussion on this point in Necessity of Cooperation (4.1)).

Almost any C code can syntactically appear in atomic instructions, between "{" and "}". It is the programmer's responsibility to avoid any statement that would prevent the complete execution of an atomic instruction. For example, setjmp/longjmp should absolutely be avoided. However, some forbidden statements are detected and lead to program rejection. This is the case of the C return statement. break statements not enclosed in a C block are also forbidden, as well as goto statements.

Procedure calls are considered as atomic instructions and can thus be used directly without being put inside a C block. This is however just a syntactic facility: the semantics of an atomic instruction made of a C procedure call is exactly that of a block containing it. For example, the module main of Main Module (12.1.2) could be equivalently written:

module main ()

{

trace_create ("first thread");

trace_create ("second thread");

}

end module

2.4 Non-atomic Instructions


In this section, one considers the non-atomic instructions. As opposed to atomic instructions, the execution of non-atomic instructions can last several instants.

The basic non-atomic instruction is the cooperate instruction, which returns control to the scheduler. When receiving control after a cooperate instruction, the scheduler knows that the executing thread has finished its execution for the current instant, and thus that it is not necessary to give it control again during the instant. When the thread receives control in a future instant, the cooperate instruction terminates and passes control in sequence. Execution of a cooperate instruction thus needs two instants to complete.

Execution of an await instruction suspends the executing thread until the event is generated. There is of course no waiting at all if the event is already present. Otherwise, the waiting can take just a portion of the current instant, if the awaited event is generated later in the same instant by a thread scheduled later; the waiting can also last several instants, or can even be infinite, if the awaited event is never generated.

await (event);

There is a way to limit the time during which the thread is suspended

waiting for an event. The limitation is expressed as a number of

instants. The thread is resumed when the limit is reached. Of course,

the waiting ends, as previously, as soon as the event is generated

before reaching the limit. For example, the following instruction

suspends the executing thread for at most 10 instants, waiting for the

event e.

await (e,10);

After resuming, one can know whether the limit was exceeded or the event was generated before the limit was reached, by examining the value returned by the predefined function return_code ():

- ETIMEOUT means that the limit has been reached.

- OK means normal termination because the awaited event was generated.

For example, the following code tests the presence of an event during

one instant:

await (e,1);

{

if (return_code () == ETIMEOUT)

printf ("was absent");

else

printf ("is present");

}

Note that the message "was absent" is printed at the instant

that follows the starting of the waiting, while "is present"

is printed at the same instant. Reaction to the absence of an event

(here, the printing action) cannot be immediate, but is always

postponed to the next instant.
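
The timing of a limited await can be traced with a small C model. This is a sketch under assumptions: await_model, await_outcome, and the MODEL_ codes are hypothetical (the actual values of OK and ETIMEOUT are not given here), and the rule that a limit of n resumes the thread n instants after the start is generalized from the one-instant case described above.

```c
/* Hypothetical codes; the actual values of OK and ETIMEOUT are not given here. */
enum { MODEL_OK = 0, MODEL_ETIMEOUT = 1 };

typedef struct {
    int code;        /* MODEL_OK or MODEL_ETIMEOUT */
    int resumed_at;  /* instant of resumption, counted from the start of the await */
} await_outcome;

/* present[t] is nonzero if the event is generated at instant t;
   the array must hold at least `limit` entries. */
await_outcome await_model(const int *present, int limit)
{
    await_outcome out;

    for (int t = 0; t < limit; t++) {
        if (present[t]) {
            out.code = MODEL_OK;
            out.resumed_at = t;   /* presence is seen in the same instant */
            return out;
        }
    }
    out.code = MODEL_ETIMEOUT;
    out.resumed_at = limit;       /* absence is only known one instant later */
    return out;
}
```

With a limit of 1, the model resumes at instant 0 when the event is present and at instant 1 when it is absent, which matches the "is present" and "was absent" cases of the example.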

The value returned by return_code () concerns the last

non-atomic instruction executed. For example, in the following code,

the printed messages concern f, not e:

await (e,1);

await (f,1);

{

if (return_code () == ETIMEOUT)

printf ("f was absent");

else

printf ("f is present");

}

The join instruction suspends the executing thread until another one, given as argument, has terminated; this is called joining the thread. Note that there are actually two ways for a thread to terminate: either because execution has returned, or because the thread has been stopped.

As for await, it is possible to limit the time during which the thread is suspended, and the return_code function is used in the same way to detect whether the limit was reached.

join (th1);

join (th2,10);

The first instruction waits unconditionally for the termination of th1,

while the second waits at most 10 instants to join th2.

There are two kinds of loops: while loops, which cycle while a boolean condition is true, and repeat loops, which cycle a fixed number of times. There is no equivalent of a for loop, as such loops would in most cases need an associated local variable; for loops are thus, when needed, to be explicitly coded.

While

While loops execute their bodies while a boolean condition is true.

while (1) do

printf ("loop!");

cooperate;

end

The value of the condition is only considered at the first instant and

when the body terminates. For example, the following loop never

terminates, despite the fact that the condition is false at some

instants:

{local(i) = 1;}

while (local(i)) do

{local(i) = 0;}

cooperate;

printf ("loop!");

cooperate;

{local(i) = 1;}

end

Instantaneous loops are loops with a non-cooperating body. This is for

example the case of:

while (1) do

printf ("loop!");

end

Instantaneous loops should generally be avoided as they prevent execution of the other threads (see Necessity of Cooperation (4.1)). Nevertheless, they can be useful in unlinked threads, or when the executing thread is the only one run by the scheduler (which is a rather special situation, indeed).

Repeat

A repeat loop executes its body a fixed number of times. It could be coded with a while loop and a counter implemented as a local variable; the repeat instruction avoids the use of such a local variable.

repeat (10) do

printf ("loop!");

cooperate;

end

The expression defining the number of cycles is evaluated when the

control reaches the loop for the first time.

The if statement can be used in atomic instructions like other standard C statements. However, when it is used in this context, both then and else branches must be atomic instructions. A non-atomic version of the if is available. The syntax is:

if (<expression>) then <instruction> else <instruction> end

For example, the instruction of Await (12.9.4), which tests for the presence of e during the current instant, can be equivalently written as:

await (e,1);

if (return_code () == ETIMEOUT) then

printf ("was absent");

else

printf ("is present");

end

Both then and else branches are optional. The boolean expression is evaluated once, when the control reaches the if for the first time. Its value determines which branch is chosen for execution. The chosen branch is then executed up to completion, which can take several instants, as it can be non-atomic.

For example, the following instruction prints at the next instant the value exp has at the current instant:

if (exp) then

cooperate;

printf ("true!");

else

cooperate;

printf ("false!");

end

The return statement of C cannot be used in atomic instructions, as it would prevent the instruction from terminating. However, it can be used as a non-atomic instruction to force the termination of the executing thread. For example, the following module terminates when the boolean expression exp becomes true:

module m ()

while (1) do

if (exp) then return; end

cooperate;

end

end module

Note that, as the return statement is forbidden in atomic

instructions, the following module is incorrect:

module bug ()

while (1) do

{if (exp) return;}

cooperate;

end

end module

The halt instruction never terminates. It is equivalent to:

while (1) do cooperate; end

Its basic use is to block the execution flow, as in:

if (!exp) then

generate (error);

halt;

end

...

In this code, the if instruction tests exp and prevents control from passing in sequence if it is false. In this case, an event is generated which should be used by another thread to recover from this situation.

The get_value instruction is the means to get the values associated with an event by its valued generations. The values are indexed and, when available, returned in a variable of type void**. The instruction get_value (e,n,r) is an attempt to get the value of index n generated for the event e during the current instant. If available, the value is assigned to r during the current instant; otherwise, r is set to NULL at the next instant, and the function return_code () returns the value ENEXT.

For example, the following instruction is an attempt to get the first value of evt (for simplicity, values are supposed to be of type int):

get_value (evt,0,res);

{

if (return_code () == ENEXT) printf ("there was no value! ");

else printf ("first value is %d ", (int)(*res));

}

The following module awaits an event and prints all the values

generated for it:

module print_all_values (event_t evt)

local int i,int res;

await (local(evt));

{local(i) = 0;}

while (1) do

get_value (local(evt), local(i), (void**)&local(res));

if (return_code () == ENEXT) then return;

else

{

printf ("value #%d: %d\n", local(i), local(res));

local(i)++;

}

end

end

end module

Note that the module always terminates at the next instant. This

should not be surprising: one must wait for the end of the current

instant to be sure that all the values have actually been collected.

A module can run another one, using the run non-atomic instruction. The calling thread suspends execution when encountering a run instruction. Then, an instance of the called module is created with the arguments provided. Finally, the calling thread is resumed when the instance of the called module terminates. Moreover, a thread which is stopped retransmits the stop order to the thread it is running, if there is one. Thus, the instance of a called module is automatically stopped when the calling thread is.

run mod1 ();

run mod2 ("mod2 called");

In this sequence, an instance of mod1 is first

executed. When it terminates (if it does), then an instance of mod2 is executed with a string argument. Finally, the sequence

terminates when the last instance does.

When created, a thread is always linked to a scheduler. During execution, the thread can link to other schedulers, using the link instruction. The effect of executing the instruction link (sched) is to extract the executing thread from the current scheduler and to add it to sched, in the state in which it left the current scheduler. Thus, after re-linking, the thread resumes execution in the new scheduler. This can be seen as a restricted migration of threads.

For example, the following module cycles between two schedulers. On each scheduler, it awaits an event and then prints a message. Then, if the two events are generated at each instant, the program prints "Ping Pong" forever:

module play ()

while (1) do

link (sched1);

await (evt1);

printf ("Ping ");

link (sched2);

await (evt2);

printf ("Pong\n");

end

end module

Native modules only have meaning in the context of a preemptive OS, as instances of native modules should be run by native threads belonging to the kernel. The presence of the native keyword just after the keyword module defines the module as native. The unlink non-atomic instruction unlinks the instance of a native module from the scheduler to which it was previously linked. After the unlink instruction, the thread is free from any synchronization. However, an unlinked thread can always re-link to a scheduler using the link non-atomic instruction (see Link (12.7.2)).

The unlink instruction is specific to native modules: programs where unlink instructions appear in non-native modules are rejected at compile time.

When unlinked, instances of native modules are autonomous, which means that they execute independently of any scheduler, at their own pace. For example, consider the following program, which creates two native threads, instances of the same native module pr:

module native pr (char *s)

unlink;

while (1) do

printf (local(s));

end

end module

module main ()

pr_create ("hello ");

pr_create ("world!\n");

end module

The two lists of messages produced are merged in an unpredictable way in the output: nondeterminism is introduced by unlinked instances of native modules. Note that the loop in pr is instantaneous; this is not a problem as the thread is unlinked. The granularity of each thread is, however, dependent on the OS, which can be unacceptable in some situations (see Granularity of Instants (4.2)).

Native modules are important for using standard blocking I/O in a cooperative context. For example, the following module uses the getchar function, which blocks execution of the calling thread until a character is read on the standard input:

module native AnalyseInput ()

unlink;

...

while (1) do

{

switch (getchar ()){

...

}

}

end

end module

The first instruction unlinks the thread from the current

scheduler. Then, the getchar function can be safely called

without any risk of blocking other threads.

The following module read_module implements a cooperative

read I/O, using the standard blocking read function. The

thread first unlinks from the scheduler, then performs the read, and

finally re-links to the scheduler:

module native read_module (int fd,void *buf,size_t count,ssize_t *res)

local scheduler_t sched;

{local(sched) = current_scheduler ();}

unlink;

{(*local(res)) = read (local(fd),local(buf),local(count));}

link (local(sched));

end module

Note that the pattern suggested by this example is a very general one,

useful to reuse code which was not designed to be run in a cooperative

context.

3 Examples - 1

One considers several examples of programming in Loft. First, some basic examples are given in Basic Examples (3.1). The complete programming of a little reflex game is considered in Reflex Game Example (3.2). Sieve algorithms are coded in Sieve Examples (3.3). Finally, data-flow programming is considered in Data-Flow Programming (3.4).

One considers several basic examples which highlight some aspects of the language. Section Mutual Stops (3.1.1) shows the benefit of a precise semantics. A notification-based communication mechanism is implemented in Wait-Notify (3.1.2). Barriers are considered in Synchronization Points (3.1.3). A reader/writer example is coded in Readers/Writers (3.1.4). Finally, a producer/consumer example is considered in section Producers/Consumers (3.1.5).

Let us consider a system made of two threads implementing two variants of a service, say a fast one and a slow one. Two events are used to start each of the variants. After a variant is chosen, the other one should become unavailable; that is, each variant should be able to stop the other variant. The coding of such an example is straightforward. First, the two threads fast and slow, and the two events start_fast and start_slow are declared:

thread_t fast, slow;

event_t start_fast, start_slow;

The thread fast awaits start_fast to start. Then,

before serving, it stops the slow variant. It is an instance of the

following module fast_variant:

module fast_variant ()

await (start_fast);

stop (slow);

< fast service >

end module

The thread slow is an instance of slow_variant

defined similarly by:

module slow_variant ()

await (start_slow);

stop (fast);

< slow service >

end module

The program consists in creating the two threads and the two events:

....

fast = fast_variant_create ();

slow = slow_variant_create ();

start_fast = event_create ();

start_slow = event_create ();

....

The question is now: what happens if both start_fast and start_slow are simultaneously present (this should not happen, but the question remains of what happens if by accident it is the case)? The answer is clear and precise, according to the semantics: the two variants are executed during only one instant and then both terminate at the next instant. Note that inserting a cooperate just after the stop in the two modules would prevent both threads from starting to serve.

Now, suppose that the same example is coded using standard pthreads, replacing events by condition variables and stop by pthread_cancel. The resulting program is deeply non-deterministic. Actually, one of the two threads could cancel the other and run up to completion. But the situation where each thread cancels the other is also possible in a multiprocessor context (where each thread is run by a distinct processor); however, in this case, both variants execute simultaneously until they are canceled, which produces unpredictable results.

Note that the possibility of mutual stopping exhibited in this example

cannot be expressed in a synchronous language, because it would be

rejected at compile time as having a causality cycle.

One considers thread synchronization using conditions, which basically correspond to (simplified) POSIX condition variables. A thread can wait for a condition to be set by another thread. The waiting thread is said to be notified when the condition is set. In a very first naive implementation, conditions are simply boolean variables (initially false). To notify a condition means to set the variable, and to wait for the condition means to test it.

int condition = 0;

void notify (int *condition)

{

(*condition) = 1;

}

module wait (int *cond)

while ((*local(cond)) == 0) do

cooperate;

end

{(*local(cond)) = 0;}

end module

Note that no mutex is needed: because of the cooperative model, simple boolean shared variables are sufficient to implement the atomic test-and-set operations needed. There is however a major drawback: the module wait performs busy-waiting and thus wastes CPU time.

3.1.2.1 Use of Events

Of course, events are the means to avoid busy-waiting. One now

considers conditions as made of a boolean variable with an associated

event:

typedef struct condition_t

{

int condition;

event_t satisfied;

}

*condition_t;

The notification sets the condition variable and generates

the associated event:

void notify (condition_t cond)

{

cond->condition = 1;

generate (cond->satisfied);

}

The wait module awaits the event while the condition

is not set:

module wait (condition_t cond)

while (local(cond)->condition == 0) do

await (local(cond)->satisfied);

if (local(cond)->condition == 0) then cooperate; end

end

{local(cond)->condition = 0;}

end module

Note that once the event is received, the condition must be tested again because another thread waiting for the same condition could have been scheduled before. In this case, the wake-up is not productive and the thread just has to cooperate. Without the second test, an instantaneous loop would occur in this situation.

The solution proposed does not allow single notifications, where only one thread is notified at a time. Actually, all threads waiting for the same condition are simultaneously awakened, but only one of them is able to proceed, the others returning to their previous state. This can be inefficient when several threads are often simultaneously waiting for the same condition.

3.1.2.2 Single Notification

To be able to notify threads one by one, an event is associated with each waiting thread. Thus, a condition now holds a list of events, managed in a FIFO way, one for each waiting thread. There are two notifications: notify_one, which notifies a unique thread, and notify_all, which, as previously, notifies all the threads. The notify_one function gets the first event (get) and generates it:

void notify_one (condition_t cond)

{

event_t event = get (cond);

if (event == NULL) return;

cond->condition = 1;

generate (event);

}

The function get returns NULL if no thread is currently

waiting on the condition. The notification is lost in this case (this

conforms to the POSIX specification). The notify_all function

notifies all the threads in one single loop:

void notify_all (condition_t cond)

{

int i, k = cond->length;

for (i = 0; i < k; i++) notify_one (cond);

}

The module wait now begins with the creation of a new

event which is stored (put) in the condition:

module wait (condition_t cond)

local event_t event;

while (local(cond)->condition == 0) do

{

local(event) = event_create_in (current_scheduler ());

put (local(cond),local(event));

}

await (local(event));

if (local(cond)->condition == 0) then cooperate; end

end

{local(cond)->condition = 0;}

end module

Notifying a single thread no longer makes all the other threads
waiting for the same condition compete, because they are actually
waiting on distinct events. Note that the choice of a FIFO strategy to
manage events in conditions is quite arbitrary (though reasonable).
This question is inherent to the notify_one primitive: how is the
notified thread chosen? Either the choice of the notified thread is
left unspecified, which introduces some nondeterminism, or the way
threads are managed is specified, which can be seen as an
over-specification. This issue can only be settled by application
programmers according to their needs.

3.1.3 Synchronization Points

A synchronization point is a given point in a program where different
threads must wait until a certain number of threads have reached it.
The type of synchronization points is defined by:

typedef struct sync_point_t
{
    int sync_count;
    int threshold;
    event_t go;
}
*sync_point_t;

If the threshold is reached, then the counter is reset to 0 and the

event go is generated. Thus, all the waiting threads can

immediately proceed. If the threshold is not reached, then the

counter is incremented and the thread awaits go to continue.

module sync (sync_point_t cond)
if (local(cond)->threshold > local(cond)->sync_count) then
    {local(cond)->sync_count++;}
    await (local(cond)->go);
else
    {
        local(cond)->sync_count = 0;
        generate (local(cond)->go);
    }
end
end module

3.1.4 Readers/Writers

One considers several threads that are reading and writing a shared
resource. The writers have priority over readers. Several readers can
simultaneously read the resource, while a writer must have exclusive
access to it (no other writer or reader) while writing. One adopts the
terminology of locks and denotes by rwlock_t the type of control
structures:

typedef struct rwlock_t
{
    int lock_count;
    int waiting_writers;
    event_t read_go;
    event_t write_go;
}
*rwlock_t;

The convention for lock_count is the following: 0 means that the lock
is held by nobody; -1 means that it is held by a writer; a positive
value is the number of readers currently reading.

3.1.4.1 Writers

In order to write, a writer must first run the module write_lock:

module write_lock (rwlock_t rw)
{local(rw)->waiting_writers++;}
while (local(rw)->lock_count) do
    await (local(rw)->write_go);
    if (local(rw)->lock_count) then cooperate; end
end
{
    local(rw)->lock_count = -1;
    local(rw)->waiting_writers--;
}
finalize
{
    local(rw)->waiting_writers--;
}
end module

When writing is finished, the writer must call the write_unlock function:

void write_unlock (rwlock_t rw)
{
    rw->lock_count = 0;
    if (!rw->waiting_writers) generate (rw->read_go);
    else generate (rw->write_go);
}

write_unlock should also be called in the finalizer of the writer

in order to release the lock when the writer is forced to terminate.

3.1.4.2 Readers

A reader can read if no writer is currently writing (that is,
lock_count is not negative) and if there is no waiting writer:

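module read_lock (rwlock_t rw)
while ((local(rw)->lock_count < 0) || (local(rw)->waiting_writers)) do
    await (local(rw)->read_go);
    /* still blocked: wait for the next instant instead of looping instantaneously */
    if ((local(rw)->lock_count < 0) || (local(rw)->waiting_writers)) then cooperate; end
end
{local(rw)->lock_count++;}
end module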

After reading or when stopped, a reader should call the function read_unlock:

void read_unlock (rwlock_t rw)
{
    rw->lock_count--;
    if (!rw->lock_count) generate (rw->write_go);
}

3.1.5 Producers/Consumers

3.1.5.1 Unique area

One implements the simplest form of a producers/consumers system, where
several threads are processing values placed in a buffer. Each thread
gets a value from the buffer, processes it, and then puts the result
back in the buffer. To simplify, one considers that values are integers
that are decremented each time they are processed. Moreover, the
processing terminates when 0 is reached. First, a buffer, implemented
as a list of type channel, and an event are defined:

channel buffer;

event_t new_input;

The processing module cyclically gets a value from the buffer, tests
whether it is zero, and if it is not, processes the value. When
processing is finished, the value decremented by one is put back in the
buffer. The event new_input is used to avoid busy-waiting while the
buffer is empty (one considers that the buffer is rarely empty; so, a
unique event is preferred to the single notifications of section 3.1.2,
Wait-Notify).

module process ()
local int v;
while (1) do
    while (buffer->length == 0) do
        await (new_input);
        if (buffer->length == 0) then cooperate; end
    end
    {local (v) = get (buffer);}
    if (local(v) == 0) then return; end
    < processing the value >
    {local(v)--;}
    put (buffer,local(v));
    generate (new_input);
end
end module

All accesses to the shared buffer are atomic under the condition that

all instances of process are created in the same

scheduler. This is of course the case when the implicit scheduler is

the unique scheduler in use.

3.1.5.2 Two areas

One now considers a situation where there are two buffers, in and out,
and a pool of threads that take data from in, process them, and then
put the results in out. A distinct scheduler is associated with each
buffer.

channel in, out;

scheduler_t in_sched, out_sched;

event_t new_input, new_output;

The module process gets values from in, avoiding

busy-waiting by using the event new_input. A new thread

instance of process_value is run for each value.

module process ()
while (1) do
    if (in->length > 0) then
        run process_value (get (in));
    else
        await (new_input);
        if (in->length == 0) then cooperate; end
    end
end
end module

The process_value module processes its parameter and then links to
out_sched to deliver the result in out. At delivery, the event
new_output is generated to awaken threads possibly waiting for out to
be filled.

module process_value (int v)

< processing the value >

link (out_sched);

put (out,local(v));

generate (new_output);

end module

This code shows a way to manage shared data using schedulers. The use
of standard locks is replaced by linking operations. Of course, locks
still exist in the implementation and are used when linking operations
are performed, but they are totally hidden from the programmer, who can
thus reason in a more abstract way, in terms of linking actions to
schedulers, and not in terms of low-level lock acquire and release
actions.

In this section, one considers the example of a little game for
measuring the reactivity of users. This example, taken from Esterel,
shows how Loft can be used for basic reactive programming.

The purpose of the game is to measure the reflexes of the user. Four
keys are used. The c key means "put a coin", the r key means "I'm
ready", the e key means "end the measure" and the q key means "quit the
game". After putting a coin, the user signals the game that he is
ready; then, he waits for the GO! prompt; from that moment, the measure
starts and it lasts until the user ends it. After a series of four
measures, the game outputs the average score of the user. The game is
over when an error situation is encountered. There are two such
situations: when the player takes too much time to press a button (the
player has abandoned); or when e is pressed before GO! (this is
considered a cheating attempt).

First, one codes a preemption mechanism which will be used for

processing error situations.