Participants: Francois Bremond, Nadia Zouba, Van Thinh Vu, Monique Thonnat.

In the framework of the GER'HOME project, we have worked on multi-sensor analysis for monitoring the everyday activities of elderly people, in order to improve their living conditions at home and to reduce the costs of long hospitalizations. These techniques are meant to allow elderly people to stay safely at home and to benefit from automated medical supervision, and should delay their entry into nursing homes. Three issues need to be addressed to achieve these goals. First, alarming events or situations (e.g., falls) need to be detected. Second, the degree of frailty of elderly people should be assessed objectively, based on multi-sensor analysis and human activity recognition; this automated assessment should follow a well-defined geriatric protocol. Third, the behavior profile of a person (their usual and average activities) should be identified, and any deviation from this profile (missing activities, disorders, interruptions, repetitions, inactivity) should then be detected. Moreover, to better understand the behavior disorders of elderly people, we propose to build a library of reference behaviors (containing videos, other raw signals and various metadata) characterizing people's frailty. The objective of this work is thus the early detection of deteriorating health status and the early diagnosis of illness.
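The third issue (learning a behavior profile and detecting deviations from it) can be illustrated with a minimal sketch. It assumes, purely for illustration, that the profile is summarized as the mean and standard deviation of the daily count of each recognized activity, and that only two kinds of deviation are checked: a usual activity that is missing, and an activity repeated far more often than usual. The function names, activity labels and thresholds (`k`, `min_sigma`) are hypothetical; this is not the GER'HOME method.

```python
from statistics import mean, pstdev


def build_profile(history):
    """Summarize past days as {activity: (mean daily count, std of daily count)}.

    `history` is a list of dicts, one per day, mapping an activity name to the
    number of times it was recognized that day; an activity absent from a day
    counts as 0 for that day.
    """
    activities = {a for day in history for a in day}
    profile = {}
    for a in activities:
        counts = [day.get(a, 0) for day in history]
        profile[a] = (mean(counts), pstdev(counts))
    return profile


def detect_deviations(profile, today, k=2.0, min_sigma=0.5):
    """Return (activity, kind) pairs where today's counts depart from the profile.

    "missing": a usually performed activity (mean >= 1) did not occur today.
    "repetition": the activity occurred more than k standard deviations above
    its mean; min_sigma keeps a perfectly regular habit (std = 0) from being
    flagged for a single extra occurrence.
    """
    deviations = []
    for a, (mu, sigma) in sorted(profile.items()):
        count = today.get(a, 0)
        if count == 0 and mu >= 1:
            deviations.append((a, "missing"))
        elif count > mu + k * max(sigma, min_sigma):
            deviations.append((a, "repetition"))
    return deviations


history = [
    {"prepare_meal": 2, "wash_dishes": 1},
    {"prepare_meal": 2, "wash_dishes": 1},
    {"prepare_meal": 3, "wash_dishes": 1},
]
profile = build_profile(history)
print(detect_deviations(profile, {"prepare_meal": 5}))
# [('prepare_meal', 'repetition'), ('wash_dishes', 'missing')]
```

A real profile would also need to cover interruptions, disorders and inactivity, which a simple count per day cannot capture.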

In this work, two experiments have been performed, as described below.

To this end, we propose a video monitoring platform fed by a network of cameras and contact sensors. The platform performs three main tasks: (1) people detection, tracking and video event recognition; (2) sensor stream filtering and contact event recognition; (3) multimodal event recognition. The detection and tracking task detects and tracks the mobile objects (mostly people) evolving in the scene. For each tracked mobile object, the primitive event recognition task recognizes the events related to that object based on its visual features. Similarly, the contact event recognition task recognizes the events characterized by the contact information associated with the tracked objects. Finally, the multimodal event recognition task combines the previous video and contact events in order to recognize more complex events. These complex events are specified by medical experts using a user-friendly language.
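Before complex events can be recognized, the outputs of the video and contact recognition tasks have to be brought onto a common timeline. A minimal sketch, assuming that each task emits its events already sorted by timestamp, merges the two streams into one time-ordered stream that a multimodal recognizer could then scan. The `Event` fields and the event names are hypothetical.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Event:
    # Only the timestamp takes part in ordering comparisons.
    time: float  # seconds since the start of the recording
    name: str = field(compare=False)
    source: str = field(compare=False)  # "video" or "contact"


def merge_streams(video_events, contact_events):
    """Merge two timestamp-sorted event streams into one sorted list."""
    return list(heapq.merge(video_events, contact_events))


video = [Event(1.0, "Inside_zone_kitchen", "video"), Event(4.0, "Standing", "video")]
contact = [Event(2.5, "Cupboard_open", "contact"), Event(5.0, "Fridge_open", "contact")]
for e in merge_streams(video, contact):
    print(e.time, e.source, e.name)
```

`heapq.merge` consumes its inputs lazily, so the same approach would also work on live streams rather than lists.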


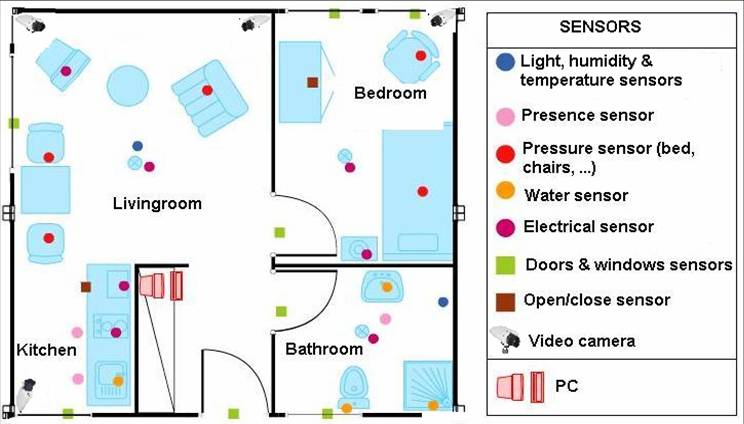

Our main goal is to improve the techniques of automatic data interpretation using complementary sensors installed in the apartment such as video cameras and contact sensors (installed on the doors, on the windows, in the kitchen cabinets and pressure sensors installed on the chairs). The proposed monitoring platform takes three types of input: (1) video stream(s) acquired by video camera(s), (2) data resulting from contact sensors embedded in the home infrastructure, and (3) a priori knowledge concerning event models and the 3D geometric and semantic information of the observed environment. The output of the platform is the set of recognized events at each instant.

Figure 2 illustrates the result of the detection, classification and tracking of a person in the GER'HOME laboratory.

In Figure 2(a), the detected moving pixels are highlighted in white and clustered into a mobile object enclosed in an orange bounding box. In Figure 2(b), the mobile object is classified as a person, and a 3D parallelepiped matching the person indicates his/her position and orientation. Figure 2(c) shows the individual identifier (IND 0) and a colored box associated with the tracked person. Figure 3 illustrates the recognition of the primitive state "Inside_zone" in the GER'HOME laboratory.


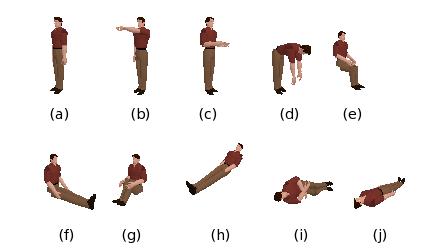

We have modeled a set of activities of interest for elderly people at home by combining video cameras with environmental sensors. However, some activities at home require a finer description of the human body, such as its posture. For this purpose, we have used a human posture recognition algorithm to detect, in real time, the posture of a person evolving in the scene. This algorithm relies on predefined 3D models of human postures. We have adapted it to homecare applications by modeling a set of human postures that are useful for detecting activities at home and critical situations for elderly people (e.g., falls). Accordingly, we have modeled ten key 3D human postures, displayed in Figure 4. Each of these postures plays a significant role in modeling and recognizing activities at home. For example, the "standing with arm up" posture is used to detect when a person reaches and opens kitchen cupboards, and to assess his/her ability to do so. The "standing with hands up" posture is used to detect when a person is carrying an object such as plates.
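The core idea of model-based posture recognition, choosing the predefined posture whose model best explains the observed silhouette, can be sketched as follows. This is a simplified stand-in, not the algorithm used in GER'HOME: it assumes that each 3D posture model has already been projected into the camera view as a binary mask of the same size as the detected silhouette, and it scores candidates with intersection-over-union. The posture names and the tiny 4×4 masks are hypothetical.

```python
def iou(mask_a, mask_b):
    """Intersection-over-union of two equally sized binary masks (lists of rows of 0/1)."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            if a and b:
                inter += 1
            if a or b:
                union += 1
    return inter / union if union else 0.0


def recognize_posture(silhouette, projected_models):
    """Pick the posture whose projected model silhouette overlaps the detection best."""
    scores = {name: iou(silhouette, mask) for name, mask in projected_models.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Hypothetical projected model silhouettes (1 = foreground pixel).
models = {
    "standing": [[0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0]],
    "lying":    [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]],
    "sitting":  [[0, 0, 0, 0], [0, 1, 0, 0], [0, 1, 1, 0], [0, 1, 0, 0]],
}
detected = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 0]]
print(recognize_posture(detected, models))  # ('lying', 0.75)
```

In practice the costly part is generating the projected silhouettes for many body positions and orientations; the matching itself stays a simple argmax over scores.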

To validate our models, we have tested a set of human activities in the GER'HOME laboratory. Two experiments have been performed: the first one with actors, and the second one with real elderly people.

First experiment.

In the first experiment, we acquired ten video sequences with a single human actor. Each video lasts about ten minutes and contains about 4800 frames (about eight frames per second). In this experiment, we tested some normal activities, such as opening and closing kitchen cupboards, using the microwave and warming up a meal.

To model the activities of interest specified by medical experts, we have defined three composite events: Use_food, Use_dishes and Prepare_meal. For example, meal preparation entails at least the detection of a person in motion in the kitchen and the use of cabinets where food, plates and/or utensils are stored. Presence in the kitchen can be indicated by the detection of a person (video camera) in the kitchen lasting for a minimum duration, whereas the use of meal ingredients can be indicated by the use of a food storage cupboard or of the refrigerator (contact sensors), etc. The multimodal (contact-video) event recognition algorithm recognizes which complex events occur by combining the primitive video events detected by the video detection module with the contact events detected by the contact detection module (watch the raw video, 3.7 MB, or the video with text annotations, 2.7 MB).
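The Prepare_meal example can be read as a rule over time intervals. The sketch below assumes that video recognition yields the intervals during which the person is in the kitchen, that contact recognition yields timestamped openings of sensors, and that Prepare_meal holds when a kitchen stay lasting at least a minimum duration contains at least one opening of a food storage cupboard or of the refrigerator. The sensor names and the 60-second threshold are hypothetical; in the platform itself, such models are written by medical experts in the dedicated event description language, not in Python.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    start: float  # seconds
    end: float


FOOD_STORAGE = {"food_cupboard", "fridge"}  # hypothetical contact sensor names


def prepare_meal(kitchen_presence, contact_events, min_duration=60.0):
    """Return the kitchen-presence intervals recognized as Prepare_meal.

    kitchen_presence: list of Interval (video: person detected in the kitchen zone)
    contact_events:   list of (time, sensor_name) (contact: door or cabinet opened)
    """
    recognized = []
    for stay in kitchen_presence:
        long_enough = stay.end - stay.start >= min_duration
        used_food = any(
            sensor in FOOD_STORAGE and stay.start <= t <= stay.end
            for t, sensor in contact_events
        )
        if long_enough and used_food:
            recognized.append(stay)
    return recognized


presence = [Interval(0.0, 30.0), Interval(100.0, 400.0)]
contacts = [(10.0, "fridge"), (150.0, "food_cupboard"), (200.0, "front_door")]
print(prepare_meal(presence, contacts))  # [Interval(start=100.0, end=400.0)]
```

The first stay is rejected even though the fridge was opened, because it is shorter than the minimum duration; this mirrors the two conditions (presence lasting long enough, use of food storage) stated in the text.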


We have also tested two abnormal activities, "fainting" and "falling down", shown in Figures 7 and 8.

Second experiment.

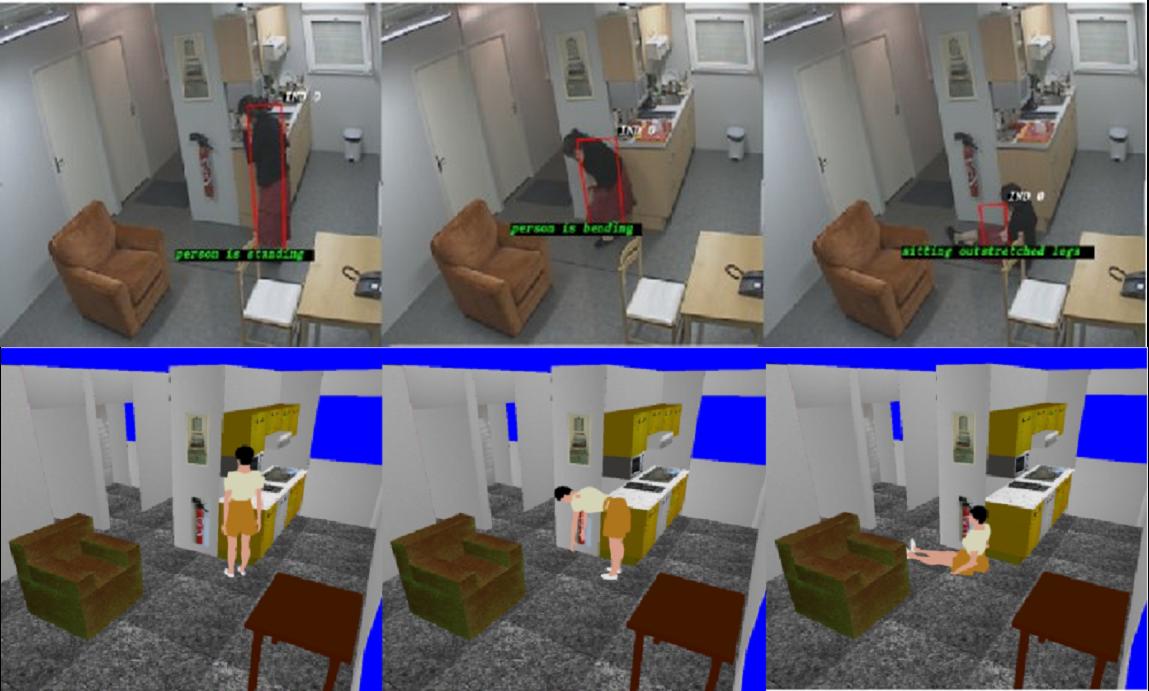

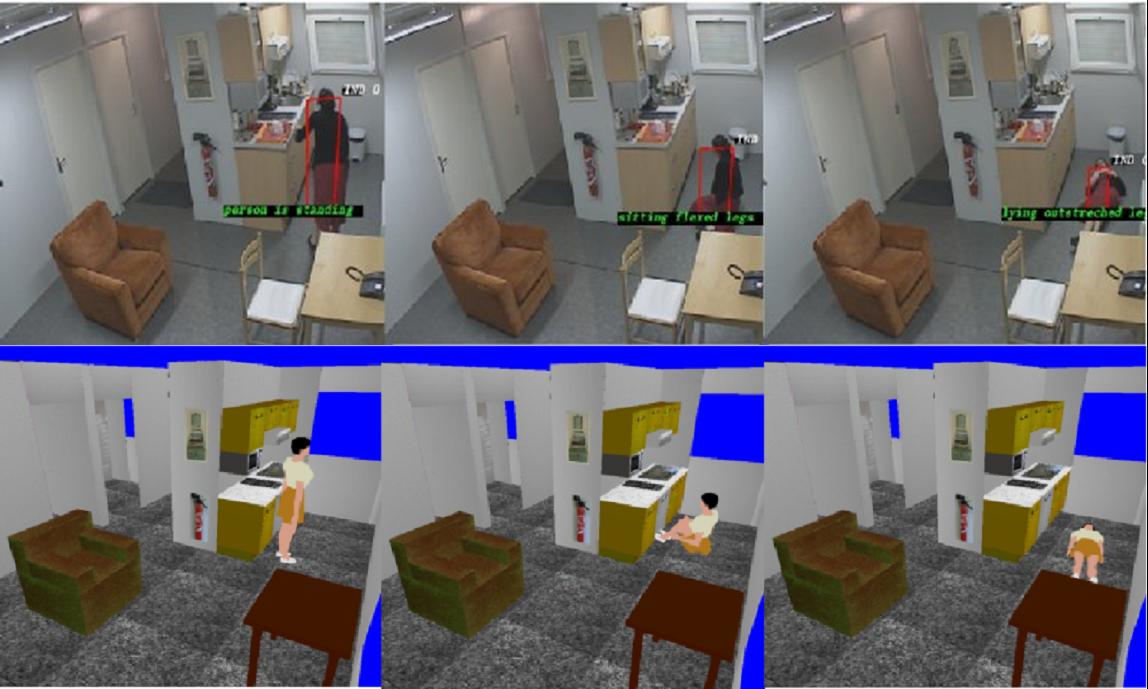

In the second experiment, fourteen volunteers (aged from 60 to 85 years) were observed while evolving in the laboratory, each for 4 hours, and 14 video scenes were acquired by 4 video cameras (about ten frames per second). The volunteers were asked to perform a set of household activities, such as preparing a meal, taking a meal, washing dishes, cleaning the kitchen and watching TV. Each volunteer was alone in the laboratory during the experiment. Figure 9 shows the recognition of the "bending in the kitchen" activity and its 3D visualization. Figure 10 shows the recognition of the "taking meal" activity and its 3D visualization.


In total, the dataset contains about 6 GB of video per camera and per person (4 hours, about 100,000 frames), recorded by 4 cameras for 11 persons: 6 GB × 4 × 11 ≈ 264 GB, i.e. on the order of 300 GB of video data, organized into several directories (see the README file). Access is restricted and not yet available; please download the form and send it back to us (Annie.Ressouche@inria.fr) to obtain the password and the resource.

Each directory contains:

- A set of video data acquired by the 4 video cameras of the GER'HOME laboratory.

- A data file for the non-video (environmental) sensors: OPENCLOSE, PRESENCE, WATER and USAGE sensors.

- The results of the INRIA-PULSAR tracking algorithm, stored in an XML file containing the 2D and 3D information for each mobile object (e.g., a person).

- The results of the INRIA-PULSAR event recognition algorithm, stored in an XML file.

- A ground-truth annotation at the event recognition level (stored in an XML file) for the 2008-03-31 video from camera2.

For more information, see the publications and the GER'HOME project website.