Disclaimer

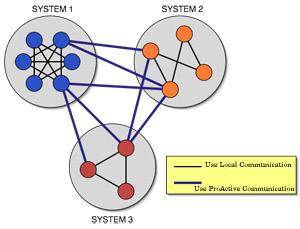

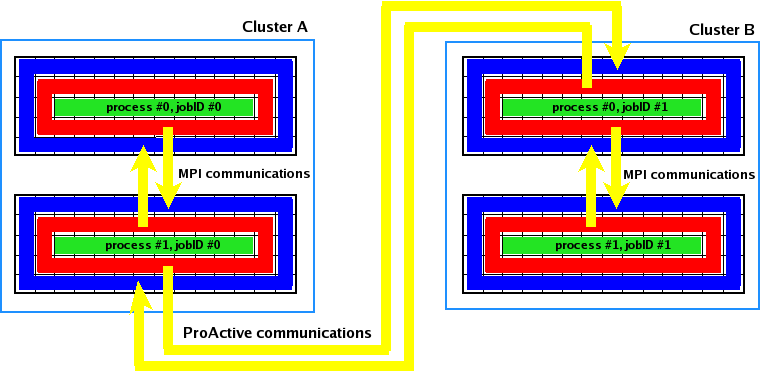

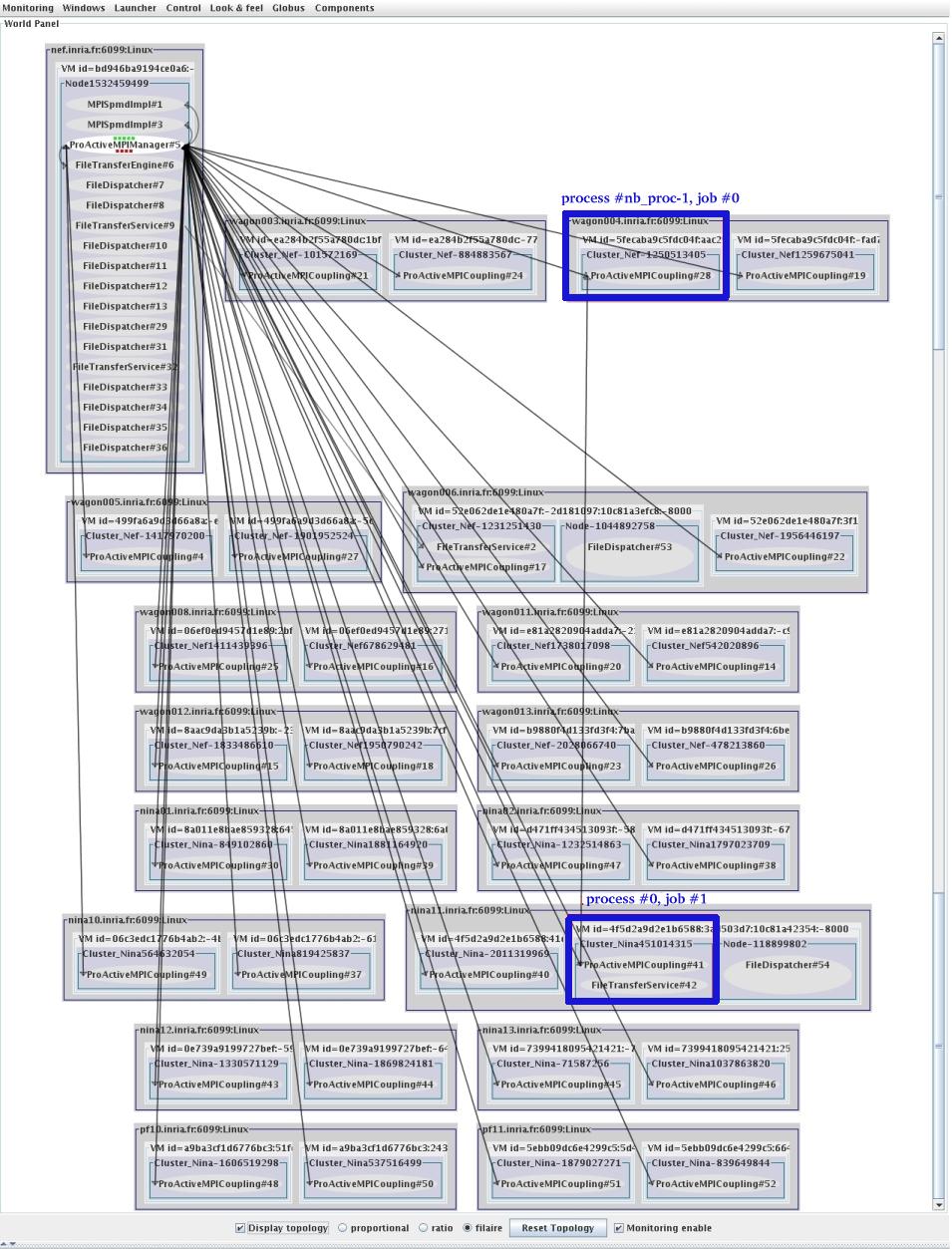

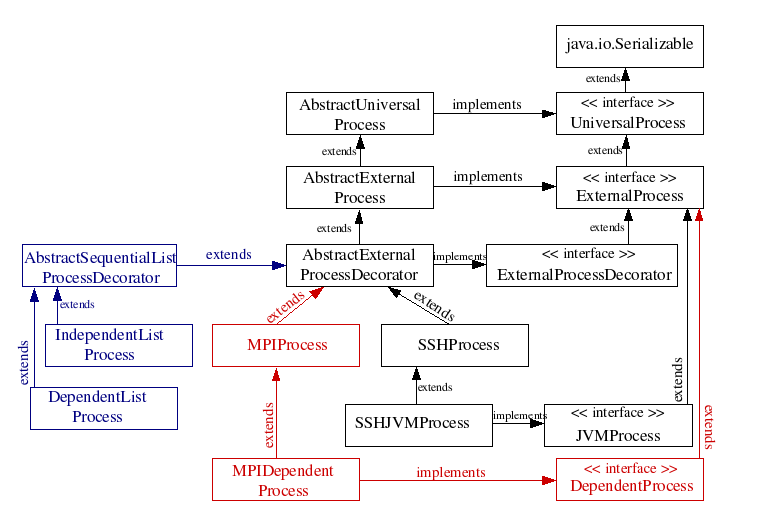

ProActive is a GRID middleware Java library for parallel, distributed, and concurrent computing, also featuring mobility and security in a uniform framework. With a reduced set of simple primitives, ProActive provides a comprehensive API allowing to simplify the programming of applications that are distributed on Local Area Network (LAN), on cluster of workstations, on P2P desktop Grids, or on Internet Grids. The library is based on an Active Object pattern that is a uniform way to encapsulate:

a remotely accessible object,

a thread as an asynchronous activity,

an actor with its own script,

a server of incoming requests,

a mobile and potentially secure entity.

On top of those basic features, ProActive also includes advanced aspects, such as:

Typed Group Communications,

Object-Oriented SPMD,

Distributed and Hierarchical Components,

Security,

Fault Tolerance with checkpointing,

a P2P infrastructure,

a powerful deployment model based on XML descriptors,

File transfer capabilities over the Grid,

a Graphical User Interface: IC2D.

ProActive is only made of standard Java classes, and requires no changes to the Java Virtual Machine, no preprocessing or compiler modification; programmers write standard Java code. Based on a simple Meta-Object Protocol, the library is itself extensible, making the system open for adaptations and optimizations. ProActive currently uses the RMI Java standard library as default portable transport layer, and also provides optimized RMI with IBIS, and HTTP transport.

ProActive features several optimizations improving performance. For instance, whenever two active objects are located within the same virtual machine, a direct communication is always achieved, without going through the network stack. This optimization is ensured even when the co-location occurs after a migration of one or both of the active objects.

ProActive and the (de facto) Standards: ProActive has an architecture that allows the library to interoperate with various official or de facto standards:

Web Service Exportation,

HTTP Transport, RMI/SSH tunneling Transport,

ssh, scp, rsh, rcp,

Globus GT2, GT3 and GT4, Unicore, GLite, ARC (NorduGrid), GSI-SSH,

LSF, PBS, Sun Grid Engine, OAR.

|

Team Leader:

Denis Caromel

INRIA

2004, Route des Lucioles

BP 93

06902 Sophia Antipolis Cedex

France

phone: +33 492 387 631

fax: +33 492 387 971

|

|

OASIS Team

|

Past and External Contributors

|

Public questions/comments/discussions should be posted on the ProActive public mailing list

Mailing list archive at

http://www.objectweb.org/wws/arc/proactive

Post bugs to ProActive bug-tracking system GForge

Table of Contents

Part I. Introduction

- Chapter 1. Principles

- Chapter 2. ProActive Installation

- 2.1. Quick Start

- 2.2. Download and expand the archive

- 2.3. Run a few examples for testing

- 2.4. CLASSPATH to set when writing application using ProActive

- 2.5. Create a java.policy file to set permissions

- 2.6. Create a log4j configuration file

- 2.7. ProActive and IDEs (Eclipse, ...)

- 2.8. Troubleshooting and support

- Chapter 3. ProActive Trouble Shooting

Part II. Guided Tour and Tutorial

- Chapter 4. Introduction to the Guided Tour and Tutorial

- Chapter 5. Introduction to ProActive Features

- Chapter 6. Hands-on programming

- Chapter 7. PI (3.14...) - Step By Step

- Chapter 8. SPMD PROGRAMMING

- Chapter 9. The nbody example

- Chapter 10. C3D - from Active Objects to Components

- Chapter 11. Guided Tour Conclusion

Part III. Programming

- Chapter 12. ProActive Basis, Active Object Definition

- Chapter 13. Active Objects: creation and advanced concepts

- 13.1. Instantiation-Based Creation

- 13.2. Object-Based Creation

- 13.3. Specifying the activity of an active object

- 13.4. Restrictions on reifiable objects

- 13.5. Using the Factory Method Design Pattern

- 13.6. Advanced: Customizing the Body of an Active Object

- 13.7. Advanced: Role of the elements of an active object

- 13.8. Asynchronous calls and futures

- 13.9. Automatic Continuation in ProActive

- 13.10. The Hello world example

- Chapter 14. Typed Group Communication

- Chapter 15. OOSPMD

- Chapter 16. Active Object Migration

- Chapter 17. Exception Handling

- Chapter 18. Branch and Bound API

- Chapter 19. High Level Patterns -- The Calcium Skeleton Framework

Part IV. Deploying

- Chapter 20. ProActive Basic Configuration

- Chapter 21. XML Deployment Descriptors

- Chapter 22. Variable Contracts for Descriptors

- Chapter 23. ProActive File Transfer Model

- Chapter 24. Using SSH tunneling for RMI or HTTP communications

- Chapter 25. Fault-Tolerance

- Chapter 26. Technical Service

- Chapter 27. ProActive Grid Scheduler

Part V. Composing

- Chapter 28. Components introduction

- Chapter 29. An implementation of the Fractal component model geared at Grid Computing

- Chapter 30. Configuration

- Chapter 31. Collective interfaces

- Chapter 32. Architecture Description Language

- Chapter 33. Component examples

- Chapter 34. Component perspectives: a support for our research work

Part VI. Advanced

- Chapter 35. ProActive Peer-to-Peer Infrastructure

- Chapter 36. Load Balancing

- Chapter 37. ProActive Security Mechanism

- Chapter 38. Exporting Active Objects and components as Web Services

- 38.1. Overview

- 38.2. Principles

- 38.3. Pre-requisite: Installing the Web Server and the SOAP engine

- 38.4. Steps to expose an active object or a component as a web services

- 38.5. Undeploy the services

- 38.6. Accessing the services

- 38.7. Limitations

- 38.8. A simple example: Hello World

- 38.9. C# interoperability: an example with C3D

- Chapter 39. ProActive on top of OSGi

- Chapter 40. An extended ProActive JMX Connector

- Chapter 41. Wrapping MPI Legacy code

Part VII. Graphical User Interface (GUI) and tools

Part VIII. Extending ProActive

- Chapter 45. How to write ProActive documentation

- Chapter 46. Adding Grahical User Interfaces and Eclipse Plugins

- Chapter 47. Developing Conventions

- Chapter 48. ProActive Test Suite API

- Chapter 49. Adding a Deployment Protocol

- Chapter 50. How to add a new FileTransfer CopyProtocol

- Chapter 51. Adding a Fault-Tolerance Protocol

- Chapter 52. MOP: Metaobject Protocol

Part IX. Back matters

- Appendix A. Frequently Asked Questions

- A.1. Running ProActive

- A.2. General Concepts

- A.2.1.

How does the node creation happen? - A.2.2.

How does the RMI Registry creation happen? - A.2.3.

What is the class server, why do we need it? - A.2.4.

What is a reifiable object? - A.2.5.

What is the body of an active object? What are its local and remote representations? - A.2.6.

What is a ProActive stub? - A.2.7.

Are the call to an Active Object always asynchronous?

- A.2.1.

- A.3. Exceptions

- A.3.1.

Why do I get an exception java.lang.NoClassDefFoundError about asm? - A.3.2.

Why do I get an exception java.lang.NoClassDefFoundError about bcel? - A.3.3.

Why do I get an exception java.security.AccessControlException access denied? - A.3.4.

Why do I get an exception when using Jini? - A.3.5.

Why do I get a java.rmi.ConnectException: Connection refused to host: 127.0.0.1 ?

- A.3.1.

- A.4. Writing ProActive-oriented code

- A.4.1.

Why aren't my object's properties updated? - A.4.2.

How can I pass a reference on an active object or the difference between this and ProActive.getStubOnThis()? - A.4.3.

How can I create an active object? - A.4.4.

What are the differences between instantiation based and object based active objects creation? - A.4.5.

Why do I have to write a no-args constructor? - A.4.6.

How do I control the activity of an active object? - A.4.7.

What happened to the former live() method and Active interface? - A.4.8.

Why should I avoid to return null in methods body? - A.4.9.

How can I use Jini in ProActive? - A.4.10.

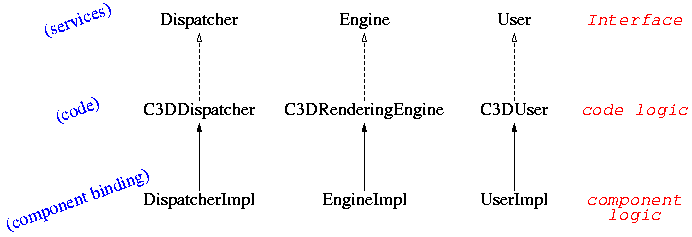

How do I make a Component version out of an Active Object version? - A.4.11.

How can I use Jini in ProActive? - A.4.12.

Why is my call not asynchronous?

- A.4.1.

- A.5. Deployment Descriptors

- A.5.1.

What is the difference between passing parameters in Deployment Descriptor and setting properties in ProActive Configuration file? - A.5.2.

Why do I get the following message when parsing my xml deployment file: ERROR: file:~/ProActive/descriptor.xml Line:2 Message:cvc-elt.1: Cannot find the declaration of element 'ProActiveDescriptor'

- A.5.1.

- Appendix B. Reference Card

- B.1. Main concepts and definitions

- B.2. Main principles: asynchronous method calls and implicit futures

- B.3. Explicit Synchronization

- B.4. Programming AO Activity and services

- B.5. Reactive Active Object

- B.6. Service methods

- B.7. Active Object Creation:

- B.8. Groups:

- B.9. Explicit Group Synchronizations

- B.10. OO SPMD

- B.11. Migration

- B.12. Components

- B.13. Security:

- B.14. Deployment

- B.15. Exceptions

- B.16. Export Active Objects as Web services

- B.17. Deploying a fault-tolerant application

- B.18. Peer-to-Peer Infrastructure

- B.19. Branch and Bound API

- B.20. File Transfer Deployment

- Appendix C. Files of the ProActive source base cited in the manual

- Bibliography

- Index

List of Figures

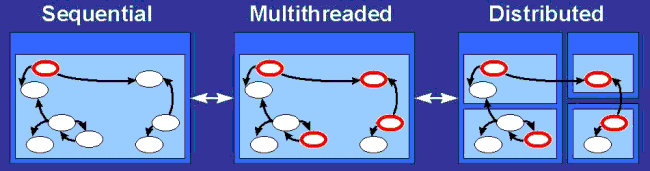

- 1.1. Different computing deployment paradigms

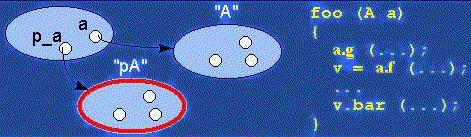

- 1.2. Polymorphism

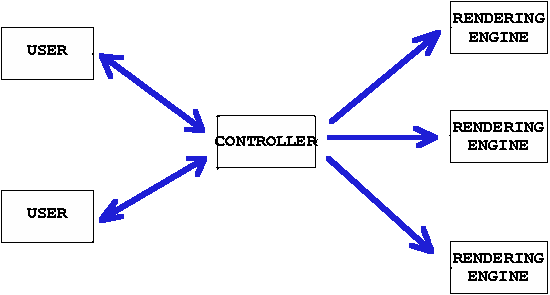

- 5.1. The active objects in the c3d application

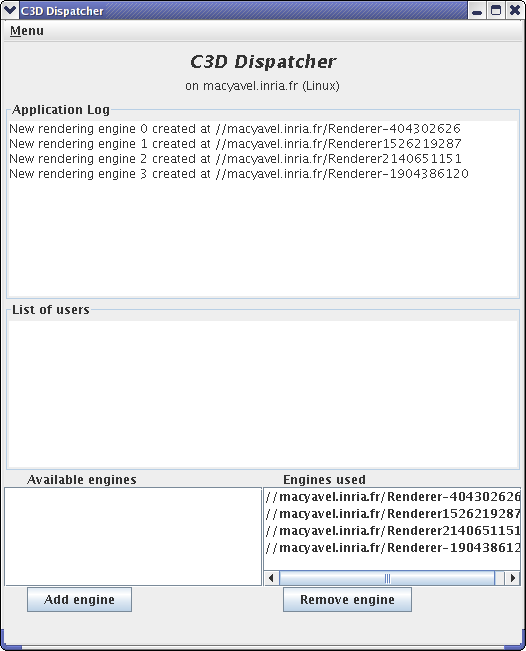

- 5.2. the dispatcher GUI is launched

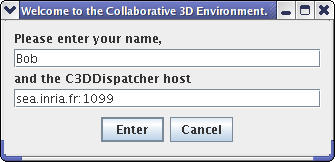

- 5.3. Specifying the host

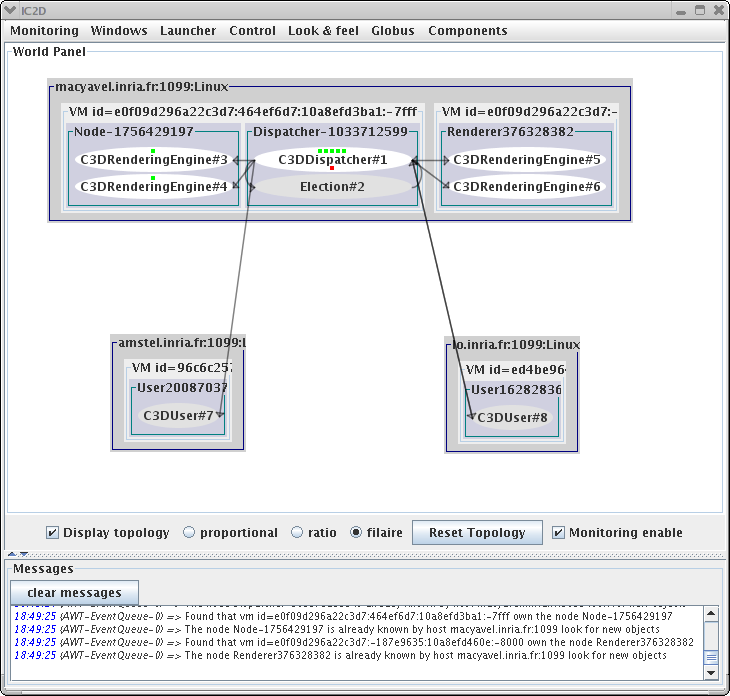

- 5.4. The C3D application when a new user joins in, seen with IC2D

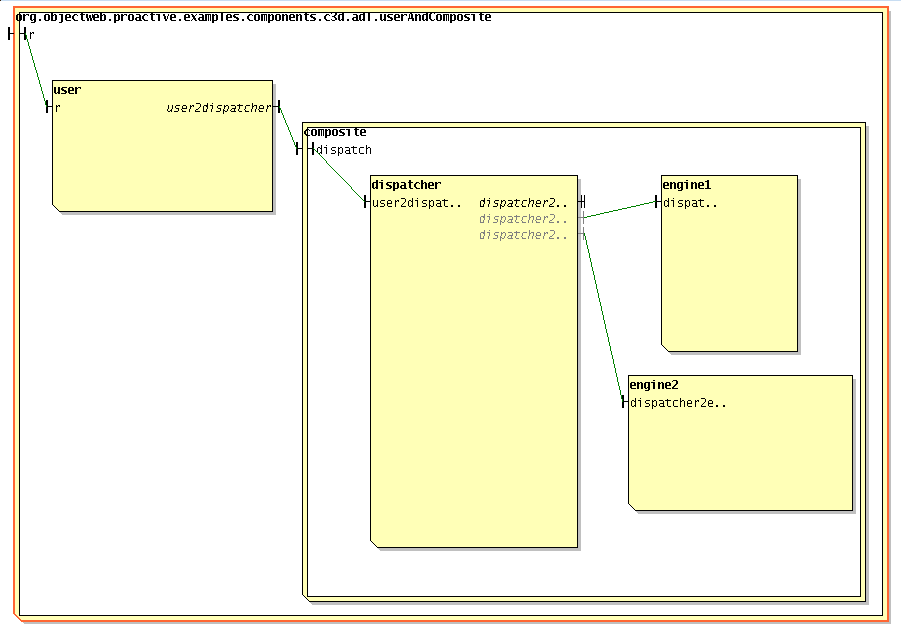

- 5.5. IC2D component explorer with the C3D example

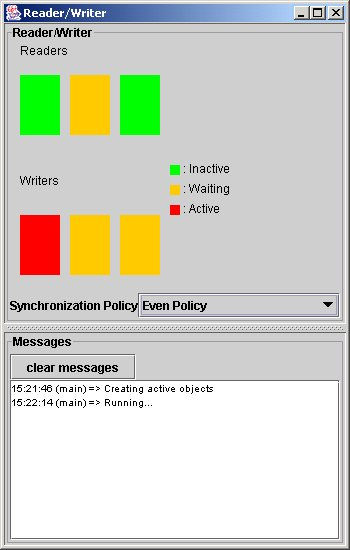

- 5.6. Using the readers script

- 5.7. A GUI is started that illustrates the activities of the Reader and Writer objects.

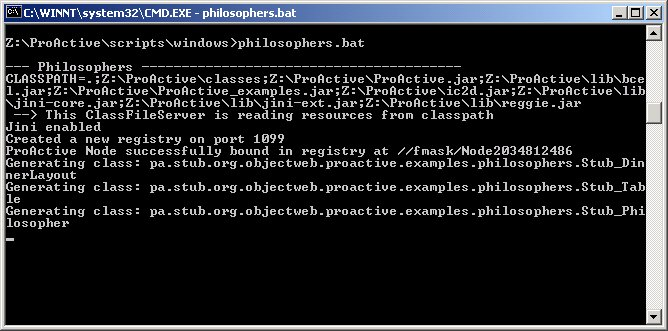

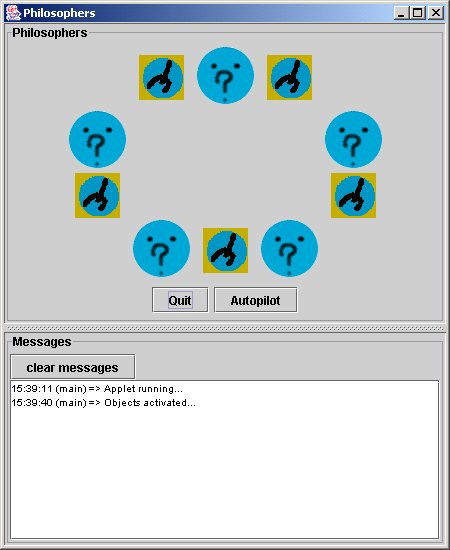

- 5.8. With philosophers.sh or philosophers.bat

- 5.9. The GUI is started.

- 5.10. Monitoring new RMI host with IC2D

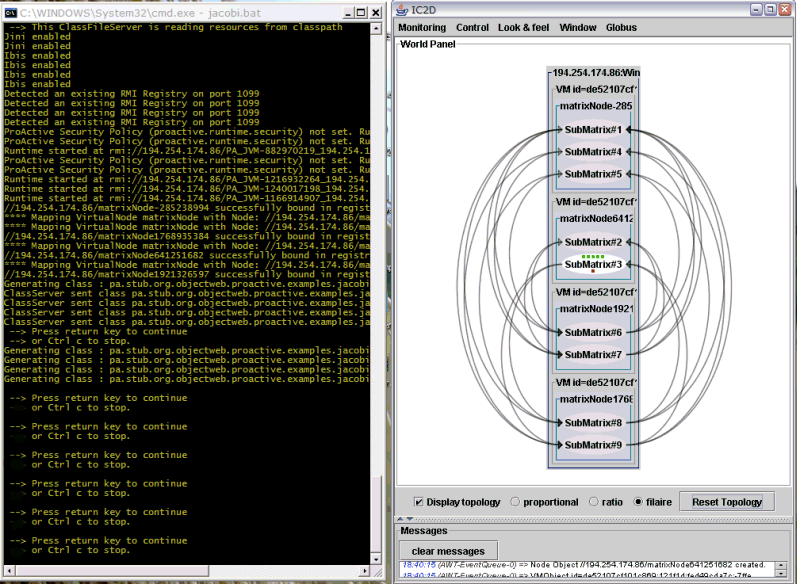

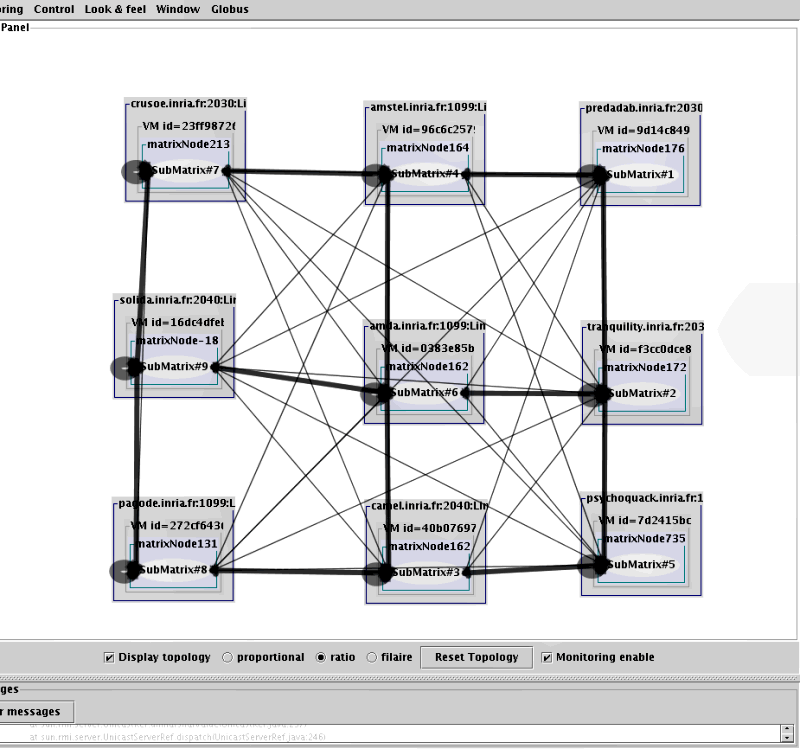

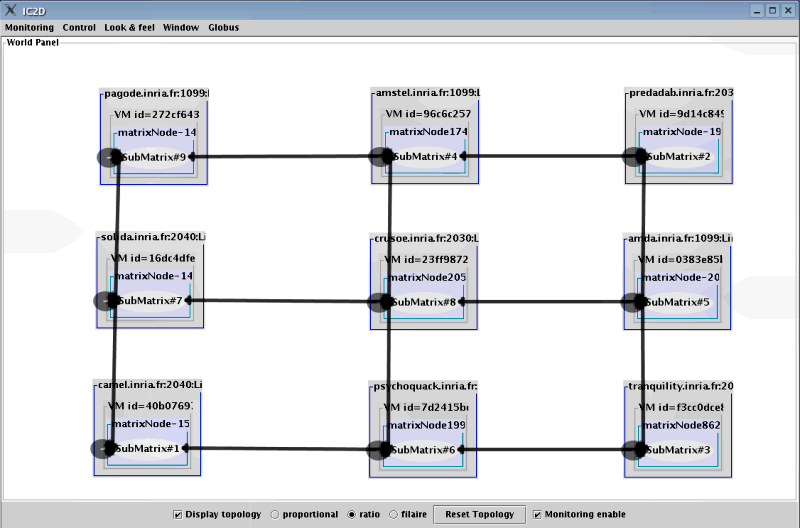

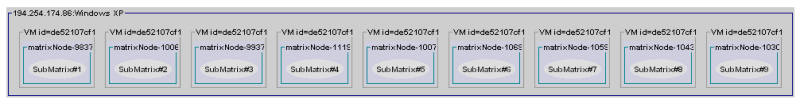

- 8.1. Running the Jacobi application, and viewing with IC2D

- 8.2. With all communications

- 8.3. With a barrier, there are many less comunications

- 8.4. IC2D viewing the Jacobi application with 9 JVMS on the same machine

- 8.5. Communication pattern - Step 1

- 8.6. Communication pattern - Step 2

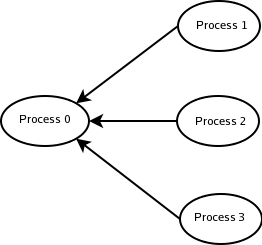

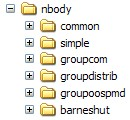

- 9.1. NBody screenshot, with 3 hosts and 8 bodies

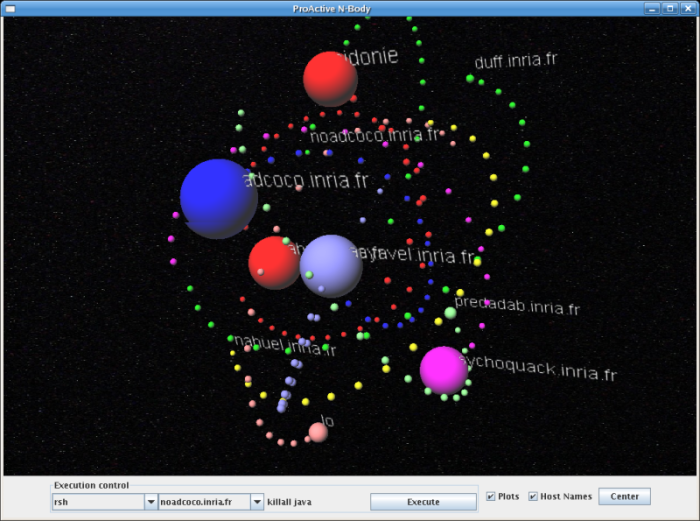

- 9.2. NBody screenshot, with the application GUI and Java3D installed

- 9.3. The nbody directory structure

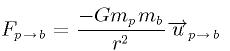

- 9.4. The equation of the force between two bodies

- 10.1. Informal description of the C3D Components hierarchy

- 10.2. IC2D component explorer with the C3D example

- 12.1. The Model: Sequential, Multithreaded, Distributed

- 12.2. A call onto an active object as opposed to a call onto passive one

- 13.1. The components of an active object

- 13.2. A future object

- 13.3. Sequence Diagram - single-threaded version of the program

- 13.4. The components of an active object

- 13.5. The components of a future object before the result is set

- 13.6. All components of a future object

- 13.7. Sequence Diagram

- 13.8. Sequence Diagram

- 18.1. The API architecture.

- 18.2. Broadcasting a new solution.

- 19.1. Task Flow in Calcium

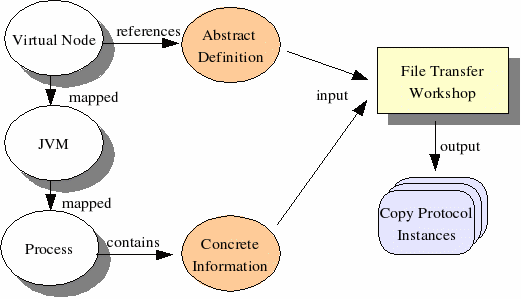

- 23.1. File Transfer Design

- 25.1. The nbody application, with Fault-Tolerance enabled

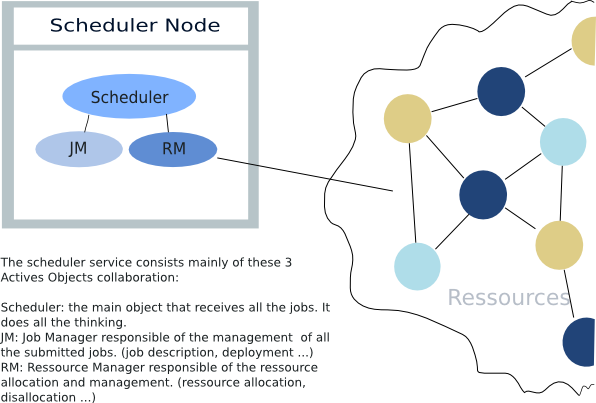

- 27.1. Representation of the scheduler and of its main objects

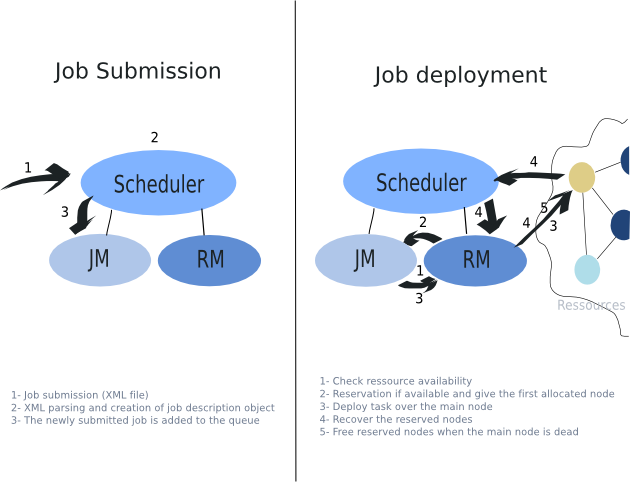

- 27.2. A short description of the mechanism of job deployment and submission

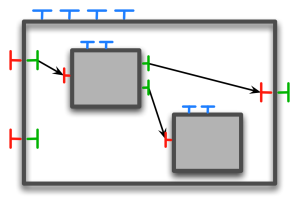

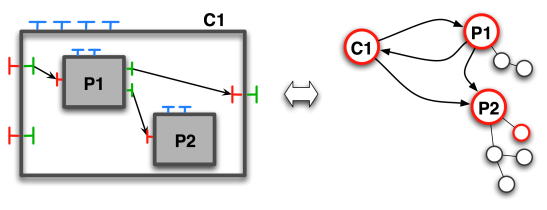

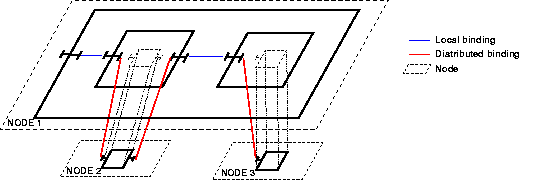

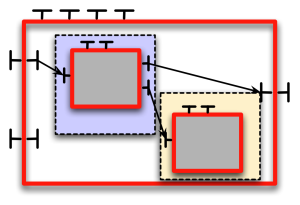

- 29.1. A system of Fractal components

- 29.2. A system of distributed ProActive/Fractal components (blue, yellow and white represent distinct locations)

- 29.3. Match between components and active objects

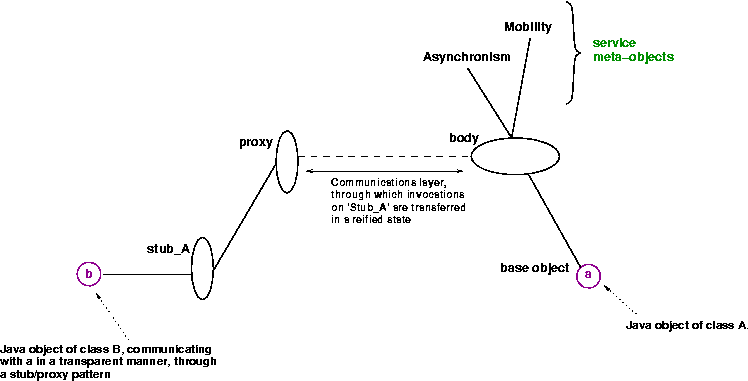

- 29.4. ProActive's Meta-Objects Protocol.

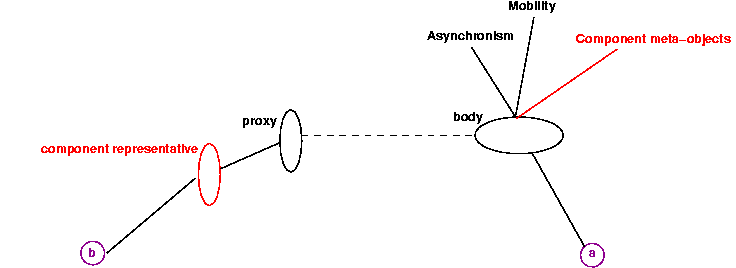

- 29.5. The ProActive MOP with component meta-objects and component representative

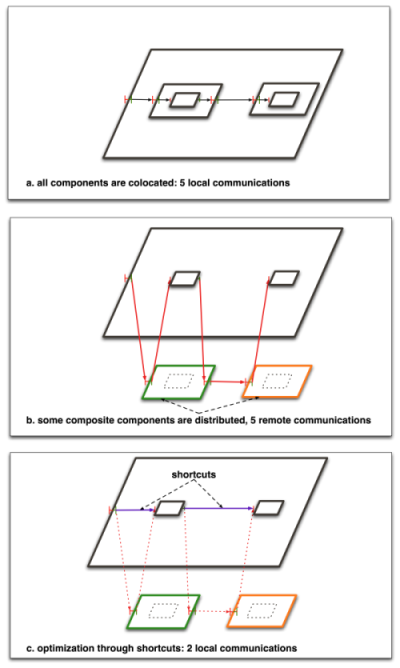

- 30.1. Using short cuts for minimizing remote communications.

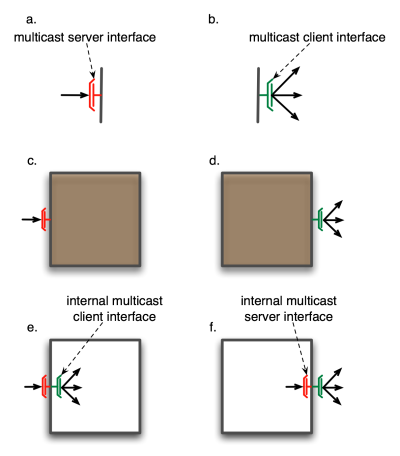

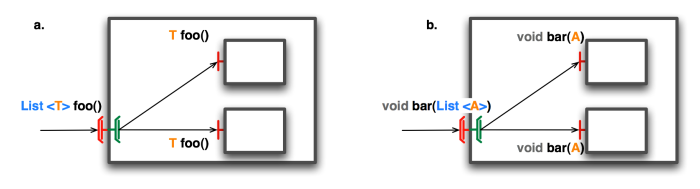

- 31.1. Multicast interfaces for primitive and composite component

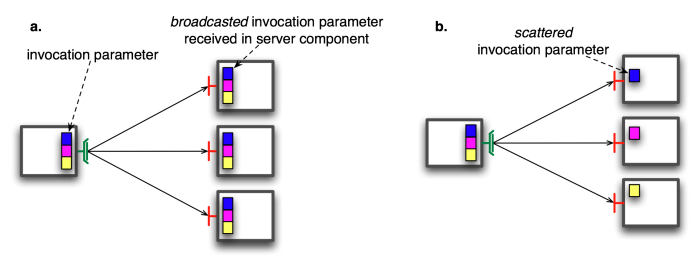

- 31.2. Broadcast and scatter of invocation parameters

- 31.3. Comparison of signatures of methods between client multicast interfaces and server interfaces.

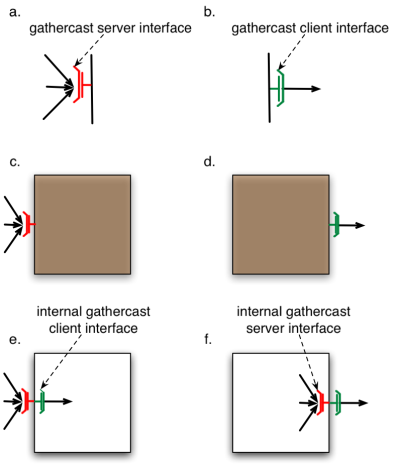

- 31.4. Gathercast interfaces for primitive and composite components

- 31.5. Aggregation of parameters with a gathercast interface

- 31.6. Comparison of signature of methods for bindings to a gathercast interface

- 33.1. Client and Server wrapped in composite components (C and S)

- 33.2. Without wrappers, the primitive components are distributed.

- 33.3. With wrappers, where again, only the primitive components are distributed.

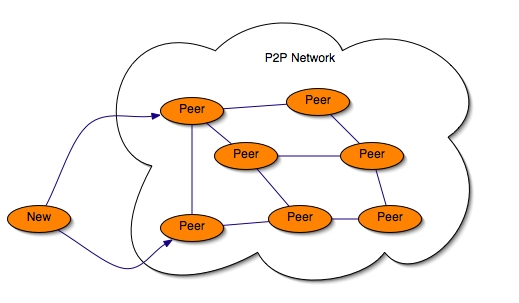

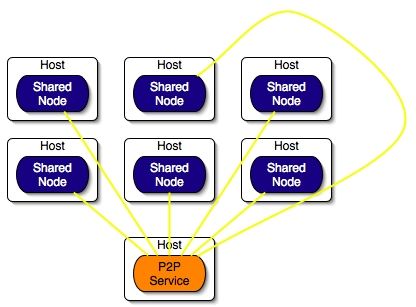

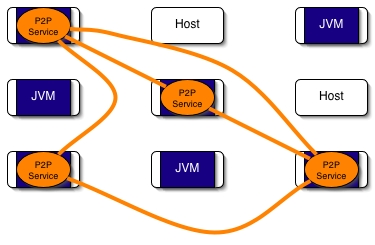

- 35.1. A network of hosts with some running the P2P Service

- 35.2. New peer trying to join a P2P network

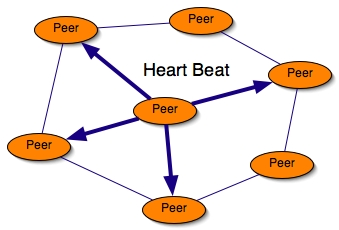

- 35.3. Heart beat sent every TTU

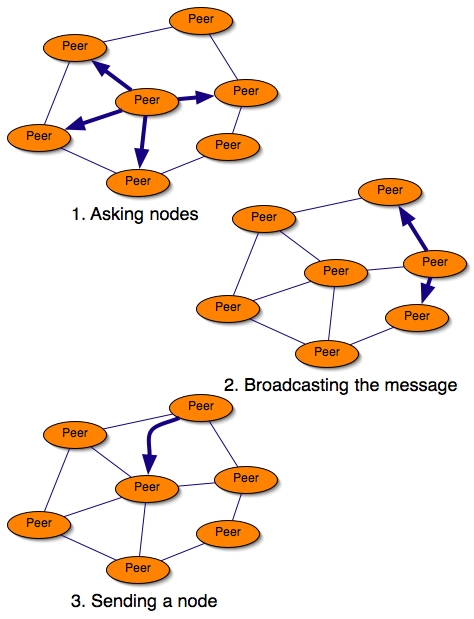

- 35.4. Asking nodes to acquaintances and getting a node

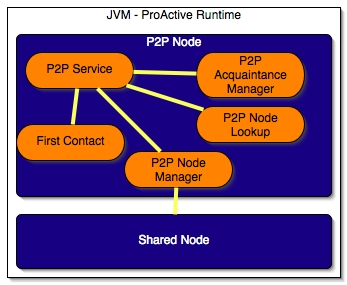

- 35.5. Nodes and Active Objects which make up a P2P Service.

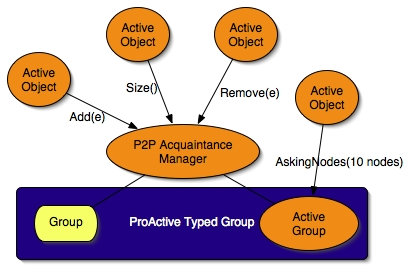

- 35.6. Dynamic Shared ProActive Typed Group.

- 35.7. nBody application deployed on P2P Infrastructure.

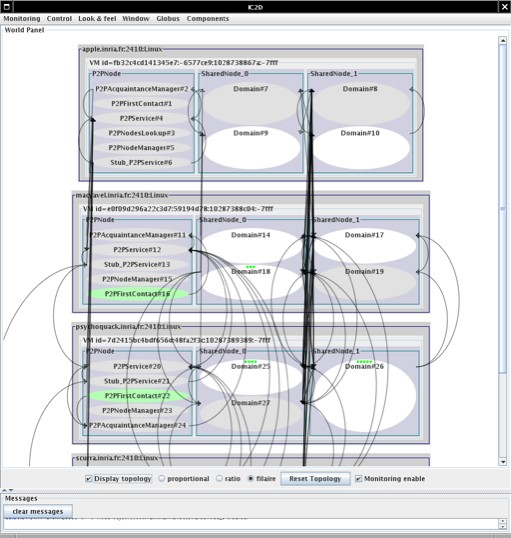

- 35.8. Usage example P2P network (after firsts connections)

- 35.9. A P2P Service which is sharing nodes deployed by a descriptor

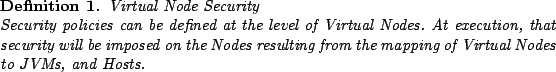

- 37.1. A typical object graph with active objects

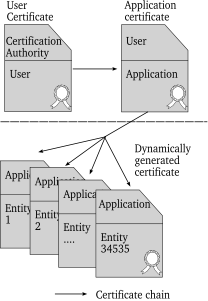

- 37.2. Certificate chain

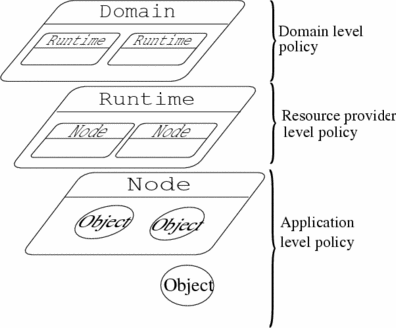

- 37.3. Hierarchical security

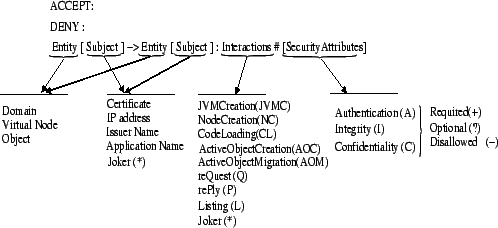

- 37.4. Syntax and attributes for policy rules

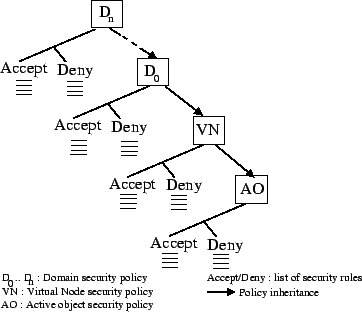

- 37.5. Hierarchical Security Levels

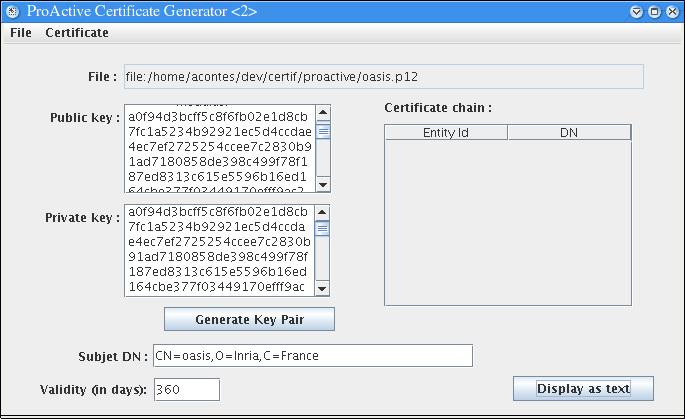

- 37.6. The ProActive Certificate Generator (for oasis)

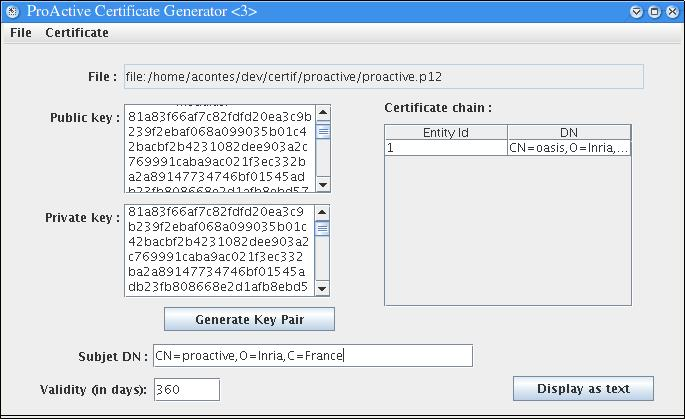

- 37.7. The ProActive Certificate Generator (for proactive)

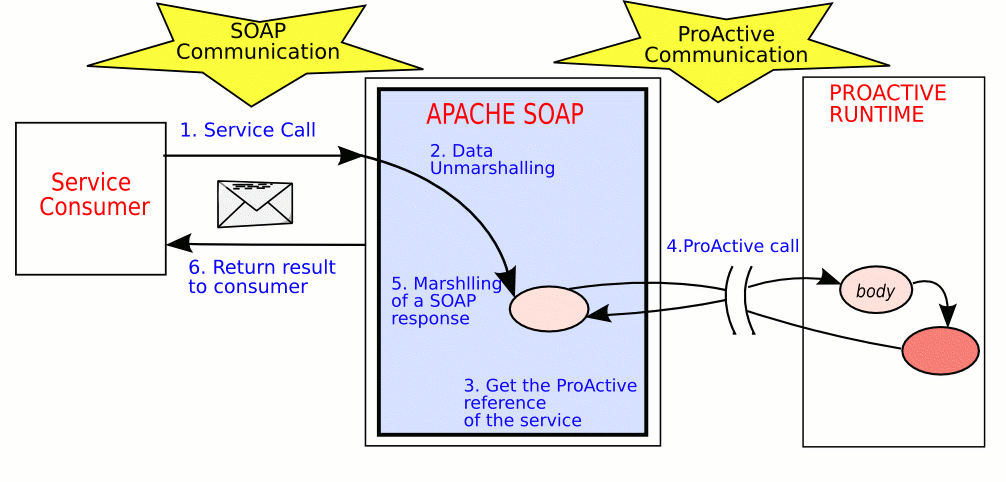

- 38.1. This figure shows the steps when a active object is called via SOAP.

- 38.2. The dispatcher handling all calls

- 38.3. The first screenshot is a classic ProActive application

- 38.4. C# application communicating via SOAP

- 39.1. The OSGi framework entities

- 39.2. The Proactive Bundle uses the standard Http Service

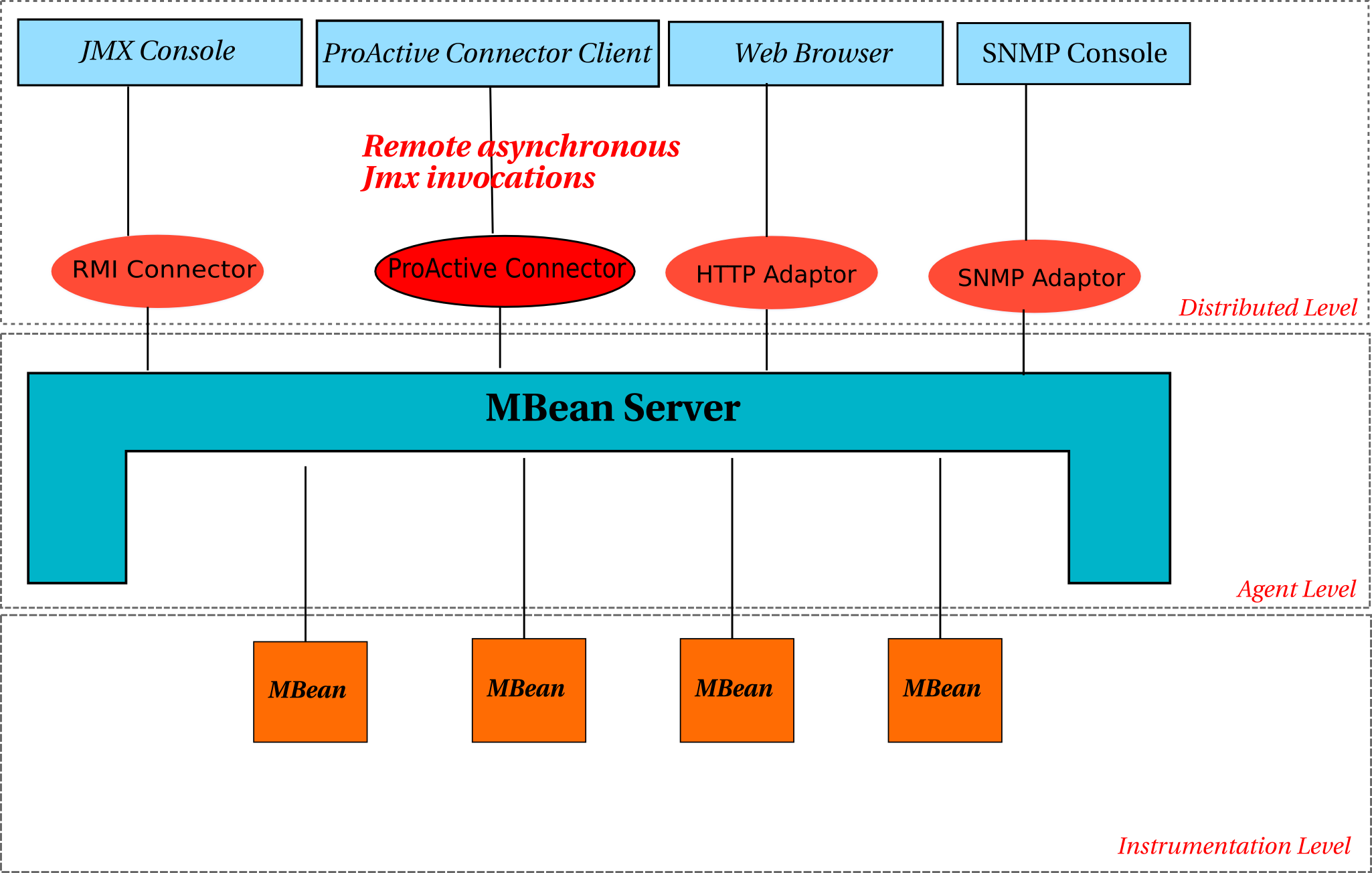

- 40.1. This figure shows the JMX 3 levels architecture and the integration of the ProActive JMX Connector.

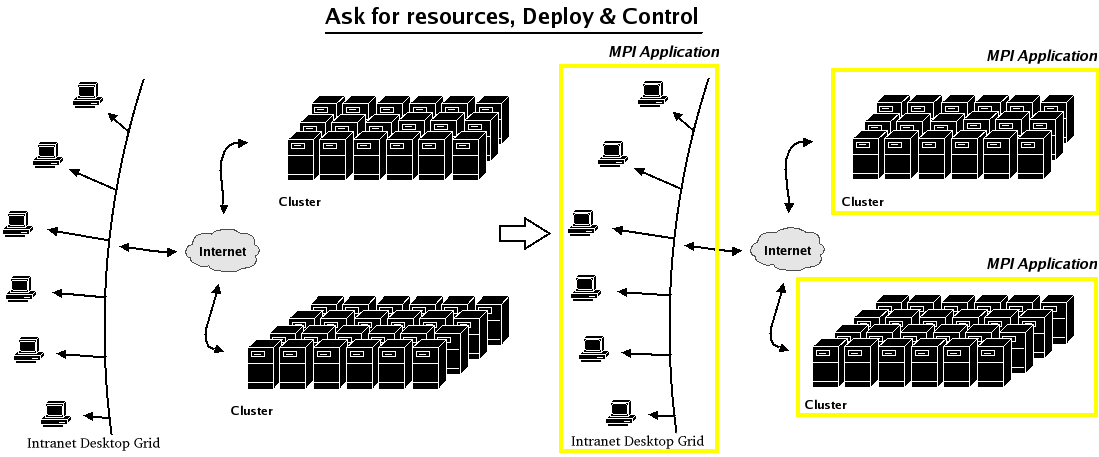

- 41.1. File transfer and asking for resources

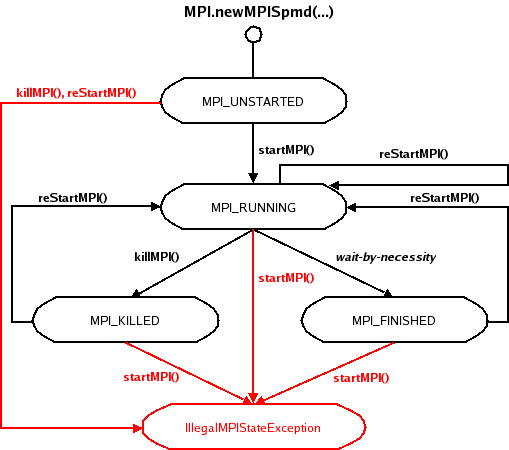

- 41.2. State transition diagram

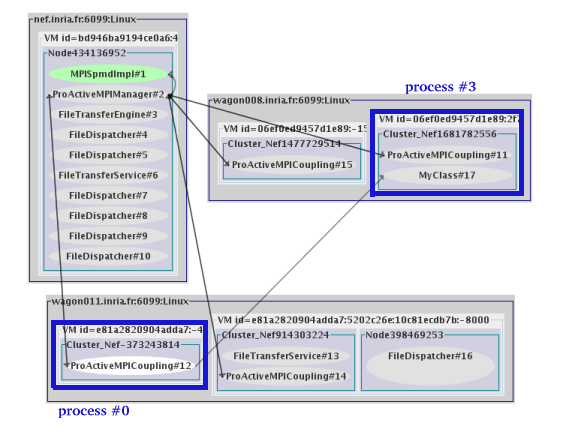

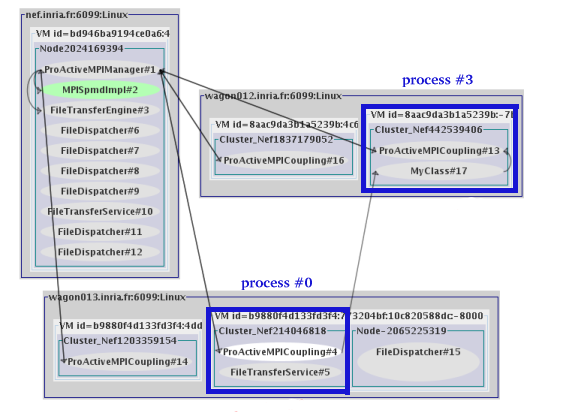

- 41.3. MPI to ProActive communication

- 41.4. ProActive to MPI communication

- 41.5. File transfer and asking for resources

- 41.6. Jacobi Relaxation - Domain Decomposition

- 41.7. IC2D Snapshot

- 41.8. Proxy Pattern

- 41.9. Process Package Architecture

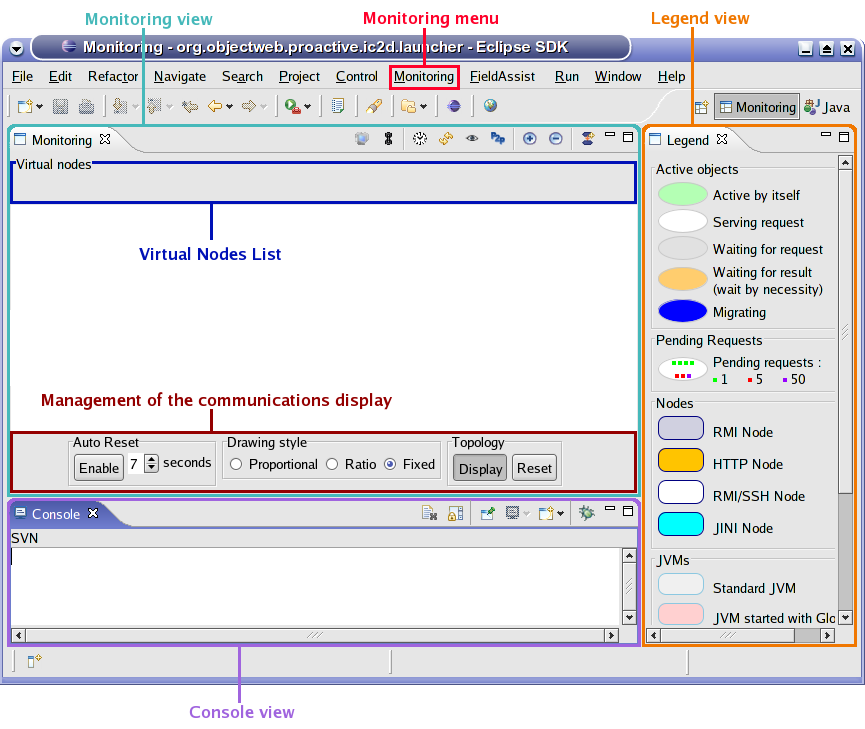

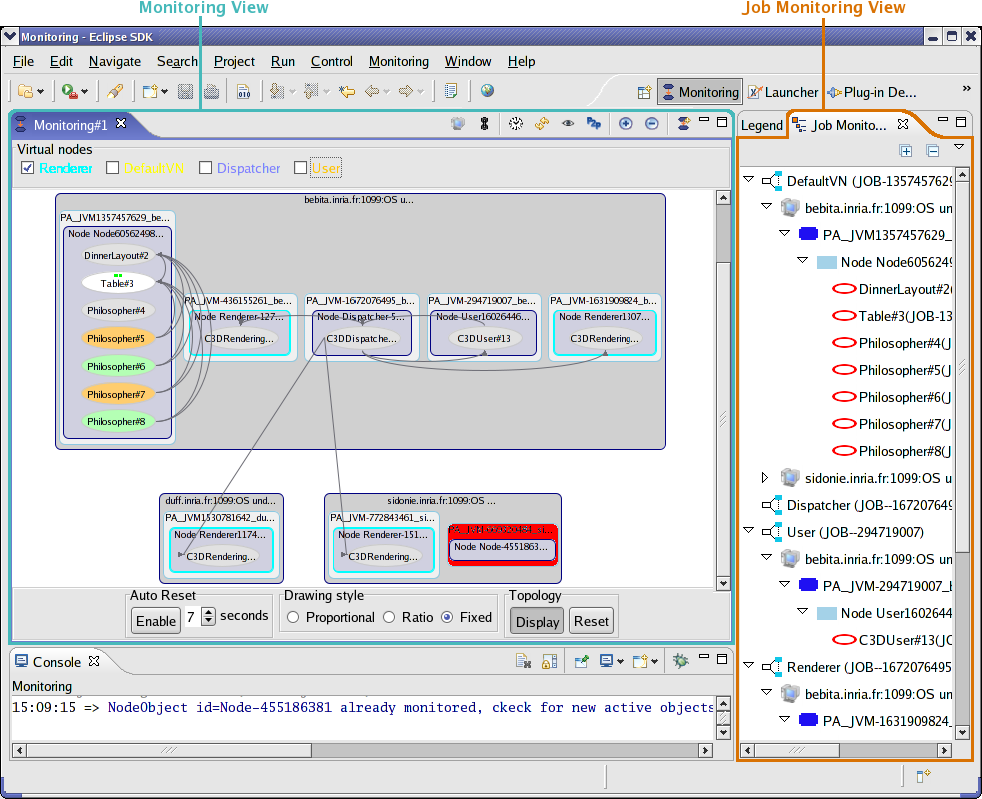

- 42.1. The Monitoring Perspective

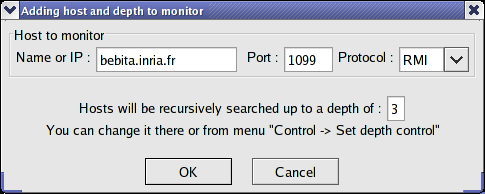

- 42.2. Monitor New Host Dialog

- 42.3. Monitor a new host

- 42.4. Set depth control

- 42.5. Set time to refresh

- 42.6. Refresh

- 42.7. Enable/Disable Monitoring

- 42.8. Show P2P objects

- 42.9. Zoom In

- 42.10. Zoom out

- 42.11. New Monitoring View

- 42.12. Virtual nodes List

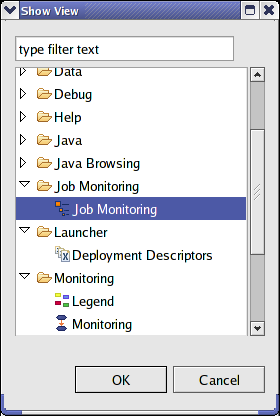

- 42.13. Select the Job Monitoring view in the list

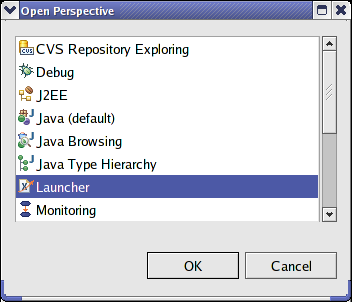

- 42.14. Select the Monitoring model

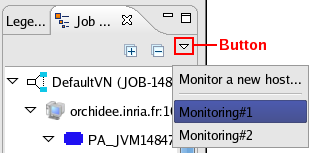

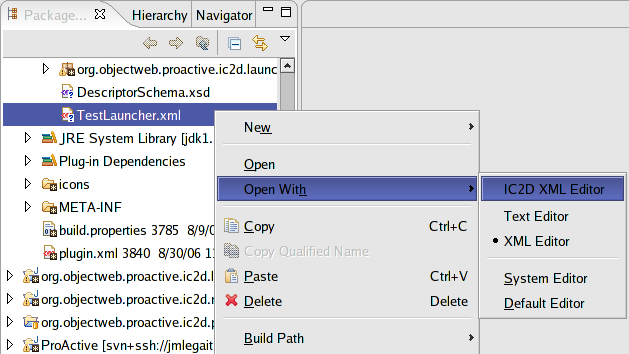

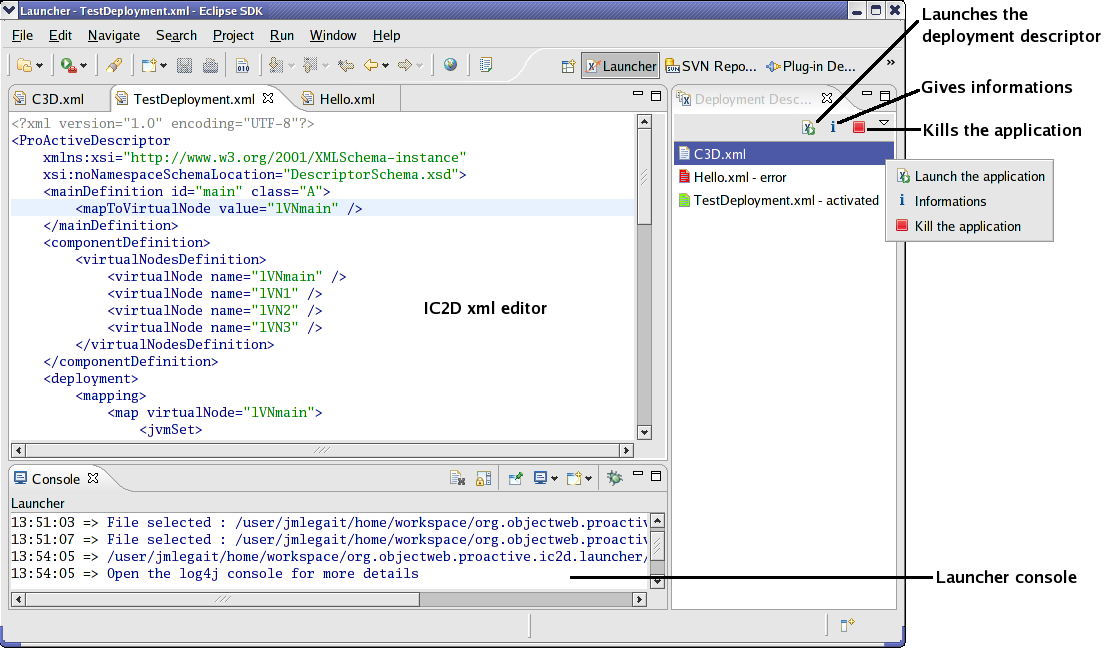

- 42.15. The monitoring views

- 42.16. Monitoring of 2 applications

- 42.17. The "Open Perspective" window

- 42.18. The open with action

- 42.19. The Launcher perspective

- 42.20. A wizard popup

- 42.21. The editor error highlighting

- 42.22. The plugin's interface

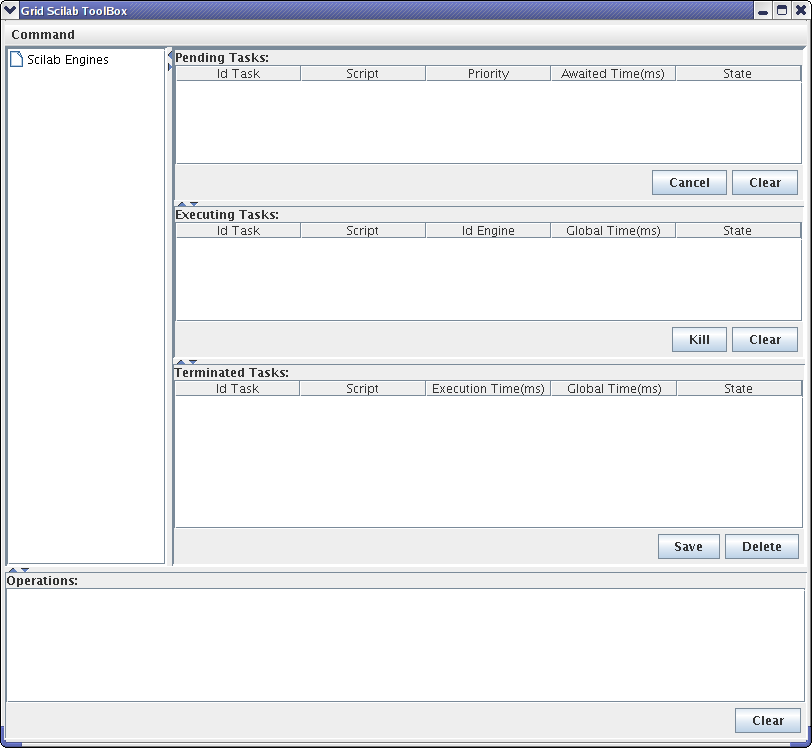

- 43.1. Main frame

- 43.2. Deployment of the application

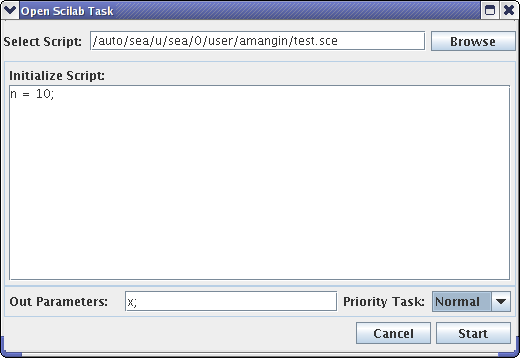

- 43.3. Creation of a task

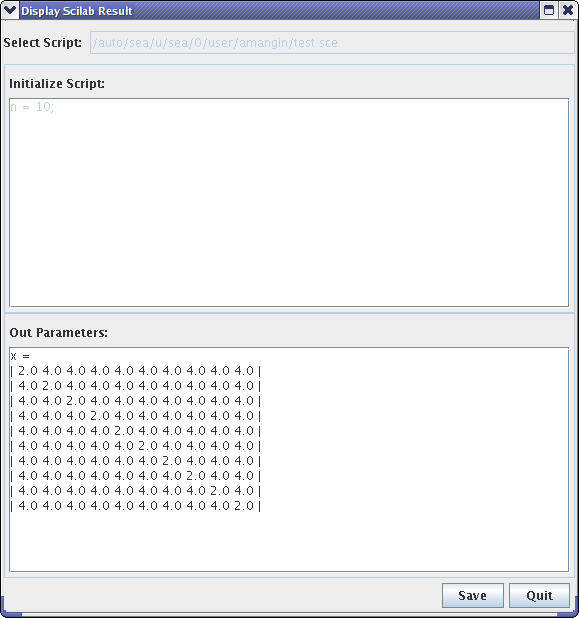

- 43.4. Display a result

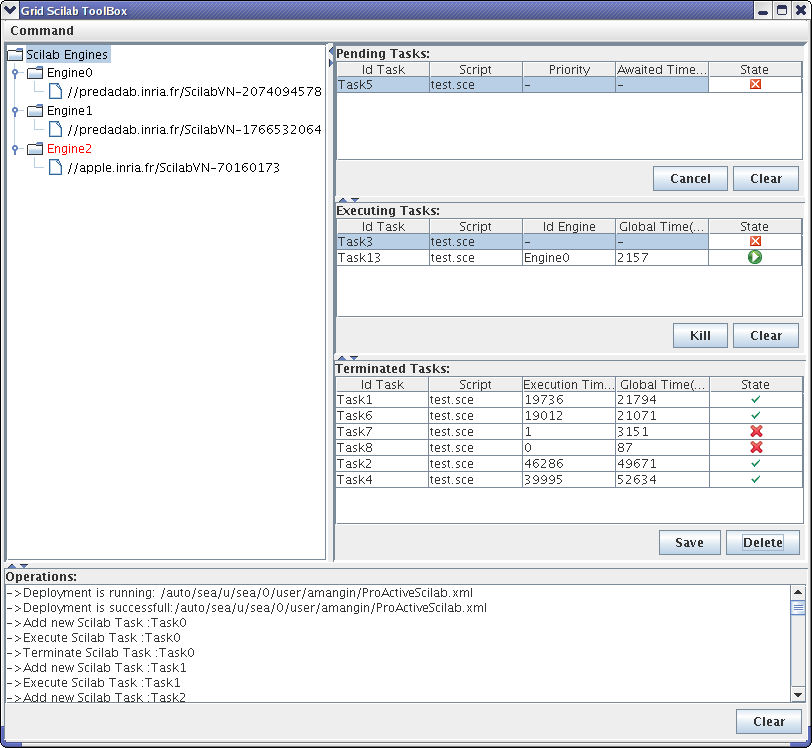

- 43.5. State of Engines

- 45.1. A Drawing using the FIGURE tag

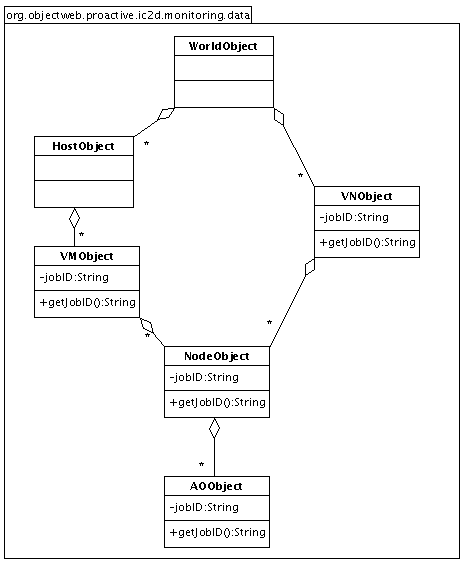

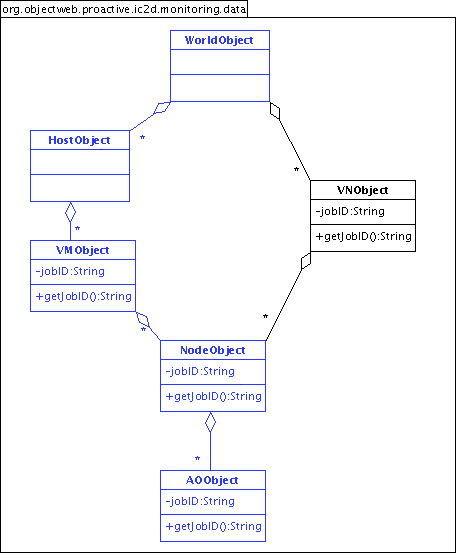

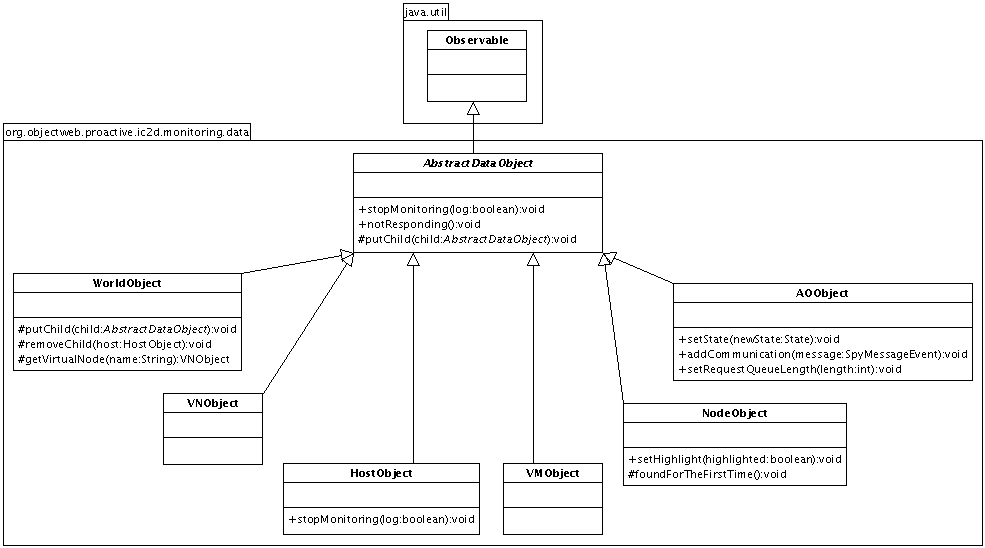

- 46.1. Graphical representation of the data

- 46.2. Class diagram

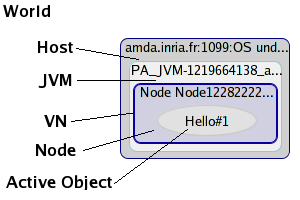

- 46.3. The world exploring itself for the first time

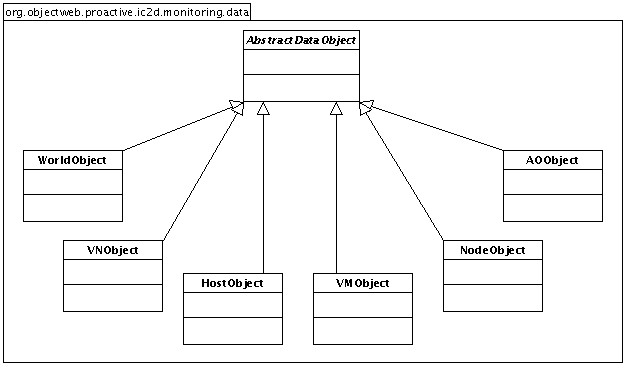

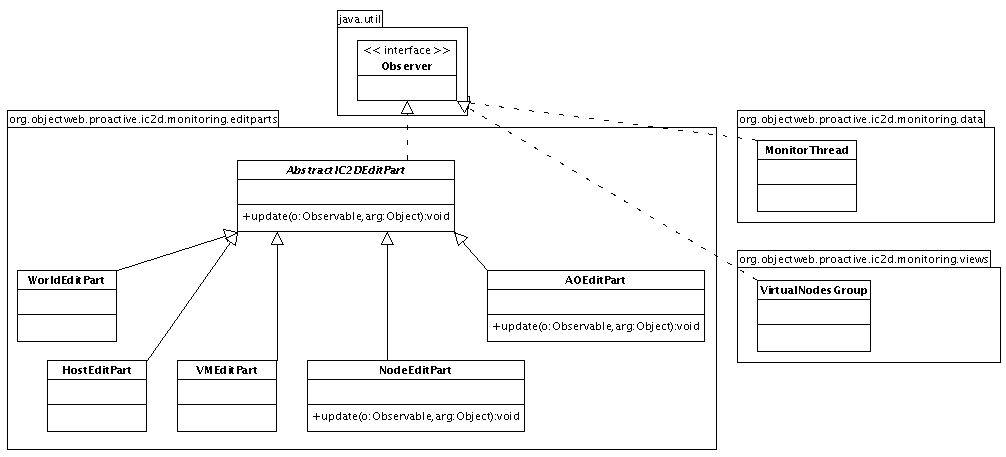

- 46.4. The Models

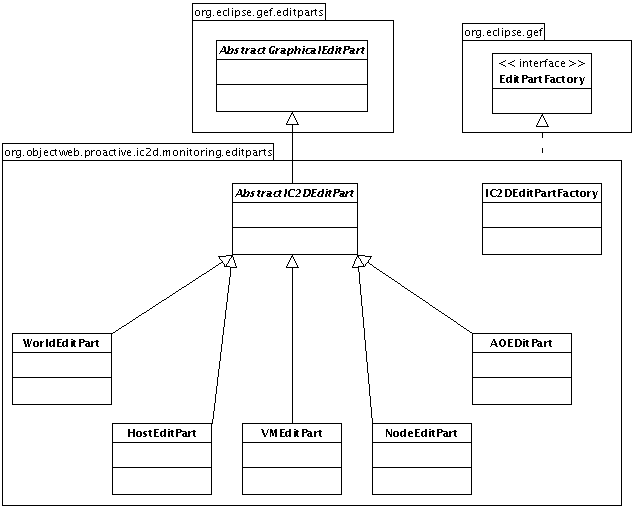

- 46.5. The Controllers and the factory

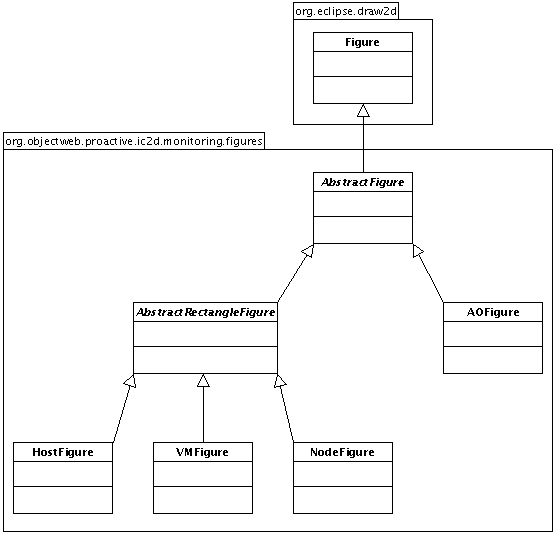

- 46.6. The Views

- 46.7. The data strucure of the monitoring plugin

- 46.8. Observable objects

- 46.9. Observer objects

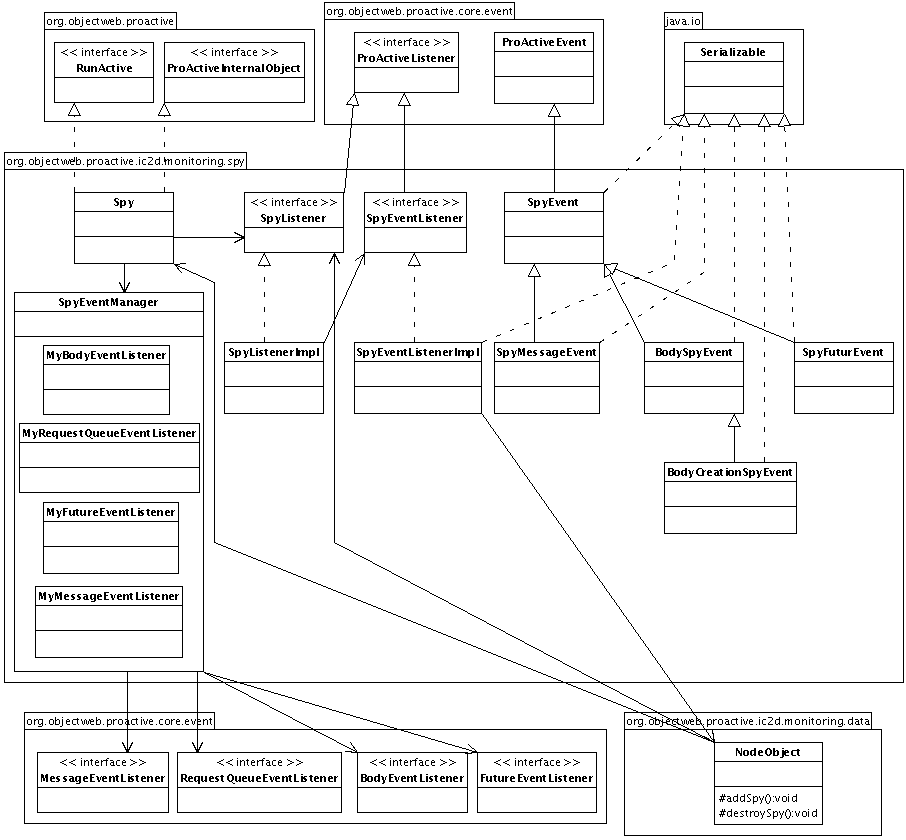

- 46.10. Spy classes

- 46.11. Active Objects' events management

- 46.12. SVN Repository

- 46.13. ic2d.product

- 46.14. Create a new project

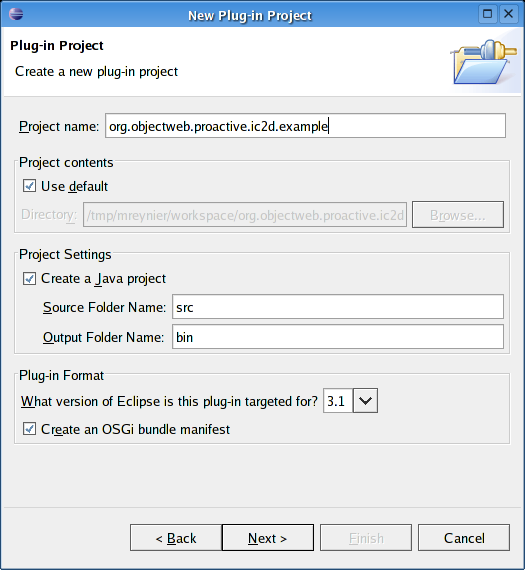

- 46.15. Specify name and plug-in structure

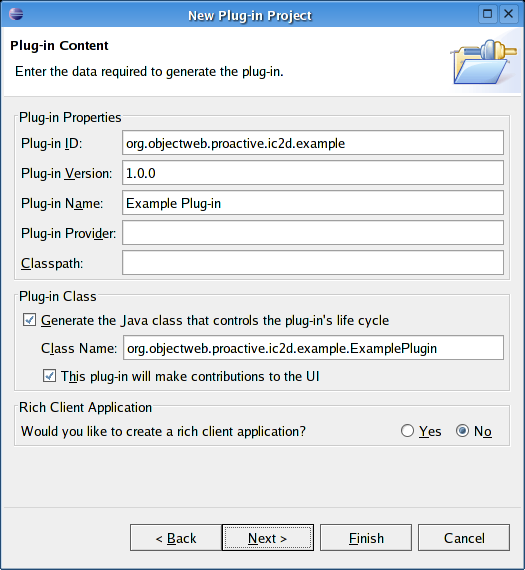

- 46.16. Specify plug-in content

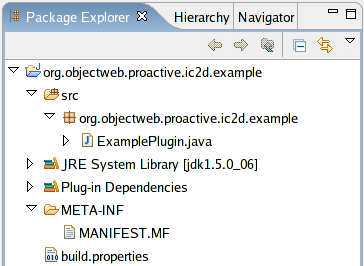

- 46.17. The plug-in structure

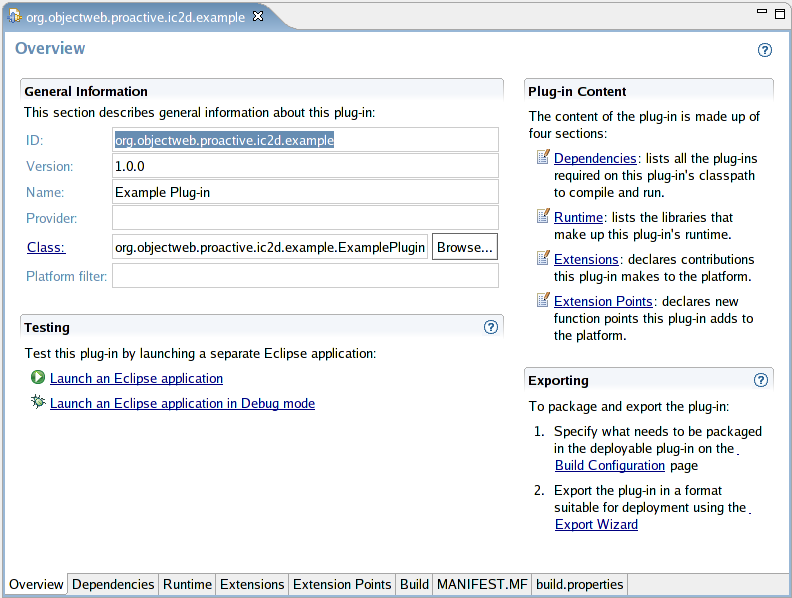

- 46.18. Interface for editing the manifest and related files.

- 46.19. Configuration

- 46.20. Plug-in selection

- 46.21. About product Plug-ins

- 46.22. Workbench structure

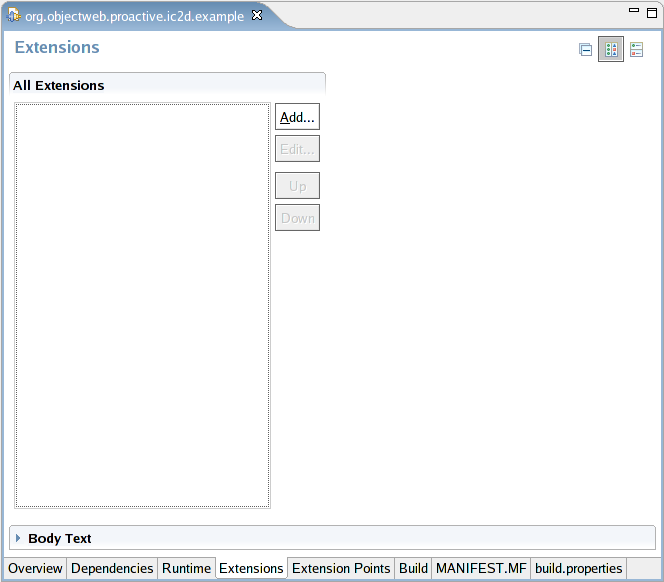

- 46.23. Extensions tab (no extensions)

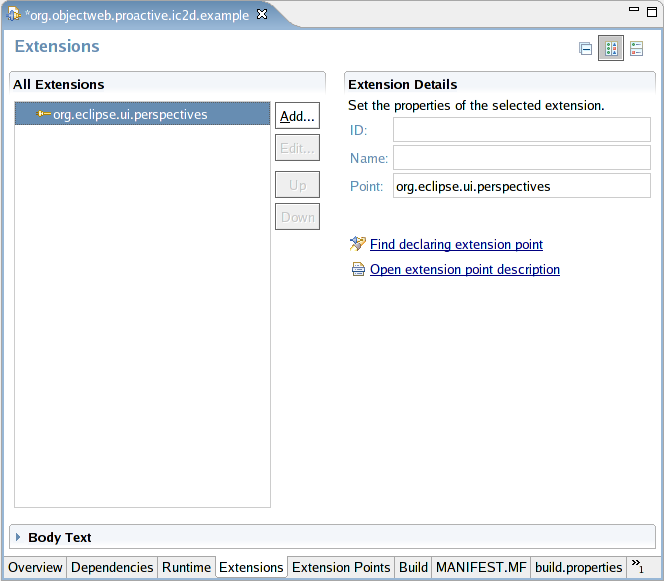

- 46.24. Extensions tab (org.eclipse.ui.perspectives)

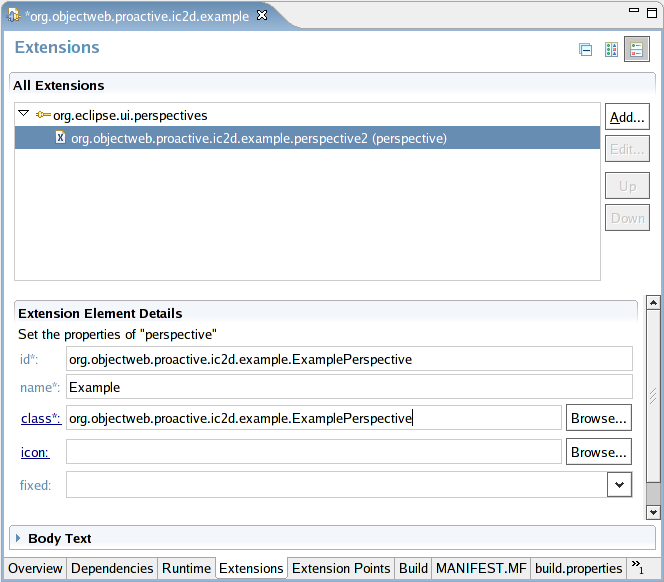

- 46.25. Extensions tab (Example)

- 49.1. core.process structure

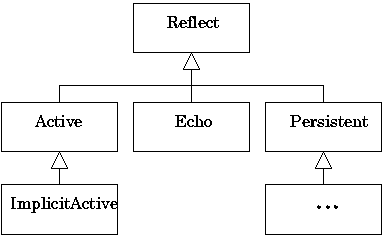

- 52.1. Metabehavior hierarchy

List of Tables

- 2.1. ProActive.zip contents

- 8.1. MPI to ProActive

- 13.1. Future creation, and asynchronous calls depending on return type

- 22.1. Variable Types

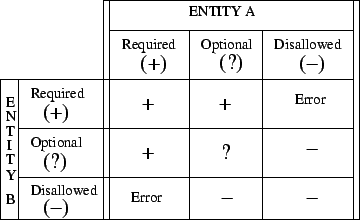

- 37.1. Result of security negotiations

- 41.1. Simple Wrapping of MPI Code

- 41.2. API for creating one Active Object per MPI process

- 41.3. MPI to ProActive Communications API

- 41.4. Java API for MPI message conversion

- 41.5. ProActiveMPI API for sending messages to MPI

- 41.6. MPI message reception from ProActive

- 41.7. MPI to MPI through ProActive C API

- 41.8. MPI to MPI through ProActive Fortran API

- 45.1. This is an example table

- 46.1. Observable and Observer objects

List of Examples

- 1.1. Class-based Active Object

- 1.2. Instantiation-based Active Object

- 1.3. Object-based Active Object

- 1.4. A possible implementation for the

TinyHelloclass - 2.1. A simple proactive-log4j file

- 10.1. The UserImpl class, a component wrapper

- 10.2. userAndComposite.fractal, a component ADL file

- 10.3. How to rename Virtual Nodes in ADL files

- 10.4. Component Lookup and Register

- 13.1. Custom Init and Run

- 13.2. Start, stop, suspend, restart a simulation algorithm in runActivity method

- 13.3. Reactive Active Object

- 13.4. A possible implementation for the

Helloclass: - 13.5. HelloClient.java

- 20.1. A configuration file example

- 21.1. C3D_Dispatcher_Render.xml

- 21.2. C3D_User.xml

- 43.1. Example: Interface Scilab

- 43.2. Descriptor deployment

- 45.1. JAVA program listing with file inclusion

- 45.2. XML program listing with file inclusion

- 46.1. MANIFEST.MF

- 46.2. ExamplePlugin.java

- 46.3. build.properties

- 46.4. plugin.xml

- 46.5. ExamplePlugin.java

- 47.1. declaring P2P loggers in the interface org.objectweb.proactive.core.util.Loggers

- 48.1. Example of HTML results

- 48.2. Agent class

- C.1. examples/RSH_Example.xml

- C.2. examples/SSH_Example.xml

- C.3. examples/SSHList_example.xml

- C.4. examples/SSHListbyHost_Example.xml

- C.5. examples/SSH_LSF_Example.xml

- C.6. examples/SSH_PBS_Example.xml

- C.7. examples/SSH_SGE_Example.xml

- C.8. examples/SSH_OAR_Example.xml

- C.9. examples/SSH_OARGRID_Example.xml

- C.10. examples/SSH_PRUN_Example.xml

- C.11. examples/Globus_Example.xml

- C.12. examples/Unicore_Example.xml

- C.13. examples/NorduGrid_Example.xml

- C.14. examples/SSH_GLite_Example.xml

- C.15. examples/SSH_MPI_Example.xml

- C.16. InitActive.java

- C.17. RunActive.java

- C.18. EndActive.java

- C.19. core/body/MetaObjectFactory.java

- C.20. core/body/ProActiveMetaObjectFactory.java

- C.21. ProActive.java

- C.22. core/process/ssh/SSHProcessList.java

- C.23. core/process/rsh/RSHProcessList.java

- C.24. core/process/rlogin/RLoginProcessList.java

- C.25. core/descriptor/data/ProActiveDescriptor.java

- C.26. Body.java

- C.27. core/body/UniversalBody.java

- C.28. InitializedHello.java

- C.29. InitializedHelloClient.java

- C.30. MigratableHello.java

- C.31. MigratableHelloClient.java

- C.32. HelloFrameController.java

- C.33. HelloFrame.java

- C.34. P2P configuration: proactivep2p.xsd

- C.35. P2P configuration: sample_p2p.xml

- C.36. SOAP configuration: webservices/web.xml

- C.37. MPI Wrapping: mpi_files/MPIRemote-descriptor.xml

GRID computing is now a key aspect, from scientific to business applications, from large scale simulations to everyday-life enterprise IT, including telcos and embedded domains. We are just entering the era of Ubiquitous Computing with many computers at hand of every single individual - after the old days of mainframes and servers, hundreds of persons sharing the same machines, and the quite current days of PCs, one person/one computer. Potentially spanning all over the world, involving several thousands or several hundred thousands of nodes, the programming of Grid applications call for a new paradigms. The ProActive Grid solution relies on systematic asynchronous method calls, allowing to master both complexity and efficiency.

Overall, ProActive promotes a few basic and simple principles:

Activites are distributed, remotely accessible objects

Interactions are done through asynchronous method calls

Results of interactions are called futures and are first class entities.

Callers can wait for results using a mechanism called wait-by-necessity

ProActive takes advantage of this sound programming model, to further propose advanced features such as groups, mobility, and components. In the framework of a formal calculus, ASP (Asynchronous Sequential processes), confluence and determinism have been proved for this programming model: CH05 and CHS04.

Asynchronous method calls with returns lead to an emerging abstraction: futures, the expected result of a given asynchronous method call. Futures turn out to be a very effective abstraction for large distributed systems, preserving both low coupling and high structuring.

Asynchronous method calls and first-class futures are provided in the unifying framework of an Active Object.

Most of the time, activities and distribution are not known at the beginning, and change over time. Seamless implies reuse, smooth and incremental transitions.

A huge gap still exists between multithreaded and distributed Java applications which impedes code reuse in order to build distributed applications from multithreaded applications. Both JavaRMI and JavaIDL, as examples of distributed object libraries in Java, put a heavy burden on the programmer because they require deep modifications of existing code in order to turn local objects into remotely accessible ones. In these systems, remote objects need to be accessed through some specific interfaces. As a consequence, these distributed objects libraries do not allow polymorphism between local and remote objects. This feature is our first requirement for a Grid Computing framework. It is strongly required in order to let the programmer concentrate first on modeling and algorithmic issues rather than lower-level tasks such as object distribution, mapping and load balancing.

Active Objects are the core of the ProActive

computing concept. An Active Object is both a Remote

Object (which allows to deploy it on a distant host) and a

Thread (which gives it its own activity, its own

independant behaviour and in concurrency with other Active Objects

deployed). Given a standard object, turning it into an Active Objects

provides:

location transparency

activity transparency

synchronization

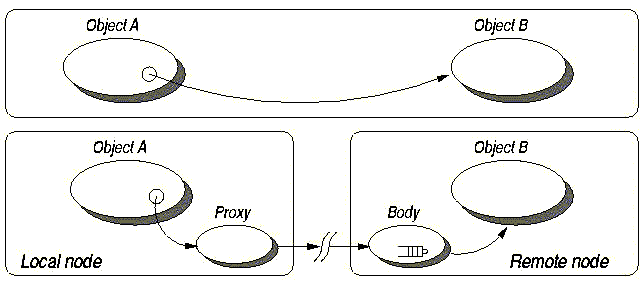

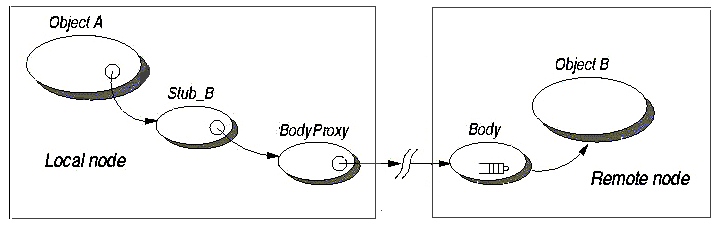

Communications to an active object are by default asynchronous. So, an active object is: a main object + a single thread + a queue of pending requests. As such, a reference to a remote object is equivalent to a reference to a remote activity. An activity is an object ; but being in a non-uniform model, not all objects are active objects, the majority remaining standard Java objects. As there cannot be any sharing, an active object is also a unit of computational mobility (see Chapter 16, Active Object Migration).

![[Note]](images/note.png) | Note |

|---|---|

The |

On activation, an object becomes a remotely accessible entity with

its own thread of control: an active object. Here are given as example

three ways to transform a standard Object into an

Active Object:

Object[] params = new Object[] { new Integer (26), "astring" }; A a = (A) ProActive.newActive("example.A", params, node);

Example 1.1. Class-based Active Object

public class AA extends A implements Active {} Object[] params = new Object[] { new Integer (26), "astring" }; A a = (A) ProActive.newActive("example.AA", params, node);

Example 1.2. Instantiation-based Active Object

Object-based Active Objects Allows to turn active

and set remote objects for which you do not have the source code; this is

a necessary feature in the context of code mobility.

A a = new A (26, "astring"); a = (A) ProActive.turnActive(a, node) ;

Example 1.3. Object-based Active Object

![[Note]](images/note.png) | Note |

|---|---|

Nodes allow to control the mapping to the hosts. See Section 13.1.2, “Using a Node” for an example use of a Node, and Section 21.2, “Principles” for a definition. |

Here is a summary of the computation model being used by ProActive:

Heterogeneous model both passive and active objects

Systematic asynchronous communications towards active objects

No shared passive object , Call-by-value between active objects

Automatic continuations , a transparent delegation mechanism

wait-by-necessity , automatic and transparent futures

Centralized and explicit control , libraries of abstractions

To compare to Java RMI, a Java remote object is not by essence an

activity. The fact that several threads can execute several remote method

calls simultaneously within a remote object does reveal that facet. When

writing ro.foo(p);, what ro identifies is not a remote

activity, but just a remote object. This has several consequences, along

with the presence of sharing between remote objects that prevents them

from being a unit of computational migration.

Two key features:

Wait-by-necessity: inter-objects synchronization. Systematic, implicit and transparent futures. Ease the programming of synchronization and reuse of existing methods

Polymorphism between standard and active objects

Type compatibility for classes and not just for interfaces

Needed and done for the future objects as well

Dynamic mechanism (dynamically achieved if needed)

This example implements the smallest program in ProActive. This is the easiest program you could write, using the Active Object concept. This is just to show quickly how code can be written, with minimal knowledge of the API.

You can get a more complete 'hello world' example, with deployment on a remote host, further on in the manual (Section 13.10, “The Hello world example”).

A client object displays a String received from

elsewhere (the original VM). This illustrates the creation of an Active

Object.

Only one class is needed: we have put the main method inside the class, which when deployed will be an Active Object.

This class can be used as an Active Object, serving requests. Its creation involves the following steps:

Provide an implementation for the required server-side functionalities

Provide an empty, no-arg constructor

Write a

mainmethod in order to instantiate one server object.

public class TinyHello implements java.io.Serializable {

static Logger logger = ProActiveLogger.getLogger(Loggers.EXAMPLES);

private final String message = "Hello World!";

/** ProActive compulsory no-args constructor */

public TinyHello() {

}

/** The Active Object creates and returns information on its location

* @return a StringWrapper which is a Serialized version, for asynchrony */

public StringMutableWrapper sayHello() {

return new StringMutableWrapper(

this.message + "\n from " + getHostName() + "\n at " +

new java.text.SimpleDateFormat("dd/MM/yyyy HH:mm:ss").format(new java.util.Date()));

}

/** finds the name of the local machine */

static String getHostName() {

try {

return java.net.InetAddress.getLocalHost().toString();

} catch (UnknownHostException e) {

return "unknown";

}

}

/** The call that starts the Acive Objects, and displays results.

* @param args must contain the name of an xml descriptor */

public static void main(String[] args)

throws Exception {

// Creates an active instance of class Tiny on the local node

TinyHello tiny = (TinyHello) ProActive.newActive(

TinyHello.class.getName(), // the class to deploy

null // the arguments to pass to the constructor, here none

); // which jvm should be used to hold the Active Object

// get and display a value

StringMutableWrapper received = tiny.sayHello(); // possibly remote call

logger.info("On " + getHostName() + ", a message was received: " + received); // potential

wait-by-necessity

// quitting

ProActive.exitSuccess();

}

}

Example 1.4. A possible implementation for the TinyHello

class

Implementing any remotely-accessible functionality is simply done

through normal Java methods in a normal Java class, in exactly the same

manner it would have been done in a non-distributed version of the same

class. Here, the only method is sayHello

Now that we know how to write the class that implements the

required server-side functionalities, let us see how to create the

server object. We want this active object to be created on the current

node, which is why we use newActive with only two

parameters (done in the main method).

The code snippet which instantiates the

TinyHello in the same VM is the following (in the

main method):

TinyHello tiny = (TinyHello) ProActive.newActive(

TinyHello.class.getName(), // the class to deploy

null // the arguments to pass to the constructor, here none

); // which jvm should be used to hold the Active Object

This is exactly like invoking a method on a local object of the same type. The user does not have to deal with catching exceptions related to the distant deployment.

As already stated, the only modification brought to the code by

ProActive is located at the place where active objects are created. All

the rest of the code remains the same, which fosters software reuse. So

the way to call the sayHello method in this example

is the following (in the main method):

StringMutableWrapper received = tiny.sayHello(); // possibly remote call logger.info("On " + getHostName() + ", a message was received: " + received); // potential wait-by-necessity

To launch the example, you may type:

linux> java -cp $CLASSPATH -Djava.security.policy=scripts/proactive.java.policy

-Dlog4j.configuration=file:scripts/proactive-log4j

org.objectweb.proactive.examples.hello.TinyHello windows> java -cp $CLASSPATH -Djava.security.policy=scripts\proactive.java.policy

-Dlog4j.configuration=file:scripts\proactive-log4j

org.objectweb.proactive.examples.hello.TinyHelloThere are also scripts in the scripts

directory:

linux> cd scripts/unix/ linux> tinyHello.sh

windows> cd scripts/windows windows> tinyHello.bat

[apple unix]tinyhello.sh --- Hello World tiny example --------------------------------- > This ClassFileServer is reading resources from classpath ProActive Security Policy (proactive.runtime.security) not set. Runtime Security disabled Created a new registry on port 1099 //apple.inria.fr/Node628280013 successfully bound in registry at //apple.inria.fr/Node628280013 Generating class: pa.stub.org.objectweb.proactive.examples.hello.Stub_TinyHello Generating class: pa.stub.org.objectweb.proactive.core.util.wrapper.Stub_StringMutableWrapper On apple/138.96.218.62, a message was received: Hello World! from apple/138.96.218.62 at 03/11/2005 14:25:32

ProActive is made available for download under a LGPL license. ProActive requires the JDK 1.5 or later to be installed on your computer. Please note that ProActive will NOT run with any version prior to 1.5 since some features introduced in JDK 1.5 are essential.

Download and unzip the ProActive archive

Include in your

CLASSPATHthe ProActive jar file (ProActive/ProActive.jar) along withProActive/lib/javassist.jar, ProActive/lib/log4j.jar, ProActive/lib/xercesImpl.jar, ProActive/lib/components/fractal.jar, ProActive/lib/bouncycastle.jarDepending on your project needs, you might need to include other libraries located in the ProActive/lib directory.

Don't forget to launch the JVM with a security policy file using the option -Djava.security.policy=pathToFile. A basic policy file can be found at ProActive/scripts/proactive.java.policy. You can also specify a log4j configuration file with the property

-Dlog4j.configuration=file:pathToFile. If not specified a default logger that logs on the console will be created.

Below are described the different steps in more details.

You can download the archive file (a standard zip file) containing ProActive from the download section of the ProActive home page. You will be asked to accept the licence agreement and provide a few personal details including your email address. You will then within a few minutes receive an email.

Unzip the archive using your favorite ZIP program, such as Winzip under Windows or the unzip command-line utility on most Unix systems. Unzipping the archive creates a ProActive directory and all the files contained in the archive go into this directory and its subdirectories.

Here is a quick overview of the directory structure of the archive:

Directory or File | Description |

| ProActive bytecode that you need to include in the CLASSPATH in order to use ProActive |

| The bytecode and resources of all examples included

with ProActive. This jar file needs to be included in the

CLASSPATH only when trying to run the examples. All examples rely

on ProActive and therefore the |

| The bytecode and resources of IC2D. This jar file

needs to be included in the CLASSPATH only when trying to run the

application IC2D. IC2D relies on ProActive and therefore the

|

| The external libraries used by ProActive |

| ProActive documentation including the full api doc |

| Unix sh scripts for running the examples |

| Windows .bat batch files for running the examples |

| For source version only, the full source code of ProActive |

| For source version only, the scripts to compile ProActive using Ant. |

Table 2.1. ProActive.zip contents

You can try to run the test applications provided with ProActive.

Each example comes with a script to launch the application. Depending on

you operating system, the script you need to launch is located either in

ProActive/scripts/unix or

ProActive/scripts/windows. The source code of all

examples can be found in the directory

ProActive/src/org/objectweb/proactive/examples.

A simple example

script :

hello.shorhello.batsource :

examples/hello

This example is the ProActive version of the Readers/Writers canonical problem. To illustrate the ease-of-use of the ProActive model, different synchronization policies can be applied without even stopping the application. This example is based on a easy to use Swing GUI.

script :

readers.shorreaders.batsource :

examples/readers

This example is one possible implementation of the well-known Dining Philosophers synchronization problem. This example is based on a easy to use Swing GUI.

script :

philosophers.shorphilosophers.batsource :

examples/philosophers

Note that if you use the scripts provided with the distribution to run the examples you do not need to update your classpath.

In order to use ProActive in your

application you need to place in your CLASSPATH the

following jars files :

lib/ProActive.jarThe library itself.lib/javassist.jar in lib directory. It is used to handle bytecode manipulation.

lib/log4j.jarLog4j is the logging mechanism used in ProActive.lib/xercesImpl.jarXerces is the library used to parse and validate xml files, like Deployment Descriptors, Configuration files and Component files (see Chapter 21, XML Deployment Descriptors, Chapter 20, ProActive Basic Configuration, and Chapter 28, Components introduction).lib/components/fractal.jar Fractal is the component model used for ProActive Components (see Chapter 28, Components introduction).

lib/bouncycastle.jarThis library is used by the ProActive security framework (see Chapter 37, ProActive Security Mechanism).

You do not need to modify your CLASSPATH permanently as long as you include the two entries above using a Java IDE or a shell script.

In addition to the jar files above you may want to add the following jar files. None of them are used directly by the core functionnalities of ProActive but only in part of the library. Their are needed to compile all the code but they are not needed at runtime if those specific functionnalities are not used.

lib/jsch.jar Used when tunneling with rmissh.

lib/jini/*.jar Used to interface with Jini.

lib/globus/*.jar Used to interface with Globus.

lib/components/*.jar Used by the the Fractal components.

lib/ws/*.jar Used by the Web Services features in ProActive.

lib/ibis.jar Used by Ibis if configured as communication protocol.

lib/unicore/*.jar Used when deploying to a unicore site.

lib/glite/*.jar Used to deploy on gLite sites.

If you use the scripts provided with the distribution to run the examples an existing policy file named proactive.java.policy will be used by default.

See Permissions in the JavaTM 2 SDK to learn more about Java permissions. The option -Djava.security.policy=pathToFile will specify which policy file to use within proactive. As a first approximation, you can create a simple policy file granting all for everything :

grant {

permission java.security.AllPermission;

}; ![[Note]](images/note.png) | Note |

|---|---|

If you use the scripts provided with the distribution to run the

examples an existing log4j file named |

# the default logging level is INFO

log4j.rootLogger=INFO, A1

#A1 uses PatternLayout

#and displays the associated message (%m)

#using the platform dependant separator (%n)

#Use %M for method names

#see log4j documentation for details

log4j.appender.A1=org.apache.log4j.ConsoleAppender

log4j.appender.A1.layout=org.apache.log4j.PatternLayout

log4j.appender.A1.layout.ConversionPattern=%m %n

#this appender displays :

# %c : name of logger,

# %C : name of the class,

# %M : method name,

# %L : line number, and the message with the following pattern

#log4j.appender.A1.layout.ConversionPattern=%c - %C{1}@%M,line %L :%n %m%n

########### Change here default logging level on a

########### per-logger basis

########### usage is log4j.logger.className=Level, Appender

Example 2.1. A simple proactive-log4j file

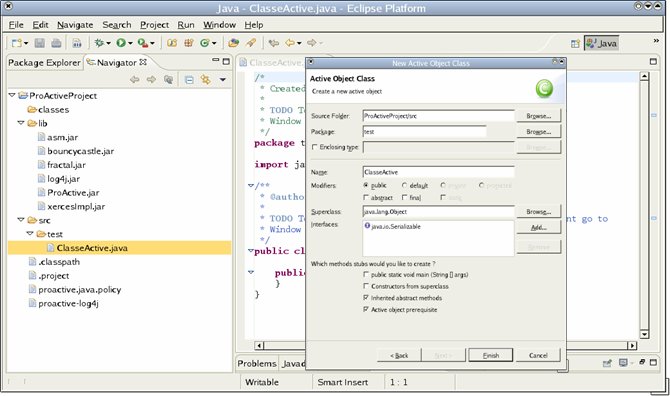

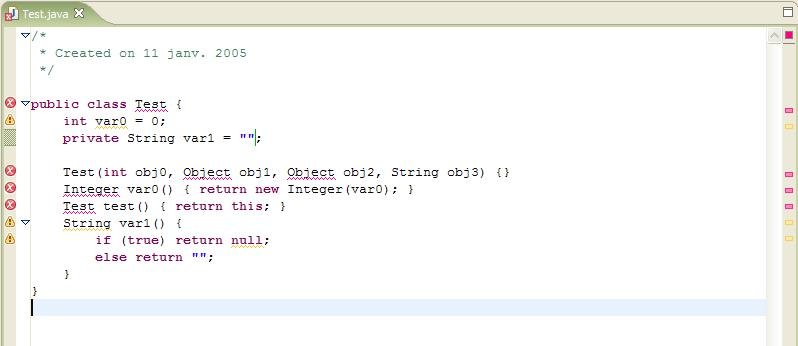

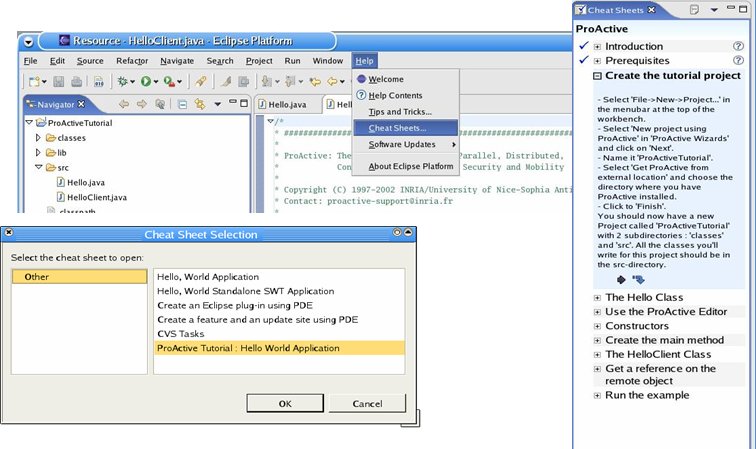

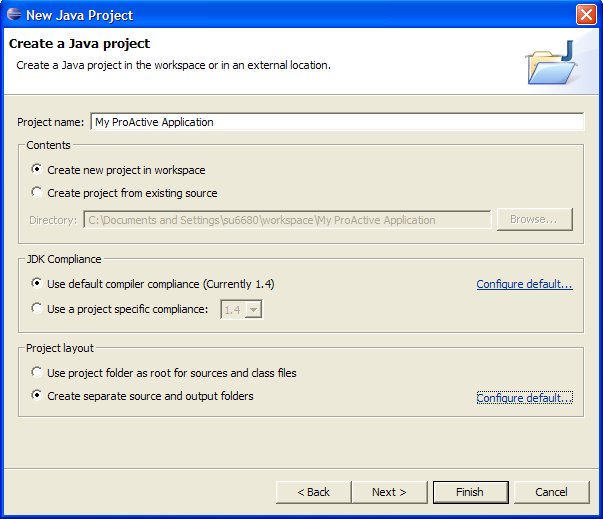

We recommend you use the Eclispe IDE to develop your ProActive applications. You can get this tool on the Eclipse website Just unzip and launch the eclipse executable. In order to develop your own ProActive application, you will need to create an eclipse project :

File -> New ... -> Project

Then choose Java Project . A wizard should appear and ask you to enter the project name :

In order to separate class files from source files (it can be useful if you write scripts which refer to these classes), you can check the Create separate source and ouput folders in the Project Layout Frame, and click on configure default ... to choose the folders names. Once you have choosen all informations about Project Name , Project location , you can click on Next .

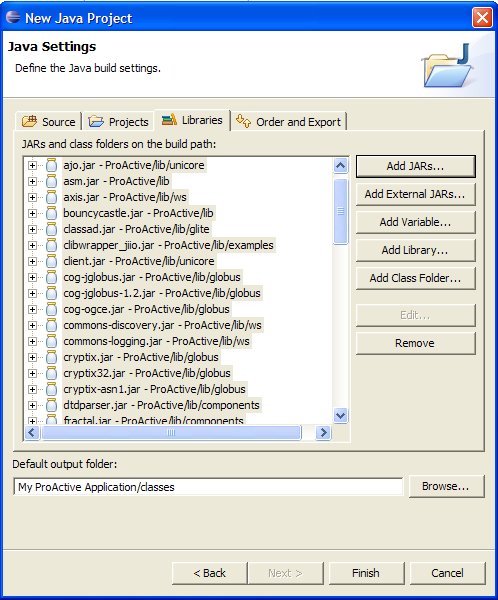

You have to specify some java settings in order to set the application classpath. Select the Librairies tab and click on the Add External Jar... button. Add the ProActive.jar and the librairies contained in the lib/ directory of the ProActive distribution.

The Librairies tab should look like this :

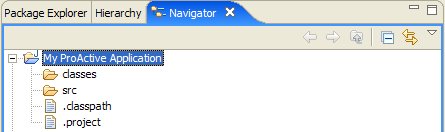

You can see now on the navigator tab on the left side, that there is a new Project with the source and output folders you've just created :

You are now able to create classes and packages that use the ProActive library.

![[Note]](images/note.png) | Note |

|---|---|

There is one file which is problematic with Eclipse:

compile$

build compile), there are no problems. The script checks the

java3d installation before compiling the 3d class. When java3d is not

installed, the nbody example only works only in 2d. |

All is now configured to create your ProActive application. Click on the Finish button.

We are currently developing an Eclipse plugin that will help developers to easily create ProActive applications. Have a look at the plugin documentation page, Chapter 42, IC2D: Interactive Control and Debugging of Distribution and Eclipse plugin .

If you encounter any problem with installing ProActive and running the examples, please make sure you correctly followed all the steps described above. If it doesn't help, here is a list of the most common mistakes:

Permission denied when trying to launch scripts under Linux Permissions do not allow to execute files. Just change the permissions with chmod 755 *.sh

Java complains about not being able to find ProActive's classes. Your

CLASSPATHenvironment variable does not contain the entry for the ProActive's or ASM's or Log4j's or Xerces' or Fractal's or BouncyCastle's classes.ProActive.jar,asmXX.jar, log4j.jar, xercesImpl.jar, fractal.jar, bouncycastle.jarmust be in yourCLASSPATH.Java complains about denial of access. If you get the following exceptions, you probably didn't change the file java.policy as described in Section 2.5, “Create a java.policy file to set permissions”.

org.objectweb.proactive.NodeException: java.security.AccessControlException: access denied (java.net.SocketPermission 127.0.0.1:1099 connect,resolve) at org.objectweb.proactive.core.node.rmi.RemoteNodeImpl.<init>(RmiNode.java:17) at org.objectweb.proactive.core.node.rmi.RemoteNodeFactory._createDefaultNode (RmiNodeFactory.java, Compiled Code) at org.objectweb.proactive.core.node.NodeFactory.createDefaultNode(NodeFactory.java:127) at org.objectweb.proactive.core.node.NodeFactory.getDefaultNode(NodeFactory.java:57) at org.objectweb.proactive.ProActive.newActive(ProActive.java:315) ... Exception in thread "main" java.lang.ExceptionInInitializerError: java.security.AccessControlException: access denied (java.util.PropertyPermission user.home re ad) at java.security.AccessControlContext.checkPermission (AccessControlContext.java, Compiled Code) at java.security.AccessController.checkPermission(AccessController.java:403) at java.lang.SecurityManager.checkPermission(SecurityManager.java:549) at java.lang.SecurityManager.checkPropertyAccess(SecurityManager.java:1243) at java.lang.System.getProperty(System.java:539) at org.objectweb.proactive.mop.MOPProperties.createDefaultProperties (MOPProperties.java:190) ...Java complains log4j initialization If you get the following message, you probably made a mistake when giving the

-Dlog4j.configurationproperty to the java command. Be sure that the given path is right, try also to add file: before the path.log4j:WARN No appender could be found for logger ..... log4j:WARN Please initialize the log4j system properlyExamples and compilation do not work at all under Windows system: Check if your java installation is not in a path containing spaces like C:\Program Files\java or C:\Documents and Settings\java. Batch scripts, indeed, do not run properly when JAVA_HOME is set to such a directory. To get rid of those problems, the best thing to do is to install the jdk under a space-free directory and path (e.g. C:\java\j2sdk.... or D:\java\j2sdk... ) and then set the JAVA_HOME environment variable accordingly.

If you cannot solve the problem, feel free to email us for support

at <proactive@objectweb.org>. Make sure that you include a

precise description of your problem along with a full copy of the error

message you get.

In this section we present common problems encountered while trying to use ProActive. For further assistance, please post your question on the ProActive mailing list proactive@objectweb.org .

To enable the debuging logger the following log file can be used:

-Dlog4j.configuration=file:ProActive/compile/proactive-log4j

In this file, the relevant loggers can be uncommented (by removing the leading #). For example, the deployment loggers are activated with the following lines:

log4j.logger.proactive.deployment = DEBUG, CONSOLE log4j.logger.proactive.deployment.log = DEBUG, CONSOLE log4j.logger.proactive.deployment.process = DEBUG, CONSOLE

To function properly, ProActive requires machines to have a correctly configured hostname and domain name. If the names of a machines is not properly configured, then remote nodes will be unable to locate the machine.

To test if the involved machines are properly configured, in L(U)nix you can run the following commands:

$>hostname localhost //This is an error! $>hostname -i 127.0.0.1 //This is an error!

hostname should print the hostname of the machine as known by the other hosts, and hostname -i should return the network interface accessible by other machines.

To work around misconfigured domain names ProActive can be activated to use IP adresses through the following java property:

-Dproactive.useIPaddress=true

This property should be given as parameter to java virtual machines deployed on machines who's names can not be properly resolved.

ProActive provides rmi tunneling through ssh for crossing firewalls that only allow ssh connections. Things to verify when using rmissh tunneling:

ProActlive/lib/jsch.jar must be uncluded in the classpath of the concerned machines.

The jvm that is only accesible with ssh must be started using: -Dproactive.communication.protocol=rmissh

A key without a passhprase must be installed on the machine accepting connections with ssh. It should be possible to log into the site without using an ssh-agent and without providing a password.

Methods that will be called remotely on an active object must be public. While java will impose this restriction between classes of different types, this problem usually takes place when invoking a remote method on an object of the same type.

class A{

public void foo(A a){

...

a.bar(); //This call will not be handled by the remote active object!!!

...

}

private void bar(){ //To fix this, change this method to public.

...

}

}This tour is a practical introduction to ProActive, giving an illustrated introduction to some of the functionality and facilities offered by the library, by means of a step-by-step tutorial.

First off, we give an explaination on how to install and configure ProActive, in Section 4.2, “Installation and setup”.

Next are introduced several features of the library through some running examples, in Chapter 5, Introduction to ProActive Features.

Then are given some details on how this is put down in code, and you will be challenged to write your bits of code, in Chapter 6, Hands-on programming. This should give you practical experience on how to program using ProActive.

Chapter 8, SPMD PROGRAMMING, will show how to use the OO-SPMD (Object-Oriented Single Program Multiple Data) programming paradigm.

The second-last part is the complete N-Body example, in Chapter 9, The nbody example. This application is first written trivially, then some speed-ups are inserted, to show how ProActive can help you.

Finally, we close the tutorial off by showing some components. In Chapter 10, C3D - from Active Objects to Components, the C3D example is wrapped with components, and is this way exposed as components.

We hope this will help your understanding of the library and the concepts driving it.

If you need further details on how the examples work, check the ProActive applications page.

Follow the instructions for downloading and installing ProActive, in Chapter 2, ProActive Installation.

The programming exercises in the first part imply that you:

Don't forget to add the required libraries to your classpath (i.e. the libraries contained in the ProActive/lib directory, as well as either the proactive.jar archive, or the compiled classes of proactive (better if you modify the source code)

use a policy file, such as ProActive/scripts/proactive.security.policy, with the JVM option -Djava.security.policy=/filelocation/proactive.java.policy

Set the CLASSPATH as follow, putting on one line:

Under linux:

export CLASSPATH=.:./ProActive_examples.jar:./ProActive.jar:./lib/bcel.jar:\ ./lib/asm.jar:./lib/log4j.jar:./lib/xercesImpl.jar:\ ./lib/components/fractal.jar:./lib/bouncycastle.jar

Under windows:

set CLASSPATH=.;.\ProActive_examples.jar;.\ProActive.jar;.\lib\bcel.jar;\ .\lib\asm.jar;.\lib\log4j.jar;.\lib\xercesImpl.jar;\ .\lib\components\fractal.jar;.\lib\bouncycastle.jar

Concerning the second part of the tutorial (examples of some functionalities):

Note that the compilation is managed by Ant ; we suggest you use this tool to make modifications to the source code, while doing this tutorial. Nevertheless, you can just change the code and recompile using

compile.sh(orcompile.batunder windows)The examples used in the second part of this tutorial are provided in the /scripts directory of the distribution.

The scripts are platform dependant:

.shfiles on linux are equivalent to the.batfiles on windows.

This chapter will present some of the features offered by ProActive, namely:

parallel processing: how you can run several tasks in parallel.

synchronization: how you can synchronize tasks.

migration: how you can migrate Active Objects.

Distribution is often used for CPU-intensive applications, where parallelism is a key for performance.

A typical application is C3D.

Note that parallelisation of programs can be facilitated with ProActive, thanks to asynchronous method calls (see Section 13.8, “Asynchronous calls and futures”), as well as group communications (see Chapter 14, Typed Group Communication).

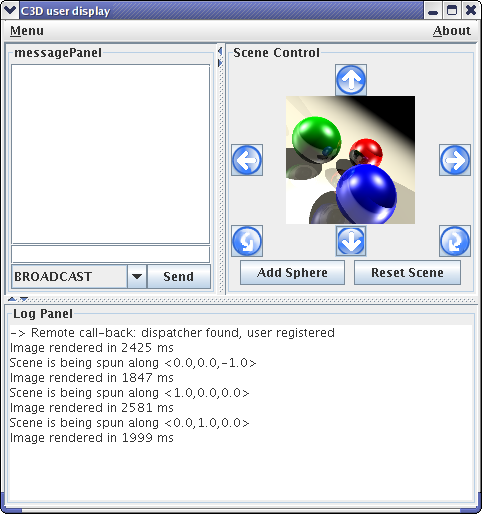

C3D is a Java benchmark application that measures the performance of a 3D raytracer renderer distributed over several Java virtual machines using Java RMI. It showcases some of the benefits of ProActive, notably the ease of distributed programming, and the speedup through parallel calculation.

Several users can collaboratively view and manipulate a 3D scene. The image of the scene is calculated by a dynamic set of rendering engines using a raytracing algorithm, everything being controlled by a central dispatcher.

Using the script c3d_no_user, a "Dispatcher"

object is launched (ie a centralized server) as well as 4 "Renderer"

objects, which are active objects to be used for parallel

rendering.

The bottom part of the window allows to choose which renderers should participate in the rendering. You may want to stop using a given machine (because for instance it is overloaded), and thus remove it from the renderers used in the current computation.

Using c3d_add_user,

Connect on the current host (proposed by default) by just giving your name.

For example, the user 'alice'

Spin the scene, add a random sphere, and observe how the action takes place immediately

Add and remove renderers, and observe the effect on the 'speed up' indication from the user window.

Which configuration is the fastest for the rendering?

Are you on a multi-processor machine?

![[Note]](images/note.png) | Note |

|---|---|

You might not perceive the difference of the performance. The difference is better seen with more distributed nodes and objects (for example on a cluster) . |

Using the c3d_add_user script, and

specifying the host (set to local host

bydefault)

If you use rlogin, make sure the DISPLAY is properly set. You must use the same version of ProActive on both machines!

Test the collaborative behavior of the application when several users are connected.

Notice that a collaborative consensus must be reached before starting some actions (or that a timeout occured).

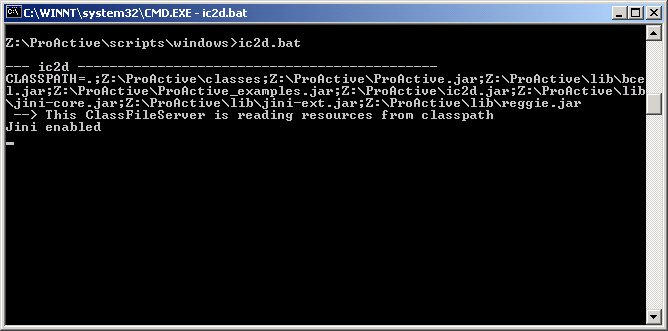

You will need at first to start IC2D using either ProActive/scripts/unix/ic2d.sh or ProActive/scripts/windows/ic2d.bat depending on your environment.

In order to visualize all Active objects, you need to acquire ('Monitoring/Monitor a new RMI host' menu):

The machine on which you started the 'Dispatcher'

The machine on which you started the second user

You'll need to type in the edit field asking it the name of each machine and the RMI port being used separated by a colon.

Add random spheres for instance, and observe messages (Requests) between Active Objects.

Add and remove renderers, and check graphically whether the corresponding Active Objects are contacted or not, in order to achieve the rendering.

You can textually visualize this information by activating 'add event timeline for this WorldObject' on the World panel with the right mouse button, and then 'show the event list window' on the top menu window

From IC2D, you can drag-and-drop active objects from one JVM to another. Click the right button on a C3DRenderingEngine, and drag and drop it in another JVM. Observe the migration taking place.

Add a new sphere, using all rendering engines, and check that the messages are still sent to the active object that was asked to migrate.

As migration and communications are implemented in a fully compatible manner, you can even migrate with IC2D an active object while it is communicating (for instance when a rendering action is in progress). Give it a try!

![[Note]](images/note.png) | Note |

|---|---|

You can also migrate Active Objects which create a GUI. If you do that for the User, you will see the graphical window beiung destroyed, and rebuilt once more. |

Manually you can start a new JVM - a 'Node' in the ProActive terminology - that will be used in a running system.

On a different machine, or by remote login on another host, start another Node, named for instance NodeZ

linux> startNode.sh rmi://mymachine/NodeZ & windows> startNode.bat rmi://mymachine/NodeZ

The node should appear in IC2D when you request the monitoring of the new machine involved (Monitoring menu, then 'monitor new RMI host'.

The node just started has no active object running in it. Drag and drop one of the renderers, and check that the node is now taking place in the computation

Spin the scene to trigger a new rendering

See the topology

![[Note]](images/note.png) | Note |

|---|---|

If you feel uncomfortable with the automatic layout, switch to manual using the 'manual layout' option (right click on the World panel). You can then reorganize the layout of the machines. |

To fully distribute the computation, start several nodes (you need 2 more) and drag-and drop renderers in them.

Depending on the machines you have, the complexity of the image, look for the most efficient configuration.

You can also write components with the Fractive API, which is an implementation of Fractal in ProActive. You should check the section on components for more information (Part V, “Composing”). There is a long explanation of the C3D component version (Chapter 10, C3D - from Active Objects to Components). The visual aspect is very similar to the standard Active Object C3D version. That's on purpose, to show how easy it is to transform code into components. If you want to run a components version of c3d, try this:

scripts/unix/components$ ./c3d.sh scripts/windows/components$ c3d.bat

The component binding is done through the Fractal ADL, which is a standard way of writing components through an xml file. You can have a visual representation with IC2D (start IC2D, menu->Components->Start the components GUI). You should specify how to read the file. You have to enter:

File->Storage:

ProActive/src.File->Open:

ProActive/src/org/objectweb/proactive/examples/components/c3d/adl/userAndComposite.fractal.

The main classes of this application are:

org.objectweb.proactive.examples.c3d.C3DUser.java

org.objectweb.proactive.examples.c3d.C3DRenderingEngine.java

org.objectweb.proactive.examples.c3d.C3DDispatcher.java

In the Dispatcher, look at the method public void

rotateScene(int i_user, String i_user_name, Vec angle) that

handles election of the next action to undertake.

ProActive provides an advanced synchronization mechanism that allows an easy and safe implementation of potentially complex synchronization policies.

This is illustrated by two examples:

The readers and the writers

The dining philosophers

The readers and the writers want to access the same data. In order to allow concurrency while ensuring the consistency of the readings, accesses to the data have to be synchronized upon a specified policy. Thanks to ProActive, the accesses are guaranteed to be allowed sequentially.

The implementation with ProActive uses 3 active objects: Reader, Writer, and the controller class (ReaderWriter).

ProActive starts a node (i.e. a JVM) on the current machine, and creates 3 Writer, 3 Reader, a ReaderWriter (the controller of the application) and a ReaderDisplay, that are active objects.

What happens when priority is set to 'reader priority'?

in

org.objectweb.proactive.examples.readers.ReaderWriter.java.

More specifically, look at the routines in:

public void evenPolicy(org.objectweb.proactive.Service service)

public void readerPolicy(org.objectweb.proactive.Service service)

public void writerPolicy(org.objectweb.proactive.Service service)

Look at the inner class MyRequestFilterm that

implements

org.objectweb.proactive.core.body.request.RequestFilter.

How does it work?

For instance, let several writers go through at the same time.

Observe the Writer Policy policy before recompiling

Recompile (using compile.sh readers or compile.bat readers)

Observe that stub classes are regenerated and recompiled

Observe the difference due to the new synchronization policy: what happens now?

Correct the bug and recompile again ; check that everything is back to normal

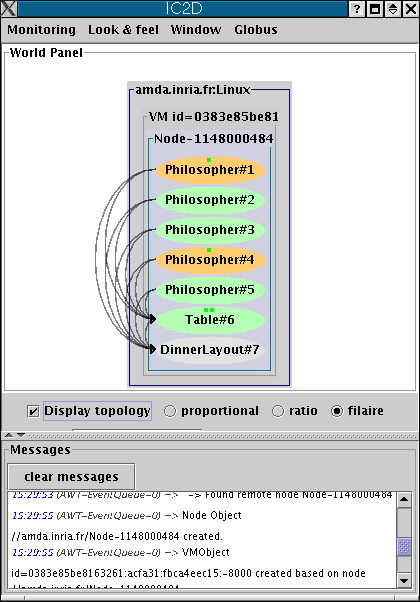

The 'dining philosophers' problem is a classic exercise in concurrent programming. The goal is to avoid deadlocks.

We have provided an illustration of the solution using ProActive, where all the philosophers are active objects, as well as the table (controller) and the dinner frame (user interface).

ProActive creates a new node and instantiates the active objects of the application: DinnerLayout, Table, and Philosopher

The pictures represent the state of the philosophers. They can be:

philosophing

philosophing hungry, wants the

fork!

hungry, wants the

fork! eating

eating

The forks can have two states:

taken

taken free

free

Click on the philosophers' heads to switch their modes

Test that there are no deadlocks!

Test that you can starve one of the philosophers (i.e. the others alternate eating and thinking while one never eats!)

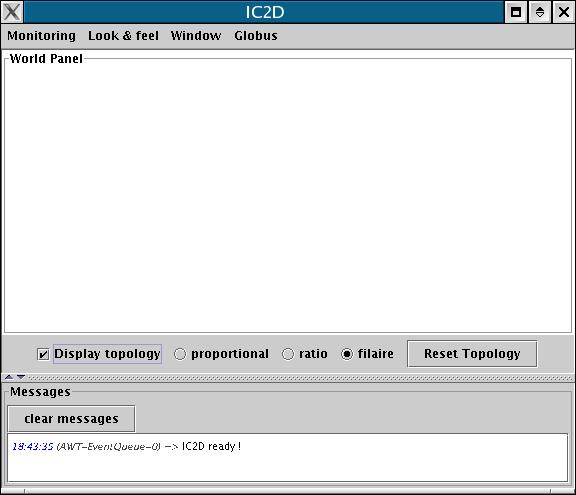

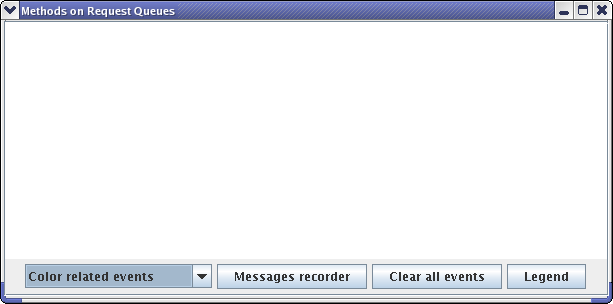

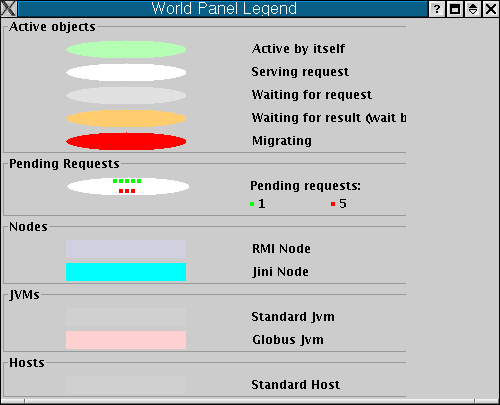

IC2D is a graphical environment for monitoring and steering of distributed and Grid Computing applications.

Being in the autopilot mode, start the IC2D visualization application (using ic2d.sh or ic2d.bat)

The ic2d GUI is started. It is composed of 2 panels: the main panel and the request queue panel

Acquire your current machine

It is possible to visualize the status of each active object (processing, waiting etc...), the communications between active objects, and the topology of the system (here all active objects are in the same node):

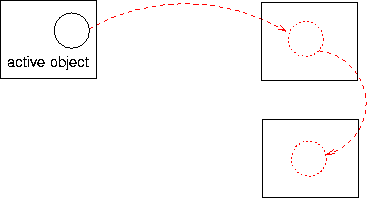

ProActive allows the transparent migration of objects between virtual machines.

A nice visual example is the penguin's one.

This example shows a set of mobile agents moving around while still communicating with their base and with each other. It also features the capability to move a swing window between screens while moving an agent from one JVM to the other.

Using the ic2d script

Acquire the machines you have started nodes on

On the Advanced Penguin Controller window: button 'add agent'

An agent is materialized by a picture in a java window.

Select it, and press button 'start'

Observe that the active object is moving between the machines, and that the penguin window disappears and reappears on the screen associated with the new JVM.

After selecting them, use the buttons to:

Communicate with them ('chained calls')

Start, stop, resume them

Trigger a communication between them ('call another agent')

Start the same script on a different computer, using another screen and keyboard

Monitor the corresponding JVM with IC2D

Drag-and-drop the active object 'AdvancedPenguinController' with IC2D into the newly created JVM: the control window will appear on the other computer

And its user can now control the penguins application.

Still with IC2D, doing a drag-and-drop back to the original JVM, you will be able to get back the window, and control yourself the application.

You've already seen quite sophisticated examples in the section Chapter 5, Introduction to ProActive Features. Here is an introduction to programming with ProActive.

The program that we will develop is a classic 'helloworld' example. We will increase the complexity of the example, so you get more familiar with different features of ProActive.

First, we will code a 'client-server' application, the server being an active object.

Second, we will see how we can control the activity of an active object.

Third, we will add mobility to this active object.

Eventually, we will attach a graphical interface to the active object, and will show how to move the widget between virtual machines (like in the penguin example).

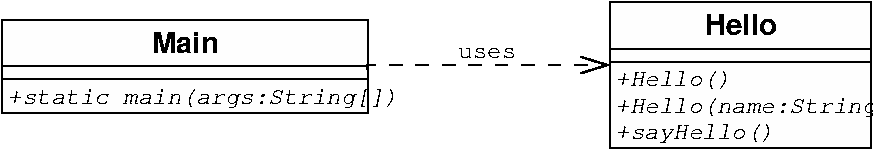

This example implements a very simple client-server application. It

has an in-depth explanation in Section 13.10, “The Hello world example”; you might

wish to skim through it. Summarized, it is a client object displaying a

StringWrapper received from a remote server.

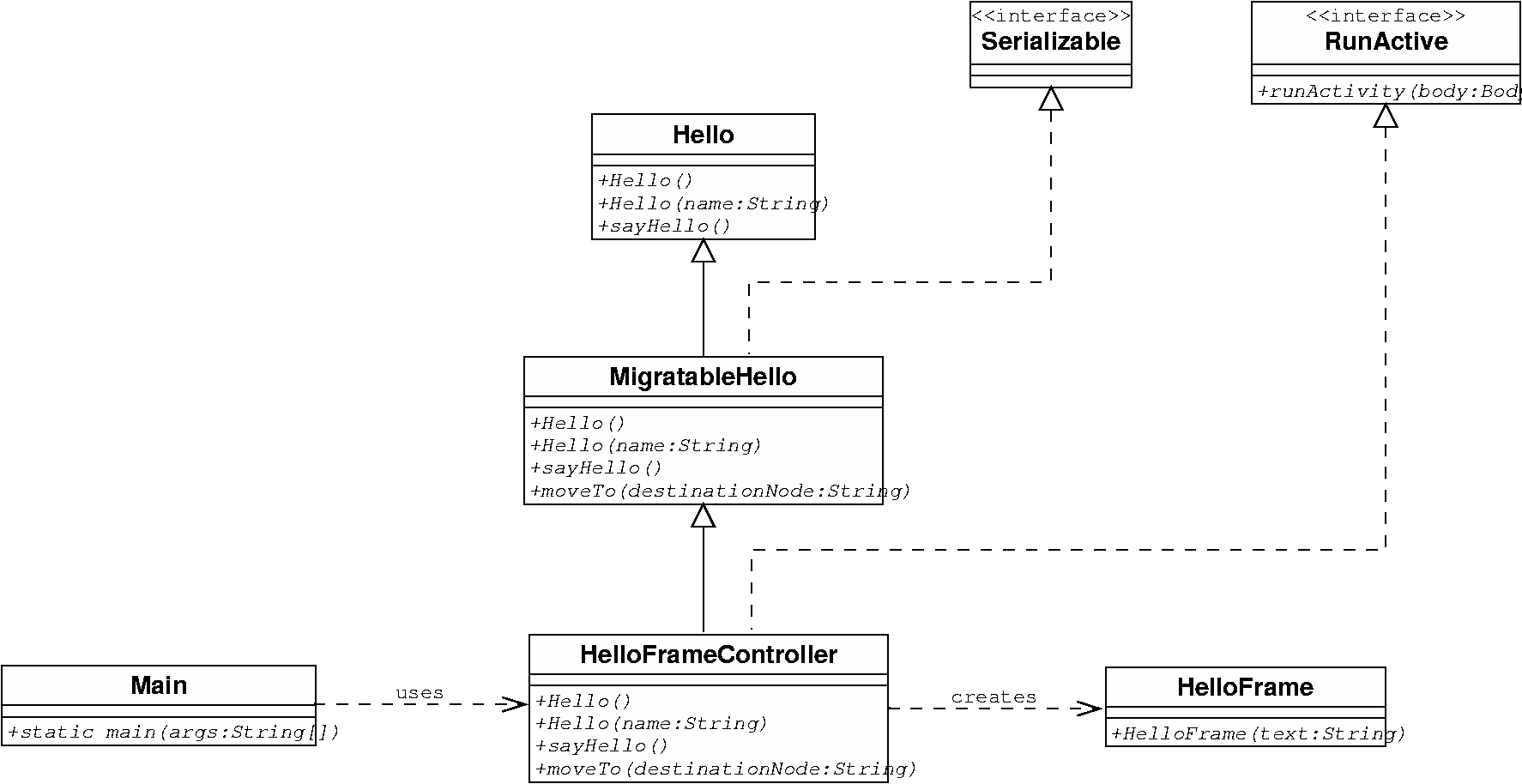

The corresponding class diagram is the following:

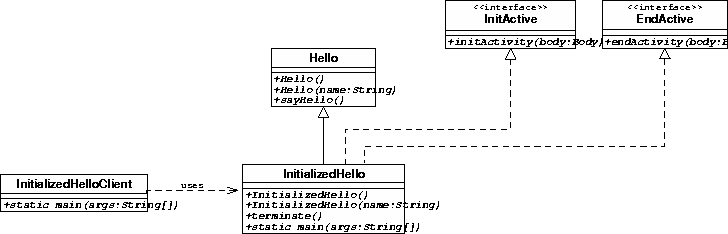

Active objects, as their name indicates, have an activity of their own (an internal thread).

It is possible to add pre and post processing to this activity, just by implementing the interfaces InitActive and EndActive, that define the methods initActivity and endActivity.

The following example will help you to understand how and when you can initialize and clean the activity.

When instantiated, the activity of an object is automatically started, but it will first do what is written in the initActivity method.

Ending the activity can only be done from inside the active object (i.e. from a call to its own body). This is the reason why we have written a terminate method in the following example.

The InitializedHello class extends the Hello class, and implements the interfaces InitActive and EndActive.It acts a a server for the InitializedHelloClient class.

The main method is overriden so that it can instantiate the InitializedHello class

The source code of the InitializedHello class is in Example C.28, “InitializedHello.java”.

initActivity and endActivity here just log messages onto the console, so you can see when they are called.

initActivity is called at the creation of the active object, while endActive is called after the activity has terminated (thanks to the method terminate).

Here is the initActivity method:

public void initActivity(Body body) { System.out.println("I am about to start my activity"); }

Here is the endActivity method:

public void endActivity(Body body) { System.out.println("I have finished my activity"); }

The following code shows how to terminate the activity of the active object:

public void terminate() throws IOException { // the termination of the activity is done through a call on the // terminate method of the body associated to the current active object ProActive.getBodyOnThis().terminate(); }

The only differences from the the previous example is the classes instantiated, which are now InitializedHello (and not Hello) and InitializedHelloClient, and you will add a call to hello.terminate().

The source code of InitializedHello is in Example C.28, “InitializedHello.java”, and the code for InitializedHelloClient is in Example C.29, “InitializedHelloClient.java”.

So, create InitializedHelloClient.java and InitializedHello.java in src/org/objectweb/proactive/examples/hello

Now compile all proactive sources

cd compile windows>build.bat examples linux>build examples cd ..

Add './classes' directory to CLASSPATH to use these two new source files

windows>set CLASSPATH=.;.\classes;.\ProActive_examples.jar;.\ProActive.jar;.\lib\bcel.jar;.\lib\asm.jar;.\lib\log4j.jar;\

.\lib\xercesImpl.jar;.\lib\fractal.jar;.\lib\bouncycastle.jar

linux>export CLASSPATH=.:./classes:./ProActive_examples.jar:./ProActive.jar:./lib/bcel.jar:./lib/asm.jar:./lib/log4j.jar:\

./lib/xercesImpl.jar:./lib/fractal.jar:./lib/bouncycastle.jarExecution is similar to the previous example; just use the InitializedHelloClient client class and InitializedHello server class.

linux> java -Djava.security.policy=scripts/proactive.java.policy \

-Dlog4j.configuration=file:scripts/proactive-log4j

org.objectweb.proactive.examples.hello.InitializedHello

windows> java -Djava.security.policy=scripts\proactive.java.policy \

-Dlog4j.configuration=file:scripts\proactive-log4j \

org.objectweb.proactive.examples.hello.InitializedHello &linux> java -Djava.security.policy=scripts/proactive.java.policy

-Dlog4j.configuration=file:scripts/proactive-log4j \

org.objectweb.proactive.examples.hello.InitializedHelloClient //localhost/Hello

windows> java -Djava.security.policy=scripts\proactive.java.policy \

-Dlog4j.configuration=file:scripts\proactive-log4j

org.objectweb.proactive.examples.hello.InitializedHelloClient //localhost/Hello This program is a very simple one: it creates an active object that migrates between virtual machines. It is a extension of the previous client-server example, the server now being mobile.

The conditions for MigratableHello to be a migratable active object are:

- it must have a constructor without parameters: this is a result of a ProActive restriction : the active object having to implement a no-arg constructor. </ p>

- implement the Serializable interface (as it will be transferred through the network).</>

Hello, the superclass, must be able to be serialized, in order to be transferred remotely. It does not have to implement directly java.io.Serializable, but its attributes should be serializable - or transient. For more information on this topic, check Chapter 16, Active Object Migration .

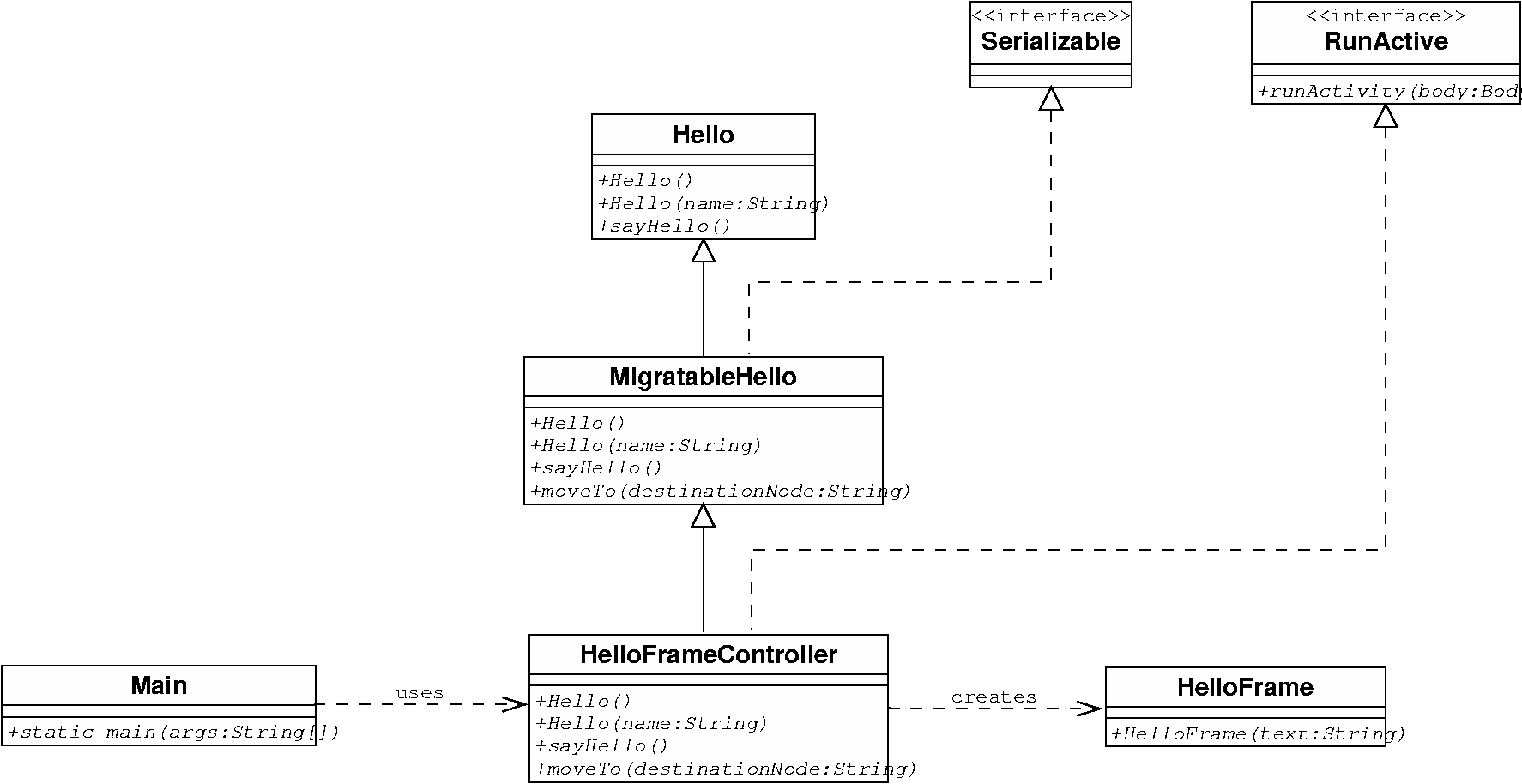

We want to further enhance InitializedHello it by making migratable: we'd like to be able to move it across virtual machines.

Thus, we create a MigratableHello class, that derives from InitializedHello. This class will implement all the non-functionnal behavior concerning the migration, for which this example is created. The Hello class (and InitializedHello) is left unmodified.

Note that the migration has to be initiated by the active object itself. This explains why we have to write the moveTo method in the code of MigratableHello - i.e. a method that contains an explicit call to the migration primitive. (cf Chapter 16, Active Object Migration for migration documentation )

MigratableHello also implements a factory method for instanciating

itself as an active object : static MigratableHello

createMigratableHello(String: name)

The class diagram for the application is the following:

The code of the MigratableHello class is in Example C.30, “MigratableHello.java”.

MigratableHello derives from the Hello class from the previous example

MigratableHello being the active object itself, it has to:

- implement the Serializable interface

- provide a no-arg constructor

- provide an implementation for using ProActive's migration mechanism.

A new method getCurrentNodeLocation is added for the object to tell the node where it resides..

A factory static method is added for ease of creation.

The migration is initiated by the moveTo method:

/** method for migrating * @param destination_node destination node */ public void moveTo(String destination_node) { System.out.println("\n-----------------------------"); System.out.println("starting migration to node: " + destination_node); System.out.println("..."); try { // THIS MUST BE THE LAST CALL OF THE METHOD ProActive.migrateTo(destination_node); } catch (MigrationException me) { System.out.println("migration failed: " + me.toString()); } }

Note that the call to the ProActive primitive

migrateTo is the last one of the method moveTo. See

Chapter 16, Active Object Migration for more information.

The entry point of the program is written in a separate class: MigratableHelloClient (see Example C.31, “MigratableHelloClient.java”).

It takes as arguments the locations of the nodes the object will be migrated to.

The program calls the factory method of MigratableHello to create an instance of an active object. It then moves it from node to node, pausing for a while between the transfers.

- start several nodes using the startnode

script.

windows>cd scripts/windows

startNode.bat //localhost/n1

startNode.bat //localhost/n2

linux>cd scripts/linux

./startNode.sh //localhost/n1

./startNode.sh //localhost/n2 - compile and run the program (run MigratableHelloClient), passing in parameter the urls of the nodes you'd like the agent to migrate to.

cd compile windows>build.bat examples linux>build examples cd ..

linux>java

-Djava.security.policy=scripts/proactive.java.policy

-Dlog4j.configuration=file:scripts/proactive-log4j

org.objectweb.proactive.examples.hello.MigratableHelloClient

//localhost/n1 //localhost/n2

windows>java

-Djava.security.policy=scripts\proactive.java.policy

-Dlog4j.configuration=file:scripts\proactive-log4j

org.objectweb.proactive.examples.hello.MigratableHelloClient

//localhost/n1 //localhost/n2

- observe the instance of MigratableHello migrating:

During the execution, a default node is first created. It then hosts the created active object. Then the active object is migrated from node to node, each time returning 'hello' and telling the client program where it is located.

Graphical interfaces are not serializable, yet it is possible to migrate them with ProActive.

The idea is to associate the graphical object to an active object. The active object will control the activation and desactivation of this graphical entity during migrations.

Of course, this is a very basic example, but you can later build more sophisticated frames.

We will write a new active object class, that extends MigratableHello. The sayHello method will create a window containing the hello message. This window is defined in the class HelloFrame

The code of the HelloFrameController is in Example C.32, “HelloFrameController.java”.

This class extends MigratableHello, and adds an activity and a migration strategy manager to the object .

It creates a graphical frame upon call of the sayHello method.

Here we have a more complex migration process than with the previous example. We need to make the graphical window disappear before and reappear in a new location after the migration (in this example though, we wait for a call to sayHello). The migration of the frame is actually controlled by a MigrationStrategyManager, that will be attached to the body of the active object.. An ideal location for this operation is the initActivity method (from InitActive interface), that we override:

/** * This method attaches a migration strategy manager to the current active object. * The migration strategy manager will help to define which actions to take before * and after migrating */ public void initActivity(Body body) { // add a migration strategy manager on the current active object migrationStrategyManager = new MigrationStrategyManagerImpl((Migratable) ProActive.getBodyOnThis()); // specify what to do when the active object is about to migrate // the specified method is then invoked by reflection migrationStrategyManager.onDeparture('clean'); }

The MigrationStrategyManager defines methods such as 'onDeparture', that can be configured in the application. For example here, the method 'clean' will be called before the migration, conveniently killing the frame:

public void clean() { System.out.println("killing frame"); helloFrame.dispose(); helloFrame = null; System.out.println("frame is killed"); }

This is an example of a graphical class that could be associated with the active object (see code in Example C.33, “HelloFrame.java” ).

Create a new class HelloFrameControllerClient: take the code of MigratableHelloClient used in the previous part, change the class declaration to HelloFrameControllerClient and replace the line

MigratableHello migratable_hello =

MigratableHello.createMigratableHello("agent1");

with

MigratableHello migratable_hello =

HelloFrameController.createHelloFrameController("agent1");

Similarly to the simple migration example (use the HelloFrameControllerClient class), you will start remote nodes and specify a migration path.

you have 2 ways for handling the display of the graphical objects:

look on the display screens of the machines

export the displays: in startNode.sh, you should add the following lines before the java command:

DISPLAY=myhost:0 export DISPLAY

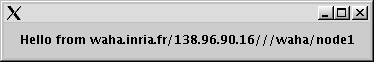

The displayed window: it just contains a text label with the location of the active object.

In this document we show how to create a distributed application to compute the number PI using the ProActive Grid Middleware. Distributed programming is achieved using the ProActive deployment framework combined with the active object model.

Download and install the JDK 5.0 Update 9 from here.

Set the environment variable JAVA_HOME to the java installation location.

Go into the tutorial directory: ProActive/src/org/objectweb/proactive/examples/pi/. This directory contains:

config/ <-- Configuration directory

descriptors/ <-- Deployment descriptors directory

doc/ <-- Documentation directory

fractal/ <-- Component directory

scripts/ <-- Launch scripts directory

Interval.java <-- The parameter passed to remote objects

PiBPP.java <-- The main code

PiComputer.java <-- The remote object code (worker)

Results.java <-- The results returned by the workers

MyPi.java <-- Base class to test Pi with ProActiveIn this step by step we will implement our own version of PiBPP.java.

Create the file MyPi.java inside the tutorial directory with initially the following content:

package org.objectweb.proactive.examples.pi; import org.objectweb.proactive.ProActive; import org.objectweb.proactive.core.descriptor.data.ProActiveDescriptor; import org.objectweb.proactive.core.descriptor.data.VirtualNode; import org.objectweb.proactive.core.group.ProActiveGroup; import org.objectweb.proactive.core.node.Node; class MyPi{ // global variables will go here public static void main(String args[]) throws Exception{ Integer numberOfDecimals = new Integer(args[0]); String descriptorPath = args[1]; // the main code will go here }

Inside the main we add the code for acquiring the resources.

ProActiveDescriptor descriptor = ProActive.getProactiveDescriptor(descriptorPath); //Parse the xml descriptor descriptor.activateMappings(); //Acquire the resources VirtualNode virtualNode = descriptor.getVirtualNode("computers-vn"); //Get the virtual node named "computers-vn" Node[] nodes = virtualNode.getNodes();

PiComputer piComputer = (PiComputer) ProActiveGroup.newGroupInParallel( PiComputer.class.getName(), new Object[] { numberOfDecimals }, nodes); int numberOfWorkers = ProActiveGroup.getGroup(piComputer).size();

Interval intervals = PiUtil.dividePI(numberOfWorkers, numberOfDecimals.intValue());

ProActiveGroup.setScatterGroup(intervals);

Result results = piComputer.compute(intervals);

Result result= PiUtil.conquerPI(results);

System.out.println("Pi:"+result);package org.objectweb.proactive.examples.pi;

import org.objectweb.proactive.ProActive;

import org.objectweb.proactive.core.descriptor.data.ProActiveDescriptor;

import org.objectweb.proactive.core.descriptor.data.VirtualNode;

import org.objectweb.proactive.core.group.ProActiveGroup;

import org.objectweb.proactive.core.node.Node;

public class MyPi {

public static void main(String args[]) throws Exception{

Integer numberOfDecimals = new Integer(args[0]);

String descriptorPath = args[1];

ProActiveDescriptor descriptor = ProActive.getProactiveDescriptor(descriptorPath);

descriptor.activateMappings();

VirtualNode virtualNode = descriptor.getVirtualNode("computers-vn");

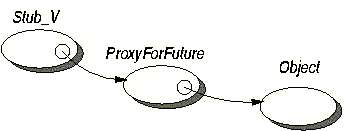

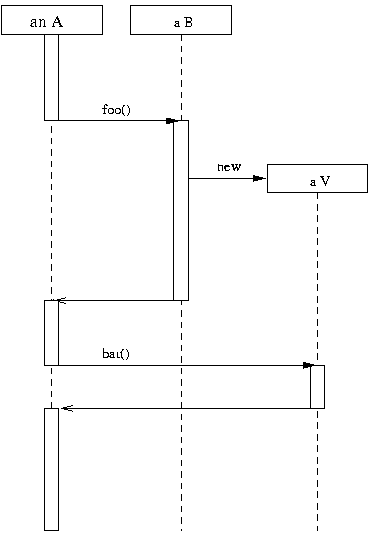

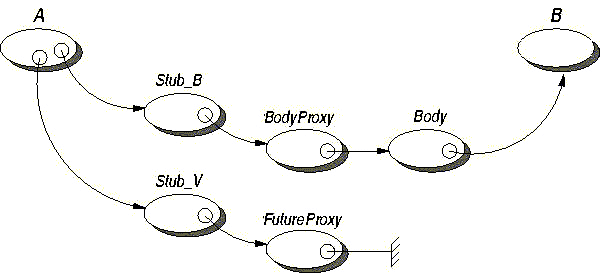

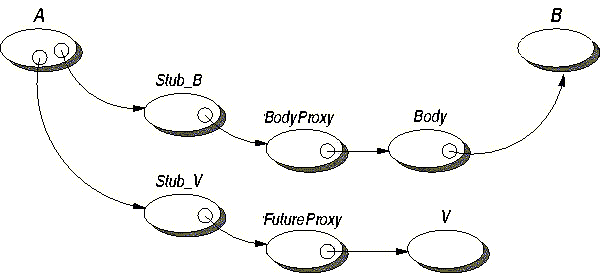

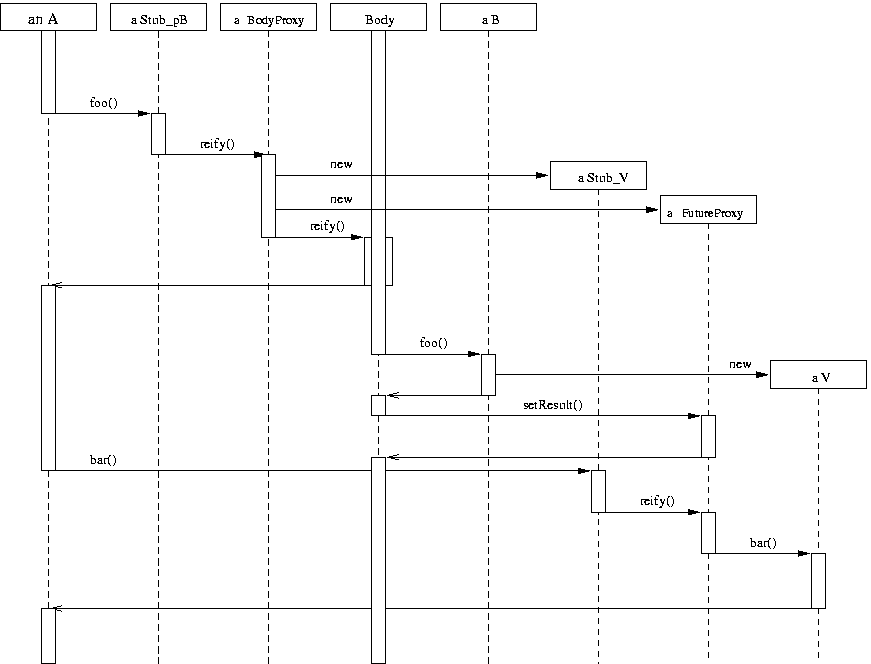

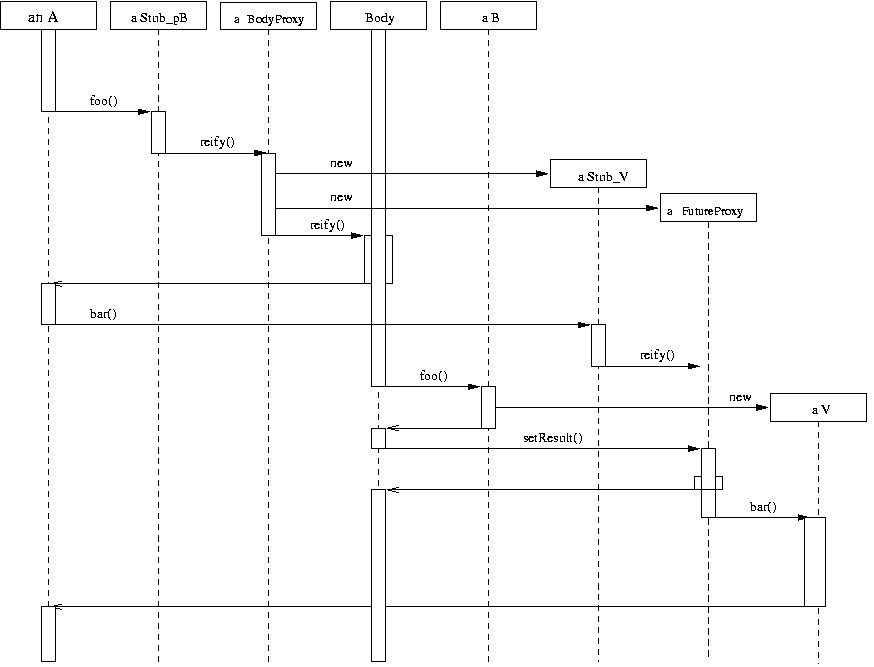

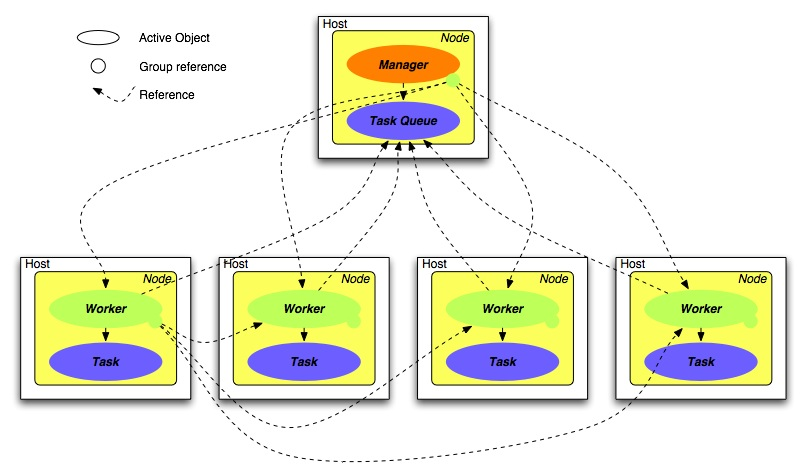

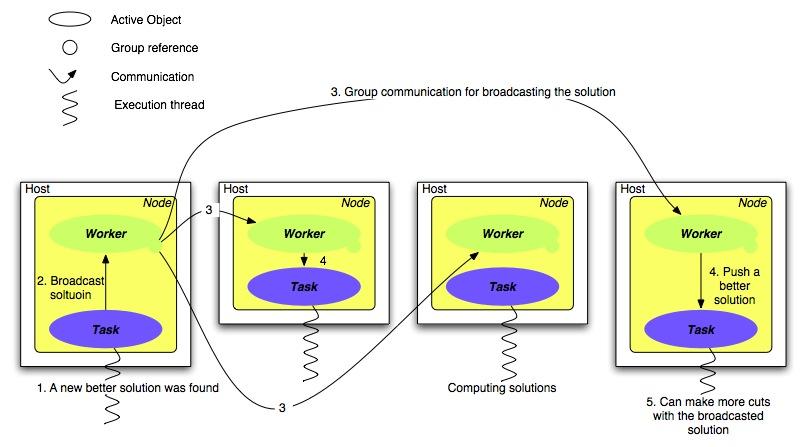

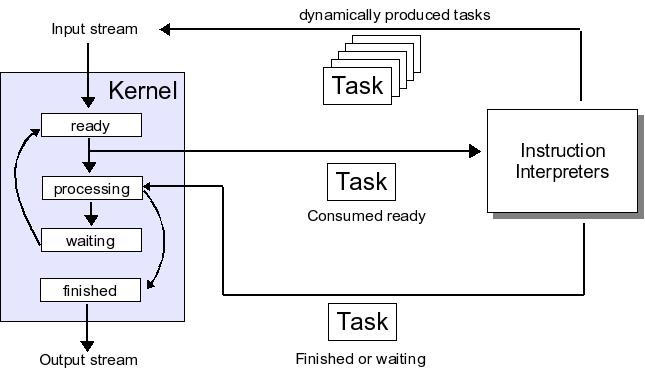

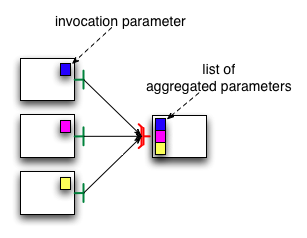

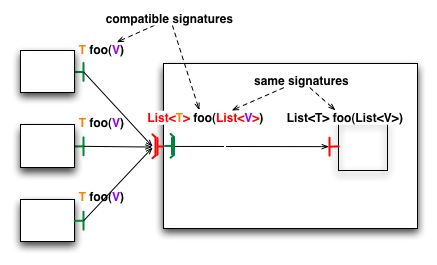

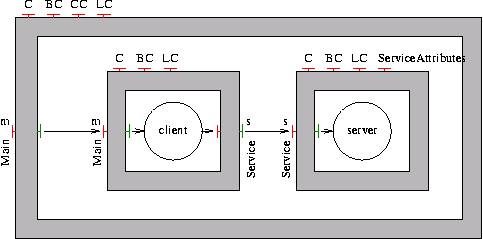

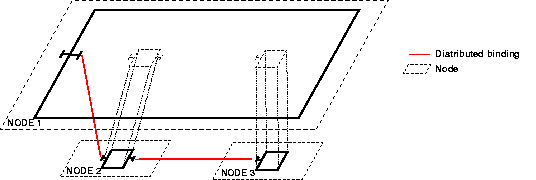

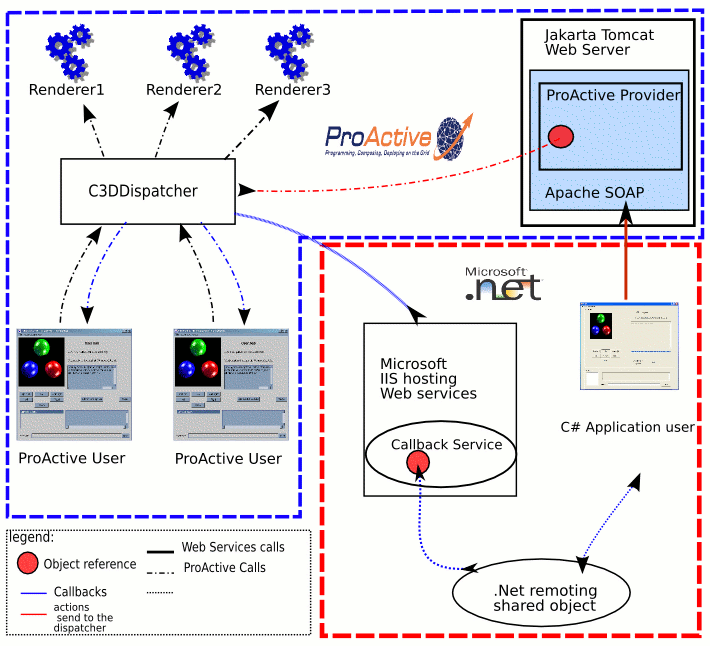

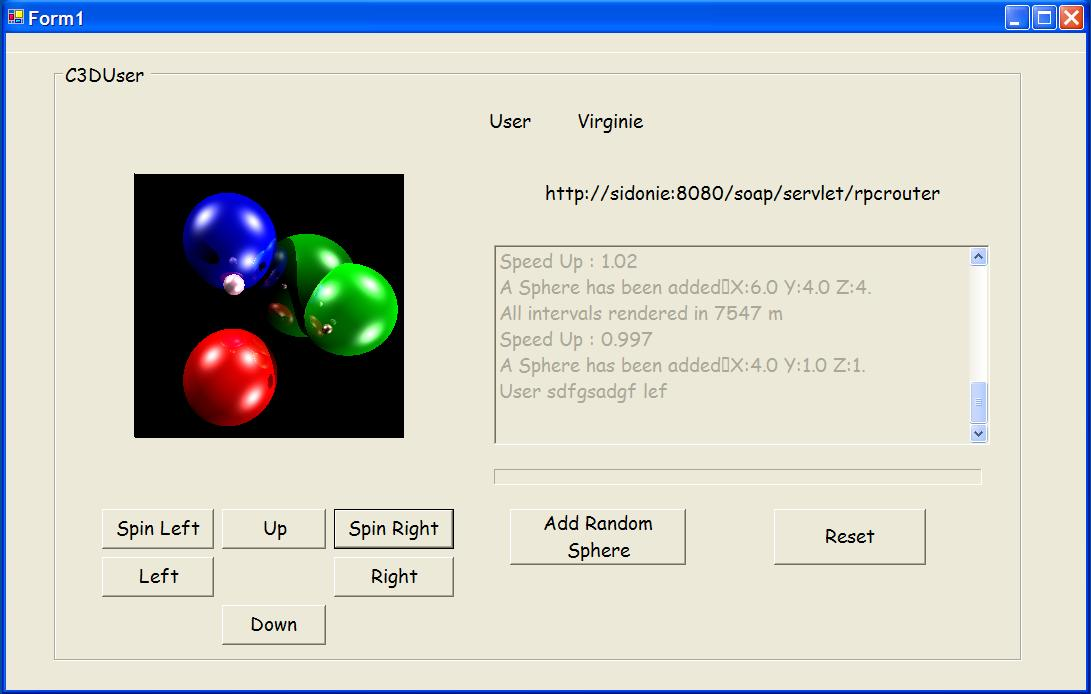

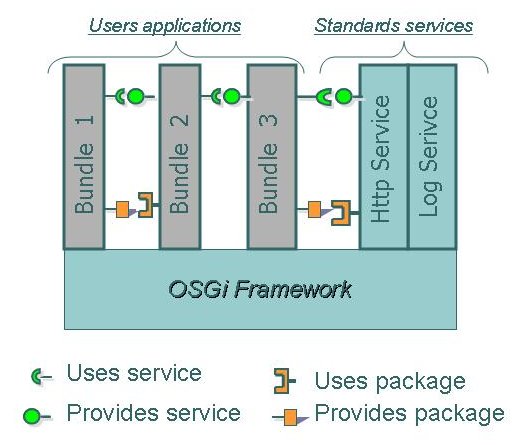

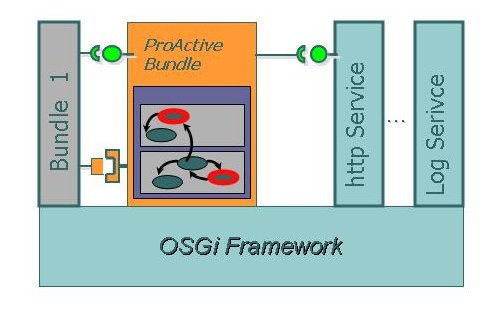

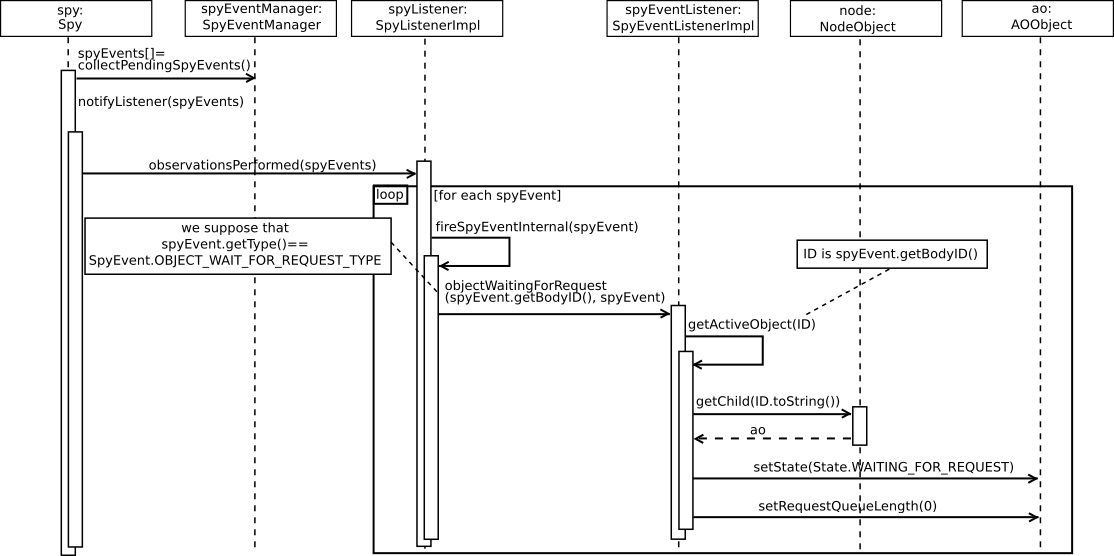

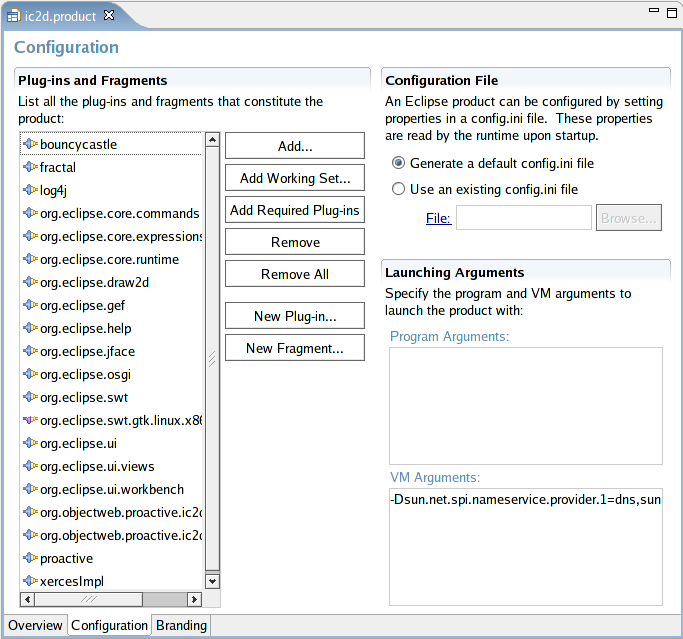

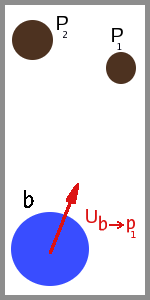

Node[] nodes = virtualNode.getNodes();