Learning for Graphics, Graphics for Learning

Abstract

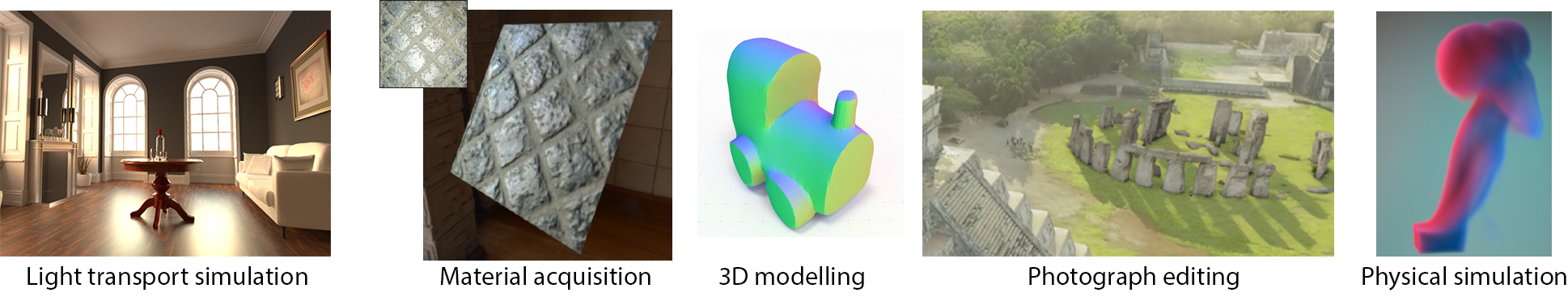

Computer graphics covers a wide range of algorithms for generating synthetic images with computers. While traditional computer graphics algorithms strive to model and simulate the physics that underpins image formation, recent methods leverage the expressive power of machine learning algorithms to capture and reproduce visual effects from large quantities of training data. Yet visual data is sometimes difficult to acquire, which also motivates the use of traditional computer graphics simulation to generate large synthetic datasets for training models that interpret real-world images.

This class will cover the recent progress made in computer graphics thanks to novel machine learning methods, as well as the impact that computer graphics algorithms have on the development of recent machine learning models.

Content

- Background on deep learning, introduction to deep generative modeling

  - CNNs for image classification and segmentation

  - From image segmentation to image generation

  - Generative Adversarial Networks

- Light transport simulation

  - Rendering equation

  - Monte-Carlo light transport

  - Deep denoising

- Geometric representations for learning

  - Image-space representations (depth maps, normal maps)

  - Object-space representations (voxel grids, point clouds, triangular meshes, signed distance functions)

  - Procedural shapes, domain-specific languages for shapes

- Physical simulation

  - Fluid simulation

  - Handling 4D data

  - Learning physics, differentiable solvers and transport

  - Recurrent networks and applications for temporally evolving systems

- Material representations for learning

  - Material models

  - Textures, SVBRDFs

  - Material estimation from images

- Inverse rendering and differentiable rendering

  - Automatic differentiation

  - Differentiable rasterization

  - Differentiable path tracing

  - Inverse rendering by latent space optimization

- Neural rendering & Relighting

  - Image-based rendering

  - Deep novel-view synthesis, representations and methods (lightfield, multi-plane images, deep blending, NeRF)

  - Lighting estimation from images

  - Deep relighting

- Generative models for image editing

  - Controllable generative adversarial networks

  - Style transfer

  - Internal learning for super-resolution and denoising

Prerequisites

Students should be fluent in Python programming. All practical exercises will be done on Google Colab. Knowledge of image processing and computer graphics is a plus (see the M1 course on computational geometry and digital images).

Evaluation

Students will be graded on the practical exercises, as well as on a short project. The short project should extend one of the practical exercises based on suggested references. Both the project and the references will be presented to the class at the end of the semester. The final grade will be computed as 50% for the practical exercises and 50% for the project.

Lecturers

- Guillaume Cordonnier recently joined Inria after doing his postdoc at ETH Zurich. Guillaume works on physically-based simulation of natural phenomena.

- Adrien Bousseau works on image creation and manipulation, with a focus on digital drawing and photography.

- George Drettakis works on rendering algorithms to visualize synthetic or captured scenes.