YMU Face

Matching Results


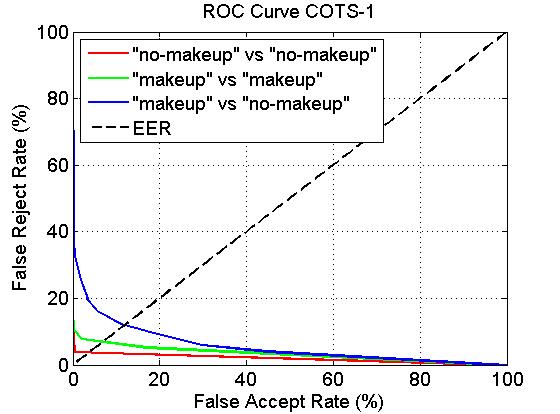

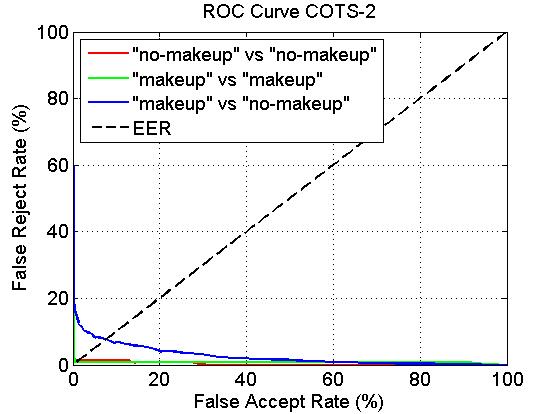

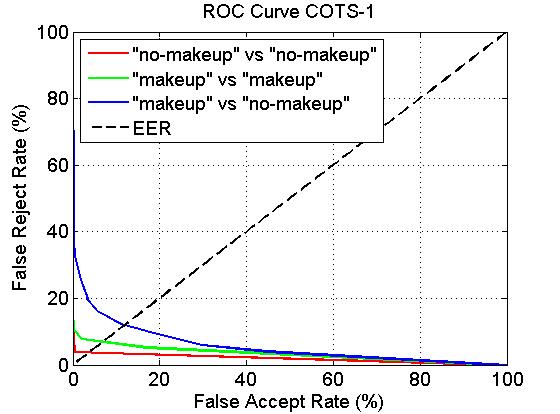

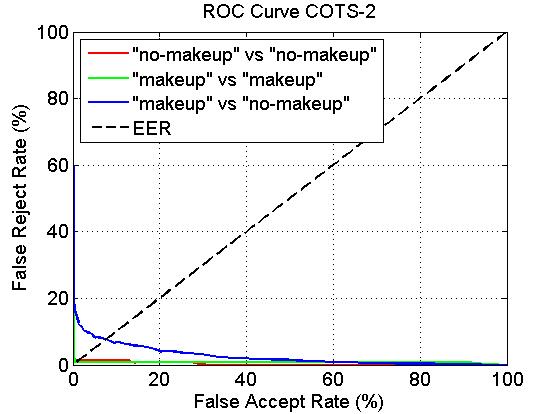


We provide here information on the performance of state-of-the-art face matchers on the YMU dataset.

In YMU there are four images associated with each subject: two images without makeup and two images with makeup. Let N1 and N2 denote the images without makeup, and M1 and M2 denote the images with makeup. Genuine and impostor scores for each face matcher were generated according to the following protocol:

- Matching N1 against N2: neither image has makeup (the before-makeup images); 151 genuine and 45,300 impostor scores.

- Matching M1 against M2: both images have makeup (the after-makeup images); 151 genuine and 45,300 impostor scores.

- Matching N1 against M1, N1 against M2, N2 against M1, and N2 against M2: one image has no makeup while the other has makeup; 604 genuine and 90,600 impostor scores.
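The cross-makeup counts can be sanity-checked by enumerating the four N-vs-M pairings. This is a minimal sketch under two assumptions not stated on this page: there are 151 subjects (inferred from the 151 genuine N1-vs-N2 scores), and impostor comparisons pair an image of one subject with an image of every other subject. The page does not say how the 45,300 N-vs-N and M-vs-M impostor pairs were formed, so the sketch does not try to reproduce that figure.

```python
from itertools import product

# Assumption: 151 subjects, inferred from the 151 genuine N1-vs-N2 scores.
NUM_SUBJECTS = 151

# The four cross-makeup pairings listed in the protocol.
CROSS_PAIRINGS = [("N1", "M1"), ("N1", "M2"), ("N2", "M1"), ("N2", "M2")]


def count_cross_makeup_scores(num_subjects):
    """Count genuine and impostor comparisons for the N-vs-M scenario.

    Assumption: for each pairing (Na, Mb), image Na of subject i is compared
    with image Mb of every subject j; the comparison is genuine when i == j.
    """
    genuine = impostor = 0
    for _pairing, i, j in product(CROSS_PAIRINGS, range(num_subjects), range(num_subjects)):
        if i == j:
            genuine += 1
        else:
            impostor += 1
    return genuine, impostor


genuine, impostor = count_cross_makeup_scores(NUM_SUBJECTS)
print(genuine, impostor)  # 604 90600
```

Under these assumptions the genuine count is 4 × 151 and the impostor count is 4 × 151 × 150, matching the figures in the list.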

The EERs (Equal Error Rates) of the matching scenarios considered in the YMU database are summarized in Table 1. The general observation is that, for all seven matchers, the EER in the N vs M scenario is substantially higher than in the M vs M and N vs N scenarios.
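The page does not describe how the EERs were computed. A common approach, sketched here as an assumption rather than the authors' procedure, sweeps a decision threshold over the observed scores and reports the point where the false acceptance rate (FAR) and false rejection rate (FRR) are closest. The sketch assumes similarity scores (higher means more alike), so a comparison is accepted when its score is at or above the threshold; multiply the result by 100 to compare it with the percentages in Table 1.

```python
from bisect import bisect_left


def equal_error_rate(genuine, impostor):
    """Approximate the EER by sweeping a threshold over the observed scores.

    Assumes similarity scores: a comparison is accepted when score >= threshold.
    Returns the mean of FAR and FRR at the threshold where they are closest.
    """
    gen, imp = sorted(genuine), sorted(impostor)
    best_gap, eer = float("inf"), None
    for t in sorted(set(gen) | set(imp)):
        far = (len(imp) - bisect_left(imp, t)) / len(imp)  # impostor scores >= t
        frr = bisect_left(gen, t) / len(gen)                # genuine scores < t
        gap = abs(far - frr)
        if gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Toy scores for illustration only; these are not YMU scores.
print(equal_error_rate([0.9, 0.8, 0.7, 0.4], [0.1, 0.2, 0.3, 0.75]))  # 0.25
```

Sorting once and counting with `bisect` keeps the sweep at O(n log n), which matters for score sets with tens of thousands of impostor comparisons. Because discrete score sets rarely give FAR = FRR exactly, interpolating between neighbouring thresholds is a common refinement.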

Table 1: Equal Error Rates

(%) corresponding to the seven face matchers on the YMU dataset.

|

No-makeup

vs No-makeup |

Makeup

vs Makeup |

No-makeup

vs Makeup |

|

|

COTS-1 |

3.845 |

7.079 |

12.04 |

|

COTS-2 |

0.6912 |

1.327 |

7.692 |

|

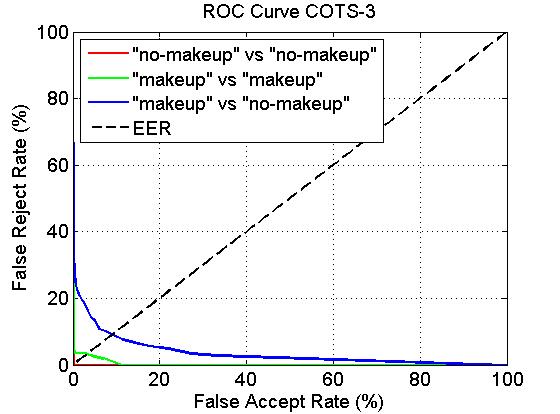

COTS-3 |

0.1141 |

3.291 |

9.178 |

|

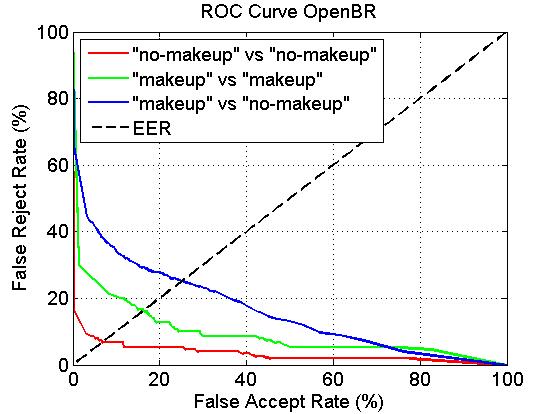

OpenBR |

6.872 |

16.44 |

25.2 |

|

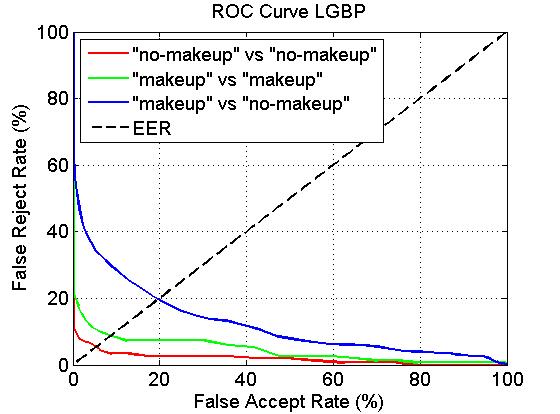

LGBP |

5.345 |

8.773 |

19.71 |

|

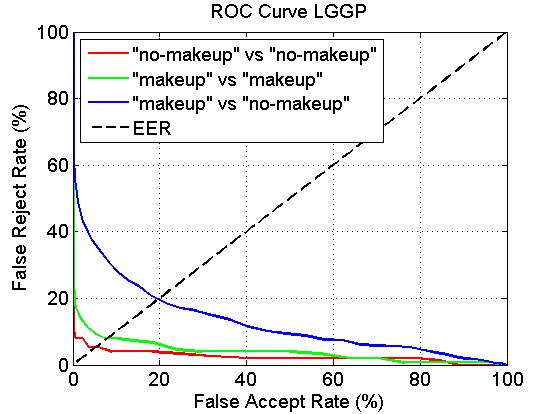

LGGP |

5.359 |

8.007 |

19.7 |

|

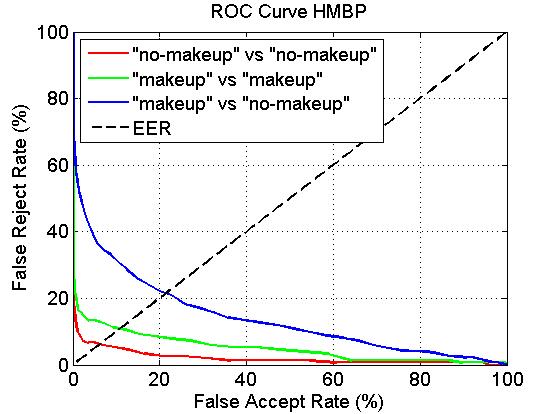

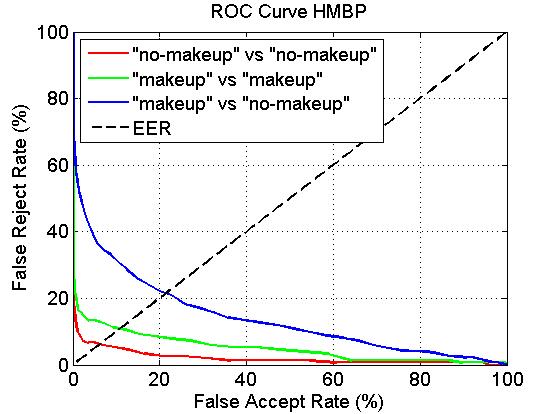

HMBP |

6.25 |

10.87 |

21.54 |
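The Table 1 observation can be checked directly from the reported values. The snippet transcribes the EERs and confirms that the N vs M EER exceeds both same-condition EERs for every matcher. The ratio of the N vs M EER to the N vs N EER is an illustrative summary chosen here, not a metric used on this page.

```python
# EERs (%) transcribed from Table 1: (N vs N, M vs M, N vs M).
TABLE_1 = {
    "COTS-1": (3.845, 7.079, 12.04),
    "COTS-2": (0.6912, 1.327, 7.692),
    "COTS-3": (0.1141, 3.291, 9.178),
    "OpenBR": (6.872, 16.44, 25.2),
    "LGBP": (5.345, 8.773, 19.71),
    "LGGP": (5.359, 8.007, 19.7),
    "HMBP": (6.25, 10.87, 21.54),
}


def degradation_ratios(table):
    """Ratio of the N-vs-M EER to the N-vs-N EER for each matcher."""
    return {name: nm / nn for name, (nn, _mm, nm) in table.items()}


# N vs M is the hardest scenario for every matcher in Table 1.
assert all(nm > max(nn, mm) for nn, mm, nm in TABLE_1.values())

for name, ratio in degradation_ratios(TABLE_1).items():
    print(f"{name}: N vs M EER is {ratio:.1f}x the N vs N EER")
```

The relative view highlights COTS-3, whose very low N vs N EER makes its cross-makeup increase the largest in relative terms even though OpenBR has the highest absolute N vs M EER.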

ROC Curves

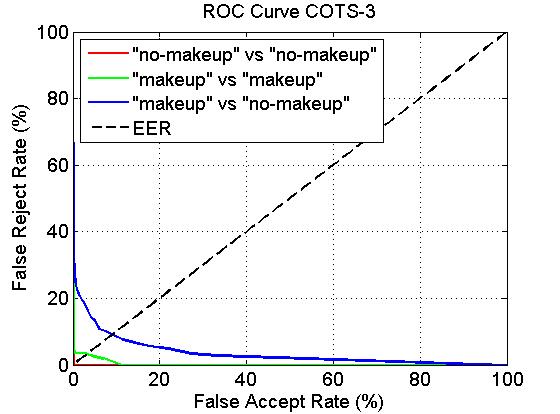

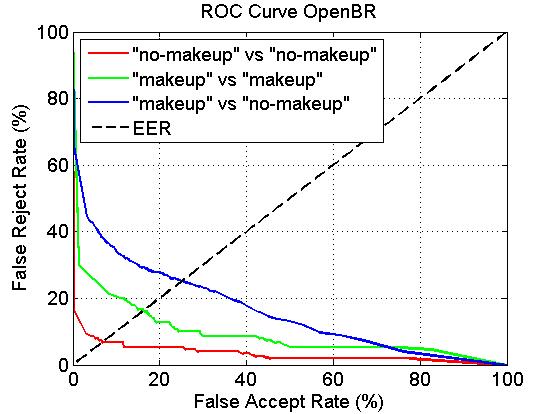

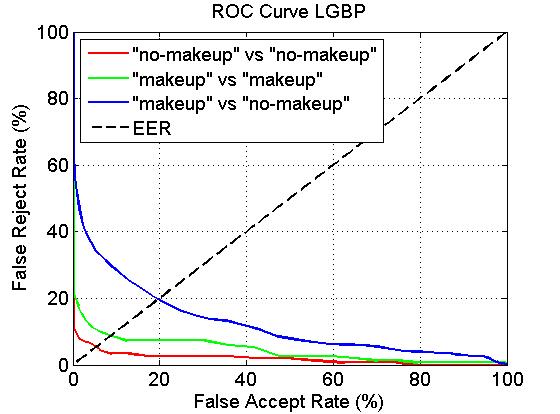

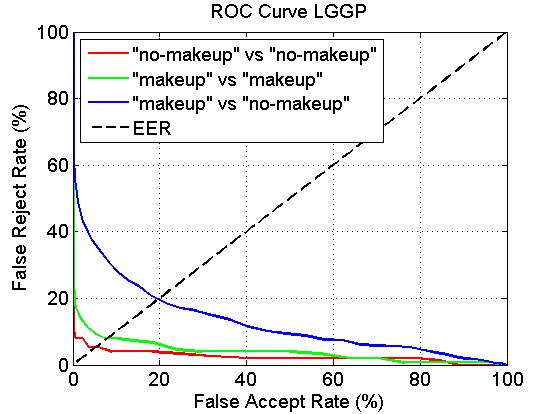

Here we present the corresponding Receiver Operating Characteristic (ROC) curves, in which the false rejection rate (FRR) is plotted as a function of the false acceptance rate (FAR).

ROC curves of all seven algorithms on the YMU database are presented in Figures 1-7.
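Each point of such a curve is the (FAR, FRR) pair obtained at one decision threshold. A minimal sketch of how the points can be computed from genuine and impostor scores, assuming similarity scores (accept when score >= threshold) and using every distinct observed score as a threshold; the actual plotting procedure behind Figures 1-7 is not described on this page.

```python
from bisect import bisect_left


def far_frr_curve(genuine, impostor):
    """Return (FAR, FRR) pairs, one per distinct score used as a threshold.

    Assumes similarity scores: a comparison is accepted when score >= threshold.
    """
    gen, imp = sorted(genuine), sorted(impostor)
    curve = []
    for t in sorted(set(gen) | set(imp)):
        far = (len(imp) - bisect_left(imp, t)) / len(imp)  # impostors accepted
        frr = bisect_left(gen, t) / len(gen)                # genuine rejected
        curve.append((far, frr))
    return curve


# Toy scores for illustration only.
print(far_frr_curve([0.9, 0.8], [0.1, 0.2]))
# [(1.0, 0.0), (0.5, 0.0), (0.0, 0.0), (0.0, 0.5)]
```

To draw the curve, the FAR values go on the x-axis (often on a logarithmic scale) and the FRR values on the y-axis, for example with matplotlib.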

Fig. 1: ROC Curve COTS-1.

Fig. 2: ROC Curve COTS-2.

Fig. 3: ROC Curve COTS-3.

Fig. 4: ROC Curve OpenBR.

Fig. 5: ROC Curve LGBP.

Fig. 6: ROC Curve LGGP.

Fig. 7: ROC Curve HMBP.


VMU Face

Matching Results


We provide here information on the performance of state-of-the-art face matchers on the VMU dataset.

In VMU there are four images associated with each subject: the original image and three images to which makeup was added using the publicly available tool from Taaz: (a) lipstick only; (b) eye makeup only; and (c) full makeup, consisting of lipstick, foundation, blush and eye makeup. Let N denote the image without makeup, L the image with lipstick, E the image with eye makeup, and F the image with full makeup. The following matching scenarios were considered:

- Matching N against L: An image without makeup is compared against the same image with lipstick added.

- Matching N against E: An image without makeup is compared against the same image with eye makeup added.

- Matching N against F: An image without makeup is compared against the same image with full makeup added.

We have conducted experiments on the VMU dataset with eight commercial and academic algorithms: COTS-1, COTS-2, COTS-3, LBP [5], Gabor [6], HMBP [4], LGGP [3] and LGBP [2]. COTS-1, COTS-2 and COTS-3 are the same commercial algorithms as those employed in the YMU experiments above.

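The EERs (Equal Error Rates) of the matching scenarios considered in the VMU database are summarized in Table 2. The general observation is that, for most matchers, EERs for the N vs F and N vs E cases are higher than for the N vs L case. The exceptions are COTS-1, for which N vs L yields the highest EER, and Gabor, for which N vs E is slightly lower than N vs L; COTS-2 attains a 0% EER in all three scenarios.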

Table 2: Equal Error Rates (%) corresponding to the eight face matchers on the VMU dataset.

| Matcher | No-makeup vs Lip-makeup (N vs L) | No-makeup vs Eye-makeup (N vs E) | No-makeup vs Full-makeup (N vs F) |
|---|---|---|---|
| COTS-1 | 1.97 | 0.9849 | 1.959 |
| COTS-2 | 0 | 0 | 0 |
| COTS-3 | 0.4805 | 1.984 | 2.248 |
| LBP | 3.43 | 4.32 | 4.79 |
| Gabor | 8.56 | 8.15 | 11.38 |
| HMBP | 5.0 | 8.46 | 8.05 |
| LGGP | 4.805 | 8.688 | 8.164 |
| LGBP | 2.9 | 5.44 | 5.42 |
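Which matchers follow the general VMU trend can be read off Table 2 mechanically. The snippet transcribes the EERs and lists the matchers for which N vs L is not strictly the easiest scenario; the helper name `lipstick_is_easiest` is hypothetical and chosen here for illustration.

```python
# EERs (%) transcribed from Table 2: (N vs L, N vs E, N vs F).
TABLE_2 = {
    "COTS-1": (1.97, 0.9849, 1.959),
    "COTS-2": (0.0, 0.0, 0.0),
    "COTS-3": (0.4805, 1.984, 2.248),
    "LBP": (3.43, 4.32, 4.79),
    "Gabor": (8.56, 8.15, 11.38),
    "HMBP": (5.0, 8.46, 8.05),
    "LGGP": (4.805, 8.688, 8.164),
    "LGBP": (2.9, 5.44, 5.42),
}


def lipstick_is_easiest(n_l, n_e, n_f):
    """True when N vs L has a strictly lower EER than both N vs E and N vs F."""
    return n_l < n_e and n_l < n_f


exceptions = [name for name, eers in TABLE_2.items() if not lipstick_is_easiest(*eers)]
print(exceptions)  # ['COTS-1', 'COTS-2', 'Gabor']
```

COTS-2 appears among the exceptions only because its three EERs tie at 0%, not because lipstick is harder for it.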

References

- [1] J. Klontz, B. Klare, S. Klum, E. Taborsky, M. Burge, and A. K. Jain, "Open source biometric recognition," in Proc. of BTAS, 2013.

- [2] W. Zhang, S. Shan, W. Gao, X. Chen, and H. Zhang, "Local Gabor binary pattern histogram sequence (LGBPHS): a novel non-statistical model for face representation and recognition," in Proc. of ICCV, 2005.

- [3] C. Chen and A. Ross, "Local Gradient Gabor Pattern (LGGP) with applications in face recognition, cross-spectral matching and soft biometrics," in Proc. of SPIE Biometric and Surveillance Technology for Human and Activity Identification X, 2013.

- [4] M. Yang, L. Zhang, L. Zhang, and D. Zhang, "Monogenic Binary Pattern (MBP): a novel feature extraction and representation model for face recognition," in Proc. of ICPR, 2010.

- [5] T. Ahonen, A. Hadid, and M. Pietikainen, "Face description with local binary patterns: application to face recognition," IEEE Trans. on PAMI, 28:2037–2041, 2006.

- [6] C. Liu and H. Wechsler, "Gabor feature based classification using the enhanced Fisher linear discriminant model for face recognition," IEEE Trans. on Image Processing, 11(4):467–476, 2002.

When using these results in your research, please cite the following papers:

- A. Dantcheva, C. Chen, A. Ross, "Can Facial Cosmetics Affect the Matching Accuracy of Face Recognition Systems?," Proc. of 5th IEEE International Conference on Biometrics: Theory, Applications and Systems (BTAS), (Washington DC, USA), September 2012.

- C. Chen, A. Dantcheva, A. Ross, "Automatic Facial Makeup Detection with Application in Face Recognition," Proc. of 6th IAPR International Conference on Biometrics (ICB), (Madrid, Spain), June 2013.