3D-Audio Matting, Post-Editing and Re-rendering from Field Recordings

E. Gallo (1,2), N. Tsingos (1) and G. Lemaitre (1)

(1) REVES-INRIA, (2) CSTB

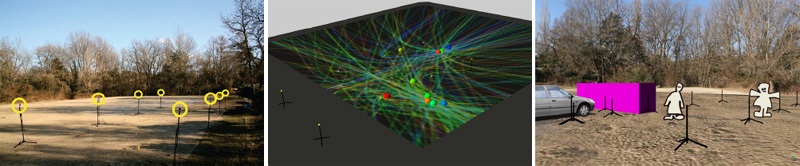

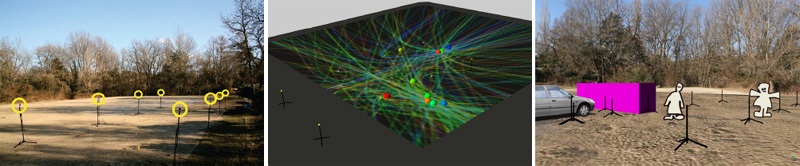

Left: We use multiple arbitrarily positioned microphones (circled in yellow) to simultaneously record real-life auditory environments. Middle: We analyze the recordings to extract the positions of various sound components through time. Right: This high-level representation allows for post-editing and re-rendering the acquired soundscape within generic 3D audio rendering architectures.

We present a novel approach to real-time spatial rendering of realistic auditory environments and sound sources recorded live, in the field. Using a set of standard microphones distributed throughout a real-world environment, we record the sound field simultaneously from several locations. After spatial calibration, we segment from this set of recordings a number of auditory components, together with their locations. We compare existing time-delay of arrival estimation techniques between pairs of widely spaced microphones and introduce a novel, efficient hierarchical localization algorithm. Using the resulting high-level representation, we can edit and re-render the acquired auditory scene over a variety of listening setups. In particular, we can move or alter the different sound sources and arbitrarily choose the listening position. We can also composite elements of different scenes together in a spatially consistent way. Our approach provides efficient rendering of complex soundscapes that would be challenging to model using discrete point sources and traditional virtual acoustics techniques. We demonstrate a wide range of possible applications for games, virtual and augmented reality, and audio-visual post-production.
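The abstract mentions time-delay of arrival (TDOA) estimation between widely spaced microphone pairs, followed by localization. As a hedged illustration only, the sketch below uses GCC-PHAT, one widely used TDOA estimator (not necessarily the one the authors retained), and then a generic 2D coarse-to-fine grid search over TDOA residuals. It is not the authors' hierarchical algorithm; the function names, the 343 m/s speed of sound, the grid size and the box-halving schedule are all assumptions.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at about 20 degrees C


def gcc_phat_tdoa(x, y, fs, max_tau=None):
    """Delay of y relative to x, in seconds, estimated with GCC-PHAT.

    A positive value means the sound reaches microphone y after microphone x.
    """
    n = len(x) + len(y)  # zero-padding makes the circular correlation linear
    X = np.fft.rfft(x, n=n)
    Y = np.fft.rfft(y, n=n)
    cross = Y * np.conj(X)
    cross /= np.abs(cross) + 1e-12  # PHAT weighting: keep only the phase
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_tau is not None:  # e.g. microphone spacing / speed of sound
        max_shift = max(1, min(int(fs * max_tau), max_shift))
    # Reorder so that index i corresponds to lag i - max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[: max_shift + 1]))
    return (int(np.argmax(np.abs(cc))) - max_shift) / fs


def predicted_tdoa(p, mic_i, mic_j):
    """Model delay of mic j relative to mic i for a point source at p."""
    return (np.linalg.norm(p - mic_j) - np.linalg.norm(p - mic_i)) / SPEED_OF_SOUND


def localize_coarse_to_fine(mics, pairs, tdoas, lo, hi, grid=11, levels=6):
    """Generic 2D coarse-to-fine search minimising squared TDOA residuals.

    Each level evaluates a grid x grid lattice, then halves the search box
    around the best point. Illustrative stand-in, not the authors' method.
    """
    mics = np.asarray(mics, dtype=float)
    lo, hi = np.asarray(lo, dtype=float), np.asarray(hi, dtype=float)
    best = (lo + hi) / 2
    for _ in range(levels):
        best_err = np.inf
        for x in np.linspace(lo[0], hi[0], grid):
            for y in np.linspace(lo[1], hi[1], grid):
                p = np.array([x, y])
                err = sum((predicted_tdoa(p, mics[i], mics[j]) - t) ** 2
                          for (i, j), t in zip(pairs, tdoas))
                if err < best_err:
                    best_err, best = err, p
        quarter = (hi - lo) / 4  # new box is half as wide, centred on best
        lo, hi = best - quarter, best + quarter
    return best
```

Each refinement level costs only `grid * grid` evaluations, so the search stays cheap compared with a single fine grid over the whole area; the trade-off is that a badly conditioned geometry can steer the coarse levels toward the wrong region.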

Download a video describing our technique and early results (DivX format).


Video comparing the original monophonic recordings and our approach (DivX format; full FIR filtering using head-related transfer function (HRTF) data from the LISTEN HRTF database)
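The binaural renderings use full FIR filtering with HRTF data from the LISTEN database. A minimal sketch of that idea, assuming the head-related impulse responses (HRIRs) are already loaded into a dictionary keyed by azimuth (a hypothetical layout; the real database is indexed by azimuth and elevation and stores much longer filters): pick the measured direction nearest to the source and convolve the mono signal with the left and right HRIRs. `fir_filter`, `nearest_hrir` and `render_binaural` are illustrative names.

```python
def fir_filter(signal, taps):
    """Full linear convolution of a signal with FIR taps (direct form)."""
    out = [0.0] * (len(signal) + len(taps) - 1)
    for n, s in enumerate(signal):
        for k, h in enumerate(taps):
            out[n + k] += s * h
    return out


def nearest_hrir(hrir_set, azimuth_deg):
    """Return the (left, right) HRIR pair measured closest to azimuth_deg.

    hrir_set is a hypothetical dict {azimuth_deg: (left_taps, right_taps)};
    elevation is ignored here for brevity.
    """
    def angular_gap(measured):
        d = abs(measured - azimuth_deg) % 360.0
        return min(d, 360.0 - d)  # wrap around 0/360 degrees

    return hrir_set[min(hrir_set, key=angular_gap)]


def render_binaural(mono, hrir_set, azimuth_deg):
    """Spatialize a mono signal by filtering it with the nearest HRIR pair."""
    left_taps, right_taps = nearest_hrir(hrir_set, azimuth_deg)
    return fir_filter(mono, left_taps), fir_filter(mono, right_taps)
```

Direct-form convolution is shown for clarity; a real-time renderer would normally use FFT-based (partitioned) convolution and interpolate between measured directions rather than snapping to the nearest one.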

Additional example results

Explicit background/foreground separation and resulting re-renderings

Example 1: Outdoor scene with two moving speakers

Example 2: Seashore scene

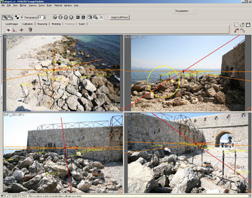

Image-based calibration using ImageModeler © RealViz.

Comparison of our warping algorithm (delay/distance compensation based on estimated source positions) with direct blending between recordings

Example 1: Synthetic case with telephone + chopper mixture

Example 2: Indoor recording with two speakers
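The warping compared above compensates delay and distance using the estimated source positions. A minimal free-field sketch, assuming a single point source, 1/r spreading, a speed of sound of 343 m/s and integer-sample delays (simplifications of ours, not necessarily the authors'): a recording made at `mic` is shifted by the extra propagation time to the virtual `listener` and rescaled by the distance ratio, whereas direct blending simply mixes the raw recordings. Both function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def warp_recording(samples, fs, source, mic, listener):
    """Approximate what `listener` would hear from `source`, starting from a
    recording made at `mic` (free field, point source, 1/r spreading)."""
    d_mic = math.dist(source, mic)
    d_lis = math.dist(source, listener)
    shift = round((d_lis - d_mic) / SPEED_OF_SOUND * fs)  # extra delay, samples
    gain = d_mic / d_lis  # distance attenuation relative to the recording
    scaled = [gain * s for s in samples]
    n = len(scaled)
    if shift >= 0:  # listener is farther from the source: delay the signal
        shift = min(shift, n)
        return [0.0] * shift + scaled[: n - shift]
    shift = min(-shift, n)  # listener is closer: advance the signal
    return scaled[shift:] + [0.0] * shift


def direct_blend(recordings, weights):
    """Baseline: weighted sum of raw recordings, no delay/gain compensation."""
    n = min(len(r) for r in recordings)
    return [sum(w * r[i] for r, w in zip(recordings, weights)) for i in range(n)]
```

Blending raw recordings from widely spaced microphones mixes copies of a source that arrive at different times, which tends to produce comb filtering and smeared localization; re-timing each recording toward the listener position first is what avoids this.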

More recent and improved results in an indoor environment

Compositing of two auditory scenes (car + moving speakers).

DivX movie file (12 subbands, hardware HRTF rendering using DirectSound and a Sound Blaster Audigy)
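The movie above renders the scene in 12 frequency subbands. The page does not say how the bands are defined; the sketch below assumes contiguous, logarithmically spaced groups of FFT bins (one common choice) and shows how a per-band quantity such as energy could then be computed. `log_band_edges` and `band_energies` are hypothetical helpers.

```python
def log_band_edges(n_bins, n_bands=12, first_bin=1):
    """Split FFT bins [first_bin, n_bins) into n_bands contiguous,
    roughly log-spaced bands; returns (start, stop) pairs, stop exclusive.
    first_bin=1 skips the DC bin (bin 0)."""
    if first_bin < 1 or n_bins - first_bin < n_bands:
        raise ValueError("need first_bin >= 1 and at least one bin per band")
    ratio = n_bins / first_bin
    edges = [first_bin]
    for b in range(1, n_bands):
        edge = round(first_bin * ratio ** (b / n_bands))
        edges.append(max(edge, edges[-1] + 1))  # keep every band non-empty
    edges.append(n_bins)
    return list(zip(edges[:-1], edges[1:]))


def band_energies(spectrum, bands):
    """Sum of squared magnitudes of the spectrum bins inside each band."""
    return [sum(abs(c) ** 2 for c in spectrum[a:b]) for a, b in bands]
```

Log spacing gives narrow bands at low frequencies and wide bands at high frequencies, roughly following auditory frequency resolution; with a 1024-point FFT (513 bins), `log_band_edges(513)` yields 12 bands covering bins 1 to 512.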

Related publications

This work has been submitted for publication.

Acknowledgments

This research was made possible by a grant from the PACA region (Provence-Alpes-Côte d'Azur) and the RNTL project OPERA.