Participants: Francois Bremond, Mohamed Becha Kaaniche, Monique Thonnat.


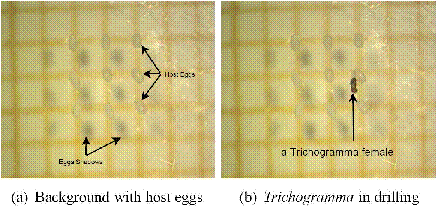

We are interested in extending previous work on object shape recognition and in applying activity monitoring techniques to the field of biology. Trichogramma species are recognized as important biological control agents that can replace pesticides in field crops, forests and orchards. As parasites of caterpillar pests, they protect several crops such as corn, rice and sugarcane. Current studies focus on analyzing variations in handling time and on understanding the foraging mechanisms of these parasites, in order to screen better agents for biological control and to improve their efficiency in controlling their hosts once they are released in the field. This work requires a good understanding of the parasites' behavior. Currently, the video sequences of laboratory experiments (see Fig. 8 for a sample) are analyzed manually by experts. To cope with the large volume of video to be analyzed, we aim to automate this task using scenario recognition techniques.

Traditionally, video understanding has focused on recognizing human activities. Moreover, behaviors are usually recognized by studying the trajectories and positions of the observed objects, using a priori knowledge about the scene. This is sufficient for scenes with a large field of view and coarse human activities. However, identifying the behavior of a mobile object such as a Trichogramma often requires computing visual features that characterize its shape. In this research, our aim is to adapt an automatic video interpretation system to recognize Trichogramma behaviors. The system is composed of a vision module and a scenario recognition module. It takes two types of input: (1) a video stream acquired by one or more cameras and (2) a priori knowledge, namely scenario models predefined by experts and the 3D geometric and semantic information of the observed environment. The output of the system is the set of scenarios recognized at each instant.

In broad terms, the system works in three steps. First, a low-level image processing algorithm subtracts the background frame from the current frame and detects moving regions. Then, a tracking algorithm tracks the detected regions and computes their trajectories. Finally, the scenario recognition module identifies the tracked moving regions as mobile objects and interprets the scenarios related to their behaviors.
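The first two steps can be illustrated with a minimal, self-contained sketch. It is not the VSIP implementation: it assumes grayscale frames given as lists of rows, a fixed reference background frame, a hypothetical difference `threshold`, 4-connected moving regions, and a greedy nearest-centroid association controlled by a hypothetical `max_dist`. All function names are illustrative.

```python
from collections import deque


def subtract_background(frame, background, threshold):
    """Binary mask: 1 where the absolute difference to the background exceeds threshold."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(row_f, row_b)]
        for row_f, row_b in zip(frame, background)
    ]


def moving_regions(mask, min_size=1):
    """Group foreground pixels into 4-connected regions (breadth-first search).

    Returns a list of regions, each a list of (row, col) pixel coordinates.
    Regions smaller than min_size pixels are discarded as noise.
    """
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            queue, pixels = deque([(y, x)]), []
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                pixels.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_size:
                regions.append(pixels)
    return regions


def centroid(pixels):
    """Mean (row, col) position of a region."""
    ys, xs = zip(*pixels)
    return (sum(ys) / len(ys), sum(xs) / len(xs))


def update_tracks(tracks, regions, max_dist):
    """Greedy nearest-centroid association of new regions to existing tracks.

    tracks maps a track id to its trajectory (a list of centroids); a region
    farther than max_dist from every unused track starts a new track.
    The dict is updated in place and also returned.
    """
    used = set()
    next_id = max(tracks, default=-1) + 1
    for pixels in regions:
        c = centroid(pixels)
        best, best_d = None, max_dist
        for tid, trajectory in tracks.items():
            if tid in used:
                continue
            ly, lx = trajectory[-1]
            d = ((c[0] - ly) ** 2 + (c[1] - lx) ** 2) ** 0.5
            if d <= best_d:
                best, best_d = tid, d
        if best is None:
            best, next_id = next_id, next_id + 1
            tracks[best] = []
        tracks[best].append(c)
        used.add(best)
    return tracks
```

For example, a 2x2 bright blob on a black 5x5 background yields one region with centroid (1.5, 1.5), which `update_tracks({}, regions, 2.0)` turns into track 0; if the blob moves one column to the right in the next frame, the same track is extended. A real system would also update the background model over time and handle regions that split or merge; both are omitted here for brevity.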

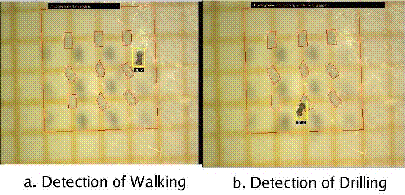

Our goal is to build the history of six Trichogramma activities. First, we detect every entry into ("Enter" event) and exit from ("Exit" event) an experimental zone surrounding the host eggs; if the Trichogramma female leaves this zone and stays out for more than sixty seconds, the experiment is stopped. Then, we recognize the "Walk" event, which corresponds to the Trichogramma female walking between host eggs within the experimental zone. Finally, we focus on the duration and time bounds of the three phases of the egg laying behavior: (1) antennal drumming, (2) ovipositor drilling and (3) oviposition.
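The Enter/Exit logic, the sixty-second stopping rule and the bookkeeping of phase durations can be sketched as follows. This is a minimal sketch under stated assumptions, not the scenario models actually used: the experimental zone is approximated by a hypothetical axis-aligned rectangle, positions are `(t, x, y)` observations with `t` in seconds, and per-frame phase labels (the hypothetical names "Drumming", "Drilling" and "Oviposition") are assumed to be produced by an upstream recognizer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Hypothetical rectangular stand-in for the experimental zone around the host eggs."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def enter_exit_events(observations, zone, max_outside=60.0):
    """Detect "Enter"/"Exit" events from (t, x, y) observations (t in seconds).

    Returns (events, stop_time): events is a list of (t, "Enter" | "Exit");
    stop_time is the first observation time at which the female has stayed
    outside the zone for more than max_outside seconds since her last exit,
    or None if that never happens.
    """
    events = []
    inside = None      # unknown before the first observation
    exit_time = None   # time of the last Exit while she is still outside
    for t, x, y in observations:
        now_inside = zone.contains(x, y)
        if inside is not None and now_inside != inside:
            events.append((t, "Enter" if now_inside else "Exit"))
            exit_time = None if now_inside else t
        if exit_time is not None and t - exit_time > max_outside:
            return events, t  # the experiment is stopped here
        inside = now_inside
    return events, None


def phase_intervals(frames):
    """Merge consecutive (t, label) frames sharing a label into
    (label, start, end, duration) intervals; a None label means "no phase".
    Duration is measured between the first and last frame of each run."""
    intervals = []
    current, start, last = None, None, None
    for t, label in frames:
        if label != current:
            if current is not None:
                intervals.append((current, start, last, last - start))
            current, start = label, t
        last = t
    if current is not None:
        intervals.append((current, start, last, last - start))
    return intervals
```

The strict `>` comparison mirrors the "more than sixty seconds" wording; the timer only starts on an observed Exit, so a female first seen outside the zone does not trigger the stop rule in this sketch.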

To reach this goal, we have used VSIP (Video Surveillance Intelligent Platform), described in [1], which includes a scenario recognition module based on [15]. We have also integrated a module to handle Trichogramma-specific behaviors. Our approach computes visual features characterizing the shape of the mobile object in order to distinguish between the three phases of the egg laying behavior and to allow the recognition of the global activities. After extending the vision module of this system, we designed a module that extracts these visual features and binds them to the scenario recognition module. As a proof of feasibility, we have obtained results that are acceptable given the complexity of the egg laying behavior. Figure 9 shows the output of the system at two instants: when it recognizes a Walk scenario (a) and when it recognizes a Drilling scenario (b).
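The section does not list which shape features are computed. As one plausible example only, the sketch below derives the area, centroid, elongation and orientation of a segmented region from its second-order central moments; this feature set is an assumption, not the authors' own, and the function name `shape_features` is hypothetical.

```python
import math


def shape_features(pixels):
    """Shape descriptors of a region given as [(x, y), ...] pixel coordinates.

    Uses second-order central moments: the eigenvalues of the 2x2 covariance
    matrix give the spread along the major and minor axes.
    """
    n = len(pixels)
    cx = sum(x for x, _ in pixels) / n
    cy = sum(y for _, y in pixels) / n
    mu20 = sum((x - cx) ** 2 for x, _ in pixels) / n
    mu02 = sum((y - cy) ** 2 for _, y in pixels) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pixels) / n
    # Closed-form eigenvalues of [[mu20, mu11], [mu11, mu02]].
    half_trace = (mu20 + mu02) / 2
    root = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    lam_major, lam_minor = half_trace + root, half_trace - root
    # Ratio of axis lengths; infinite for a degenerate (line-like) region.
    elongation = math.inf if lam_minor <= 0 else math.sqrt(lam_major / lam_minor)
    # Major-axis angle in radians, measured from the x axis.
    orientation = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return {"area": n, "centroid": (cx, cy),
            "elongation": elongation, "orientation": orientation}
```

Moment-based descriptors are cheap to compute per frame and change with the insect's body posture even when its position barely moves, which is the kind of information the text argues trajectories alone do not provide. How such features would be thresholded or combined into phase scenarios is left open here.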

This work can be improved in several directions. First, we plan to improve the segmentation algorithm in order to better determine the three phases of the egg laying behavior. We are currently applying program supervision techniques to dynamically tune the parameters of the segmentation algorithm. A second task consists in refining the definition of the walk activity through a better understanding of the experts' knowledge. Third, in order to develop an operational system, we plan to automate the description of the scene context (i.e. the host eggs). Currently, the context has to be defined for each new experiment because of variations in the background. We also plan to develop a learning module that acquires the experimental context automatically, so that the context of the scene can be defined without expert help. Finally, in the long term, we plan to add a new front-end tool that will collect the output of the scenario recognition module for all experiments and mine these results to deduce the frequent activities and their probabilistic law: it is claimed that the behavior of the Trichogramma while selecting a host egg can be described by dynamic game theory.
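The planned mining step is not specified further. As a hedged illustration only, the sketch below takes one sequence of recognized activities per experiment and computes how often each activity occurs, together with empirical first-order transition probabilities between consecutive activities. A first-order Markov estimate is a swapped-in, much simpler model than the dynamic game theory mentioned above; the function name and the activity names used in testing are hypothetical.

```python
from collections import Counter, defaultdict


def activity_statistics(sequences):
    """Frequencies and first-order transition probabilities of activities.

    sequences: one list of activity names per experiment, in temporal order.
    Returns (freq, transitions) where freq[a] counts occurrences of a and
    transitions[a][b] is the empirical probability that b directly follows a.
    """
    freq = Counter()
    pair_counts = defaultdict(Counter)
    for seq in sequences:
        freq.update(seq)
        for a, b in zip(seq, seq[1:]):
            pair_counts[a][b] += 1
    transitions = {
        a: {b: c / sum(nexts.values()) for b, c in nexts.items()}
        for a, nexts in pair_counts.items()
    }
    return freq, transitions
```

Activities that only ever appear last in a sequence (such as a final "Exit") have no outgoing transitions and therefore no entry in `transitions`.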

The work accomplished so far has been published at the International Cognitive Vision Workshop (ICVW'2006).