Participants: Francois Bremond, Bernard Boulay, Monique Thonnat.

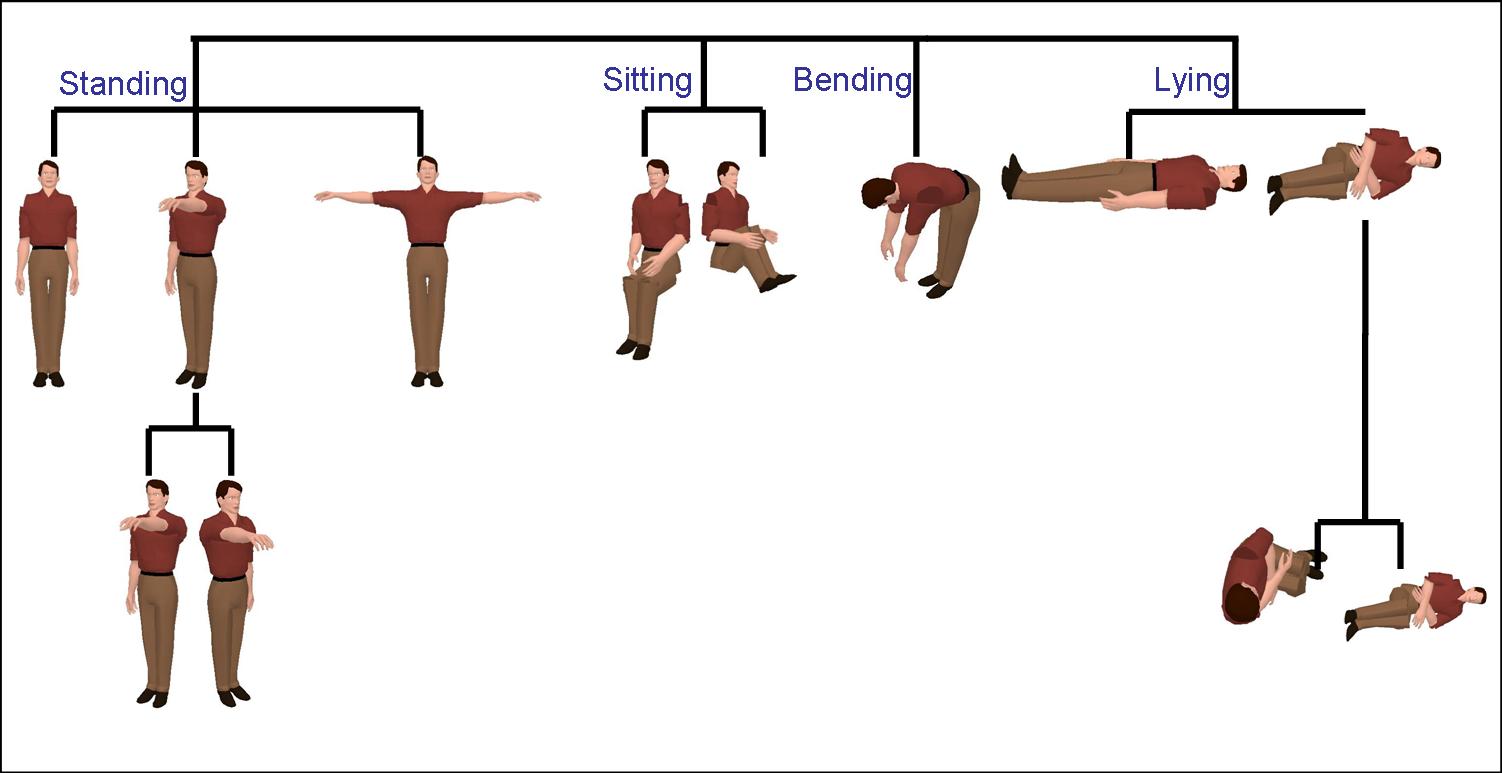

We have proposed a real-time human posture recognition algorithm to be part of the automatic interpretation of monocular image sequences. This algorithm takes as input the silhouette (a pixel bitmap coding the detected person) provided by vision algorithms. The proposed approach is composed of four main tasks:

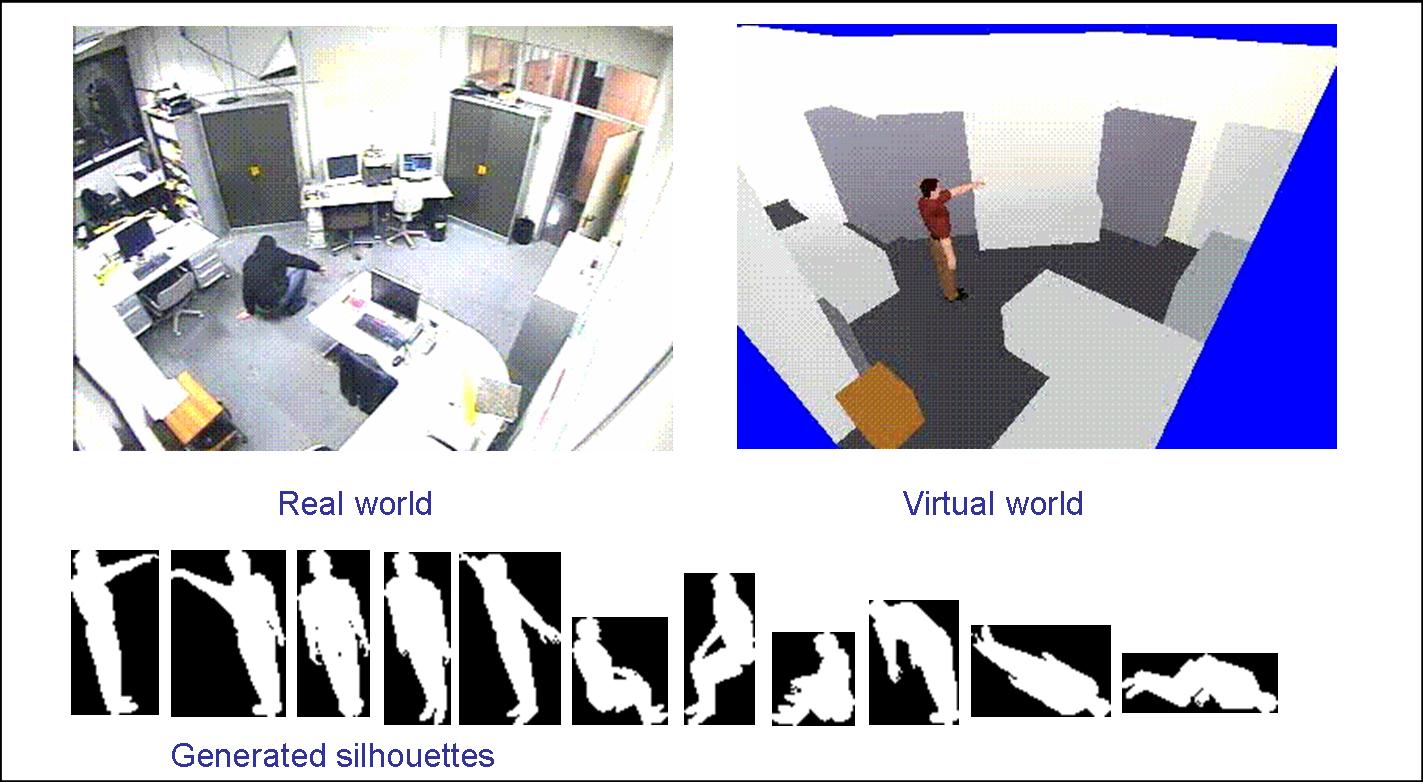

1. the silhouettes of the 3D posture avatars are generated for all possible orientations according to the estimated 3D position of the detected person and a virtual camera;

2. the generated silhouettes and the 2D silhouette of the detected person are compared using 2D techniques;

3. the posture of the detected person is selected according to the result of task 2;

4. the posture filter task uses the postures computed over several frames to repair posture recognition errors from task 3.
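The posture filter task can be illustrated with a minimal sketch. The text does not specify the filtering rule, so the causal majority vote below, the function name `filter_postures` and the `window` parameter are assumptions made for illustration only.

```python
from collections import Counter


def filter_postures(labels, window=5):
    """Smooth per-frame posture labels with a causal majority vote.

    Hypothetical filter: each frame takes the most frequent label among
    the last `window` frames (current frame included). Only past frames
    are used, so it can run on a live sequence.
    """
    smoothed = []
    for i, current in enumerate(labels):
        lo = max(0, i - window + 1)
        counts = Counter(labels[lo:i + 1])
        best = max(counts.values())
        if counts[current] == best:
            # The current label is (one of) the most frequent: keep it.
            smoothed.append(current)
        else:
            # Otherwise replace it with the most frequent recent label.
            smoothed.append(counts.most_common(1)[0][0])
    return smoothed


# An isolated "sitting" frame inside a standing sequence is repaired.
frames = ["standing"] * 4 + ["sitting"] + ["standing"] * 4
print(filter_postures(frames))
```

With this rule a real posture change is accepted only after it has persisted for a majority of the window, which trades a short delay for robustness to single-frame errors.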


The silhouettes of the 3D posture avatars are generated for all possible postures of interest. The generated silhouettes are obtained by projecting the corresponding 3D human model onto the image plane, using the estimated 3D position of the person and a virtual camera which has the same characteristics (position, orientation and field of view) as the real camera.
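The projection step can be sketched with a simple pinhole camera. This is an illustration under stated assumptions, not the camera model actually used: the function `project_points`, its parameters, the convention that the camera looks along its +z axis, and the use of the horizontal field of view to derive the focal length are all hypothetical choices. Rasterising the projected model into a filled silhouette is not shown.

```python
import math


def project_points(points_world, cam_pos, cam_rot, fov_deg, width, height):
    """Project 3D world points to pixel coordinates (pinhole model).

    Assumptions: `cam_rot` is a 3x3 world-to-camera rotation given as a
    list of rows, the camera looks along +z, and `fov_deg` is the
    horizontal field of view of the virtual camera.
    """
    # Focal length in pixels derived from the horizontal field of view.
    focal = (width / 2.0) / math.tan(math.radians(fov_deg) / 2.0)
    cx, cy = width / 2.0, height / 2.0
    pixels = []
    for p in points_world:
        # Express the point relative to the camera position...
        d = [p[k] - cam_pos[k] for k in range(3)]
        # ...then rotate it into the camera frame.
        x, y, z = (sum(cam_rot[r][k] * d[k] for k in range(3)) for r in range(3))
        if z <= 0:
            continue  # the point is behind the camera and is not visible
        pixels.append((cx + focal * x / z, cy + focal * y / z))
    return pixels


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
# A point on the optical axis projects to the image centre.
print(project_points([(0.0, 0.0, 5.0)], (0, 0, 0), identity, 90.0, 640, 480))
```

Giving the virtual camera the same position, orientation and field of view as the real one is what makes the generated silhouettes directly comparable with the detected silhouette.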

The generated silhouettes are compared with the detected silhouette according to the chosen silhouette representation. Several 2D silhouette representations have been studied, and four have been selected according to requirements in terms of computation time and silhouette quality: geometric features, Hu moments, skeletonisation, and horizontal and vertical projections. The posture of the detected person is chosen as the posture which maximizes the silhouette similarity.
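As an illustration of one of these representations, the sketch below compares silhouettes through their horizontal and vertical projections and selects the posture with the highest similarity. The similarity measure (ratio of overlapping to combined projection mass), the function names and the toy bitmaps are assumptions, since the text does not give the measure used; the bitmaps are also assumed to have the same size.

```python
def projections(mask):
    """Return the row sums and column sums of a binary silhouette bitmap."""
    rows = [sum(row) for row in mask]
    cols = [sum(col) for col in zip(*mask)]
    return rows, cols


def similarity(mask_a, mask_b):
    """Hypothetical similarity in [0, 1] between two same-size silhouettes.

    Overlapping projection mass divided by combined projection mass,
    summed over the horizontal and vertical projections.
    """
    rows_a, cols_a = projections(mask_a)
    rows_b, cols_b = projections(mask_b)
    pairs = list(zip(rows_a, rows_b)) + list(zip(cols_a, cols_b))
    overlap = sum(min(a, b) for a, b in pairs)
    total = sum(max(a, b) for a, b in pairs)
    return overlap / total if total else 1.0


def recognise(detected, generated):
    """Pick the posture whose generated silhouette is most similar."""
    return max(generated, key=lambda name: similarity(detected, generated[name]))


standing = [[0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0]]
lying = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]]
detected = [[0, 1, 0, 0], [0, 1, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
print(recognise(detected, {"standing": standing, "lying": lying}))
```

Projections are cheap to compute, which suits the real-time requirement, but they discard part of the silhouette shape; the other representations trade computation time against silhouette quality differently.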

