Participants: Francois Bremond, Van Thinh Vu, Gabriele Davini, Monique Thonnat.

Our goal was to extend existing video Event Description Language (EDL) and video event recognition algorithms for audio-video event representation and recognition for automatic scene understanding in the framework of the SAMSIT and SERKET projects.

|

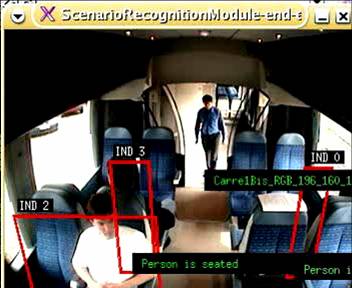

For the first objective the representation of audio-video events, we have added the notion of audio event in our video EDL for audio events that are directly detected by audio processing algorithms. In the extended EDL, a primitive audio event is represented as a state computed from audio processing and is related to the location of the associated microphone. To link this audio event to human activities, we suppose that this audio event has been performed by the people surrounding the microphone. Then, an audio-video event is described as a composite event. Figure 1 shows an example of a composite event combining both audio and video information for describing a real situation for inside train surveillance.

|

For the second objective the real-time recognition of audio-video events, there are two main issues to be focused on: (1) synchronization of audio and video information to fuse both events and (2) fast recognition of complex events. To cope with the synchronization issue, we currently use different configurations of transmission delays between components composing a complex audio-video event for the recognition algorithm to process temporal constraints with time tolerances. More precisely, we define different event models corresponding to variations of reception order between audio and video processing for modeling one audio-video event. In our experimentation, audio events are always detected with an important delay and consequently they are modeled to be at the end of the complex audio-video events. Besides, the recognition of audio-video events remains as the recognition of video events.

Our audio-video EDL and the recognition algorithm have been tested and evaluated on different data of real-word applications. The experimental results show that the proposed algorithm can recognize robustly in real-time activities of interests specified for different applications (e.g. the French SAMSIT project of on-board train surveillance and the European SERKET project on security and threat assessment).

Our research results have contributed to a publication in the Imaging for Crime Detection and Prevention 2006 conference.