Participants: Slawomir Bak, Sundaram Suresh, Francois Bremond, Monique Thonnat.

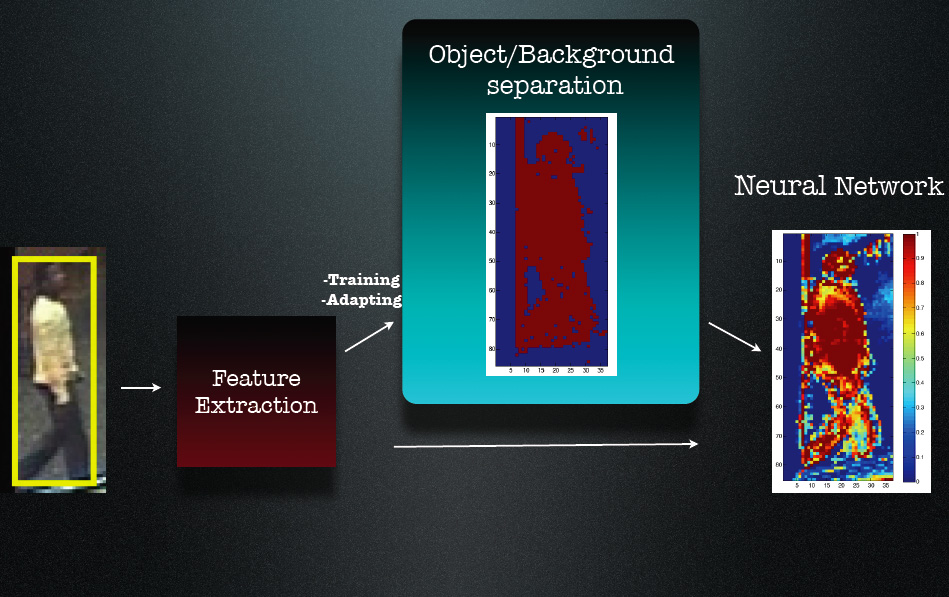

This project focuses on the object tracking problem. Information from motion segmentation is fused with an online adaptive neural classifier, which is used to differentiate the object from its local background. The classifier adapts to changes in illumination and appearance during a sequence. Feedback from the motion segmentation helps avoid drift caused by similar-looking regions in the local background.
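The role of the motion feedback can be illustrated with a small sketch. It is not the fusion rule from the report, which is not detailed here: it assumes, for illustration only, that a pixel is kept as part of the object when the classifier score is positive and the motion segmentation marks it as moving. The names `fuse_with_motion`, `centroid`, `scores` and `motion_mask` are hypothetical.

```python
def fuse_with_motion(scores, motion_mask, threshold=0.0):
    """Keep a pixel only if the classifier votes 'object' AND it is moving.

    Assumption for illustration: fusion is a per-pixel logical AND.
    """
    fused = []
    for score_row, motion_row in zip(scores, motion_mask):
        fused.append([s > threshold and bool(m)
                      for s, m in zip(score_row, motion_row)])
    return fused


def centroid(mask):
    """Return the (row, column) centroid of the True pixels, or None if empty."""
    points = [(r, c) for r, row in enumerate(mask)
              for c, on in enumerate(row) if on]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


# Toy 3x4 patch: column 1 is the target, column 3 is a static background
# region whose appearance also yields positive classifier scores.
scores = [
    [-0.9, 0.8, -0.7, 0.6],
    [-0.8, 0.9, -0.6, 0.7],
    [-0.9, 0.7, -0.8, 0.5],
]
motion_mask = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
]

# Classifier alone: the estimate is pulled towards the distractor.
classifier_only = centroid([[s > 0.0 for s in row] for row in scores])
# Classifier fused with motion: the static distractor is discarded.
fused_estimate = centroid(fuse_with_motion(scores, motion_mask))
```

In this toy patch the classifier-only estimate sits between the target and the similar-looking background column, while the fused estimate stays on the moving target.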

The approach is described in an article prepared in the camera-ready format for INSTICC proceedings:

Bak, S., Suresh, S., Bremond, F., and Thonnat, M. (2008). Fusion of motion segmentation and learning based tracker for visual surveillance. Internal report, INRIA Sophia Antipolis, 8 pages.

The initialization of new targets is based on the motion information obtained from the object classification. Only classified objects are tracked.

The basic building block of the neural classifier is the Radial Basis Function Network (RBFN).

The inputs to the classifier are feature vectors extracted from objects and their local background region. To compute the error in the learning step, we compare the output of the neural network with the result of the object (inside) / background (surroundings) separation scheme.
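As a rough illustration of this learning step, the sketch below implements a very small RBF network with Gaussian hidden units and a linear output, updated online from the error between its output and the object/background label. The update rule (a least-mean-squares step), the fixed centers, the width, the learning rate and the two-dimensional features are all assumptions made for the example; they are not taken from the report.

```python
import math


class RBFNetwork:
    """Tiny RBF network: Gaussian hidden units and one linear output."""

    def __init__(self, centers, width=0.3, learning_rate=0.5):
        self.centers = [list(c) for c in centers]  # fixed centers (assumption)
        self.width = width
        self.learning_rate = learning_rate
        self.weights = [0.0] * len(self.centers)
        self.bias = 0.0

    def _hidden(self, x):
        """Gaussian activation of every hidden unit for feature vector x."""
        activations = []
        for center in self.centers:
            sq_dist = sum((xi - ci) ** 2 for xi, ci in zip(x, center))
            activations.append(math.exp(-sq_dist / (2.0 * self.width ** 2)))
        return activations

    def predict(self, x):
        """Positive output means 'object', negative means 'background'."""
        hidden = self._hidden(x)
        return self.bias + sum(w * h for w, h in zip(self.weights, hidden))

    def update(self, x, target):
        """One online step: compare output with the label and correct weights."""
        hidden = self._hidden(x)
        output = self.bias + sum(w * h for w, h in zip(self.weights, hidden))
        error = target - output
        self.weights = [w + self.learning_rate * error * h
                        for w, h in zip(self.weights, hidden)]
        self.bias += self.learning_rate * error
        return error


# Hypothetical 2-D features: one sample from inside the object,
# one from its surrounding background region.
object_feature = (0.8, 0.2)
background_feature = (0.2, 0.7)

net = RBFNetwork(centers=[object_feature, background_feature])
for _ in range(200):
    net.update(object_feature, +1.0)      # label from the "inside" region
    net.update(background_feature, -1.0)  # label from the "surroundings"

object_score = net.predict(object_feature)
background_score = net.predict(background_feature)
```

Because the weights are corrected after every sample, the same loop can keep running over a sequence, which is one simple way a classifier of this kind could follow gradual changes in illumination or appearance.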

Figure 1. The neural network classifier is composed of three components.

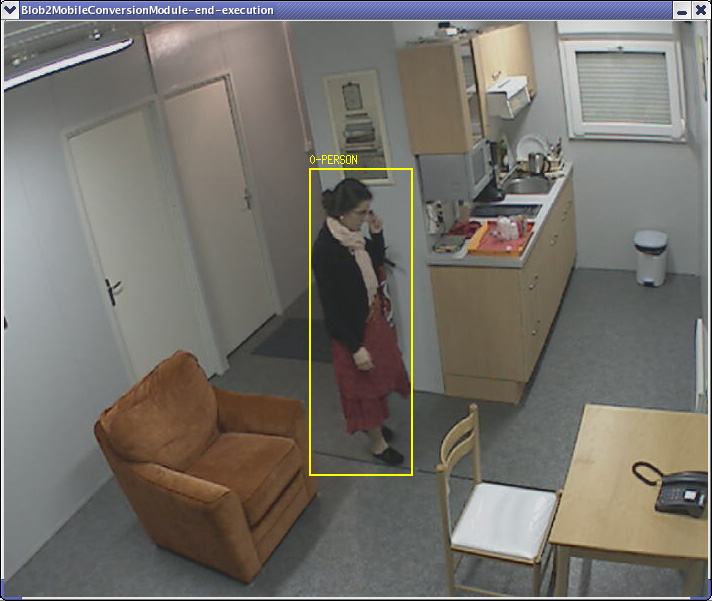

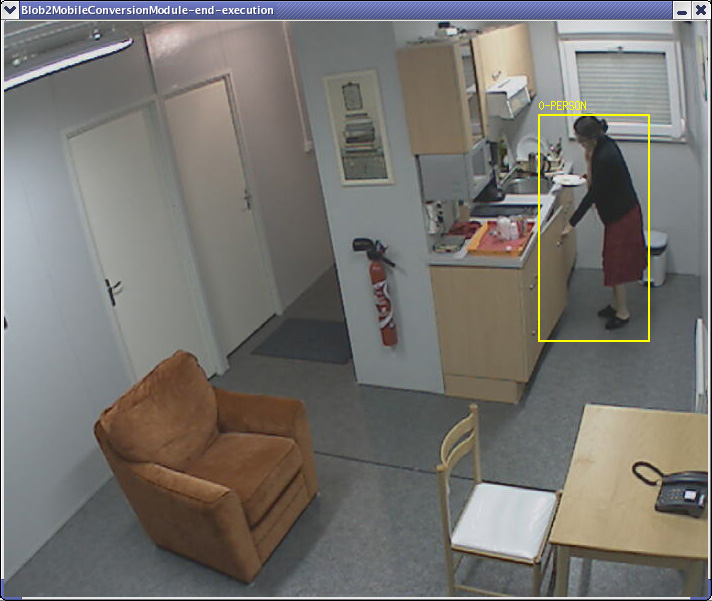

We have tested our approach on several data sets. The first comes from the GERHOME laboratory, a realistic site that reproduces the environment of a typical apartment of an elderly person. The laboratory promotes research in the domain of activity monitoring and assisted living.

Figure 2 illustrates the tracking results in the GERHOME laboratory.

Figure 2. Tracking results from the GERHOME laboratory (video).

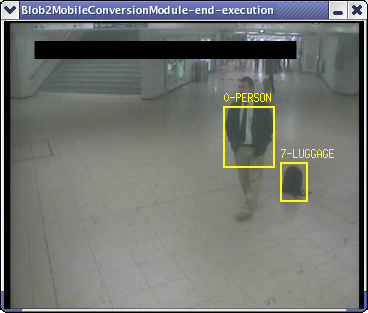

We have also run our algorithm on ETISEO video sequences. Figure 3 shows a sequence from a metro scene (ETI-VS1-MO-7-C1) in which a man drops his bag in a metro station. This is an example of video with weakly contrasted objects.

Figure 3. Tracking results for ETISEO data with weakly contrasted objects (video).

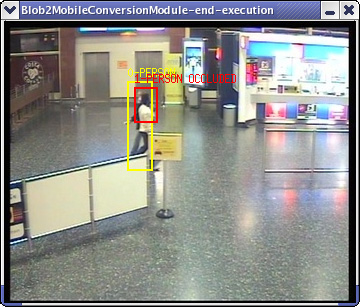

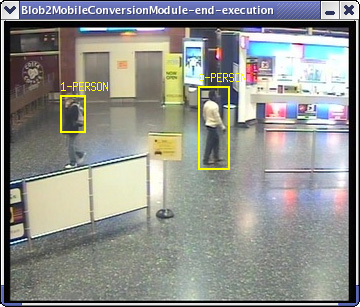

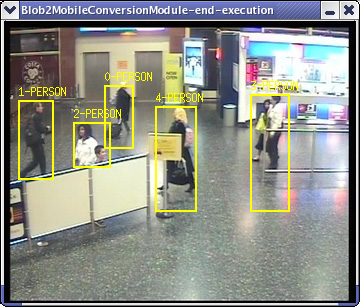

The approach was also tested with TREC Video Retrieval Evaluation (TRECVID 2008) data obtained from the Gatwick Airport surveillance system. Figures 4 and 5 show complex sequences in which many targets cross each other.

Figure 4. Tracking results with TREC Video Retrieval Evaluation data for crossing people (video).

Figure 5. Tracking results with TREC Video Retrieval Evaluation data for complex sequences (video).