Statistics of spike trains.

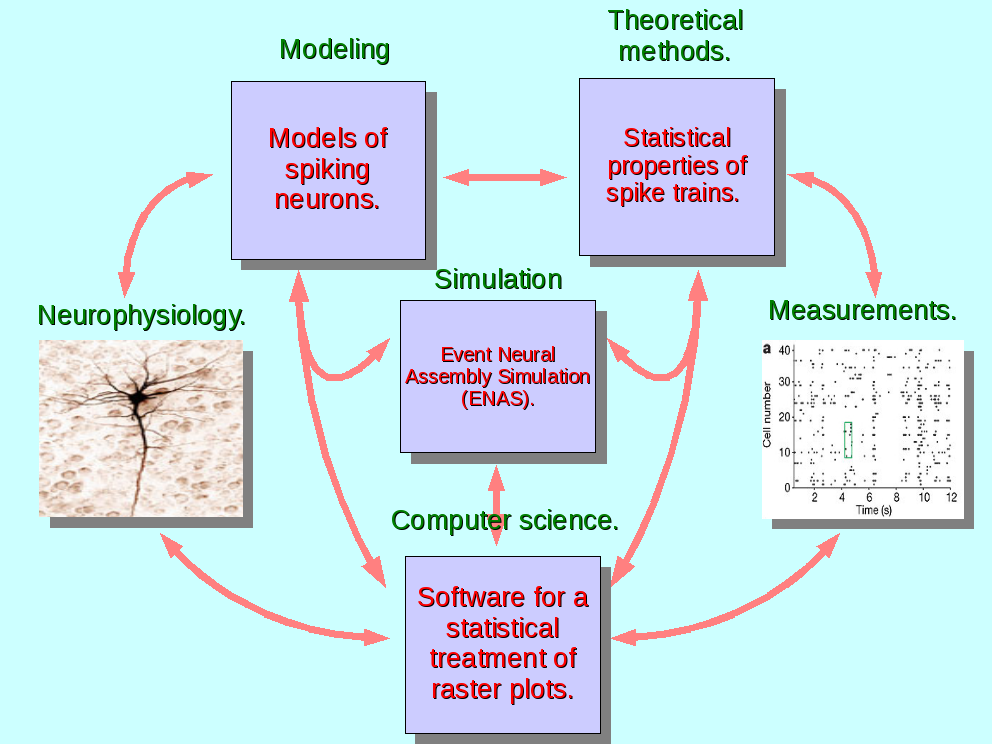

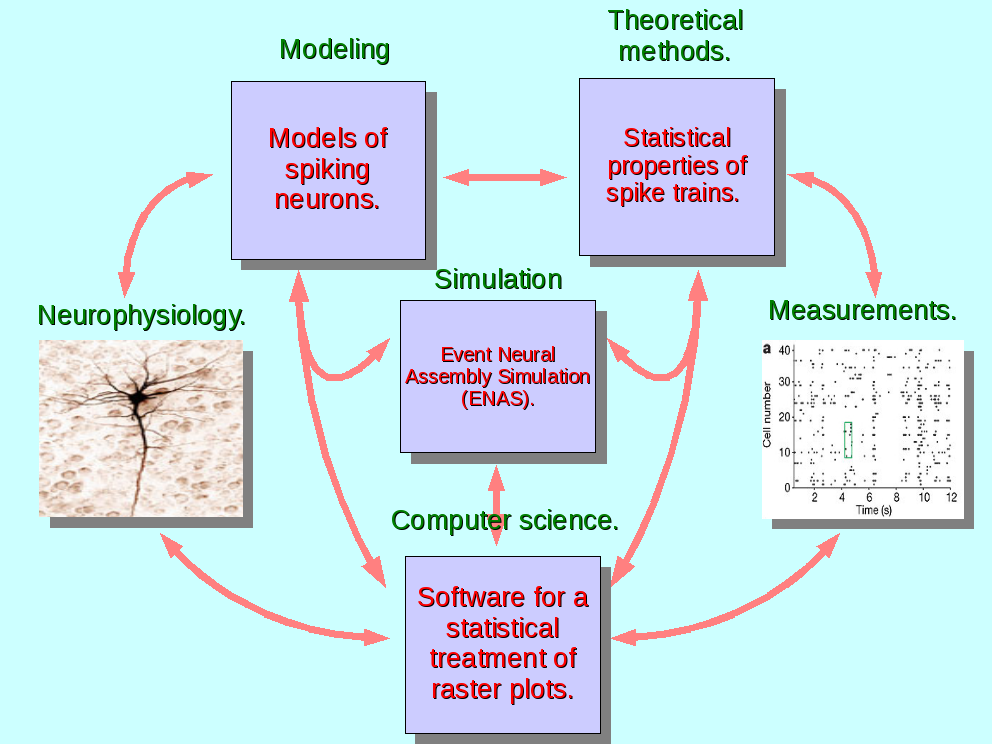

Information transport by neurons is mediated by spike trains. How information is encoded remains an open issue despite numerous significant experiments and theoretical advances [Rieke-et-al:99], [Grammont-et-al, 03], [Georgopoulos-et-al, 07], [Schneidman-et-al, 06], [Starkl-Abeless, 07], [Womelsdorf-et-al, 07]. The analysis of spike trains obtained from in vivo or in vitro experimental data therefore requires suitable statistical models, yet the models commonly used are ad hoc (e.g. Poissonian) and are not necessarily the best suited. Recent work has attempted to construct these models from general principles. A significant example is due to Schneidman and collaborators [Schneidman-et-al, 06]. In experiments on salamander retina, these authors have shown that spike train statistics are well described by a probability distribution of Gibbs type, obtained via a variational principle in which one maximizes the statistical entropy under the constraint of matching the experimentally measured spike correlations. We believe that this approach contains a fundamental method that can be formalised and developed in the framework of dynamical systems theory and statistical physics. With this idea in mind, we are developing the following research directions.
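The variational principle mentioned above can be written schematically as follows. The notation is an assumption introduced here for illustration and does not come from the text: $\omega$ denotes a spike pattern, $\phi_k$ the observables whose averages are constrained (for instance firing rates and pairwise spike correlations), $C_k$ their experimentally measured values, and $\lambda_k$ the associated Lagrange multipliers.

$$
\max_{P}\; S[P] = -\sum_{\omega} P(\omega)\,\log P(\omega)
\quad \text{subject to} \quad
\sum_{\omega} P(\omega)\,\phi_k(\omega) = C_k \ \ \text{for all } k,
\qquad \sum_{\omega} P(\omega) = 1 .
$$

Under these assumptions, the maximizer has the Gibbs form

$$
P(\omega) = \frac{1}{Z}\,\exp\Big(\sum_{k} \lambda_k\,\phi_k(\omega)\Big),
\qquad
Z = \sum_{\omega} \exp\Big(\sum_{k} \lambda_k\,\phi_k(\omega)\Big),
$$

where the multipliers $\lambda_k$ are tuned so that the constraints are satisfied.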

On the one hand, we are analysing models of spiking neural networks using tools from dynamical systems theory and ergodic theory (especially the thermodynamic formalism). We first made a complete characterization of the dynamics exhibited by Integrate-and-Fire models (with conductance-based synapses), and in particular we showed how spike trains provide a symbolic coding of the dynamics [Cessac:07,Cessac-Vieville:08]. Since these models are considered rather good approximations of real neurons (see for example [jolivet-et-al:06]), we expect this analysis to be relevant to theoretical and experimental neuroscience. Our main goal is to characterize the spike train statistics generated by the dynamics of these models. We have recently shown how the thermodynamic formalism used in ergodic theory allows the construction of Gibbs distributions on spike trains via a variational principle, especially after synaptic adaptation mechanisms (synaptic plasticity). In particular, a general class of synaptic plasticity rules can be formulated in terms of the variation of a quantity, the topological pressure, closely related to thermodynamic potentials in statistical physics. As a consequence of this analysis, spike train statistics are more likely to be described by Gibbs probability distributions than by the classical Poisson distributions. Conversely, we believe that some variational problems could be solved by spiking neural networks after suitable training. We are also investigating this issue.

On the other hand, we are developing numerical tools for the analysis of spike trains, such as entropy estimation and approximation of probability distributions of spike trains in raster plots. Part of these numerical tools has been integrated in the ENas (Event Neural Assembly Simulation) library developed at INRIA. In particular, we want to approximate the empirical measure associated with a realisation of a spike train by a Gibbs measure with a suitable (finite-range) potential, estimated from experimental constraints. We want to use these tools, combined with the information obtained from the theoretical analysis, to study experimental data. In particular, we are working on data obtained at the Institut de Neurosciences de la Méditerranée (INCM) on the monkey, in collaboration with the DyVa team at INCM and F. Grammont (Nice University). The potential outcomes are twofold. On a fundamental level, the goal is to take a step further in a promising research area: the statistical characterization of spike trains obtained from in vivo and in vitro data. On a practical level, the goal would be, in the long term, to provide software allowing automatic processing of experimental data.
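As an illustration of what entropy estimation on a raster plot can look like, here is a minimal Python sketch of the empirical measure of spike blocks and the naive (plug-in) entropy estimate derived from it. It is not the ENas implementation: the function names `block_probabilities` and `block_entropy`, and the representation of a raster as a list of per-time-step tuples of 0/1 values, are assumptions made for this example.

```python
from collections import Counter
import math


def block_probabilities(raster, block_len):
    """Empirical probabilities of spike blocks of `block_len` time steps.

    `raster` is a list of time steps; each time step is a tuple of 0/1
    values, one entry per neuron (hypothetical representation).
    """
    n_blocks = len(raster) - block_len + 1
    if block_len < 1 or n_blocks < 1:
        raise ValueError("block_len must be between 1 and len(raster)")
    # Slide a window over the raster and count each distinct block.
    counts = Counter(
        tuple(tuple(step) for step in raster[t:t + block_len])
        for t in range(n_blocks)
    )
    return {block: c / n_blocks for block, c in counts.items()}


def block_entropy(raster, block_len):
    """Plug-in entropy estimate, in bits, of blocks of `block_len` steps."""
    probs = block_probabilities(raster, block_len).values()
    return -sum(p * math.log2(p) for p in probs)


if __name__ == "__main__":
    # One neuron alternating between silence and spiking.
    raster = [(0,), (1,)] * 4
    print(block_entropy(raster, 1))
```

The plug-in estimator is known to be biased for short recordings, so a sketch like this is only a starting point; fitting a Gibbs measure with a finite-range potential, as described above, requires more than these raw block frequencies.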

Main Results.

Theoretical analysis of spiking neurons.

We derive rigorous results describing the asymptotic dynamics of a discrete-time model of spiking neurons introduced in [Soula, Beslon, Mazet, Neural Computation 18:1 (2006)]. Using symbolic dynamics techniques, we show that the dynamics of the membrane potential is in one-to-one correspondence with sequences of spike patterns (``raster plots''). Moreover, though the dynamics is generically periodic, it exhibits a weak form of sensitivity to initial conditions due to the presence of a sharp threshold in the model definition. As a consequence, the model exhibits a dynamical regime indistinguishable from chaos in numerical experiments.
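To make the notions of discrete-time spiking dynamics and raster plot concrete, here is a small Python sketch of a leaky integrate-and-fire network updated in discrete time. The update rule, the function name `simulate`, and all parameter names and values are assumptions chosen for illustration; the text above does not give the model equations, so this should not be read as the exact model of the cited paper. A neuron fires when its potential reaches the threshold `theta`, is then reset, and otherwise leaks with rate `gamma` while receiving synaptic input and a constant current.

```python
def simulate(weights, current, gamma=0.5, theta=1.0, steps=6, v0=None):
    """Iterate a discrete-time leaky integrate-and-fire network.

    Assumed update rule (illustrative only):
        V_i(t+1) = gamma * V_i(t) * (1 - Z_i(t))
                   + sum_j weights[i][j] * Z_j(t) + current[i]
    with Z_i(t) = 1 if V_i(t) >= theta, else 0.

    Returns the raster plot as a list of tuples of 0/1, one per time step.
    """
    n = len(weights)
    v = list(v0) if v0 is not None else [0.0] * n
    raster = []
    for _ in range(steps):
        # Spike pattern at the current time step (sharp threshold).
        spikes = [1 if x >= theta else 0 for x in v]
        raster.append(tuple(spikes))
        # Leak (with reset for neurons that fired), synaptic input, current.
        v = [
            gamma * v[i] * (1 - spikes[i])
            + sum(weights[i][j] * spikes[j] for j in range(n))
            + current[i]
            for i in range(n)
        ]
    return raster


if __name__ == "__main__":
    # A single uncoupled neuron driven by a constant current.
    for pattern in simulate([[0.0]], [0.75]):
        print(pattern)
```

In this toy setting a single neuron driven by a constant current settles, after a short transient, onto a periodic spike sequence, which is the kind of behaviour the result above describes as generic.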

We present a mathematical analysis of networks of Integrate-and-Fire neurons with adaptive conductances. Taking into account the realistic fact that the spike time is only known within some finite precision, we propose a model where spikes are effective at times that are multiples of a characteristic time scale d, where d can be arbitrarily small (in particular, well beyond the numerical precision). We give a complete mathematical characterization of the model dynamics and obtain the following results. The asymptotic dynamics is composed of finitely many stable periodic orbits, whose number and period can be arbitrarily large and can diverge in a region of the synaptic weights space, traditionally called the ``edge of chaos'', a notion mathematically well defined in the present paper. Furthermore, except at the edge of chaos, there is a one-to-one correspondence between the membrane potential trajectories and the raster plot. This shows that the neural code is entirely ``in the spikes'' in this case. As a key tool, we introduce an order parameter, easy to compute numerically, and closely related to a natural notion of entropy, providing a relevant characterization of the computational capabilities of the network. This allows us to compare the computational capabilities of leaky Integrate-and-Fire models and conductance-based models. The present study considers networks with constant input, and without time-dependent plasticity, but the framework has been designed for both extensions.

With care, and keeping in mind that the biological plausibility of neuronal models with respect to real brain activity is still an open question, we revisit some mathematical and numerical aspects of generalized Integrate and Fire (gIF) models and propose to eliminate assumptions related to spurious discontinuities. This concerns both the fire regime and the integrate regime of the neuron. From this new point of view, some ``biological'' results obtained on ``models'' are to be reconsidered. This also has positive consequences. It allows us to reduce the bio-physical membrane equation to a very simple but powerful gIF numerical model. This also dramatically reduces the algorithmic complexity of event-based network simulations, as shown experimentally here.

B. Cessac, H. Rostro, J.C. Vasquez, T. Viéville, "Statistics of spikes trains, synaptic plasticity and Gibbs distributions", submitted to NeuroComp 2008.

We introduce a mathematical framework in which the statistics of spike trains, produced by neural networks evolving under synaptic plasticity, can be analysed.

B. Cessac, H. Rostro, J.C. Vasquez, T. Viéville, "To which extend is the ``neural code'' a metric ?", submitted to NeuroComp 2008.

We propose a review of the different choices for structuring spike trains using deterministic metrics. Temporal constraints observed in biological or computational spike trains are first taken into account. The relation with existing neural codes (rate coding, rank coding, phase coding, ...) is then discussed. The extent to which the ``neural code'' contained in spike trains is related to a metric appears to be a key point, and a generalization of the Victor-Purpura metric family is proposed for temporally constrained causal spike trains.
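For readers unfamiliar with the Victor-Purpura family, the following Python sketch computes the classical Victor-Purpura spike-time distance by dynamic programming. It illustrates only the standard metric, not the generalization proposed in the paper; the function name `victor_purpura` and the representation of a spike train as a sorted list of spike times are assumptions made for this example. Deleting or inserting a spike costs 1, and shifting a spike by a time `dt` costs `q * dt`, where `q` sets the temporal precision of the comparison.

```python
def victor_purpura(train_a, train_b, q):
    """Classical Victor-Purpura distance between two spike trains.

    `train_a` and `train_b` are sorted lists of spike times (hypothetical
    representation); `q` is the cost per unit time of shifting a spike.
    """
    na, nb = len(train_a), len(train_b)
    # d[i][j]: distance between the first i spikes of a and first j of b.
    d = [[0.0] * (nb + 1) for _ in range(na + 1)]
    for i in range(1, na + 1):
        d[i][0] = float(i)  # delete i spikes
    for j in range(1, nb + 1):
        d[0][j] = float(j)  # insert j spikes
    for i in range(1, na + 1):
        for j in range(1, nb + 1):
            shift = q * abs(train_a[i - 1] - train_b[j - 1])
            d[i][j] = min(
                d[i - 1][j] + 1.0,       # delete a spike from train_a
                d[i][j - 1] + 1.0,       # insert a spike from train_b
                d[i - 1][j - 1] + shift  # shift one spike onto the other
            )
    return d[na][nb]


if __name__ == "__main__":
    print(victor_purpura([1.0, 2.0], [1.0, 2.5], q=1.0))
```

With `q = 0` the distance reduces to the difference in spike counts (a rate-like comparison), while for large `q` any timing mismatch is treated as a deletion plus an insertion, which makes the metric sensitive to precise spike timing.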

Numerical analysis of spike trains.

ENas (Event Neural Assembly Simulation).

Analysis of real data.

In progress.