SCIENTIFIC

Philosopher Doctorate Thesis in INFORMATICS

INRIA

and University of Nice - Sophia Antipolis — Doctoral School of Sciences and

Technologies of Information and Communication (S.T.I.C.)

Distributed Artificial Intelligence and Knowledge Management:

Distributed Artificial Intelligence and Knowledge Management:

ontologies and multi-agent systems for a corporate

semantic web *

by Fabien GANDON

defended Thursday the 7th of

November 2002

* Intelligence

artificielle distribuée et gestion des connaissances : ontologies et systèmes

multi-agents pour un web sémantique organisationnel

|

Thesis

Director:

|

§ Mme Rose Dieng-Kuntz (Research Director)

|

|

Reporters:

|

§ Mr Joost Breuker (Professor)

§ Mr Les Gasser (Professor)

§ Mr Jean-Paul Haton (Professor)

|

|

Jury:

|

§ Mr Pierre Bernhard (Professor - President of the jury)

§ Mr Jacques Ferber (Professor)

§ Mr Hervé Karp (Consultant)

§ Mr Gilles Kassel (Professor)

§ Mr Agostino Poggi (Professor)

|

Dedicated

To my whole family without whom I

would not be where I am, and even worse, I would not be at all.

To my parents Michel and Josette Gandon for their infallible

support and advice to a perpetually absent son.

To my sister Adeline Gandon from a

brother always on the way to some other place.

To my friends for not giving up

their friendship to the ghost of myself.

Acknowledgements

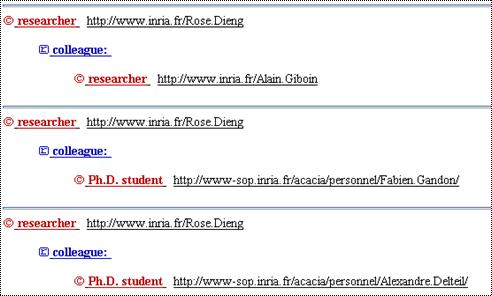

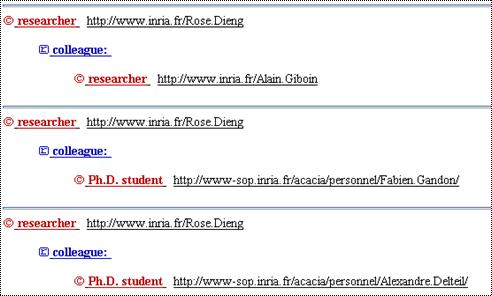

To Rose Dieng-Kuntz, director of

my PhD, for her advice, her support and her trust in me and whithout whom this

work would never have been done.

To Olivier Corby, for the

extremely fruitful technical discussions we had and his constant meticulous

work on CORESE.

To Alain Giboin, for the inspiring

discussions we had on cognitive aspects and his supervision of the ergonomics

and evaluation matters.

To the whole ACACIA team for the work we did in common, in particular to Laurent Berthelot, Alexandre Delteil, Catherine

Faron-Zucker and Carolina Medina

Ramirez.

To the assistant of the ACACIA team, Hortense

Hammel and Sophie Honnorat for

their advice and help in the day-to-day work and their assistance in the

logistic of the PhD.

To all the partners of the CoMMA IST European project, as well as all the

trainees involved in it.

To the members of the jury and the reporters of this Ph.D for accepting the

extra amount of work.

To Catherine Barry and Régine Loisel who introduced me to

research during my M.Phil.

To the SEMIR department of INRIA, in charge of the computer facilities for

its excellent work in maintaining a high quality working environment.

To the librarian department of INRIA, that did a valuable work to provide me

with information resources and references vital to my work.

To INRIA and University

of Nice Sophia Antipolis

for providing me with the means to carry out this work.

To the European Commission that funded the CoMMA IST project

Table of

Contents

Introduction 17

Guided tour of relevant literature 21

1 Organisational

knowledge management 23

1.1 Needs

for knowledge management 24

1.1.1 Needs

due to the organisational activity 24

1.1.2 Needs

due to external organisational factors 24

1.1.3 Needs

due to internal organisational factors 25

1.1.4 Needs

due to the nature of information and knowledge 26

1.2 Organisational

memories 27

1.2.1 Nature

of organisational knowledge 29

1.2.2 Knowledge

management and organisational memory lifecycle 33

1.2.3 Typology

or facets of organisational memories 36

1.3 Organisation

modelling 40

1.3.1 Origins

of organisational model 40

1.3.2 Ontology

based approaches for organisation modelling 42

1.3.3 Concluding

remarks 44

1.4 User-modelling

and human-computer interaction 45

1.4.1 Nature

of the model 45

1.4.2 Adaptation

and customisation 46

1.4.3 Application

domains and use in knowledge management 47

1.5 Information

retrieval systems 49

1.5.1 Resources

and query: format and surrogates 50

1.5.1.1 Initial

structure 50

1.5.1.2 Surrogate

generation 51

1.5.1.2.1 Surrogate

for indexing and querying 51

1.5.1.2.2 Statistic

approaches to surrogate generation 53

1.5.1.2.3 Semantic

approaches to surrogate generation 54

1.5.2 Querying

a collection 56

1.5.3 Query

and results: the view from the user side 57

1.5.4 The

future of information retrieval systems 60

2 Ontology

and knowledge modelling 61

2.1 Ontology:

the object 62

2.1.1 Ontology:

an object of artificial intelligence and conceptual tool of Knowledge Modelling 62

2.1.2 Definitions

adopted here 65

2.1.3 Overview

of existing ontologies 70

2.1.3.1 A

variety of application domains 70

2.1.3.2 Some

ontologies for organisational memories 71

2.1.4 Lifecycle

of an ontology: a living object with a maintenance cycle 72

2.2 Ontology:

the engineering 74

2.2.1 Scope

and Granularity: the use of scenarios for specification 74

2.2.2 Knowledge

Acquisition 77

2.2.3 Linguistic

study & Semantic commitment 81

2.2.4 Conceptualisation

and Ontological commitment 83

2.2.5 Taxonomic

skeleton 84

2.2.5.1 Differential

semantics [Bachimont, 2000] 86

2.2.5.2 Semantic

axis [Kassel et al., 2000] 87

2.2.5.3 Checking

the taxonomy [Guarino and Welty, 2000] 88

1.1.1.4 Formal

concept analysis for lattice generation 91

1.1.1.5 Relations

have intensions too 92

1.1.1.6 Semantic

commitment 93

1.1.6 Formalisation

and operationalisation of an ontology 94

1.1.6.1 Logic

of propositions 96

1.1.6.2 Predicate

or first order logic 96

1.1.6.3 Logic

Programming language 97

1.1.6.4 Conceptual

graphs 97

1.1.6.5 Topic

Maps 98

1.1.6.6 Frame

and Objet oriented formalisms 98

1.1.6.7 Description Logics 99

1.1.6.8 Conclusion on formalisms 100

1.1.7 Reuse 100

3 Structured

and semantic Web 105

3.1 Beginnings

of the semantic web 106

3.1.1 Inspiring

projects 106

3.1.1.1 SHOE 106

3.1.1.2 Ontobroker 106

3.1.1.3 Ontoseek 107

3.1.1.4 ELEN 107

3.1.1.5 RELIEF 107

3.1.1.6 LogicWeb 107

3.1.1.7 Untangle 108

3.1.1.8 WebKB 108

3.1.1.9 CONCERTO 108

3.1.1.10 OSIRIX 109

3.1.2 Summary

on ontology-based information systems 109

3.2 Notions

and definitions 111

3.3 Toward

a structured and semantic Web 113

3.3.1 XML:

Metadata Approach 113

3.3.2 RDF(S):

Annotation approach 117

3.3.2.1 Resource

Description Framework: RDF datamodel 117

3.3.2.2 RDF

Schema: RDFS meta-model 120

3.4 Summary

and perspectives 125

3.4.1 Some

implementations of the RDF(S) framework 126

3.4.1.1 ICS-FORTH

RDFSuite 126

3.4.1.2 Jena 126

3.4.1.3 SiLRI

and TRIPLE 127

3.4.1.4 CORESE 127

3.4.1.5 Karlsruhe

Ontology (KAON) Tool Suite 127

3.4.1.6 Metalog 128

3.4.1.7 Sesame 128

3.4.1.8 Protégé-2000 128

3.4.1.9 WebODE 129

3.4.1.10 Mozilla RDF 129

3.4.2 Some extension initiatives 130

3.4.2.1 DAML-ONT 130

3.4.2.2 OIL

and DAML+OIL 130

3.4.2.3 DRDF(S) 131

3.4.2.4 OWL 132

3.5 Remarks

and conclusions 133

4 Distributed

artificial intelligence 135

4.1 Agents

and multi-agent systems 137

4.1.1 Notion

of agent 137

4.1.2 Notion

of multi-agent systems 143

4.1.3 Application

fields and interest 144

4.1.4 Notion

of information agents and multi-agent information systems 145

4.1.4.1 Tackling

the heterogeneity of the form and the means to access information 145

4.1.4.2 Tackling

growth and distribution of information 146

4.1.4.3 Transversal

issues and characteristics 146

4.1.4.4 The

case of organisational information systems 149

4.1.5 Chosen

definitions 150

4.2 Design

rationale for multi-agent systems 151

4.2.1 Overview

of some methodologies or approaches 151

4.2.1.1 AALAADIN

and the A.G.R. model 151

4.2.1.2 AOR 152

4.2.1.3 ARC 152

4.2.1.4 ARCHON 153

4.2.1.5 AUML 153

4.2.1.6 AWIC 154

4.2.1.7 Burmeister's

Agent-Oriented Analysis and Design 154

4.2.1.8 CoMoMAS 155

4.2.1.9 Cassiopeia

methodology 155

4.2.1.10 DESIRE 156

4.2.1.11 Dieng's

methodology for specifying a co-operative system 156

4.2.1.12 Elammari

and Lalonde 's AgentOriented Methodology 157

4.2.1.13 GAIA 157

4.2.1.14 Jonker

et al.'s Design of Collaborative

Information Agents 158

4.2.1.15 Kendall

et al.'s methodology for developing

agent systems for enterprise integration 158

4.2.1.16 Kinny

et al. 's methodology for systems of

BDI agents 159

4.2.1.17 MAS-CommonKADS 160

4.2.1.18 MASB 161

4.2.1.19 MESSAGE 161

4.2.1.20 Role

card model for agents 162

4.2.1.21 Verharen's

methodology for co-operative information agents design 162

4.2.1.22 Vowels 163

4.2.1.23 Z

specifications for agents 163

4.2.1.24 Remarks

on the state of the art of methodologies 164

4.2.2 The

organisational design axis and role turning-point 166

4.3 Agent

communication 168

4.3.1 Communication

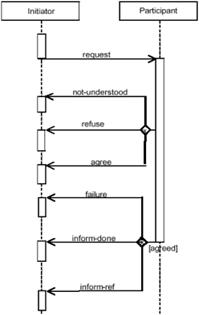

language: the need for syntactic and semantic grounding 168

4.3.2 Communication

protocols: the need for interaction rules 169

4.3.3 Communication

and content languages 170

4.4 Survey

of multi-agent information systems 174

4.4.1 Some

existing co-operative or multi-agent information systems 174

4.4.1.1 Enterprise Integration Information Agents 174

4.4.1.2 SAIRE 175

4.4.1.3 NZDIS 175

4.4.1.4 UMDL 176

1.1.1.5 InfoSleuth™ 176

1.1.1.6 Summarising

remarks on the field 178

1.1.2 Common

roles for agents in multi-agent information systems 179

1.1.2.1 User

agent role 179

1.1.2.2 Resource

agent role 180

1.1.2.3 Middle

agent role 182

1.1.2.4 Ontology

agent role 183

1.1.2.5 Executor

agent role 183

1.1.2.6 Mediator

and facilitator agent role 184

1.1.2.7 Other

less common specific roles 184

1.5 Conclusion

of this survey of multi-agent systems 185

5 Research

context and positioning 187

5.1 Requirements 188

5.1.1 Application

scenarios 188

5.1.1.1 Knowledge

management to improve the integration of a new employee 188

5.1.1.2 Knowledge

management to support technology monitoring and survey 189

5.1.2 The

set of needed functionalities common to both scenarios 190

5.2 Implementation

choices 192

5.2.1 Organisational

memory as an heterogeneous and distributed information landscape 192

5.2.2 Stakeholders

of the memory as an heterogeneous and distributed population 193

5.2.3 Management

of the memory as an heterogeneous and distributed set of tasks 193

5.2.4 Ontology:

the cornerstone providing a semantic grounding 194

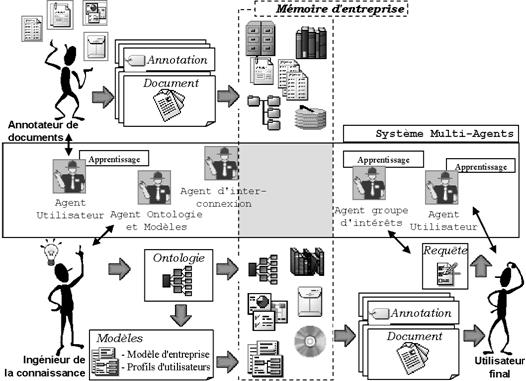

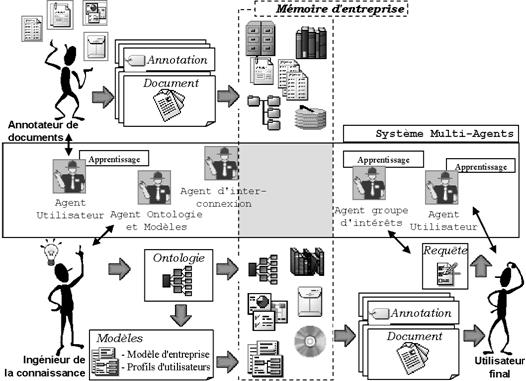

Positioning and envisioned system 195

5.3.1 Related

works and positioning in the state of the art 195

5.3.2 Overall

description the CoMMA solution 198

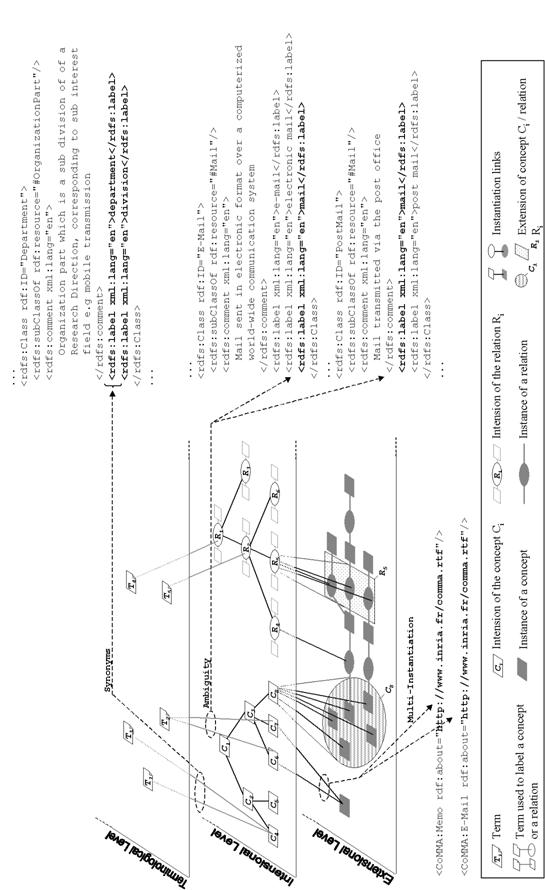

Ontology & annotated corporate memory 201

6 Corporate

semantic web 203

6.1 An

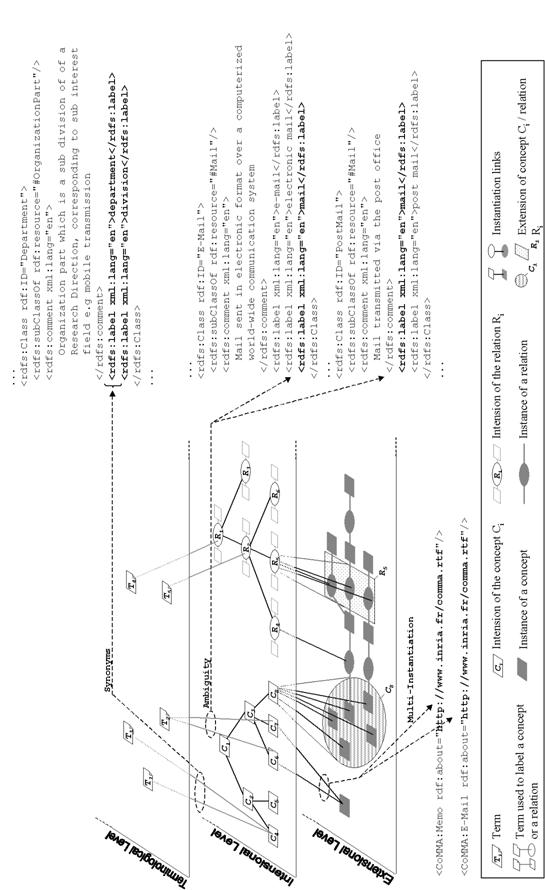

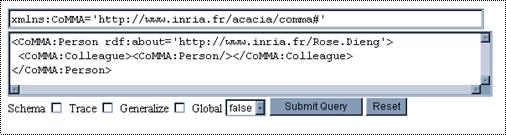

annotated world for agents 204

6.2 A

model-based memory 205

6.3 CORESE:

COnceptual REsource Search Engine 208

6.4 On

the pivotal role of an ontology 212

7 Engineering

the O'CoMMA ontology 213

7.1 Scenario

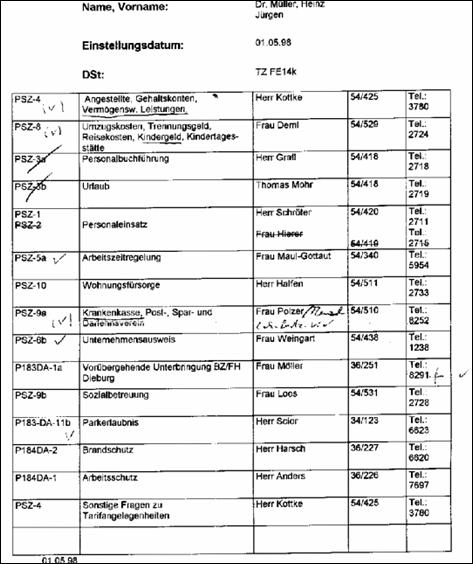

analysis and Data collection 214

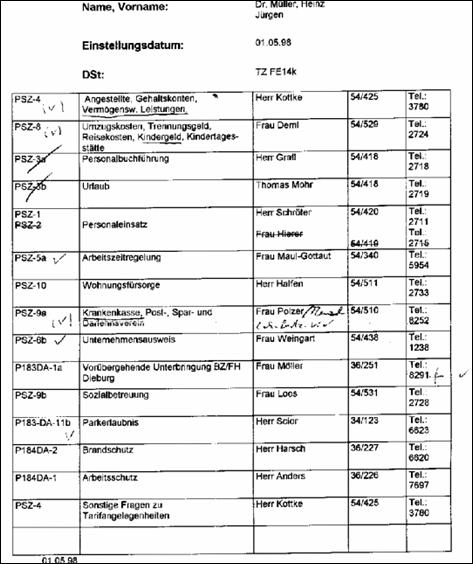

7.1.1 Scenario-based

analysis 214

7.1.2 Semi-structured

interviews 218

7.1.3 Workspace

observations 219

7.1.4 Document

Analysis 220

7.1.5 Discussion

on data collection 222

7.2 Reusing

ontologies and other sources of expertise 223

7.3 Initial

terminological stage 224

7.4 Perspectives

in populating and structuring the ontology 225

7.5 From

semi-informal to semi-formal 227

7.6 On a

continuum between formal and informal 230

7.7 Axiomatisation

and needed granularity 238

7.8 Conclusion

and abstraction 242

8 The

ontology O'CoMMA 245

8.1 Overall

structure of O'CoMMA 246

8.2 Top

of O'CoMMA 247

8.3 Ontology

parts dedicated to organisational modelling 248

8.4 Ontology

parts dedicated to user profiles 250

8.5 Ontology

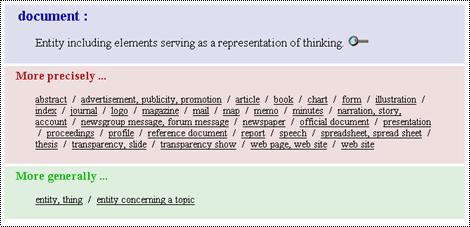

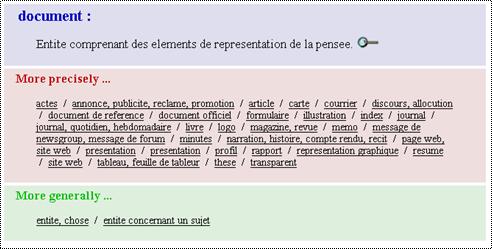

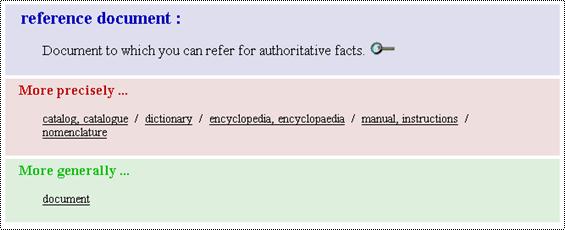

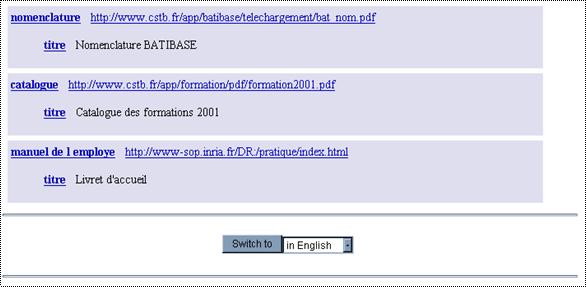

parts dedicated to documents 253

8.6 Ontology

parts dedicated to the domain 254

8.7 The

hierarchy of properties 255

8.8 End-Users'

extensions 257

8.9 Discussion

and abstraction 261

Multi-agent system for memory management 265

9 Design

rationale of the multi-agent architecture 267

9.1 From

macroscopic to microscopic 268

9.1.1 Architecture

versus configuration 268

9.1.2 Organising

sub-societies 269

9.1.2.1 Hierarchical

society: a separated roles structure 269

9.1.2.2 Peer-to-peer

society: an egalitarian roles structure 270

9.1.2.3 Clone

society: a full replication structure 271

9.1.2.4 Choosing

an organisation 271

9.1.3 Sub-society

dedicated to ontology and organisational model 271

9.1.4 Annotation

dedicated sub-society 272

9.1.5 Interconnection

dedicated sub-society 273

9.1.6 User

dedicated sub-society 273

9.1.6.1 Possible

options 274

9.1.6.2 User

profile storage 274

9.1.6.3 Other

possible roles for interest group management 275

9.1.7 Overview

of the sub-societies 276

9.2 Roles,

Interactions and behaviours 277

9.2.1 Accepted

roles 277

9.2.1.1 Ontology

Archivist 277

9.2.1.2 Corporate

Model Archivist 278

9.2.1.3 Annotation

Archivist 278

9.2.1.4 Annotation

Mediator 279

9.2.1.5 Directory

Facilitator 279

9.2.1.6 Interface Controller 280

9.2.1.7 User Profile Manager 280

9.2.1.8 User

Profile Archivist 281

9.2.2 Characterising

and comparing roles 282

9.2.3 Social

interactions 284

9.2.4 Behaviour

and technical competencies 287

9.3 Agents

types and deployment 288

9.3.1 Typology

of implemented agents 288

9.3.1.1 Interface

Controller Agent Class implementation 288

9.3.1.2 User

Profile Manager Agent Class implementation 291

9.3.1.3 User

Profile Archivist Agent Class implementation 291

9.3.1.4 Directory

Facilitator Agent Class implementation 292

9.3.1.5 Ontology

Archivist Agent Class implementation 292

9.3.1.6 Annotation

Mediator Agent Class implementation 292

9.3.1.7 Annotation

Archivist Agent Class implementation 292

9.3.2 Deployment

configuration 293

9.4 Discussion

and abstraction 294

10 Handling

annotation distribution 297

10.1 Issues

of distribution 298

10.2 Differentiating

archivist agents 300

10.3 Annotation

allocation 303

10.3.1 Allocation protocol 303

10.3.2 Pseudo semantic distance 305

10.3.2.1 Definition

of constants 305

10.3.2.2 Distance

between two literals 305

10.3.2.3 Pseudo-distance

literal - literal interval 307

10.3.2.4 Distance

between two ontological types 307

10.3.2.5 Distance

between a concept type and a literal 309

10.3.2.6 Pseudo-distance

annotation triple - property family 309

10.3.2.7 Pseudo-distance

annotation triple - ABIS 309

10.3.2.8 Pseudo-distance

annotation triple - CAP 310

10.3.2.9 Pseudo-distance

annotation - ABIS 310

10.3.2.10 Pseudo-distance

annotation - CAP 310

10.3.2.11 Pseudo-distance

annotation - AA 310

10.3.3 Conclusion

and discussion on the Annotation allocation 311

10.4 Distributed

query-solving 312

10.4.1 Allocating

tasks 312

10.4.2 Query

solving protocol 314

10.4.3 Distributed

query solving (first simple algorithm) 316

10.4.3.1 Query

simplification 316

10.4.3.2 Constraint

solving 317

10.4.3.3 Question

answering 317

10.4.3.4 Filtering

final results 317

10.4.3.5 Overall

algorithm and implementation details 319

10.4.4 Example

and discussion 321

10.4.5 Distributed

query solving (second algorithm) 326

10.4.5.1 Discussion

on the first algorithm 326

10.4.5.2 Improved

algorithm 327

10.5 Annotation

push mechanism 329

10.6 Conclusion

and discussion 331

Lessons learned & Perspectives 333

11 Evaluation

and return on experience 335

11.1 Implemented

functionalities 336

11.2 Non-implemented

functionalities 337

11.3 Official

evaluation of CoMMA 338

11.3.1 Overall

System and Approach evaluation 338

11.3.1.1 First

trial evaluation 339

11.3.1.2 Final

trial evaluation 340

11.3.2 Criteria

used for specific aspects evaluation 342

11.3.3 The

ontology aspect 344

11.3.3.1 Appropriateness

and relevancy 344

11.3.3.2 Usability

and exploitability 344

11.3.3.3 Adaptability,

maintainability and flexibility 345

11.3.3.4 Accessibility

and guidance 345

11.3.3.5 Explicability

and documentation 346

11.3.3.6 Expressiveness,

relevancy, completeness, consistency and reliability 346

11.3.3.7 Versatility,

modularity and reusability 346

11.3.3.8 Extensibility 347

11.3.3.9 Interoperability

and portability 347

11.3.3.10 Feasibility,

scalability and cost 347

11.3.4 The

semantic web and conceptual graphs 348

11.3.4.1 Usability,

exploitability, accessibility and cost 348

11.3.4.2 Flexibility,

adaptability, extensibility, modularity, reusability and versatility 349

11.3.4.3 Expressiveness 349

11.3.4.4 Appropriateness

and maintainability 350

11.3.4.5 Portability,

integrability and interoperability 350

11.3.4.6 Feasibility,

scalability, reliability and time of response 351

11.3.4.7 Documentation 352

11.3.4.8 Relevancy,

completeness and consistency 352

11.3.4.9 Security,

explicability, pro-activity and guidance 352

11.3.5 The

multi-agent system aspect 353

11.3.5.1 Appropriateness,

modularity and exploitability 353

11.3.5.2 Usability,

feasibility, explicability, guidance and documentation 353

11.3.5.3 Adaptability

and pro-activity 354

11.3.5.4 Interoperability,

expressiveness and portability 354

11.3.5.5 Scalability,

reliability and time of response 355

11.3.5.6 Security 355

11.3.5.7 Cost 355

11.3.5.8 Integrability

and maintainability 355

11.3.5.9 Flexibility,

versatility, extensibility and reusability 356

11.3.5.10 Relevancy,

completeness, consistency 356

11.4 Conclusion

and discussion on open problems 357

12 Short-term

improvements 359

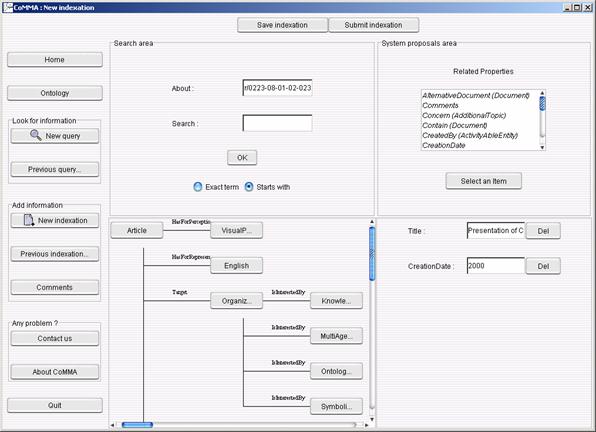

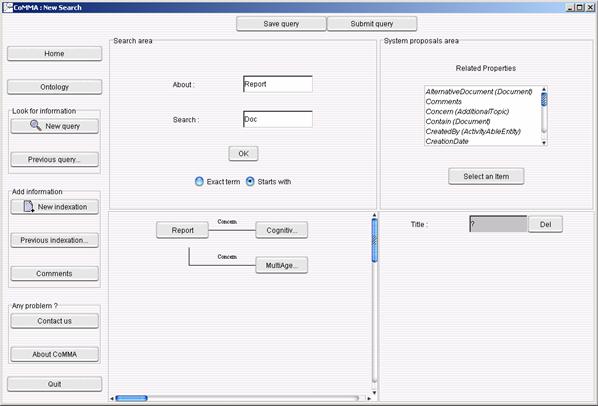

12.1 Querying

and annotating through ontologies: from conceptual concerns to users' concerns 360

12.2 Improving

the pseudo-distance 370

12.2.1 Introducing

other criteria 370

12.2.2 The

non-semantic part problem 370

12.2.3 The

subsumption link is not a unitary length 371

12.3 Improving

distributed solving 376

12.3.1 Multi-instantiation

and distributed solving 376

12.3.2 Constraint

sorting 377

12.3.3 URI

in ABIS 377

12.4 Ontology

service improvements 378

12.4.1 Precise

querying to retrieve the ontology 378

12.4.2 Propagating

updates of the ontology 378

12.5 Annotation

management improvements 379

12.5.1 Query-based

push registration 379

12.5.2 Allowing

edition of knowledge 379

12.5.3 Update

scripting and propagation 380

12.6 Conclusion

on short-term perspectives 381

13 Long-term

perspectives 383

13.1 Ontology

and multi-agent systems 384

13.1.1 Ontological

lifecycle 384

13.1.1.1 Emergence

and management of the ontological consensus 384

13.1.1.2 Maintenance

and evolution 385

13.1.1.3 Ontologies

as interfaces, ontologies and interfaces 386

13.1.1.4 Conclusion 388

13.1.2 Ontologies

for MAS vs. MAS for ontologies 389

13.1.2.1 Use

of ontologies for multi-agent systems 389

13.1.2.2 Use

of multi-agent systems for ontologies 389

13.2 Organisational

memories and multi-agent systems 390

13.2.1 Opening

the system: no actor is an island 391

13.2.1.1 Extranets:

coupling memories 391

13.2.1.2 Web-mining:

wrapping external sources 392

13.2.1.3 Collaborative

filtering: exploiting the organisational identity 394

13.2.2 Closing

the system: security and system management 395

13.2.3 Distributed

rule bases and rule engines 395

13.2.4 Workflows

and information flows 396

13.2.5 New

noises 396

13.3 Dreams 399

Conclusion 403

Annexes 409

14 Résumé in french 411

14.1 Le projet CoMMA 413

14.2 Web sémantique d'entreprise 415

14.2.1 Du Web Sémantique à l'intraweb sémantique 415

14.2.2 Une mémoire basée sur un modèle 415

14.2.2.1 Description des profils utilisateur : "annoter les

personnes" 416

14.2.2.2 Description de l'entreprise : "annoter

l'organisation" 416

14.2.2.3 Architecture de la mémoire 416

14.3 CORESE: moteur de recherche sémantique 418

14.4 Conception De L'ontologie O'CoMMA 419

14.4.1 Position et Définitions 419

14.4.2 Analyse par scénarios et Recueil 420

14.4.3 Les scénarios comme guides de conception 420

14.4.4 Recueil spécifique à l’entreprise 420

14.4.5 Recueil non spécifique à l’entreprise 422

14.4.6 Phase terminologique 422

14.4.7 Structuration : du semi-informel au semi-formel 423

14.4.8 Formalisation de l'ontologie 426

14.4.9 Extensions nécessaires à la formalisation 428

14.5 Contenu de l'ontologie O'CoMMA 429

14.6 Les agents de la mémoire 431

14.6.1 Une architecture multi-agents 431

14.6.1.1 Système d'information multi-agents 431

14.6.1.2 Du niveau macroscopique du SMA au niveau microscopique des

agents 432

14.6.1.3 Rôles, interactions et protocoles 433

14.7 Cas de la société dédiée aux annotations 436

14.8 Discussion

et perspectives 437

15 O'CoMMA 439

15.1 Lexicon

view of concepts 439

15.2 Lexicon

view of relations 452

16 Tables

of illustrations 455

16.1 List

of figures 455

16.2 List

of tables 460

17 References 463

Introduction

The beginning is the most

important

part of the work.

— Plato

Human societies

are structured and ruled by organisations. Organisations

can be seen as abstract holonic living entities composed of individuals and

other organisations. The raison d'être

of these living entities is a set of core activities that answer needs of other

organisations or individuals. These core activities are the result of the

collective work of the members of the organisation. The global activity relies

on organisational structures and infrastructures that are supervised by an

organisational management. The management aims at effectively co-ordinating the

work of the members in order to obtain a collective achievement of the core

organisational activities.

The individual

work, be it part of the organisational management or the core activities,

requires knowledge. The whole knowledge mobilised by an organisation for its

functioning forms an abstract set called organisational knowledge; a lack of

organisational knowledge may result in organisational dysfunction.

As the speed of

markets is rising and their dimensions tend towards globalisation, reaction

time is shortening and competitive pressure is growing; information loss may

lead to a missed opportunity. Organisations must react quickly to changes in

their domain and in the needs they answer, and even better they must anticipate

them. In this context, knowledge is an organisational asset for competitiveness

and survival, the importance of which has been growing fast in the last decade.

Thus organisational management now explicitly includes the activity of knowledge management that addresses problems of identification,

acquisition, storage, access, diffusion, reuse and maintenance of both internal

and external knowledge.

One approach for

managing knowledge in an organisation, is to set up an organisational memory

management solution: the organisational

memory aspect is in charge of ensuring the persistent storage and/or

indexing of the organisational knowledge and its management solution is in charge of capturing relevant pieces of

knowledge and providing the concerned persons with them, both activities being

carried out at the appropriate time, with the right level of details and in an

adequate format. An organisational memory relies on knowledge resources i.e., documents, people, formalised

knowledge (e.g.: software, knowledge bases) and other artefacts in which

knowledge has been embedded.

Such memory and

its management require methodologies and tools to be operationalised.

Resources, such as documents, are information supports therefore, their

management can benefit from results in informatics and the assistance of

software solutions developed in the field. The work I am presenting here, was

carried out during my Ph.D. in Informatics, within ACACIA,

a multidisciplinary research team of INRIA

that aims at offering models, methods and tools for building a corporate

memory. My research was applied to the realisation of CoMMA (Corporate Memory

Management through Agents) a two-year European IST project.

In this thesis, I

shall show that (1) the semantic webs can

provide distributed knowledge spaces for knowledge management; (2) ontologies are applicable and effective

means for supporting distributed knowledge spaces; (3) multi-agent systems are applicable and effective architectures for

managing distributed knowledge spaces. To this end, I devided the plan of

this document into four parts which structure a total of thirteen chapters:

The first part is a guided tour of relevant

literature. It consists of five chapters, the first four chapters analyse

the literature relevant to the work and the fifth one positions CoMMA within

this survey.

Chapter 1 - Organisational knowledge management. I shall describe the needs for knowledge

management and the notion of corporate memory aimed at answering these needs. I

shall also introduce three related domains that contribute to building

organisational knowledge management solutions: corporate modelling, user

modelling and information retrieval systems.

Chapter 2 - Ontology and knowledge modelling. I shall analyse the latest advance in

knowledge modelling that may be used for knowledge management, especially

focusing on the notion of ontology that is that part of the knowledge model

that captures the semantics of primitives used to make formal assertions about

the application domain of the knowledge-based solution. The chapter is divided

in two large sections respectively addressing the nature and the design of an

ontology.

Chapter 3 - Structured and semantic web. More and more organisations rely on

intranets and internal corporate webs to implement a corporate memory solution.

In the current Web technology, information resources are machine readable, but

not machine understandable. To improve exploitation and management mechanisms

of a web requires the introduction of formal knowledge; in the semantic web

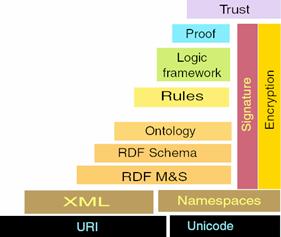

vision of the W3C, this

takes the form of semantic annotations about the information resources. After a

survey of the pioneer projects that prefigured the semantic web, I shall detail

the current foundations layed down by the W3C, and summarise the on-going and

future trends.

Chapter 4 - Distributed artificial intelligence. Artificial intelligence studies artificial

entities, that I shall call agents, reproducing individual intelligent

behaviours. In application fields where the elements of the problem are

scattered, distributed artificial intelligence tries to build artificial

societies of agents to propose adequate solutions; a semantic web is a

distributed landscape that naturally calls for this kind of paradigm.

Therefore, I shall introduce agents and multi-agent systems and survey design

methodologies. I shall focus, in particular, on the multi-agent information

systems.

Chapter 5 - Research context and positioning. Based on the previous surveys, this

chapter will identify the requirements of the application scenarios of CoMMA

and show that the requirements can be matched with solutions proposed in

knowledge modelling, semantic web and distributed artificial intelligence. I

shall describe and justify the overall solution envisioned and the

implementation choices, and I shall also position CoMMA in the state of the

art.

The second part concerns ontology &

annotated corporate memory. It consists of three chapters presenting a

vision of the corporate memory as a corporate semantic web as well as the

approach followed in CoMMA to build the underlying ontology and the result of

this approach i.e., the ontology

O'CoMMA.

Chapter 6 - Corporate semantic web. It is a short introduction to the vision

of a corporate memory as a corporate semantic web providing an annotated world

for information agents. I shall present the motivations, the structure and the

tools of this model-based documentary memory.

Chapter 7 - Engineering the O'CoMMA ontology. The ontology plays a seminal role in the

vision of a corporate semantic web. This chapter explains step-by-step how the

ontology O'CoMMA was built, following a scenario-based design rationale to go

from the informal descriptions of requirements and specifications to a formal

ontology. I shall discuss the tools designed and adopted as well as the

influences and motivations that drove my choices.

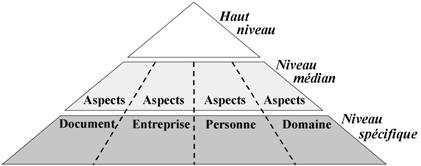

Chapter 8 - The ontology O'CoMMA. The ontology O'CoMMA is the result

produced by the methodology adopted in CoMMA and provides the semantic

grounding of a solution. I shall describe the O'CoMMA ontology and different

aspects of the vocabulary organised in three layers and containing an abstract

top layer, a part dedicated to the organisational structure modelling, a part

dedicated to the description of people, and a part dealing with domain topics.

I shall also discuss some extensions and appropriation experiences with

end-users.

The third part focuses on a multi-agent system

for memory management. It consists of two chapters respectively presenting

the design rationale of the whole CoMMA system and the specific design of the

mechanisms handling annotation distribution.

Chapter 9 - Design rationale of the multi-agent

architecture. I present

the design rationale that was followed in CoMMA to obtain the multi-agent architecture

supporting the envisaged corporate memory management scenarios. I shall explain

every main stage of the organisational top-down analysis of functionalities and

describe the characteristics and documentation of roles and protocols

supporting each agent sub-society, finally coming down to their implementation

into agent behaviours.

Chapter 10 - Handling annotation distribution. I detail how some aspects of the

conceptual foundations of the semantic web can support a multi-agent system in

allocating and retrieving semantic annotations in a distributed corporate

memory. I shall show how our agents exploit the semantic structures and the

ontology, when deciding where to allocate new annotations and when resolving

queries over distributed bases of annotations.

The fourth part gives the lessons learned and

the perspectives. It consists of three chapters, the first one discussing

the evaluation and return on experience of the CoMMA project, the second one

describing short-term improvements and current work, and the last one proposing

some long-term perspectives.

Chapter 11 - Evaluation and return on experience. This chapter gives the implementation

status of CoMMA and the trial process to get a feedback from the end-users and

carry-out the official evaluation of CoMMA. I shall present the criteria used,

the trial feeback, and the lessons learned.

Chapter 12 - Short-term improvements. This chapter gives beginnings of answers

to some of the problems raised by the evaluation. The ideas presented are

extensions of the work done in CoMMA, some of them already started to be

implemented and tested, while others are shallow specifications of possible

improvements.

Chapter

13 - long-term perspectives. I provide considerations on extensions that

could be imagined to go towards a complete real-world solution and I give

long-term perspectives that can be considered as my future research interests.

As shown on the

above schema, the reading follows a classical U-shape progress from generic

concerns to specific contributions, back and forth. I included as much

information material as I found necessary to provide this document with a

reasonable autonomy for a linear reading. I sincerely hope that the readers of

these pages will find information here to build new knowledge.

— Fabien Gandon

The objectives of

knowledge management are usually structured by three key issues: Capitalise (i.e., know where you are and you come from to know where you go), Share ( i.e., switch from individual to collective intelligence), Create (i.e., anticipate and innovate to survive) [Ermine, 2000]. How to

improve identification, acquisition, storage, access, diffusion and reuse and

maintenance of both internal and external knowledge in an organisation? This is

the question at the heart knowledge management. One approach for managing

knowledge in an organisation is the set-up of an organisational memory.

Information or knowledge management systems, and in particular a corporate

memory, should provide the concerned persons with the relevant pieces of

knowledge or information at the appropriate time, with the right level of

details and the adequate format of presentation. I shall discuss the notion of

organisational memory in the second sub-section.

Then, I shall give

an introduction to three domains that are now closely linked to organisational

knowledge management

- corporate modelling: the need for capturing an explicit view of the organisation that

can support the knowledge management solution led to include organisational

models as parts of the organisational memory.

- user modelling: the need for capturing the profile of the organisation's members

as future users, led user modelling field to find a natural application in

information and knowledge management for organisations.

- information retrieval

systems: accessing the right information at the

right moment may enable people to get the knowledge to take the good decision

at the right time; the retrieval is one of the main issues of information

systems and different techniques have been developed that can be use to support

knowledge management.

Core business resource: in 1994, Drucker already noticed how knowledge was a

primary resource of the society and he believed that the implications of this

shift would prove increasingly significant for organisations (commercial

companies, public institutions, etc.) [Drucker, 1994]. The past decade with its

emergence of knowledge workers and knowledge intensive organisations proved he

was right. Knowledge is now considered as a capital which has an economic

value; it is a strategic resource for increasing productivity; it is a

stability factor in a unstable and dynamic competitive environment and it is a

decisive competitive advantage [Ermine, 2000]. This encompasses also protecting

intellectual property of this capital of knowledge; as soon as a resource

becomes vital to a business, security and protection concern arise. Knowledge

management thus is also concerned with the problem of securing new and existing

knowledge and storing it to make it persistent over time. Also, as knowledge

production becomes more critical, managers will need to do it more reflectively

[Seely Brown and Duguid, 1998]. This means there is a requirement for methods

and tools to assist the management processes.

Transversal resource: knowledge concerns R&D, management (strategy,

quality, etc.), production (data management, documentation, workflows,

know-how, etc. ), human resources (competencies, training, etc.) [Ermine,

1998]. Moreover, knowledge is often needed far from were it was created (in

time and space): e.g. the production data may be useless to the worker of the

production line, but they are vital to the analyst of the management group that

will mine them and correlate them to build key indicators and take strategic

decisions; likewise the people of the workshop may be extremely interested in

the knowledge discovered by technology monitory group or lessons learned in

other workshops. As summarised in [Nagendra Prasad and Plaza, 1996], timely

availability of relevant information from resources accessible to an

organisation can lead to more informed decisions on the part of individuals,

thereby promoting the effectiveness and viability of decentralised decision

making. Decision making can be on the assembly line to solve a problem (e.g.

past project knowledge) or at the management board (e.g. manage large amount of

data and information and mine them to extract key indicators that will drive

strategic decisions). Sharing the activity in a group also implies to share the

knowledge involved in the activity. To survive, the organisation needs to share

knowledge among its members and create and collect new knowledge to anticipate

the future. [Abecker et al., 1998]

Competitive advantage: "in an economy where the only certainty is

uncertainty, the sure source of lasting competitive advantage is

knowledge" [Nonaka and Takeuchi, 1995]. Globalisation is leading to strong

international competition which is expressed in rapid market developments and

short lifecycles for products and services [Weggeman, 1996]. Therefore, it is

vital to maintain knowledge generation as a competitive advantage for knowledge

intensive organisations: this is critical, for instance, in trying to reduce

the time to market, costs, wastes, etc. An organisation is a group of people

who work together in a structured way for a shared purpose. Their activity will

mobilise knowledge about the subject of their work. This knowledge has been

obtained by experience or study and will drive the behaviour of the people and

therefore, determine the actions and reactions of the organisation as a whole.

Through organisational activities, additional knowledge will be collected and

generated; if it is not stored, indexed and reactivated when needed, then it is

lost. In a global market where information flows at the speed of Internet,

organisational memory loss is dangerous; an efficient memory is becoming a

vital asset for an organisation especially if its competitors also acknowledge

that fact.

Globalisation: globalisation was terribly boosted by the evolution

of information technology. As described by Fukuda in 1995, it started with the

use of main-frame computers for business information processing in the 60s and

70s, then it spread through the organisations with the micro computers even

starting to enter homes in the 80s and it definitively impacted the whole

society from the mid 80s by introducing networks and their world-wide webs of

interconnected people and organisations. "Now that they can communicate

interactively and share information, they come to know that they are affected

or regulated by the information power". [Fukuda, 1995]

Turnover: as the story goes, if NASA was to send men to the moon again, it would

have to start from scratch, having lost not the data, but the human expertise

that made possible the event of the 13th of July 1969. An

organisation's knowledge walks out of the door every night and it might never

come back.

Employees and knowledge gained during their engagement are often lost in the

dynamic business environment [Dzbor et

al., 2000]. The turn over in artefacts (e.g. software evolution, products

evolution) and in members of the organisation (retirement, internal and

external mobility, etc.) are major breaks in knowledge capitalisation.

Need for awareness: members of an organisation are often unaware of

critical resources that remain hidden in the vast repositories. Most of the

knowledge is thus forgotten in a relatively short time after it was invented

[Dzbor et al., 2000]. Competitive

pressure requires quick and effective reactions to the ever changing market

situations. The gap between the evolving and continuously changing collective

information and knowledge resources of an organisation and the employees'

awareness of the existence of such resources and of their changes can lead to

loss in productivity. [Nagendra Prasad and Plaza, 1996]. Too often one part of

an organisation repeats work of another part simply because it is impossible to

keep track of, and make use of, knowledge in other parts. Organisations need to

know what their corporate knowledge assets are and how to manage and make use

of these assets to capitalise on them. They are realising how important it is

to "know what they know" and be able to make maximum use of this

knowledge [Macintosh, 1994].

Project oriented management: this new way of management aims at

promoting organisations as learning systems and avoiding repeating the same

mistakes. Information about past projects - protocols, design specifications,

documentation of experiences: both failures and successes, alternatives

explored - can all serve as stimulants for learning, leading to "expertise

transfer" and "cross-project fertilisations" within and across

organisations. [Nagendra Prasad and Plaza, 1996]

Internationalisation and geographic dispersion: The other side of the coin of

globalisation is the expansion of companies to global trusts. As companies

expand internationally, geographic barriers can affect knowledge exchange and

prevent easy access to information [O’Leary, 1998]. It thus amplifies the

already existing problem of knowledge circulation and knowledge distribution

between artefacts and humans dispersed in the world. Moreover, the

internationalisation raises the problem of making knowledge cross-cultural and

language boundaries.

Information overload: with more and more data online (databases, internal

web, data warehouse, Internet etc.), “we are drowning in information, but

starved of knowledge”.

The question raised are: what is relevant and what is not? what are the

relevance criteria? how do they evolve? can we predict / anticipate future

needs? The amount of available information resources augment exponentially,

overloading knowledge-workers; too much information hampers decision making

just as much as insufficient knowledge does.

Information heterogeneity: the knowledge assets reside in many different places

such as: databases, knowledge bases, filing cabinets and people heads. They are

distributed across the enterprise [Macintosh, 1994] and the intranet and

internet phenomena amplifies that problem. The dispersed, fragmented and

diverse sources augment the cognitive overload of knowledge workers that need

integrated homogeneous views to interpret them into 'enactable' knowledge.

For all these

reasons, one of the rare consensus in the knowledge management domain is that

knowledge is now perceived as an organisational and production asset, a

valuable patrimony to be managed and thus there is a need for tools and methods

assisting this management.

One approach for

managing knowledge in an organisation is the set-up of an organisational

memory. Information or knowledge management systems, and in particular a corporate

memory, should provide the concerned persons with the relevant pieces of

knowledge or information at the appropriate time with the right level of

details and the adequate format of presentation. I shall discuss the notion of

organisational memory in the following sub-section.

I use 'corporate

memory' or 'organisational memory' as synonyms; the different concepts that are

sometimes denoted by these terms will be explored in the section about typology

of memories.

Organisations are

societies of beings: if these beings have an individual memory, the

organisation will naturally develop a collective memory being at the very least

the sum of these memories and often much more. From that perspective the

question here is what is knowledge management with regard to corporate

memories? For [Ackerman and Halverson, 1998] it is important to consider an

organisational memory as both an object and a process: it holds its state and

it is embedded in many organisational and individual processes. I stress the

difference here between this static aspect (the knowledge captured) and dynamic

aspect of the memory (the ability to memorise and remember) because I believe

they are unconditionally present in a complete solution.

|

i

|

A memory without an intelligence (singular or plural) in charge of it is

destined for obsolescence, and an intelligence without a memory (internal or

external) is destined for stagnation.

|

In fact, I see

three aspects that are used to define what is a corporate memory: the memory

content (what) i.e., the nature of

knowledge; the memory form (where) i.e., the

storage support; the memory working (how) i.e.,

the system managing knowledge. Here are some elements of definition found

in the literature for each one of these facets:

- content: it contains the organisational experience acquired by employees

and related to the work they carry out [Pomian, 1996]. It is a repository of

knowledge and know-how from a set of individuals working in a particular firm

[Euzenat, 1996]. It captures a company's accumulated know-how and other

knowledge assets [Kuhn and Abecker, 1997]. It consists of the total sum of the

information and knowledge resources within an organisation. Such resources are

typically distributed and are characterised by multiplicity and diversity:

company databases, machine-readable texts, documentation resources and reports,

product requirements, design rationale etc. [Nagendra Prasad and Plaza, 1996]

The corporate knowledge is the whole know-how of the company, i.e., its business processes, its

procedures, its policies (mission, rules, norms) and its data [Gerbé, 2000]. It

is an explicit, disembodied, persistent representation of the knowledge and

information in an organisation [Van Heijst et

al., 1996]. It preserves the reasoning and knowledge with their diversity

and contradictions in order to reuse them later [Pomian, 1996]

- form: [Kuhn and Abecker, 1997] characterised corporate memory as a

comprehensive computer system. In other approaches organisational memories take

the form of an efficient librarian solution based on document management

systems. Finally, it can rely on a human resources management policy relying on

human as 'knowledge containers'.

- working: a corporate memory makes knowledge assets available to enhance the

efficiency and effectiveness of knowledge-intensive work processes [Kuhn and

Abecker, 1997]. It is a system that enables the integration of dispersed and

unstructured organisational knowledge by enhancing its access, dissemination

and reuse among an organisation’s members and information systems [von Krogh,

1998]. The main function of a corporate memory is that it should enhance the

learning capacity of an organisation [Van Heijst et al., 1996]

My colleagues

proposed their own definition of a corporate memory: "explicit,

disembodied, persistent representation of knowledge and information in an

organisation, in order to facilitate its access and reuse by members of the

organisation, for their tasks" [Dieng et

al., 2001]. While I find it a good digest of the different definitions, I

do not think that knowledge must be disembodied and explicitly represented. I

believe that a complete corporate memory solution (i.e., memory & management system) should allow for the indexing

of external sources (may be embodied in a person or hold in another memory).

This is because, contrary to its human counterpart, the organisational memory

tends not to be centralised, localised and enclosed within a physical boundary,

but distributed, diffuse and heterogeneous. Moreover, just like its human

counterpart, it does not memorise everything it encounters: sometimes it is

better to reference/index an external resource rather than duplicate it so that

we can still consult it, but do not really have to memorise and maintain it.

The reasons may be because it is cost-effective, because it is not feasible

(copyright, amount of data, difficult formalisation, etc.), because it is ever

changing and volatile, because the maintenance is out of our expertise, etc.

Therefore, I would

suggest an extended definition:

|

i

|

An organisational memory is an explicit, disembodied, persistent representation and indexing of knowledge

and information or their sources in an organisation, in order to

facilitate its access, share and reuse by members of the organisation, for

their individual and collective tasks.

|

The desire of

management and control over knowledge, contrasts with its fluid, dispersed,

intangible, subjective and sometime tacit original nature. How can we manage

something we even have problem to define? Therefore, there has been an

extensive effort to analyse this knowledge asset and characterise it. I shall

give an overview of this discussion in a first following sub-part.

Management

consists of activities of control and supervision over other activities. In the

case of knowledge management, what are these activities? what does management

of the collective knowledge of an organisation consist in? In the second

following sub-part, I shall identify and describe the different activities and

processes involved.

Finally, I shall

give a typology of the memories that have been envisaged so far. It could also

be seen as a typology of the different facets of a complete solution trying to

integrate the different types of knowledge that must flow and interact, for an

organisation to live.

According to

[Weggeman, 1996], knowledge and information are primitive terms i.e., terms that are understood although

they cannot be accurately defined and the meaning of which lies in the correct

use of the concept that can only be learnt by practising the use.

If we take the

ladder of understanding (Figure 1) used in the librarian community, we can

propose some definitions of data, information and knowledge. These definitions

can be built bottom-up or top-down.

Starting from the

bottom, I have merged my definitions with the disjunctive definition of

knowledge information and data of [Weggeman, 1996] and [Fukuda, 1995]:

- Data is a perception, a

signal, a sign or a quantum of interaction (e.g.

'40' or 'T' are data). Data is symbolic representation of numbers, fact,

quantities; an item of data is what a natural or artificial sensor indicates

about a variable. [Weggeman, 1996]. Data are made up of symbols and figures which

reflect a perception of the experiential world [Fukuda, 1995].

- Information is data

structured according to a convention (e.g. T=40°).

Information is the result of the comparison of data which are situationally

structured in order to arrive at a message that is significant in a given

context [Weggeman, 1996]. Information is obtained from data which have been

given a significance and selected as useful [Fukuda, 1995].

- Knowledge is information

with a context and value that make it usable (e.g.

"the patient of the room 313 of the Hospital of Orleans

has a temperature T=40°"). Knowledge is what places someone in the

position to perform a particular task by selecting, interpreting and evaluation

information depending on the context. [Weggeman, 1996] This is why knowledge

empowers people. Knowledge is systematised information which I understand as

the information being arranged according to a definite plan or scheme i.e., existing knowledge. Knowledge is

an information which was interpreted (i.e.,

the intended meaning of which was decided) in context and which meaning was

articulated with already acquired knowledge [Fukuda, 1995].

Wisdom can be

defined as timeless knowledge and [Fukuda, 1995] adds an intermediary step for

theory which he defines as generalised knowledge.

Interestingly, I found no trace in

literature of top-down definitions while in presenting and re-presenting cycles

for individual and collective manipulation of knowledge, we are going up the

ladder to peruse and going down the ladder to communicate. Going down the

ladder, I would propose:

Interestingly, I found no trace in

literature of top-down definitions while in presenting and re-presenting cycles

for individual and collective manipulation of knowledge, we are going up the

ladder to peruse and going down the ladder to communicate. Going down the

ladder, I would propose:

- Knowledge is understanding of a subject which has been obtained by experience or study.

- Information can be defined

as knowledge expressed according to a convention or

knowledge in transit; The Latin root informare

means "to give form to". In an interview, Nonaka explained that

information is a flow of a messages, and knowledge is a stock created by

accumulating information; thus, information is a necessary medium or material

for eliciting and constructing knowledge. The second difference he made was

that information is something passive while knowledge comes from belief, so it

is more proactive.

- Data can be defined as the

basic element of information coding.

Figure 1

Ladder of understanding

I now look at the

different categories and characteristics of knowledge that were identified and

used in the literature:

- Formal knowledge vs.

informal knowledge: In its natural state, the

knowledge context includes a situation of interpretation and an actor

interpreting the information. However, when we envisage an explicit,

disembodied and persistent representation of knowledge, it means that it is an

artificial knowledge (the counterpart of an artificial intelligence), or more

commonly called formalised knowledge where information and context are captured

in a symbolic system and its attached model that respectively enable automatic

processing and unambiguous interpretation of results and manipulations. This

view which was developed in artificial intelligence is close to the wishful thinking

of knowledge management according to which knowledge could be an artificial

resource that only has value within an appropriate context, that can be

devalued and revalued, that is inexhaustible and used, but not consumed.

Unfortunately a lot of knowledge comes with a container (human, news letter,

etc.) that does not comply to this. The first axiom of [Euzenat, 1996] is that

"knowledge must be stated as formally as possible (...) However, not

everything can and must be formalised and even if it were, the formal systems

could suffer from serious limitations (complexity or incompleteness). " It

followed by a second axiom stating that "it must be possible to wrap up a

skeleton of formal knowledge with informal flesh made of text, pictures, animation,

etc. Thus, knowledge which has not yet reached a formal state, comments about

the production of knowledge or informal explanations can be tied to the formal

corpora." [Euzenat, 1996]. These axioms acknowledge the fact that in a

memory there will be different degrees of knowledge formalisation and that the

management system will have to deal with that aspect.

- Cognitivist perspective vs.

constructionist perspective [von Krogh, 1998]: The cognitivist perspective suggests

that knowledge consists of "representations of the world that consist of a

number of objects or events". The constructionist perspective suggests

that knowledge is "an act of construction or creation". Also, Polanyi

[Polanyi, 1966] regards knowledge as both static "knowledge" and

dynamic "knowing".

- Tacit knowledge vs.

explicit knowledge [Nonaka, 1994] drawing on

[Polanyi, 1966]: tacit knowledge is mental (mental schemata, beliefs, images,

personal points of view and perspectives, concrete know-how e.g. reflexes,

personal experience, etc.). Tacit knowledge is personal, context-specific,

subjective and experience based knowledge, and therefore, hard to formalise and

communicate. It also includes cognitive skills such as intuition as well as

technical skills such as craft and know-how. Explicit knowledge, on the other

hand, is formalised, coded in a language natural (French, English, etc.) or

artificial (UML, mathematics, etc.) and can be transmitted. It is objective and

rational knowledge that can be expressed in words, sentences, numbers or

formulas. It includes theoretical approaches, problem solving, manuals and

databases. As explicit knowledge is visible, it was the first to be managed or,

at least, to be archived. [Nonaka and Takeuchi, 1995] pointed out that tacit

knowledge is also important and raises additional problems; it was illustrated

by these companies having to hire back their fired or retired seniors because

they could not be replaced by the newcomers having only the explicit knowledge

of their educational background and none of the tacit knowledge that was vital

to run the business. Tacit knowledge and explicit knowledge are not totally

separate, but mutually complementary entities. Without experience, we cannot

truly understand. But unless we try to convert tacit knowledge to explicit

knowledge, we cannot reflect upon it and share it in the whole organisational

(except through mentoring situations i.e.,

master-apprentice co-working to ensure transfer of know how).

- Tacit knowledge vs. focal

knowledge: with references to Polanyi, [Sveiby,

1997] discusses tacit vs. focal

knowledge. In each activity, there are two dimensions of knowledge, which are

mutually exclusive: the focal knowledge about the object or phenomenon that is

in focus; the tacit knowledge that is used as a tool to handle or improve what

is in focus. The focal and tacit dimensions are complementary. The tacit

knowledge functions as a background knowledge which assists in accomplishing a

task which is in focus. What is tacit varies from one situation to another.

- Hard knowledge vs. soft

knowledge: [Kimble et al., 2001] propose hard and soft knowledge as being two parts of

a duality. That is all knowledge is to some degree both hard and soft. Harder

aspects of knowledge are those aspects that are more formalised and that can be

structured, articulated and thus ‘captured’. Soft aspects of knowledge on the

other hand are the more subtle, implicit and not so easily articulated.

- Competencies (know-how responsible and validated) / theoretical knowledge "know-that" / procedural knowledge / procedural

know-how / empirical know-how / social know-how [Le Bortef, 1994].

- Declarative knowledge (fact, results, generalities, etc.) vs. procedural knowledge (know-how, process, expertise, etc.)

- Know-how vs. skills: [Grundstein, 1995; Grundstein and Barthès, 1996] distinguish on

the one hand, know-how (ability to design, build, sell and support products and

services) and on the other hand, individual and collective skills (ability to

act, adapt and evolve).

- Know-what vs. know-how [Seely Brown and Duguid, 1998]: the organisational knowledge that

constitutes "core competency" requires know-what and know-how. The

know-what is explicit knowledge which may be shared by several persons. The

"know-how" is the particular ability to put know-what into practice.

While both work together, they circulate separately. Know-what circulates with

relative ease and is consequently hard to protect. Know-how is embedded in work

practice and is sui generis

and thus relatively easy to protect. Conversely, however, it can be hard to

spread, co-ordinate, benchmark, or change. Know-how is a disposition, brought

out in practice. Thus, know-how is critical in making knowledge actionable and

operational.

- Company knowledge vs.

corporate knowledge [Grunstein and Barthès, 1996]:

company knowledge is technical knowledge used inside the company, its business

units, departments, subsidiaries (knowledge needed everyday by the company

employees); corporate knowledge is strategic knowledge used by the management

at a corporate level. (knowledge about the company)

- Distributed knowledge vs.

centralised knowledge: [Seely Brown and Duguid,

1998] The distribution of knowledge in an organisation, or in society as a

whole, reflects the social division of labour. As Adam Smith insightfully

explained, the division of labour is a great source of dynamism and efficiency.

Specialised groups are capable of producing highly specialised knowledge. The

tasks undertaken by communities of practice develop particular, local, and

highly specialised knowledge within the community. Hierarchical divisions of

labour often distinguish thinkers from doers, mental from manual labour,

strategy (the knowledge required at the top of a hierarchy) from tactics (the

knowledge used at the bottom). Above all, a mental-manual division predisposes

organisations to ignore a central asset, the value of the know-how created

throughout all its parts.

- Descriptive knowledge vs.

deductive knowledge vs. documentary knowledge

[Pomian, 1996]: descriptive knowledge is about history of the organisation,

chronological events, actors, and domain of activity. Deductive knowledge is

about the reasoning (diagnostic, planning, design, etc.) and their

justification (considered aspects, characteristics, facts, results, etc.). It

is equivalent to what some may call logic and rationale. Finally, documentary

knowledge is knowledge about documents (types, use, access, context, etc.),

their nature (report, news, etc.), their content (a primary document contains

raw / original data; a secondary document contains identification / analysis of

primary documents; a tertiary contains synthesis of primary or secondary

documents) and if they are dead (read-only document, frozen once for all) or

living (changing its content, etc.). J. Pomian rightly insists on the importance

of the interactions between these types of knowledge. One can also find

finer-grained descriptions: here the static knowledge was decomposed into

descriptive and documentary knowledge, but the dynamic aspect could be

decomposed into rationale (plan, diagnostic, etc.) vs. heuristic (rule of the thumb, etc.) vs. theories vs. cases vs. (best) practices.

- Tangible knowledge vs.

intangible knowledge [Grunstein and Barthès, 1996]:

tangible assets are data, document, etc. while intangible assets are abilities,

talents, personal experience, etc. Intangible assets require an elicitation to

become tangible before they can participate to a materialised corporate memory

- Technical knowledge vs.

management knowledge [Grunstein and Barthès, 1996]:

the technical knowledge is used by the core business in its day-to-day work.

The strategic or management knowledge is used by the managers to analyse and

the organisation functioning and build management strategies

And so on: content vs. context [Dzbor et al., 2000], explicable knowledge (with justifications) vs. not explicable (bare facts),

granularity (fuzzy vs. precise,

shallow vs. deep, etc.), individual vs. collective, volatile vs. perennial (from the source point of

view), ephemeral vs. stable (from the

content point of view), specialised vs. common

knowledge, public vs. personal/private,

etc.

A first remark is

that the differences proposed here are not always mutually exclusive and

neither always compatible. Secondly a knowledge domain is spread over a

continuum between the extremes proposed here. However, most traditional company

policies and controls focus on the tangible assets of the company and leave

unmanaged their important knowledge assets [Macintosh, 1994].

|

i

|

An organisational memory may include (re)sources at different levels of

the data-information-knowledge scale and of different nature. It implies that

a management solution must be able to handle and integrate this

heterogeneity.

|

The stake in building

a corporate memory management system is the coherent integration of this

dispersed knowledge in a corporation with the objective to "promote

knowledge growth, promote knowledge communication and in general preserve

knowledge within an organisation" [Steels, 1993]. This implies a number of

activities to turn the memory into a living object.

In [Dieng et al., 2001] my colleagues discussed

the lifecycle of a corporate memory. I added the overall activity of managing

the different knowledge management processes i.e., as described in [Zacklad and Grundstein, 2001a; 2001b] to

promote, organise, plan, motivate, etc. the whole cycle. The result is depicted

in Figure 2 and I shall comment the different phases

with references to the literature.

Figure 2

Lifecycle of a corporate

memory

Here are some cycles proposed

in literature:

- [Grunstein and Barthès, 1996]: locate crucial knowledge, formalise /

save, distribute and maintain, plus manage that was added in [Zacklad and

Grundstein, 2001a; 2001b]

- [Zacklad and Grundstein, 2001a; 2001b]: manage, identify, preserve,

use, and maintain.

- [Abecker et al., 1998]:

identification, acquisition, development, dissemination, use, and preservation.

- [Pomian, 1996]: identify, acquire/collect, make usable.

- [Jasper et al., 1999]:

create tacit knowledge, discover tacit or explicit knowledge, capture tacit

knowledge to make it explicit, organise, maintain, disseminate through push

solutions, allow search through pull solutions, assist reformulation,

internalise, apply

- [Dieng et al., 2001]:

detection of needs, construction, diffusion, use, evaluation, maintenance and

evolution.

I have tried to

conciliate them in the schema in Figure 2, where the white boxes are the

initialisation phase and the grey ones are the cycle strictly speaking. The

central 'manage' activity oversees the others. I shall describe them with some

references to the literature:

- Management: knowledge management is difficult and costly. It requires a

careful assessment of what knowledge should be considered and how to conduct

the process of capitalising such knowledge [Grunstein and Barthès, 1996]. All

the following activities have to be planned and supervised; this is the role of

a knowledge manager.

- Inspection: inventory of fixtures to identify knowledge that already exists

and knowledge that is missing [Nonaka, 1991]; identify strategic knowledge to

be capitalised [Grundstein and Barthès, 1996]; make an inventory and a

cartography of available knowledge: identify assets and their availability to

plan their exploitation and detect lacks and needs to offset weak points [Dieng

et al., 2001]

- Construction: build the corporate memory and develop necessary new knowledge

[Nonaka, 1991], memorise, link, index, integrate different and/or heterogeneous

sources of knowledge to avoid loss of knowledge [Dieng et al., 2001]

- Diffusion: irrigate the organisation with knowledge and allocate new

knowledge [Nonaka, 1991]. Knowledge must be actively distributed to those who

can make use of it. The turn-around speed of knowledge is increasingly crucial

for the competitiveness of companies. [Van Heijst et al., 1996] Make the knowledge flow and circulate to improve

communication in the organisation: transfer of the pieces of knowledge from

where they were created, captured or stored to where they may be useful. It is

called "activation of the memory" to avoid oblivion knowledge buried

and dormant in a long forgotten report [Dieng et al., 2001]. This implies a facility for deciding who should be

informed about a particular new piece of knowledge and this point justify this

sections about user modelling and organisation modelling.

- Capitalisation: process which allows to reuse, in a relevant way, the knowledge of

a given domain, previously stored and modelled, in order to perform new tasks

[Simon, 1996], to apply knowledge [Nonaka, 1991], to build upon past experience

to avoid reinventing the wheel, to generalise solutions, combine and/or adapt

them to go further and invent new ones, improve training and integration of new

members [Dieng et al., 2001] The use

is tightly linked to diffusion since the way knowledge is made available

conditions the way it may be exploited.

- Evaluation: it is close to inspection since it assesses the availability and

needs of knowledge. However it also aims at evaluating the solution chosen for

the memory and its adequacy, comparing the results to the requirements, the

functionalities to the specifications etc.

- Evolution: it is close to construction phase since it will deal with additions

to the current memory. More generally it is the process of updating changing

knowledge and removing obsolete knowledge [Nonaka, 1991]; it is where the

learning spiral takes place to enrich/update existing knowledge (improve it,

augment it, precise it, re-evaluate it etc.).

|

i

|

The memory management consists of two initial activities (inspection of

the knowledge situation and construction of the initial memory) and four

cyclic activities (diffusion, capitalisation, evaluation, evolution); all

these activities being planned and supervised by the management.

|

In his third

axiom, [Euzenat, 1996] insisted on the collaborative dimension: people must be

supported in discussing about the knowledge introduced in the knowledge base.

In this perspective, re-using, diffusing and maintaining knowledge should be

participatory activities and underlines the strong link between some problems

addressed in knowledge management and results of research in the field of

Computer Supported Collaborative Work. "Users will use the knowledge only

if they understand it and they are assured that it is coherent. The point is to

enforce discussion and consensus while the actors are still at hand rather than

hurrying the storage of raw data and discovering far latter that it is of no

help." [Euzenat, 1996]

[Nonaka and Takeuchi, 1995] details

the social and individual processes at play during the learning spiral of an

organisation and focuses on the notion of tacit and explicit knowledge (Figure 3).

Figure 3

Learning spiral of [Nonaka

and Konno, 1998]

[Nonaka and

Takeuchi, 1995] believe that knowledge creation processes are more effective

when they spiral through the four following activities to improve the

understanding:

- Socialisation (Tacit ® Tacit):

transfers tacit knowledge in one person to tacit knowledge in another person.

It is an experiential and active process where the knowledge is captured by

walking around, through direct interaction, by observing behaviour by others

and by copying their behaviours and beliefs. It is close to the remark of

[Kimble et al., 2001] saying that

communities of practice are central to the maintenance of soft knowledge.

- Externalisation (Tacit ®

Explicit): getting tacit knowledge into an explicit form so that you can look

at it, manipulate it and communicate it. It can be an individual articulation

of one’s own tacit knowledge where one is looking for awareness and expressions

of one's ideas, images, mental models, metaphors, analogies, etc. It can also

consist in eliciting and expressing the tacit knowledge of others into explicit

knowledge.

- Combination (Explicit ®

Explicit): take explicit explainable knowledge, combine it with other explicit

knowledge and develop new explicit knowledge. This is where information

technology is most helpful, because explicit knowledge is conveyed in artefacts

(e.g. documents) that can be collected, manipulated, and disseminated allowing

knowledge transfer across organisations.

- Internalisation (Explicit ® Tacit):

understanding and absorbing collectively shared explicit knowledge into

individual tacit knowledge actionable by the owner. Once one deeply learned a

process, it becomes completely internal and one can apply it without noticing,

as reflex or automatic natural activities. Internalisation is largely

experiential through the actual doing, in real situation or in a simulation. A

symptom is that generally when one tries to pay attention to how one does these

things, it impairs one's performance and this is a break to elicitation

processes.

Finally, concerning

the learning, [Van Heijst et al.,

1996] differentiate two types:

- top-down learning, or strategic learning: at some management level a

particular knowledge area is recognised as strategic and deliberate action is

planed and undertaken to acquire that knowledge.

- bottom-up learning: a worker learns something which might be useful

and that this lesson learned is distributed through the organisation.

As far as I know

and so far, no one has tackled all the different types of knowledge and

activities of management in one project coming up with a complete solution.

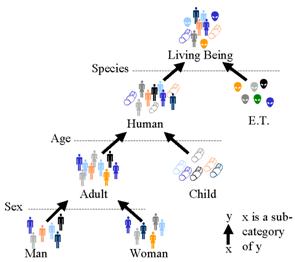

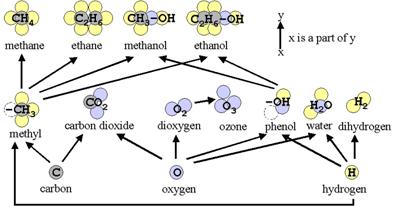

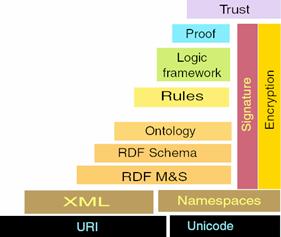

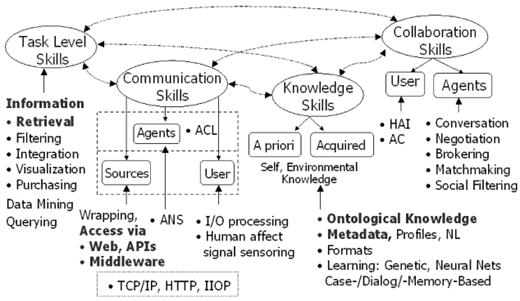

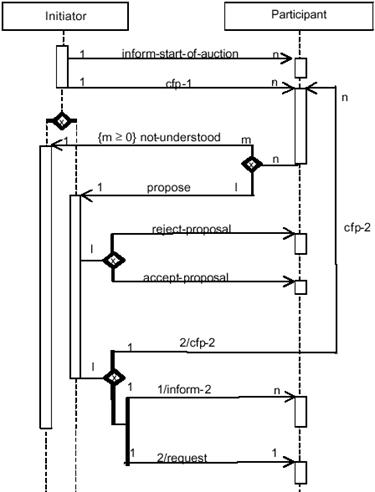

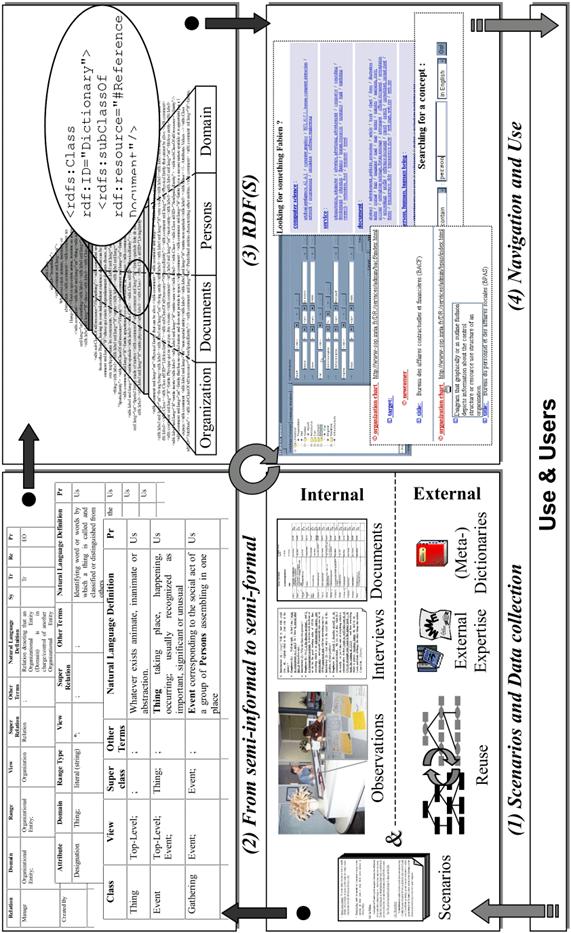

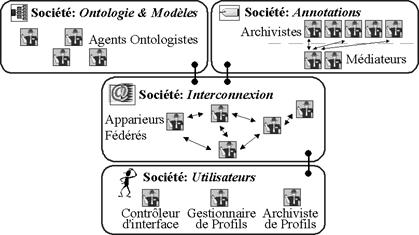

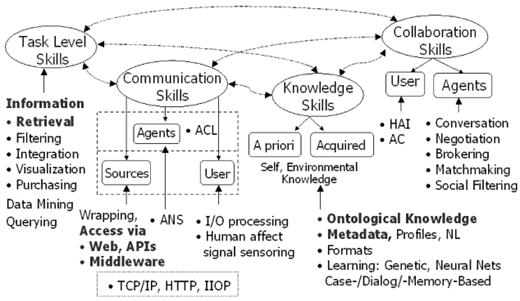

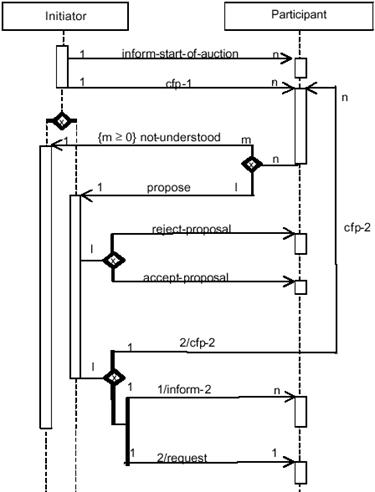

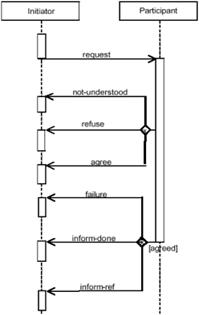

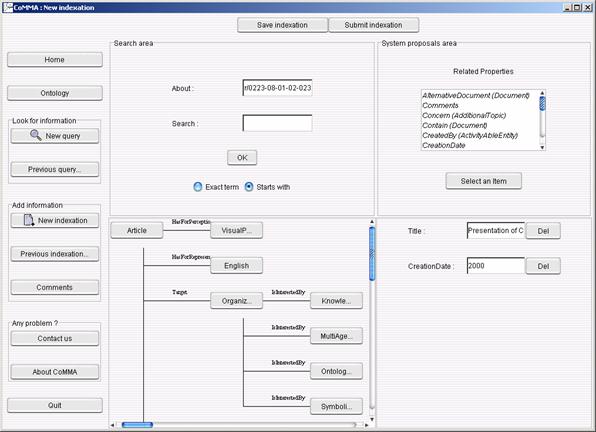

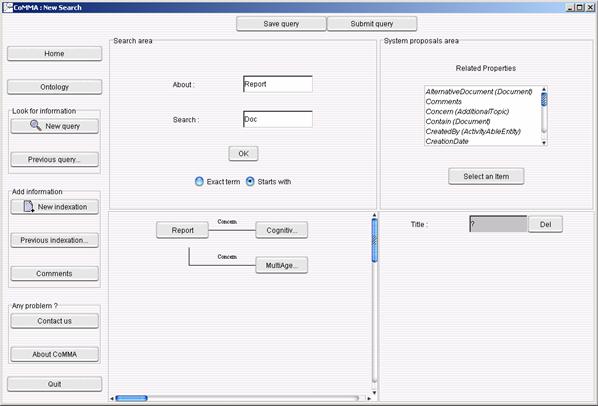

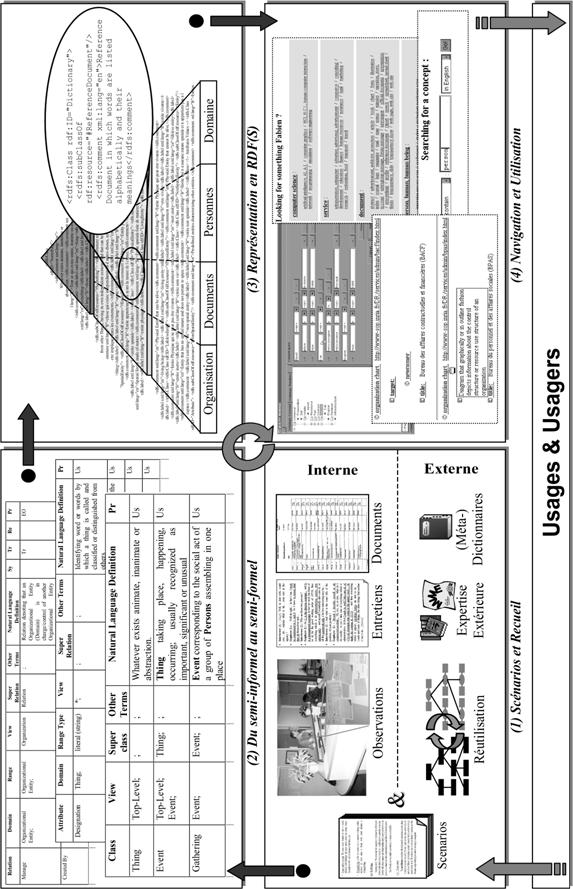

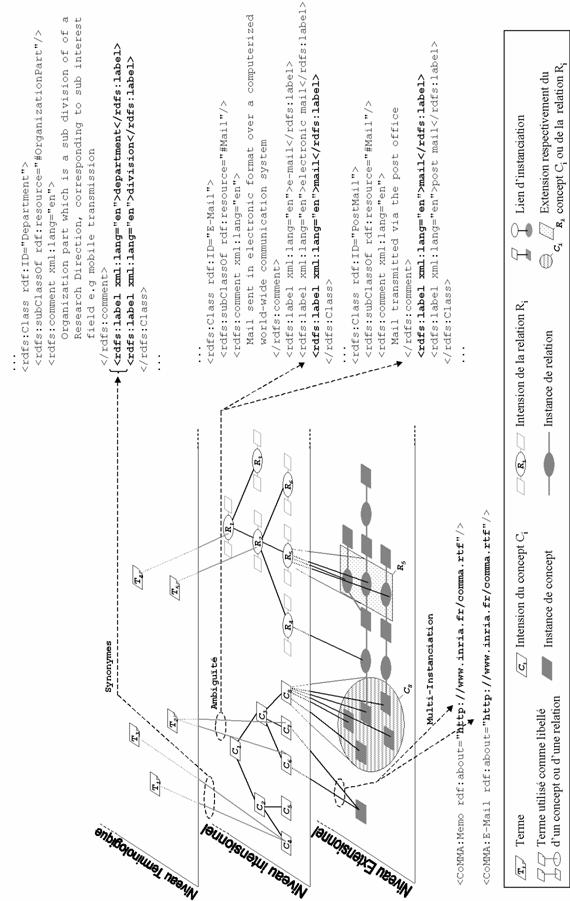

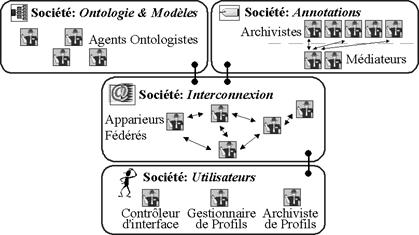

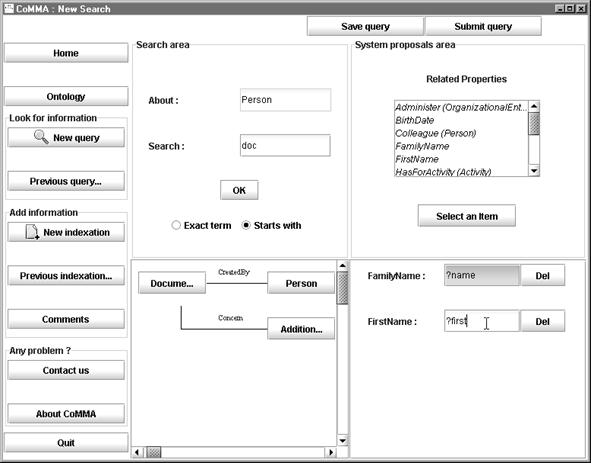

Every study and project focused on selected types of knowledge and knowledge